ELK Cluster Deployment

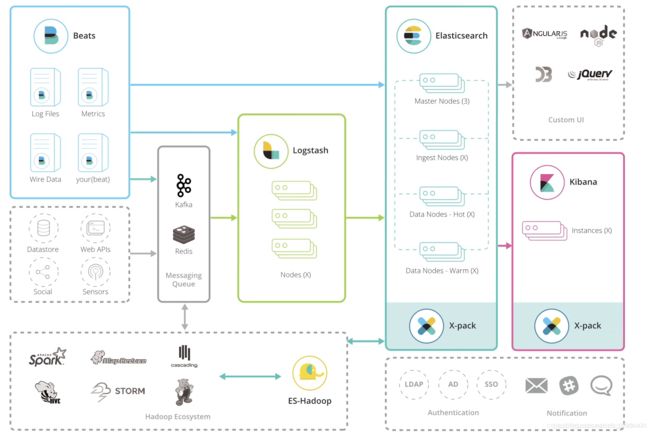

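A common industry deployment architecture for the Elastic Stack

Components

- Beats: lightweight collectors that gather log files, HTTP packets, and other data

- Kafka: a message queue that buffers data flowing from Beats to Logstash

- Redis: can also serve as the message queue (typically a Redis list), buffering data flowing from Beats to Logstash

- Logstash: the data processor, which runs data through multiple filters to preprocess or enrich it

- Elasticsearch: the final destination of the processed data, used for storage, indexing, and search

- Kibana: visualizes the data

- X-Pack: adds security and monitoring to the cluster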

Common data flows

- Logstash->Elasticsearch->Kibana

- Beats->Elasticsearch->Kibana

- Beats->Logstash->Elasticsearch->Kibana

- Beats->Kafka/Redis->Logstash->Elasticsearch->Kibana

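Summary

Each extra stage makes the pipeline more robust. A queue (Kafka or Redis) buffers events while Logstash is down, and Logstash can preprocess data before it reaches Elasticsearch. We therefore adopt the Beats->Kafka/Redis->Logstash->Elasticsearch->Kibana flow as our solution.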

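Case background

- Evaluate the existing trajectory storage solution: environment setup, data import, data testing, service development, and comparison of test results

- Performance analysis dimensions: data volume, time range, spatial extent, spatial type

- Data volume: 10 million, 100 million, and 1 billion records

- Time range: spans of 1 day, 1 week, and 1 month

- Spatial extent: 1 km², 10 km², and 50 km²

- Spatial type: polygon, circle, rectangle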
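The three spatial types map onto Elasticsearch 7.x geo queries:

- geo_polygon for a polygon
- geo_distance for a circle
- geo_bounding_box for a rectangle

The Python sketch below builds these query bodies. Each one is combined with a range filter on the time field, so the spatial extent and the time range can be varied independently. It assumes the field names location and SJ from the mapping defined later in this article. The helper function names and all coordinates are illustrative placeholders, not part of the original test suite.

```python
import json

# Date pattern used by the SJ field in this article's mapping.
TIME_FORMAT = "yyyy-MM-dd HH:mm:ss"


def polygon_filter(points):
    """Polygon: points is a list of (lat, lon) vertices."""
    return {"geo_polygon": {"location": {
        "points": [{"lat": lat, "lon": lon} for lat, lon in points]}}}


def circle_filter(lat, lon, radius_km):
    """Circle: every point within radius_km of the centre (lat, lon)."""
    return {"geo_distance": {"distance": f"{radius_km}km",
                             "location": {"lat": lat, "lon": lon}}}


def rectangle_filter(top, left, bottom, right):
    """Rectangle: top/bottom are latitudes, left/right are longitudes."""
    return {"geo_bounding_box": {"location": {
        "top_left": {"lat": top, "lon": left},
        "bottom_right": {"lat": bottom, "lon": right}}}}


def track_query(spatial_filter, start, end):
    """Combine one spatial filter with an SJ time window in a bool filter."""
    return {"query": {"bool": {"filter": [
        spatial_filter,
        {"range": {"SJ": {"gte": start, "lte": end, "format": TIME_FORMAT}}},
    ]}}}


if __name__ == "__main__":
    # Placeholder coordinates and a one-day window (hypothetical values).
    body = track_query(circle_filter(30.0, 122.0, 1),
                       "2020-06-01 00:00:00", "2020-06-01 23:59:59")
    print(json.dumps(body, indent=2))
```

Both conditions sit under bool.filter, so Elasticsearch skips relevance scoring, which these queries do not need. Radius and area are not interchangeable: a circle covering 1 km² has a radius of about 0.56 km (sqrt(1/π)).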

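Case analysis

- The data comes from a single, fairly uniform source, so Beats (e.g. Packetbeat) or Logstash can collect it directly

- For stability, Kafka provides elastic message buffering, so no data is lost if Logstash goes down

- Because the data needs no preprocessing, Beats->Kafka->ES is possible, but it requires deploying a Kafka-connector

- Keeping Logstash (Beats->Kafka->LogStash->ES) avoids deploying a Kafka-connector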

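Case deployment diagram

Case deployment notes

- Filebeat: pulls data from sources such as files and HTTP

- Kafka: elastic message queue that buffers the data from the source

- Logstash: message preprocessing filter; here it mainly takes the place of a Kafka-connector

- Elasticsearch: stores the data; no processing is needed here, so documents are stored as they arrive

- Kibana: visualizes the data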

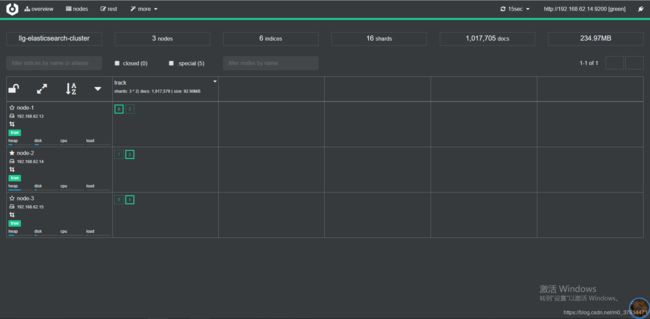

- Cerebro: monitors the state of the Elasticsearch cluster

Case resources

| Service | IP | Memory | Disk | CPU |
|---|---|---|---|---|
| Kafka | 192.168.62.8 | 2 GB | 40 GB | 1 core |
| Filebeat | 192.168.62.9 | 2 GB | 40 GB | 1 core |
| Logstash | 192.168.62.10 | 2 GB | 40 GB | 1 core |
| Kibana | 192.168.62.11 | 2 GB | 40 GB | 1 core |
| Cerebro | 192.168.62.12 | 2 GB | 40 GB | 1 core |
| Elasticsearch | 192.168.62.13 | 2 GB | 40 GB | 1 core |
| Elasticsearch | 192.168.62.14 | 2 GB | 40 GB | 1 core |
| Elasticsearch | 192.168.62.15 | 2 GB | 40 GB | 1 core |

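Data modeling

Modeling guidelines

1. What type is the field?

float, text, keyword, long…

2. Does the field need to be searchable?

index = true | false

3. If it is searchable, how much index detail and scoring information is needed?

index_options = docs | freqs | positions | offsets

norms = false | true

4. Does the field need sorting or aggregation?

doc_values = false | true

5. Does the field need to be stored separately from _source?

store = true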
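The guidelines can be read as a small decision function. The Python sketch below turns the answers to the questions into one field's mapping; field_mapping is a hypothetical helper, not an Elasticsearch API. Called with the answers for MC and MMSI, it reproduces those two fields of the mapping below.

```python
def field_mapping(es_type, searchable, index_options=None, norms=None,
                  aggregatable=False, store=False, **extra):
    """Hypothetical helper: map the modeling answers to one field's mapping."""
    mapping = {"type": es_type, "index": searchable}   # questions 1 and 2
    if searchable and index_options is not None:       # question 3: index detail
        mapping["index_options"] = index_options
    if searchable and norms is not None:               # question 3: length norms
        mapping["norms"] = norms
    mapping["doc_values"] = aggregatable               # question 4: sort/aggregate
    if store:                                          # question 5: stored copy
        mapping["store"] = True
    mapping.update(extra)                              # e.g. ignore_above
    return mapping


if __name__ == "__main__":
    # MC: not searched, not aggregated, stored.
    print(field_mapping("keyword", searchable=False, store=True, ignore_above=64))
    # MMSI: exact-match search only, no scoring norms, stored.
    print(field_mapping("keyword", searchable=True, index_options="docs",
                        norms=False, store=True, ignore_above=16))
```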

Applying these guidelines to the trajectory fields gives the following index definition, used as the request body when creating the track index:

```json
{
  "settings": {
    "number_of_shards": 3,
    "number_of_replicas": 1
  },
  "mappings": {
    "dynamic": false,
    "dynamic_date_formats": ["yyyy-MM-dd HH:mm:ss"],
    "_source": {
      "enabled": false
    },
    "properties": {
      "MC": {
        "type": "keyword",
        "index": false,
        "doc_values": false,
        "ignore_above": 64,
        "store": true
      },
      "HX": {
        "type": "float",
        "index": false,
        "doc_values": false,
        "store": true
      },
      "HS": {
        "type": "float",
        "index": false,
        "doc_values": false,
        "store": true
      },
      "MMSI": {
        "type": "keyword",
        "ignore_above": 16,
        "index": true,
        "index_options": "docs",
        "norms": false,
        "doc_values": false,
        "store": true
      },
      "SJ": {
        "type": "date",
        "format": "yyyy-MM-dd HH:mm:ss",
        "index": true,
        "index_options": "docs",
        "doc_values": true,
        "store": true
      },
      "location": {
        "type": "geo_point",
        "store": true
      }
    }
  }
}
```
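The definition above is sent as the body of a PUT /track request. Below is a minimal Python sketch using only the standard library. It makes two assumptions:

- the JSON has been saved as track_mapping.json (a hypothetical file name);
- the cluster accepts unauthenticated HTTP on port 9200, i.e. X-Pack security is not enabled.

```python
import json
import urllib.request


def create_index_request(host, index, body):
    """Build (but do not send) PUT /<index> with settings and mappings as JSON."""
    return urllib.request.Request(
        url=f"http://{host}/{index}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def load_body(path):
    """Read the index definition saved from the JSON above."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def send(req, timeout=10):
    """Send the request and return Elasticsearch's decoded JSON reply."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.load(resp)
```

For example, send(create_index_request("192.168.62.13:9200", "track", load_body("track_mapping.json"))) should return a reply containing "acknowledged": true. urlopen raises HTTPError if the index already exists.

Create the index before Logstash starts writing. Otherwise the first document creates track with a default dynamic mapping instead of this one.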

Setting up the Elasticsearch cluster

1. Download the latest Elasticsearch archive from the official website

Official download page: https://www.elastic.co/cn/downloads/elasticsearch

Download link used here: https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.7.1-linux-x86_64.tar.gz

2. Download and extract elasticsearch-7.7.1-linux-x86_64.tar.gz

```
[llg@localhost ~]$ sudo mkdir /winfo/
[llg@localhost ~]$ sudo chown llg:llg /winfo/
[llg@localhost ~]$ cd /winfo/
[llg@localhost winfo]$ wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-7.7.1-linux-x86_64.tar.gz
[llg@localhost winfo]$ tar -zxvf elasticsearch-7.7.1-linux-x86_64.tar.gz
```

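3. Edit the Elasticsearch configuration file elasticsearch.yml

```
[llg@localhost /]$ cd /winfo/elasticsearch-7.7.1/config/
[llg@localhost config]$ vim elasticsearch.yml
```

```yaml
cluster.name: llg-elasticsearch-cluster
node.name: node-1
path.data: /data
path.logs: /data/logs
http.port: 9200
network.host: 192.168.62.13
discovery.seed_hosts: ["192.168.62.14:9300", "192.168.62.13:9300", "192.168.62.15:9300"]
cluster.initial_master_nodes: ["node-1"]
```

Create /data and /data/logs beforehand and make llg their owner. Elasticsearch refuses to run as root, so the llg user must be able to write to both directories.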

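4. Configure the remaining two hosts, 192.168.62.14 and 192.168.62.15

Repeat step 3 on each host. Change only node.name and network.host; keep everything else the same:

```yaml
# on 192.168.62.14
node.name: node-2
network.host: 192.168.62.14

# on 192.168.62.15
node.name: node-3
network.host: 192.168.62.15
```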
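Since only node.name and network.host differ between hosts, the three files can also be generated from one template. The Python sketch below assumes the node-to-IP assignment from the resource table: node-1 on .13, node-2 on .14 and node-3 on .15. The NODES dictionary and render_es_config are illustrative names.

```python
# Node name -> host IP (assumed assignment, matching the steps above).
NODES = {"node-1": "192.168.62.13", "node-2": "192.168.62.14", "node-3": "192.168.62.15"}


def render_es_config(node_name, host):
    """Return elasticsearch.yml text; only node.name and network.host vary."""
    seeds = ", ".join(f'"{ip}:9300"' for ip in NODES.values())
    return "\n".join([
        "cluster.name: llg-elasticsearch-cluster",
        f"node.name: {node_name}",
        "path.data: /data",
        "path.logs: /data/logs",
        "http.port: 9200",
        f"network.host: {host}",
        f"discovery.seed_hosts: [{seeds}]",
        'cluster.initial_master_nodes: ["node-1"]',
    ]) + "\n"


if __name__ == "__main__":
    for name, ip in NODES.items():
        print(f"# ---- {ip} ----")
        print(render_es_config(name, ip))
```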

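5. Start Elasticsearch in the background on all three hosts

```
nohup /winfo/elasticsearch-7.7.1/bin/elasticsearch &
```

Because network.host binds to a non-loopback address, Elasticsearch enforces its bootstrap checks at startup. On most Linux hosts this means raising two limits first:

- vm.max_map_count to at least 262144 (sudo sysctl -w vm.max_map_count=262144);
- the open-file limit for llg to at least 65535.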

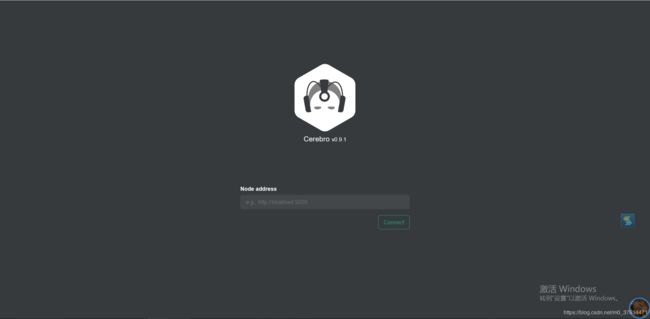
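Once all three nodes are up, the cluster health API confirms that they formed a single cluster. Below is a minimal Python sketch using the standard library. It assumes plain HTTP without authentication on 192.168.62.13:9200; any of the three nodes would work.

```python
import json
import urllib.request

EXPECTED_NODES = 3  # 192.168.62.13, .14 and .15


def fetch_health(host="192.168.62.13:9200", timeout=10):
    """GET /_cluster/health from any node and decode the JSON reply."""
    with urllib.request.urlopen(f"http://{host}/_cluster/health", timeout=timeout) as resp:
        return json.load(resp)


def health_ok(health, expected_nodes=EXPECTED_NODES):
    """All nodes joined and status is not red (yellow = some replicas unassigned)."""
    return (health.get("number_of_nodes") == expected_nodes
            and health.get("status") in ("green", "yellow"))
```

health_ok(fetch_health()) returns True once all three nodes have joined. Green means all shards are allocated. Yellow means some replicas are not yet assigned, which this check tolerates right after startup.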

Installing the Cerebro cluster monitoring tool

1. Download the latest Cerebro archive from GitHub

GitHub releases page: https://github.com/lmenezes/cerebro/releases

Download link used here: https://github.com/lmenezes/cerebro/releases/download/v0.9.1/cerebro-0.9.1.tgz

2. Download and extract cerebro-0.9.1.tgz

```
[llg@localhost ~]$ cd /winfo/
[llg@localhost winfo]$ wget https://github.com/lmenezes/cerebro/releases/download/v0.9.1/cerebro-0.9.1.tgz
[llg@localhost winfo]$ tar -zxvf cerebro-0.9.1.tgz
```

3. Start Cerebro in the background

```
nohup /winfo/cerebro-0.9.1/bin/cerebro &
```

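4. Open http://192.168.62.12:9000/

Note: 192.168.62.12 is the address of the host running Cerebro; replace it with your own host's IP.

5. Enter the address of the node to monitor

Note: any node in the cluster will do (192.168.62.13:9200 is used here).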

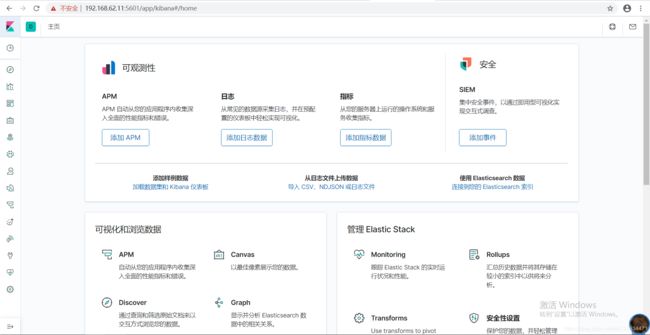

Deploying Kibana for visualization

1. Download the latest Kibana archive from the official website

Official download page: https://www.elastic.co/cn/downloads/kibana

Download link used here: https://artifacts.elastic.co/downloads/kibana/kibana-7.7.1-linux-x86_64.tar.gz

2. Download and extract kibana-7.7.1-linux-x86_64.tar.gz

```
[llg@localhost ~]$ cd /winfo/
[llg@localhost winfo]$ wget https://artifacts.elastic.co/downloads/kibana/kibana-7.7.1-linux-x86_64.tar.gz
[llg@localhost winfo]$ tar -zxvf kibana-7.7.1-linux-x86_64.tar.gz
```

3. Edit the Kibana configuration file kibana.yml

```
[llg@localhost winfo]$ vim /winfo/kibana-7.7.1-linux-x86_64/config/kibana.yml
```

```yaml
server.host: "192.168.62.11"
elasticsearch.hosts: ["http://192.168.62.13:9200", "http://192.168.62.14:9200", "http://192.168.62.15:9200"]
# Chinese UI; remove this line for the default English UI
i18n.locale: "zh-CN"
```

4. Start Kibana

```
[llg@localhost /]$ nohup /winfo/kibana-7.7.1-linux-x86_64/bin/kibana &
```

Kibana then listens on its default port 5601: http://192.168.62.11:5601/

Deploying the Kafka message broker

1. Download the latest Kafka archive from the official website

Official download page: http://kafka.apache.org/downloads

Download link used here: https://mirror.bit.edu.cn/apache/kafka/2.5.0/kafka_2.12-2.5.0.tgz

2. Download and extract kafka_2.12-2.5.0.tgz

```
[llg@localhost ~]$ cd /winfo/
[llg@localhost winfo]$ wget https://mirror.bit.edu.cn/apache/kafka/2.5.0/kafka_2.12-2.5.0.tgz
[llg@localhost winfo]$ tar -zxvf kafka_2.12-2.5.0.tgz
```

3. Edit the Kafka configuration file server.properties

```
[llg@localhost /]$ vim /winfo/kafka_2.12-2.5.0/config/server.properties
```

```properties
listeners=PLAINTEXT://192.168.62.8:9092
advertised.listeners=PLAINTEXT://192.168.62.8:9092
zookeeper.connect=192.168.62.8:2181
```

4. Start Kafka

Official quickstart: http://kafka.apache.org/quickstart

Start ZooKeeper first, then the broker:

```
[llg@localhost kafka_2.12-2.5.0]$ nohup bin/zookeeper-server-start.sh config/zookeeper.properties &
[llg@localhost kafka_2.12-2.5.0]$ nohup bin/kafka-server-start.sh config/server.properties &
```
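Before wiring Filebeat and Logstash to Kafka, you can confirm that ZooKeeper and the broker are listening. The Python sketch below is a plain TCP probe, so it only shows that something accepts connections on the port, not that Kafka is healthy. The ports are the ZooKeeper default 2181 and the 9092 listener configured above; KAFKA_HOST and CHECKS are illustrative names.

```python
import socket


def port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


# Kafka host from the resource table; ports from the configuration above.
KAFKA_HOST = "192.168.62.8"
CHECKS = {"zookeeper": 2181, "kafka": 9092}
```

To run the probe, evaluate {name: port_open(KAFKA_HOST, port) for name, port in CHECKS.items()}. Running it from the Filebeat or Logstash host also confirms that 192.168.62.8 is reachable from there.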

Deploying Filebeat for data collection

1. Download the latest Filebeat archive from the official website

Official download page: https://www.elastic.co/cn/downloads/beats/filebeat

Download link used here: https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.7.1-linux-x86_64.tar.gz

2. Download and extract filebeat-7.7.1-linux-x86_64.tar.gz

```
[llg@localhost ~]$ cd /winfo/
[llg@localhost winfo]$ wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-7.7.1-linux-x86_64.tar.gz
[llg@localhost winfo]$ tar -zxvf filebeat-7.7.1-linux-x86_64.tar.gz
```

3. Create the Filebeat configuration file httpjson.yml

```
[llg@localhost winfo]$ vim /winfo/filebeat-7.7.1-linux-x86_64/httpjson.yml
```

```yaml
filebeat.inputs:
- type: httpjson
  http_method: GET
  interval: 5s
  json_objects_array: hits.hits
  url: http://192.168.62.1:2888/data7
  enabled: true

output.kafka:
  hosts: ["192.168.62.8:9092"]
  topic: track
  partition.round_robin:
    reachable_only: false
  required_acks: 1
  compression: gzip
  max_message_bytes: 1000000
```
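With json_objects_array: hits.hits, the httpjson input treats the array at that path of each response as a list of objects and emits one event per element. The Python sketch below only mimics that splitting step, to show the response shape the data source is expected to return. It is not Filebeat's code. The sample response is hypothetical: its field names are borrowed from the mapping and the Logstash filter, and its values are invented.

```python
def split_objects(response, path="hits.hits"):
    """Follow a dotted path (like json_objects_array) and return the array there.

    Each element of that array corresponds to one event that Filebeat would
    publish to the Kafka topic; this function only mimics the splitting step.
    """
    node = response
    for key in path.split("."):
        node = node[key]
    if not isinstance(node, list):
        raise TypeError(f"{path} does not point to an array")
    return node


# Hypothetical response from http://192.168.62.1:2888/data7 (values made up).
SAMPLE_RESPONSE = {"hits": {"hits": [
    {"MMSI": "412000001", "JD": 122.1, "WD": 30.2, "SJ": "2020-06-01 08:00:00"},
    {"MMSI": "412000002", "JD": 122.3, "WD": 30.4, "SJ": "2020-06-01 08:00:05"},
]}}

if __name__ == "__main__":
    for obj in split_objects(SAMPLE_RESPONSE):
        print(obj)
```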

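4. Start Filebeat

```
[llg@localhost filebeat-7.7.1-linux-x86_64]$ nohup ./filebeat -e -c httpjson.yml -d "publish" &
```

The flags work as follows:

- -e sends the log output to stderr;
- -c selects the configuration file;
- -d "publish" enables debug output for published events.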

Deploying the Logstash data processor

1. Download the latest Logstash archive from the official website

Official download page: https://www.elastic.co/cn/downloads/logstash

Download link used here: https://artifacts.elastic.co/downloads/logstash/logstash-7.7.1.tar.gz

2. Download and extract logstash-7.7.1.tar.gz

```
[llg@localhost ~]$ cd /winfo/
[llg@localhost winfo]$ wget https://artifacts.elastic.co/downloads/logstash/logstash-7.7.1.tar.gz
[llg@localhost winfo]$ tar -zxvf logstash-7.7.1.tar.gz
```

3. Create the Logstash pipeline configuration kafka.yml

Despite the .yml extension, this file uses Logstash's own pipeline syntax, not YAML.

```
[llg@localhost ~]$ vim /winfo/logstash-7.7.1/config/kafka.yml
```

```
input {
  kafka {
    bootstrap_servers => "192.168.62.8:9092"
    topics => ["track"]
    group_id => "628"
    auto_offset_reset => "latest"
    consumer_threads => 5
    decorate_events => true
  }
}
filter {
  json {
    source => "message"
  }
  mutate {
    add_field => { "location" => "%{WD},%{JD}" }
    remove_field => ["@metadata", "@timestamp", "@version", "message", "JD", "WD"]
  }
}
output {
  # Uncomment while debugging to print events to the console
  #stdout {
  #  codec => rubydebug
  #}
  elasticsearch {
    hosts => ["192.168.62.13", "192.168.62.14", "192.168.62.15"]
    index => "track"
  }
}
```
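The following Python function emulates what the filter block does to one event. It is an illustration, not Logstash's implementation. It takes three steps:

1. parse message as JSON;
2. build location from WD and JD;
3. drop the helper fields.

WD and JD are presumably latitude (纬度) and longitude (经度). Their "WD,JD" order matches the "lat,lon" string format that Elasticsearch accepts for geo_point. The sample event in the main block is hypothetical.

```python
import json

# Fields removed by mutate { remove_field => [...] } in the pipeline above.
DROPPED = {"@metadata", "@timestamp", "@version", "message", "JD", "WD"}


def transform(event):
    """Python emulation of the filter block; not Logstash's implementation."""
    doc = dict(event)
    doc.update(json.loads(doc["message"]))          # json { source => "message" }
    doc["location"] = f"{doc['WD']},{doc['JD']}"    # add_field: "lat,lon" string
    return {k: v for k, v in doc.items() if k not in DROPPED}  # remove_field


if __name__ == "__main__":
    raw = {"@version": "1", "@timestamp": "2020-06-01T00:00:00Z",
           "message": json.dumps({"MMSI": "412000001", "JD": 122.1,
                                  "WD": 30.2, "SJ": "2020-06-01 08:00:00"})}
    print(transform(raw))
```

The order of the two values matters. The array form of a geo_point uses the opposite order, [lon, lat], so the two forms are easy to mix up.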

4. Start Logstash

```
[llg@localhost /]$ nohup /winfo/logstash-7.7.1/bin/logstash -f /winfo/logstash-7.7.1/config/kafka.yml &
```
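Because the mapping disables _source, search hits carry no original document. The values of the store: true fields have to be requested explicitly through stored_fields. Below is a minimal Python sketch of such a search request, assuming unauthenticated HTTP access to the cluster. The term query on MMSI is only an example body, and the function names are illustrative.

```python
import json
import urllib.request

# Fields declared with "store": true in the track mapping.
STORED_FIELDS = ["MC", "HX", "HS", "MMSI", "SJ", "location"]


def search_request(host, index, query_body, size=100):
    """Build (but do not send) POST /<index>/_search asking for stored fields."""
    body = dict(query_body, stored_fields=STORED_FIELDS, size=size)
    return urllib.request.Request(
        url=f"http://{host}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def hit_fields(response):
    """Extract each hit's stored fields (values are lists; {} if none)."""
    return [hit.get("fields", {}) for hit in response["hits"]["hits"]]
```

Send the request with urllib.request.urlopen(req), decode the JSON reply, and pass it to hit_fields. Stored values come back as arrays under each hit's fields key.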