Configuring NFS Storage for a Kubernetes Cluster

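1. Setting up a highly available K8S cluster

For how to build a highly available Kubernetes cluster, see my article 《在ubuntu上通过kubeadm部署K8S(v1.13.4)高可用集群》 (deploying a highly available K8S v1.13.4 cluster on Ubuntu with kubeadm). The resulting cluster looks similar to the following: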

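2. Installing and configuring the NFS server

NFS (Network File System) is a network file storage system that is enabled on Linux through configuration. For a private-cloud K8S cluster that needs persistent container storage, it is one of the simpler storage options to set up. For installing and configuring the NFS server, see my article 《ubuntu 18.04下 NFS 服务安装和配置方法》 (installing and configuring the NFS service on Ubuntu 18.04). The rest of this article uses the following nodes for demonstration: the NFS server has IP 192.168.232.105 and is the cluster's ubuntu-k8s-worker2 node; the NFS client has IP 192.168.232.101 and is the cluster's ubuntu-k8s-master1 node.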

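3. Using NFS with dynamically provisioned PVs

For mounting NFS directly, and for using NFS through manually created PVs and PVCs, see the CSDN blog post 《Kubernetes 集群使用 NFS 网络文件存储》 by 哎_小羊_168: https://blog.csdn.net/aixiaoyang168/article/details/83988253

What follows only adds a dynamic PV provisioning method that is somewhat simpler than the one in that post. The examples use the namespace health-report; change it to suit your own environment (for example, default).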

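1) Create the data directory on the NFS server

On the NFS server (ubuntu-k8s-worker2), create /data/k8s:

$ mkdir -p /data/k8s

# Edit the exports file and add the line shown below

$ vim /etc/exports

/data/k8s *(rw,sync,insecure,no_subtree_check,no_root_squash)

# Apply the configuration

$ exportfs -r

# Check on the server that the export is active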

$ showmount -e localhost

Export list for localhost:

/data/k8s *

/data/share 192.168.232.0/24
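The manual edit of /etc/exports above can also be scripted. The following is a minimal sketch, not part of the original procedure: `add_export` is a hypothetical helper name, and it only appends the export line when an identical line is not already present, so it can be re-run safely.

```shell
#!/bin/sh
# add_export FILE LINE
# Hypothetical helper: append LINE to FILE unless an identical line already exists.
add_export() {
    exports_file=$1
    entry=$2
    # -q: quiet, -x: match the whole line, -F: treat the pattern as a fixed string
    grep -qxF -- "$entry" "$exports_file" 2>/dev/null \
        || printf '%s\n' "$entry" >> "$exports_file"
}
```

On the NFS server it could be used as `add_export /etc/exports '/data/k8s *(rw,sync,insecure,no_subtree_check,no_root_squash)'`, followed by `exportfs -r` as in the steps above.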

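2) Create the StorageClass

Create the YAML configuration file for the NFS-Client Provisioner. Be sure to change the NFS_SERVER and NFS_PATH values, together with the matching server and path under volumes, to those of your own NFS server. As before, the namespace is health-report; change it as needed (for example, default).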

$ vim nfs-client.yaml

kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
  namespace: health-report
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: health-report
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: health-report
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
  namespace: health-report
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: health-report
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-client-provisioner
  namespace: health-report
spec:
  selector:
    matchLabels:
      app: nfs-client-provisioner
  replicas: 1
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          image: quay.io/external_storage/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            # must match the provisioner field of the StorageClass below
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            # replace with your NFS server IP (192.168.232.105 in this article's demo environment)
            - name: NFS_SERVER
              value: 10.30.28.184
            - name: NFS_PATH
              value: /data/k8s
      volumes:
        - name: nfs-client-root
          nfs:
            # same server and path as NFS_SERVER and NFS_PATH above
            server: 10.30.28.184
            path: /data/k8s
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-storage
provisioner: fuseim.pri/ifs
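Because the NFS server address appears twice in nfs-client.yaml (once as NFS_SERVER and once under volumes), it is easy to change one and miss the other. The following is a minimal sketch of a substitution helper, assuming the placeholder address is the 10.30.28.184 shown in the file; `set_nfs_server` is a hypothetical name, not something the provisioner ships with.

```shell
#!/bin/sh
# set_nfs_server FILE OLD_IP NEW_IP
# Hypothetical helper: replace every occurrence of OLD_IP in FILE with NEW_IP.
set_nfs_server() {
    file=$1
    new=$3
    # Escape the dots so sed matches them literally rather than as "any character".
    old=$(printf '%s' "$2" | sed 's/\./\\./g')
    tmp=$(mktemp) || return 1
    # Write through a temp file, then copy back so the original file keeps its permissions.
    sed "s/${old}/${new}/g" "$file" > "$tmp" && cat "$tmp" > "$file"
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

For the demo environment in this article this would be `set_nfs_server nfs-client.yaml 10.30.28.184 192.168.232.105`; the manifest can then presumably be created with `kubectl apply -f nfs-client.yaml`, in the same way as the later manifests.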

The output of the create command looks like this:

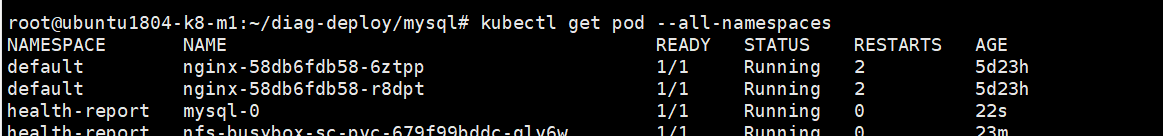

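Make sure the Pod, Role, RoleBinding and the other resource objects have all been created successfully.

The StorageClass has also been created, with the name nfs-storage.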
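The check described above can be done from the command line. This is a minimal sketch that wraps standard kubectl subcommands in a hypothetical function `check_provisioner`; the resource names come from the manifest, and the namespace defaults to health-report.

```shell
#!/bin/sh
# check_provisioner [NAMESPACE]
# Hypothetical helper: confirm the provisioner Deployment, its RBAC objects
# and the nfs-storage StorageClass exist.
check_provisioner() {
    ns=${1:-health-report}
    # Blocks until the Deployment's rollout has finished.
    kubectl rollout status deployment/nfs-client-provisioner -n "$ns" || return 1
    # Namespaced objects created by the manifest.
    kubectl get serviceaccount,role,rolebinding -n "$ns" || return 1
    # StorageClass is cluster-scoped, so no namespace flag is needed.
    kubectl get storageclass nfs-storage
}
```

Calling `check_provisioner health-report` returns a non-zero status as soon as one of the lookups fails.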

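3) Create the PVC

Create the PVC for the application in advance. For example, the YAML file below creates a PVC named nfs-sc-pvc; note that the volume.beta.kubernetes.io/storage-class annotation must be set to the nfs-storage StorageClass created earlier.

$ vim nfs-sc-pvc.yaml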

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: nfs-sc-pvc
  annotations:
    volume.beta.kubernetes.io/storage-class: "nfs-storage"
spec:
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

Then run kubectl apply -f nfs-sc-pvc.yaml -n health-report, and use kubectl get pvc -n health-report to check whether the PVC was created. On success, the status of nfs-sc-pvc changes to Bound.
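Binding is not always instantaneous, so a script may need to wait for it. The following is a minimal sketch of a polling loop; `wait_pvc_bound` is a hypothetical helper, and the retry count and delay defaults are arbitrary assumptions.

```shell
#!/bin/sh
# wait_pvc_bound NAME NAMESPACE [TRIES] [DELAY_SECONDS]
# Hypothetical helper: poll the PVC until its phase is Bound, or give up.
wait_pvc_bound() {
    name=$1
    ns=$2
    tries=${3:-30}
    delay=${4:-2}
    i=0
    while [ "$i" -lt "$tries" ]; do
        # .status.phase is Pending until the provisioner has created and bound a PV.
        phase=$(kubectl get pvc "$name" -n "$ns" -o jsonpath='{.status.phase}' 2>/dev/null)
        if [ "$phase" = "Bound" ]; then
            echo "PVC $name is Bound"
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    echo "PVC $name not Bound after $tries checks (last phase: ${phase:-unknown})" >&2
    return 1
}
```

For the claim above this would be `wait_pvc_bound nfs-sc-pvc health-report`. If it times out, the provisioner Pod's logs are usually the first place to look.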

4) Create the busybox sample application

Next, create a sample application named nfs-busybox-sc-pvc whose persistentVolumeClaim refers to the nfs-sc-pvc created in the previous step.

$ vim nfs-busybox-sc-pvc.yaml

# apps/v1 is used here, as for the provisioner Deployment (apps/v1beta1 is deprecated)
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-busybox-sc-pvc
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nfs-busybox-sc-pvc
  template:
    metadata:
      labels:
        name: nfs-busybox-sc-pvc
    spec:
      containers:
        - image: busybox
          command:
            - sh
            - -c
            - 'while true; do date > /mnt/index.html; hostname >> /mnt/index.html; sleep 10m; done'
          imagePullPolicy: IfNotPresent
          name: busybox
          volumeMounts:
            - name: nfs
              mountPath: "/mnt"
      volumes:
        - name: nfs
          persistentVolumeClaim:
            claimName: nfs-sc-pvc

Then run kubectl apply -f nfs-busybox-sc-pvc.yaml -n health-report

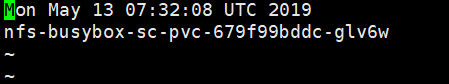

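Run kubectl exec -it nfs-busybox-sc-pvc-679f99bddc-glv6w -n health-report -- /bin/sh to open a shell in the container and view the contents of /mnt/index.html. The generated suffix of the Pod name will be different in your cluster.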
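Since the Pod name contains a generated suffix, it can be looked up by label instead of being copied by hand. This is a minimal sketch assuming the label name=nfs-busybox-sc-pvc from the Deployment manifest; `first_pod` and `show_index` are hypothetical helper names.

```shell
#!/bin/sh
# first_pod NAMESPACE SELECTOR
# Hypothetical helper: print the name of the first Pod matching a label selector.
first_pod() {
    kubectl get pods -n "$1" -l "$2" -o jsonpath='{.items[0].metadata.name}'
}

# show_index NAMESPACE SELECTOR
# Print /mnt/index.html from the first matching Pod without opening a shell.
show_index() {
    pod=$(first_pod "$1" "$2") || return 1
    kubectl exec "$pod" -n "$1" -- cat /mnt/index.html
}
```

For the example above: `show_index health-report name=nfs-busybox-sc-pvc`.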

Meanwhile, the corresponding directory on the NFS server (for example /data/k8s/health-report-nfs-sc-pvc-pvc-f267e35a-754e-11e9-8acf-0050569e7cab) contains an index.html with the same content.
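The directory name in the example appears to follow the pattern namespace-PVC name-PV name under the export root, which is how the nfs-client provisioner is understood to name its directories. The following is a minimal sketch that builds that path; `nfs_pv_dir` and `pvc_nfs_dir` are hypothetical helpers, and the pattern should be treated as an assumption to verify against your provisioner version.

```shell
#!/bin/sh
# nfs_pv_dir EXPORT_ROOT NAMESPACE PVC_NAME PV_NAME
# Hypothetical helper: build the expected directory path on the NFS server.
nfs_pv_dir() {
    printf '%s/%s-%s-%s\n' "$1" "$2" "$3" "$4"
}

# pvc_nfs_dir EXPORT_ROOT NAMESPACE PVC_NAME
# Look up the bound PV name from the PVC, then build the path.
pvc_nfs_dir() {
    pv=$(kubectl get pvc "$3" -n "$2" -o jsonpath='{.spec.volumeName}') || return 1
    nfs_pv_dir "$1" "$2" "$3" "$pv"
}
```

For example, `pvc_nfs_dir /data/k8s health-report nfs-sc-pvc` prints the path to check on the NFS server.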

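5) Create the mysql sample application

The configuration file for the mysql application is shown below. Note that storageClassName must be set to the nfs-storage StorageClass created earlier.

$ vim mysql-statefulset.yaml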

apiVersion: v1
kind: ConfigMap
metadata:
  name: mysql
  namespace: health-report
data:
  my.cnf: |
    [mysqld]
    pid-file = /var/run/mysqld/mysqld.pid
    socket = /var/run/mysqld/mysqld.sock
    datadir = /var/lib/mysql
    secure-file-priv = NULL
    symbolic-links=0
    skip-host-cache
    skip-name-resolve
    sql_mode=STRICT_TRANS_TABLES,NO_ZERO_IN_DATE,NO_ZERO_DATE,ERROR_FOR_DIVISION_BY_ZERO,NO_ENGINE_SUBSTITUTION
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: mysql
  labels:
    app: health-report
  namespace: health-report
spec:
  serviceName: mysql
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  template:
    metadata:
      labels:
        app: mysql
    spec:
      containers:
        - name: mysql
          image: mysql
          imagePullPolicy: Always
          # Note: the official mysql image also expects MYSQL_ROOT_PASSWORD (or an
          # equivalent variable) in env to initialize; it is not set in this example.
          ports:
            - containerPort: 3306
              name: mysql
          volumeMounts:
            # data directory, backed by the PVC from volumeClaimTemplates
            - mountPath: "/var/lib/mysql"
              name: healthdata
            # my.cnf from the ConfigMap above
            - name: config-volume
              mountPath: "/etc/mysql"
      volumes:
        - name: config-volume
          configMap:
            name: mysql
            items:
              - key: my.cnf
                path: my.cnf
  volumeClaimTemplates:
    - metadata:
        name: healthdata
      spec:
        accessModes:
          - ReadWriteOnce
        storageClassName: nfs-storage
        resources:
          requests:
            storage: 1Gi

Run kubectl apply -f mysql-statefulset.yaml -n health-report

On the NFS server you can then see that the corresponding mysql data directory has been created.
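A StatefulSet names the PVCs it creates from volumeClaimTemplates as template name-StatefulSet name-ordinal, so the claim for this example should be healthdata-mysql-0. The following is a minimal sketch that lists the expected claim names for a given replica count; `statefulset_pvc_names` is a hypothetical helper.

```shell
#!/bin/sh
# statefulset_pvc_names TEMPLATE_NAME STATEFULSET_NAME REPLICAS
# Hypothetical helper: print the PVC name expected for each replica ordinal.
statefulset_pvc_names() {
    template=$1
    sts=$2
    replicas=$3
    i=0
    while [ "$i" -lt "$replicas" ]; do
        printf '%s-%s-%s\n' "$template" "$sts" "$i"
        i=$((i + 1))
    done
}
```

With `statefulset_pvc_names healthdata mysql 1` the single expected claim is printed; assuming the directory naming seen in the busybox example, the mysql data directory on the NFS server would then start with health-report-healthdata-mysql-0-pvc-.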