Opencv249和Opencv3.0以上的 SolvePnp函数详解(附带程序、算例)

最近要做一个算法,用到了位姿估计。位姿估计的使用范围非常广泛。主要解决的问题为:在给出2D-3D若干点对以及相片的内参信息,如何求得相机中心在世界坐标系下的坐标以及相机的方向(旋转矩阵)。为此笔者做了大量研究,看了许多主流的文章,也是用了许多相关的函数库。主要有OpenMVG、OpenGV、OpenCV这三种。这三个库虽然都集成了EPnp、Upnp、P3P等多种算法,但实际差别还是很大。这一篇博客主要对opencv中的SolvePnp算法做一个总结以及各类实验。

PnP的具体原理我就不过多解释了,这里放几个链接供大家学习:

首先是Opencv的官方文档:https://docs.opencv.org/2.4/modules/calib3d/doc/camera_calibration_and_3d_reconstruction.html#solvepnp

然后是一篇解释Pnp的很有用的一篇文章:http://blog.csdn.net/cocoaqin/article/details/77841261

然后是Epnp的英文论文:https://pdfs.semanticscholar.org/6ed0/083ff42ac966a6f37710e0b5555b98fd7565.pdf

论文记得用谷歌打开。

我们就直接上实验吧。先看原始数据

333.965515 110.721138 45.893448 164.000000 1909.000000

327.870117 110.772079 45.835598 2578.000000 1970.000000

327.908630 115.816376 43.036915 2858.000000 3087.000000

333.731628 115.862755 43.031918 95.000000 3051.000000

330.019196 110.630211 45.871151 1727.000000 1942.000000

330.043457 115.823494 43.027847 1855.000000 3077.000000

331.949371 115.881104 43.020267 943.000000 3072.000000

331.943970 110.598534 45.909332 962.000000 1926.000000

没错,用空格分开。前三列依次为x,y,z坐标,后两列为图像的像素坐标。

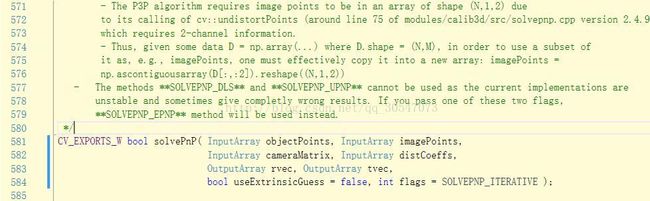

然后我们看看SovePnp的源码都需要些什么

我们发现除了函数本身定义之外上面还有许多参数解释。嗯,Opencv果然是专业。

然后我们再看Opencv源码调用:

bool solvePnP( InputArray _opoints, InputArray _ipoints,

InputArray _cameraMatrix, InputArray _distCoeffs,

OutputArray _rvec, OutputArray _tvec, bool useExtrinsicGuess, int flags )

{

CV_INSTRUMENT_REGION()

Mat opoints = _opoints.getMat(), ipoints = _ipoints.getMat();

int npoints = std::max(opoints.checkVector(3, CV_32F), opoints.checkVector(3, CV_64F));

CV_Assert( npoints >= 0 && npoints == std::max(ipoints.checkVector(2, CV_32F), ipoints.checkVector(2, CV_64F)) );

Mat rvec, tvec;

if( flags != SOLVEPNP_ITERATIVE )

useExtrinsicGuess = false;

if( useExtrinsicGuess )

{

int rtype = _rvec.type(), ttype = _tvec.type();

Size rsize = _rvec.size(), tsize = _tvec.size();

CV_Assert( (rtype == CV_32F || rtype == CV_64F) &&

(ttype == CV_32F || ttype == CV_64F) );

CV_Assert( (rsize == Size(1, 3) || rsize == Size(3, 1)) &&

(tsize == Size(1, 3) || tsize == Size(3, 1)) );

}

else

{

_rvec.create(3, 1, CV_64F);

_tvec.create(3, 1, CV_64F);

}

rvec = _rvec.getMat();

tvec = _tvec.getMat();

Mat cameraMatrix0 = _cameraMatrix.getMat();

Mat distCoeffs0 = _distCoeffs.getMat();

Mat cameraMatrix = Mat_(cameraMatrix0);

Mat distCoeffs = Mat_(distCoeffs0);

bool result = false;

if (flags == SOLVEPNP_EPNP || flags == SOLVEPNP_DLS || flags == SOLVEPNP_UPNP)

{

Mat undistortedPoints;

undistortPoints(ipoints, undistortedPoints, cameraMatrix, distCoeffs);

epnp PnP(cameraMatrix, opoints, undistortedPoints);

Mat R;

PnP.compute_pose(R, tvec);

Rodrigues(R, rvec);

result = true;

}

else if (flags == SOLVEPNP_P3P)

{

CV_Assert( npoints == 4);

Mat undistortedPoints;

undistortPoints(ipoints, undistortedPoints, cameraMatrix, distCoeffs);

p3p P3Psolver(cameraMatrix);

Mat R;

result = P3Psolver.solve(R, tvec, opoints, undistortedPoints);

if (result)

Rodrigues(R, rvec);

}

else if (flags == SOLVEPNP_ITERATIVE)

{

CvMat c_objectPoints = opoints, c_imagePoints = ipoints;

CvMat c_cameraMatrix = cameraMatrix, c_distCoeffs = distCoeffs;

CvMat c_rvec = rvec, c_tvec = tvec;

cvFindExtrinsicCameraParams2(&c_objectPoints, &c_imagePoints, &c_cameraMatrix,

c_distCoeffs.rows*c_distCoeffs.cols ? &c_distCoeffs : 0,

&c_rvec, &c_tvec, useExtrinsicGuess );

result = true;

}

else

CV_Error(CV_StsBadArg, "The flags argument must be one of SOLVEPNP_ITERATIVE, SOLVEPNP_P3P, SOLVEPNP_EPNP or SOLVEPNP_DLS");

return result;

} 然后P3P算法要求输入的控制点个数只能是4对。

好,没问题了,我们准备好图像,然后开始调用。

我们首先构造好K矩阵,fx、fy都是以像素为单位的焦距。我们必须事先知道焦距,或者能从Exif中解析出焦距信息。好,我们直接上核心代码。至于贴图函数

void MappingCloud(VertexPositionColor*&CloudPtr, int PointNum,cv::Mat IMG, cv::Mat K, cv::Mat nin_R, cv::Mat T, bool ifout , std::string OutColorCloudFileName)

{

cout << "Coloring Cloud..." << endl;

std::ofstream CloudOuter;

if (ifout == true)

CloudOuter.open(OutColorCloudFileName);

double reProjection = 0;

for (int i = 0; i < PointNum; i++)

{

//计算3D点云在影像上的投影位置(像素)

cv::Mat SingleP(cv::Matx31d(//这里必须是Matx31d,如果是f则会报错。opencv还真是娇气

CloudPtr[i].x,

CloudPtr[i].y,

CloudPtr[i].z));

cv::Mat change = K*(nin_R*SingleP + T);

change /= change.at(2, 0);

//cout << change.t() << endl;

int xPixel = cvRound(change.at(0, 0));

int yPixel = cvRound(change.at(1, 0));

//取得颜色

uchar* data = IMG.ptr(yPixel);

int Blue =data[xPixel * 3+0]; //第row行的第col个像素点的第一个通道值 Blue

int Green = data[xPixel * 3 + 1]; // Green

int Red = data[xPixel * 3 + 2]; // Red

//颜色赋值

CloudPtr[i].R = Red;

CloudPtr[i].G = Green;

CloudPtr[i].B = Blue;

if (ifout == true)

CloudOuter << CloudPtr[i].x << " " << CloudPtr[i].y << " " << CloudPtr[i].z << " " << CloudPtr[i].R << " " << CloudPtr[i].G << " " << CloudPtr[i].B << endl;

}

if (ifout == true)

CloudOuter.close();

cout << "done!" << endl;

}

std::string intToStdtring(int Num)

{

std::stringstream strStream;

strStream << Num;

std::string s = std::string(strStream.str());

return s;

}

void OpencvPnPTest()//测试opencv的Pnp算法

{

std::ifstream reader(".\\TextureMappingData\\杨芳8点坐标.txt");

if (!reader)

{

std::cout << "打开错误!";

system("pause");

exit(0);

}

string imgName = std::string(".\\TextureMappingData\\IMG_0828.JPG");

cv::Mat IMG = cv::imread(".\\TextureMappingData\\IMG_0828.JPG");

double IMGW = IMG.cols;//杨芳8点坐标.txt

double IMGH = IMG.rows;

double RealWidth = 35.9;

double CCDWidth = RealWidth / (IMGW >= IMGH ? IMGW : IMGH);//一定要取较长的那条边

double f = 100.0;

double fpixel = f / CCDWidth;

cv::Mat K_intrinsic(cv::Matx33d(

fpixel, 0, IMGH / 2.0,

0, fpixel, IMGW / 2.0,

0, 0, 1));

int UsePointCont = 5;

vector ConP3DVec;

vector ConP2DVec;

cv::Point3f ConP3D;

cv::Point2f ConP2D;

cout << "AllPoints: " << endl;

while (reader >> ConP3D.x)

{

reader >> ConP3D.y;

reader >> ConP3D.z;

reader >> ConP2D.x;

reader >> ConP2D.y;

ConP3DVec.push_back(ConP3D);

ConP2DVec.push_back(ConP2D);

cout << setprecision(10)

<< ConP3D.x << " " << ConP3D.y << " " << ConP3D.z << " "

<< ConP2D.x << " " << ConP2D.y << endl;

if (ConP3DVec.size() == UsePointCont)

break;

}

reader.close();

cout << "BaseInformation: " << endl;

cout << "imgName: " << imgName<< endl;

cout << "width: " << IMGW << endl;

cout << "height: " << IMGH << endl;

cout << "UsePointCont: " << UsePointCont << endl;

cout << "RealWidth: " << RealWidth << endl;

cout << "camerapixel: " << CCDWidth << endl;

cout << "f: " << f << endl;

cout << "fpixel: " << fpixel << endl;

cout << "KMatrix: " << K_intrinsic << endl;

//对于后母戊鼎opencv原始几种解法报告:

//对于8个点的情况:EPnP表现良好,DLS表现良好,EPnp与Upnp调用的函数接口相同

//对于4个点的情况:P3P表现良好,EPnp表现良好,然而P3P实际输入点数为4个,那么最后一个点用于检核。

//Epnp在4点的时候表现时好时坏,和控制点的选取状况相关。倘若增加为5个点,则精度有着明显提升

//EPnP在4点的情况下贴图完全错误,比后方交会的效果还要更加扭曲

//4点DLS的方法取得了和EPnp同样的贴图结果,从结果来看非常扭曲

//4点的ITERATOR方法取得了很好的贴图效果,甚至比P3P还要好,因为P3P只用三个点参与计算

//也就是说,在控制点对数量较少的情况下(4点),只有P3P可以给出正确结果

//增加一对控制点(变为5对)之后,Epnp的鲁棒性迅速提升,可以得到正确结果,DLS也同样取得了正确结果

//增加到5对控制点后P3P失效,因为只让用4个点,不多不少.

//同样的,5点的ITERATOR方法结果正确。

//----------结论:使用多于4点的EPnP最为稳妥.所求得的矩阵为计算机视觉矩阵

//int flag = cv::SOLVEPNP_EPNP;std::string OutCloudPath = std::string(".\\Output\\EPNP_") + intToStdtring(UsePointCont) + std::string("Point_HMwuding.txt");

//int flag = cv::SOLVEPNP_DLS;std::string OutCloudPath = std::string(".\\Output\\DLS_") + intToStdtring(UsePointCont) + std::string("Point_HMwuding.txt");

//int flag = cv::SOLVEPNP_P3P;std::string OutCloudPath = std::string(".\\Output\\P3P_") + intToStdtring(UsePointCont) + std::string("Point_HMwuding.txt");

int flag = cv::SOLVEPNP_ITERATIVE; std::string OutCloudPath = std::string(".\\Output\\ITERATIVE_") + intToStdtring(UsePointCont) + std::string("Point_HMwuding.txt");

cout << endl << "Soving Pnp Using Method" << flag<<"..."<< endl;

cv::Mat Rod_r ,TransMatrix ,RotationR;

bool success = cv::solvePnP(ConP3DVec, ConP2DVec, K_intrinsic, cv::noArray(), Rod_r, TransMatrix,false, flag);

Rodrigues(Rod_r, RotationR);//将旋转向量转换为罗德里格旋转矩阵

cout << "r:" << endl << Rod_r << endl;

cout << "R:" << endl << RotationR << endl;

cout << "T:" << endl << TransMatrix << endl;

cout << "C(Camera center:):" << endl << -RotationR.inv()*TransMatrix << endl;//这个C果然是相机中心,十分准确

Comput_Reprojection(ConP3DVec, ConP2DVec, K_intrinsic, RotationR, TransMatrix);

//=====下面读取点云并进行纹理映射

cout << "Reading Cloud..." << endl;

std::string CloudPath = std::string(".\\PointCloud\\HMwuding.txt");

ScarletCloudIO CloudReader(CloudPath);

CloudReader.Read();

CloudReader.PrintStationStatus();

VertexPositionColor *CloudPtr = CloudReader.PtCloud().PointCloud;

int CloudNum = CloudReader.PtCloud().PointNum;

MappingCloud(CloudPtr,CloudNum, IMG, K_intrinsic,//点云、数量、内参

RotationR, TransMatrix,true, OutCloudPath);//旋转、平移(并非是相机中心)、点云最终的放置路径

//下面可以继续研究旋转平移以及比例缩放的影响

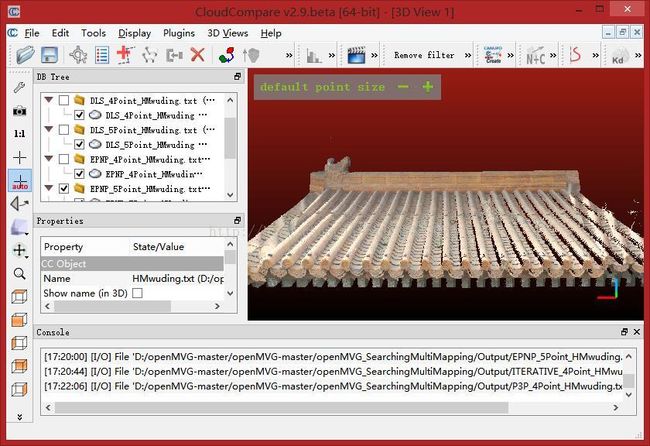

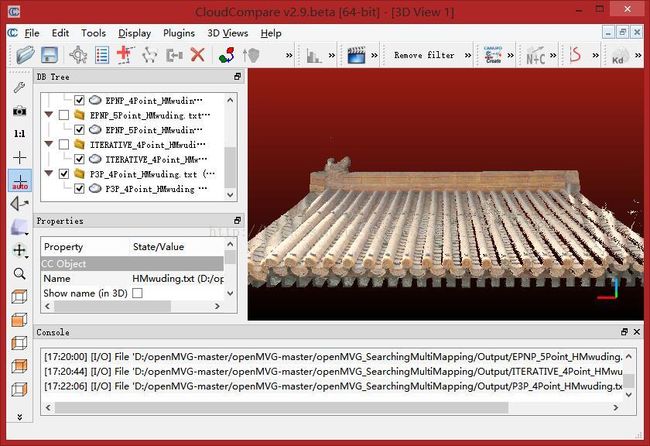

} 实验的报告以及调整的参数都已经在注释中写清楚了。下面我给出这几个贴图效果。我们采用的命名规则为:方法_控制点个数

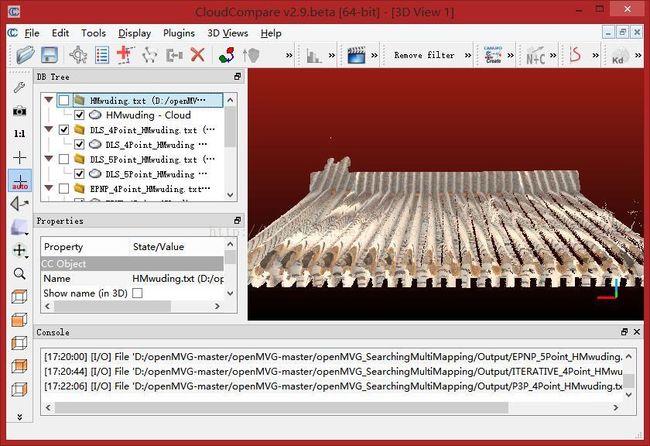

首先是原始

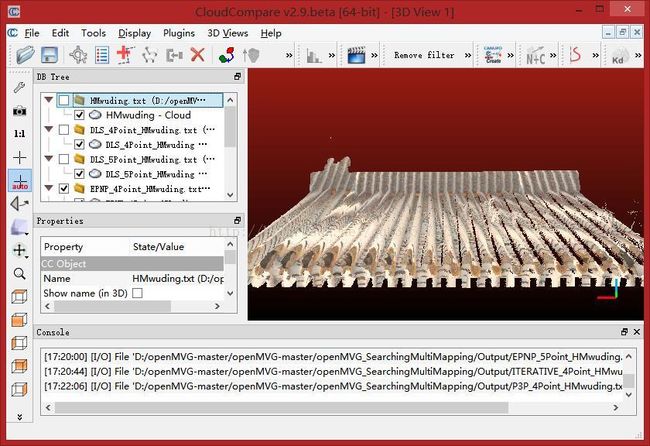

------------------DLS_4Point_HMwuding:(错误)

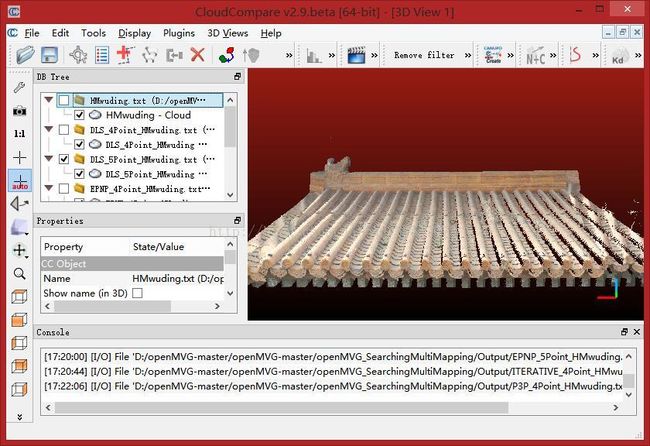

------------------DLS_5Point_HMwuding:(正确)

-----------------------EPNP_4Point_HMwuding.txt(错误)

-----------------------EPNP_5Point_HMwuding.txt(正确)

---------------------ITERATIVE_4Point_HMwuding.txt(正确)

-----------------P3P_4Point_HMwuding.txt(正确)

基本上就这么多了