Notes on Android Hardware Encoding and Decoding with MediaCodec

I. Introduction to MediaCodec

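The MediaCodec class provides access to low-level media codecs, i.e. encoder/decoder components. It is part of the Android low-level multimedia support infrastructure (normally used together with MediaExtractor, MediaSync, MediaMuxer, MediaCrypto, MediaDrm, Image, Surface, and AudioTrack).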

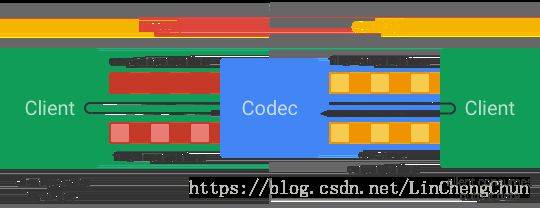

In broad terms, a codec processes input data to generate output data. It processes data asynchronously and uses a set of input and output buffers. At a simplistic level, you request (or receive) an empty input buffer, fill it up with data and send it to the codec for processing. The codec uses up the data and transforms it into one of its empty output buffers. Finally, you request (or receive) a filled output buffer, consume its contents and release it back to the codec.


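1. Data Types

A codec operates on three kinds of data: compressed data, raw audio data, and raw video data. All three can be processed using ByteBuffers, but for raw video you should use a Surface to improve codec performance, because a Surface uses native video buffers without mapping or copying them into ByteBuffers. You normally cannot access the raw video data when using a Surface, but you can use the ImageReader class to obtain the decoded raw video frames. This may still be more efficient than using ByteBuffers, because some native buffers may be mapped into direct ByteBuffers. In ByteBuffer mode, you can access raw video frames through the Image class and getInputImage(int)/getOutputImage(int).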

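* Compressed Buffers

Input buffers (for decoders) and output buffers (for encoders) contain compressed data according to the format's type. For video this is normally a single compressed video frame. For audio this is normally a single access unit (an encoded audio segment that typically holds a few milliseconds of audio, as dictated by the format), although this requirement is relaxed slightly: a buffer may contain several encoded audio access units. In either case, buffers do not start or end on arbitrary byte boundaries but on frame or access-unit boundaries, unless they are flagged with BUFFER_FLAG_PARTIAL_FRAME.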

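* Raw Audio Buffers

Raw audio buffers contain entire frames of PCM audio data, that is, one sample for each channel in channel order. Each sample is a 16-bit signed integer in native byte order. The following method extracts the samples of a single channel: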

short[] getSamplesForChannel(MediaCodec codec, int bufferId, int channelIx) {
  ByteBuffer outputBuffer = codec.getOutputBuffer(bufferId);
  MediaFormat format = codec.getOutputFormat(bufferId);
  ShortBuffer samples = outputBuffer.order(ByteOrder.nativeOrder()).asShortBuffer();
  int numChannels = format.getInteger(MediaFormat.KEY_CHANNEL_COUNT);
  if (channelIx < 0 || channelIx >= numChannels) {
    return null;
  }
  // Samples are interleaved, so every numChannels-th sample belongs to the same channel.
  short[] res = new short[samples.remaining() / numChannels];
  for (int i = 0; i < res.length; ++i) {
    res[i] = samples.get(i * numChannels + channelIx);
  }
  return res;
}

* Raw Video Buffers

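In ByteBuffer mode, video buffers are laid out according to their color format. You can get the supported color formats from getCodecInfo().getCapabilitiesForType(…).colorFormats. Video codecs may support three kinds of color formats:

native raw video format: marked by COLOR_FormatSurface; it can be used with an input or output Surface.

flexible YUV buffers (such as COLOR_FormatYUV420Flexible): these can be used with an input/output Surface, and also in ByteBuffer mode through getInputImage(int)/getOutputImage(int).

other, specific formats: these are normally supported only in ByteBuffer mode. Some are vendor-specific; the others are defined in MediaCodecInfo.CodecCapabilities.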
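For the specific (non-flexible) formats, the byte layout is the caller's responsibility. As a rough sketch, assuming 8-bit 4:2:0 data with even dimensions and no row padding, the snippet below computes the size of one frame and converts NV21 (a common camera preview layout, Y plane followed by interleaved V,U bytes) into NV12 (Y plane followed by interleaved U,V bytes, the layout usually associated with COLOR_FormatYUV420SemiPlanar). The class and method names are hypothetical and are not part of any Android API.

```java
/** Toy helpers for 8-bit YUV 4:2:0 semi-planar frames (hypothetical class, not an Android API). */
final class Yuv420Layout {
    private Yuv420Layout() {}

    /** Bytes in one 4:2:0 frame: a full-size Y plane plus quarter-size U and V planes. */
    static int frameSize(int width, int height) {
        return width * height * 3 / 2;
    }

    /**
     * Converts NV21 (Y plane, then V,U pairs) to NV12 (Y plane, then U,V pairs).
     * Assumes even width and height and tightly packed rows (stride == width).
     */
    static byte[] nv21ToNv12(byte[] nv21, int width, int height) {
        int ySize = width * height;
        int total = frameSize(width, height);
        if (nv21.length < total) {
            throw new IllegalArgumentException("frame too small: " + nv21.length);
        }
        byte[] nv12 = new byte[total];
        // The luma plane is identical in both layouts.
        System.arraycopy(nv21, 0, nv12, 0, ySize);
        // Swap every chroma pair: NV21 stores V first, NV12 stores U first.
        for (int i = ySize; i + 1 < total; i += 2) {
            nv12[i] = nv21[i + 1];
            nv12[i + 1] = nv21[i];
        }
        return nv12;
    }
}
```

Real devices may add row stride or plane alignment, which is why the flexible format together with the Image class is generally the safer choice when it is available.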

Since Android 5.1 (LOLLIPOP_MR1), all video codecs support flexible YUV 4:2:0 buffers.

* Accessing Raw Video ByteBuffers on Older Devices

~~~

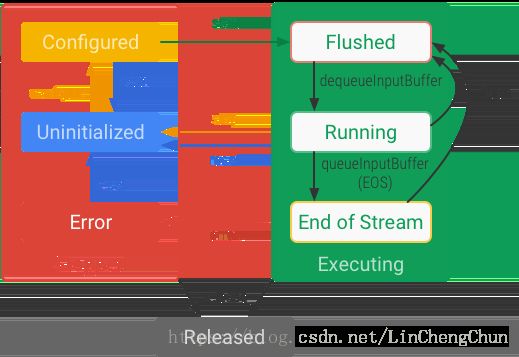

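2. States

Conceptually, a codec is in one of three states: Stopped, Executing, or Released. The Stopped state has three sub-states: Uninitialized, Configured, and Error. The Executing state also has three sub-states: Flushed, Running, and End-of-Stream.

When you create a codec with one of the factory methods, it is in the Uninitialized state. First call configure(…) to bring it to the Configured state, then call start() to move it to the Executing state. In this state you can process data through the buffer queues described above.

Immediately after start() the codec is in the Flushed sub-state, where it holds all the buffers. As soon as the first input buffer is dequeued, the codec moves to the Running sub-state, where it spends most of its life. When you queue an input buffer carrying the end-of-stream marker, the codec transitions to the End-of-Stream sub-state. In this state it no longer accepts input buffers, but it still produces output buffers until the end of stream is reached on the output. While in the Executing state, you can return to the Flushed sub-state at any time by calling flush().

Call stop() to return the codec to the Uninitialized state; it must be configured again before it can be reused. When you are done with a codec, you must release it by calling release().

On rare occasions the codec may encounter an error and move to the Error state. This is signalled by an invalid return value from a queuing operation, or sometimes by an exception. Call reset() to make the codec usable again; this moves it back to the Uninitialized state. Call release() to move it to the terminal Released state.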

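3. Creation

Use MediaCodecList to create a MediaCodec for a specific MediaFormat. When decoding a file or a stream, you can get the desired format from MediaExtractor.getTrackFormat. Add any specific features you need with MediaFormat.setFeatureEnabled, then call MediaCodecList.findDecoderForFormat to get the name of a codec that can handle that particular media format. Finally, create the codec with createByCodecName(String).

Alternatively, you can create a codec for a given MIME type with createDecoderByType(String) or createEncoderByType(String).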

* Creating secure decoders

~~~
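Before moving on to initialization, the life cycle described in the States section can be summarized as a small state machine. The following is only a toy model for reasoning about which call is legal in which state, assuming synchronous mode; `CodecStateModel` and its methods are hypothetical names and it is not the `android.media.MediaCodec` class.

```java
import java.util.EnumSet;

/** Toy model of the codec life cycle in synchronous mode (hypothetical, not an Android API). */
final class CodecStateModel {
    enum State { UNINITIALIZED, CONFIGURED, FLUSHED, RUNNING, END_OF_STREAM, ERROR, RELEASED }

    /** The three sub-states that together form the Executing state. */
    private static final EnumSet<State> EXECUTING =
            EnumSet.of(State.FLUSHED, State.RUNNING, State.END_OF_STREAM);

    private State state = State.UNINITIALIZED;

    State state() { return state; }

    void configure() { require(state == State.UNINITIALIZED, "configure"); state = State.CONFIGURED; }

    void start() { require(state == State.CONFIGURED, "start"); state = State.FLUSHED; }

    /** Dequeuing the first input buffer moves Flushed to Running. */
    void dequeueInputBuffer() {
        require(state == State.FLUSHED || state == State.RUNNING, "dequeueInputBuffer");
        state = State.RUNNING;
    }

    /** Queuing an input buffer that carries the end-of-stream flag. */
    void queueEndOfStream() { require(state == State.RUNNING, "queueEndOfStream"); state = State.END_OF_STREAM; }

    void flush() { require(EXECUTING.contains(state), "flush"); state = State.FLUSHED; }

    void stop() { require(EXECUTING.contains(state), "stop"); state = State.UNINITIALIZED; }

    /** Recovers from any state except Released, including Error. */
    void reset() { require(state != State.RELEASED, "reset"); state = State.UNINITIALIZED; }

    void fail() { require(state != State.RELEASED, "fail"); state = State.ERROR; }

    void release() { state = State.RELEASED; }

    private void require(boolean ok, String call) {
        if (!ok) throw new IllegalStateException(call + " is not allowed in state " + state);
    }
}
```

The model makes one point from the text explicit: after stop() the codec is Uninitialized again, so calling start() without a new configure() is rejected.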

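4. Initialization

After creating the codec, you can set a callback with setCallback if you want to process data asynchronously. Then configure the codec with the specific media format. This is when you can specify the output Surface for codecs that generate raw video data (for example, video decoders). It is also when you can set the decryption parameters for secure codecs (see MediaCrypto). Finally, because some codecs can operate in more than one mode, you must specify whether the codec should work as an encoder or as a decoder.

If you want to feed raw input video natively to a codec that consumes raw video (such as a video encoder), create a destination Surface for your input data with createInputSurface() after configuration. Alternatively, call setInputSurface(Surface) to make the codec use a previously created input Surface.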

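* Codec-specific Data

Some formats, notably AAC audio and MPEG4, H.264 and H.265 video, require the actual data to be preceded by a number of buffers containing setup data, also called codec-specific data. When processing such compressed formats, this data must be submitted to the codec after start() and before any frame data. It must be marked with the BUFFER_FLAG_CODEC_CONFIG flag in the call to queueInputBuffer.

Codec-specific data can also be included in the format passed to configure, as ByteBuffer entries with the keys "csd-0", "csd-1", and so on. These keys are always present in the track MediaFormat obtained from MediaExtractor. Codec-specific data in the format is submitted to the codec automatically upon start().

Encoders create and return the codec-specific data before any valid output buffer, in output buffers marked with the codec-config flag. Buffers containing codec-specific data have no meaningful timestamps.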
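To make the "csd-0"/"csd-1" idea concrete for H.264, where "csd-0" holds the SPS and "csd-1" holds the PPS, here is a minimal sketch that splits one Annex-B configuration buffer into its NAL units. It assumes every unit is introduced by a 4-byte start code (00 00 00 01); `AnnexBSplitter` is a hypothetical helper name, not an Android class.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

/** Splits an Annex-B byte stream at 4-byte start codes (hypothetical helper). */
final class AnnexBSplitter {
    private AnnexBSplitter() {}

    /** Returns the offset of every 00 00 00 01 start code in data. */
    static List<Integer> startCodeOffsets(byte[] data) {
        List<Integer> offsets = new ArrayList<>();
        for (int i = 0; i + 3 < data.length; i++) {
            if (data[i] == 0 && data[i + 1] == 0 && data[i + 2] == 0 && data[i + 3] == 1) {
                offsets.add(i);
                i += 3; // continue scanning right after this start code
            }
        }
        return offsets;
    }

    /** Returns one buffer per NAL unit; each buffer keeps its leading start code. */
    static List<ByteBuffer> split(byte[] data) {
        List<Integer> offsets = startCodeOffsets(data);
        List<ByteBuffer> units = new ArrayList<>();
        for (int k = 0; k < offsets.size(); k++) {
            int begin = offsets.get(k);
            int end = (k + 1 < offsets.size()) ? offsets.get(k + 1) : data.length;
            // slice() yields a view whose position is 0 and whose limit is the unit length.
            units.add(ByteBuffer.wrap(data, begin, end - begin).slice());
        }
        return units;
    }
}
```

For an encoder config buffer that contains an SPS followed by a PPS, the first returned buffer could be stored in a MediaFormat with setByteBuffer("csd-0", …) and the second with setByteBuffer("csd-1", …). Streams that use 3-byte start codes would need an extended scan.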

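5. Data Processing

Each codec maintains a set of input and output buffers that are referred to by a buffer ID in API calls. After a successful call to start(), the client "owns" neither input nor output buffers. In synchronous mode, call dequeueInputBuffer(…)/dequeueOutputBuffer(…) to obtain an input or output buffer from the codec. In asynchronous mode, you register a callback and receive available buffers automatically through MediaCodec.Callback.onInputBufferAvailable(…)/onOutputBufferAvailable(…).

After obtaining an input buffer, fill it with data and submit it to the codec with queueInputBuffer. Do not submit multiple input buffers with the same timestamp.

Once the codec has processed the data, it returns a read-only output buffer: through the onOutputBufferAvailable callback in asynchronous mode, or in response to dequeueOutputBuffer in synchronous mode. After the output buffer has been processed, call releaseOutputBuffer to return it to the codec.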

* Asynchronous Processing using Buffers

MediaCodec is typically used like this in asynchronous mode:

MediaCodec codec = MediaCodec.createByCodecName(name);
MediaFormat mOutputFormat; // member variable
codec.setCallback(new MediaCodec.Callback() {
  @Override
  void onInputBufferAvailable(MediaCodec mc, int inputBufferId) {
    ByteBuffer inputBuffer = codec.getInputBuffer(inputBufferId);
    // fill inputBuffer with valid data
    …
    codec.queueInputBuffer(inputBufferId, …);
  }

  @Override
  void onOutputBufferAvailable(MediaCodec mc, int outputBufferId, …) {
    ByteBuffer outputBuffer = codec.getOutputBuffer(outputBufferId);
    MediaFormat bufferFormat = codec.getOutputFormat(outputBufferId); // option A
    // bufferFormat is equivalent to mOutputFormat
    // outputBuffer is ready to be processed or rendered.
    …
    codec.releaseOutputBuffer(outputBufferId, …);
  }

  @Override
  void onOutputFormatChanged(MediaCodec mc, MediaFormat format) {
    // Subsequent data will conform to new format.
    // Can ignore if using getOutputFormat(outputBufferId)
    mOutputFormat = format; // option B
  }

  @Override
  void onError(…) {
    …
  }
});
codec.configure(format, …);
mOutputFormat = codec.getOutputFormat(); // option B
codec.start();
// wait for processing to complete
codec.stop();
codec.release();

* Synchronous Processing using Buffers

MediaCodec is typically used like this in synchronous mode:

MediaCodec codec = MediaCodec.createByCodecName(name);
codec.configure(format, …);
MediaFormat outputFormat = codec.getOutputFormat(); // option B
codec.start();
for (;;) {
  int inputBufferId = codec.dequeueInputBuffer(timeoutUs);
  if (inputBufferId >= 0) {
    ByteBuffer inputBuffer = codec.getInputBuffer(…);
    // fill inputBuffer with valid data
    …
    codec.queueInputBuffer(inputBufferId, …);
  }
  int outputBufferId = codec.dequeueOutputBuffer(…);
  if (outputBufferId >= 0) {
    ByteBuffer outputBuffer = codec.getOutputBuffer(outputBufferId);
    MediaFormat bufferFormat = codec.getOutputFormat(outputBufferId); // option A
    // bufferFormat is identical to outputFormat
    // outputBuffer is ready to be processed or rendered.
    …
    codec.releaseOutputBuffer(outputBufferId, …);
  } else if (outputBufferId == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
    // Subsequent data will conform to new format.
    // Can ignore if using getOutputFormat(outputBufferId)
    outputFormat = codec.getOutputFormat(); // option B
  }
}
codec.stop();
codec.release();

* Synchronous Processing using Buffer Arrays (deprecated)

The official example:

MediaCodec codec = MediaCodec.createByCodecName(name);
codec.configure(format, …);
codec.start();
ByteBuffer[] inputBuffers = codec.getInputBuffers();
ByteBuffer[] outputBuffers = codec.getOutputBuffers();
for (;;) {
  int inputBufferId = codec.dequeueInputBuffer(…);
  if (inputBufferId >= 0) {
    // fill inputBuffers[inputBufferId] with valid data
    …
    codec.queueInputBuffer(inputBufferId, …);
  }
  int outputBufferId = codec.dequeueOutputBuffer(…);
  if (outputBufferId >= 0) {
    // outputBuffers[outputBufferId] is ready to be processed or rendered.
    …
    codec.releaseOutputBuffer(outputBufferId, …);
  } else if (outputBufferId == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {
    outputBuffers = codec.getOutputBuffers();
  } else if (outputBufferId == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
    // Subsequent data will conform to new format.
    MediaFormat format = codec.getOutputFormat();
  }
}
codec.stop();
codec.release();

* End-of-stream Handling

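When you reach the end of the input data, you must signal it to the codec by setting the BUFFER_FLAG_END_OF_STREAM flag in the call to queueInputBuffer. You can set the flag on the last valid input buffer, or submit an additional empty input buffer with the flag set. In the second case the timestamp is ignored.

The codec continues to return output buffers until it signals the end of the output stream by setting the same end-of-stream flag in the MediaCodec.BufferInfo filled in by dequeueOutputBuffer or delivered through onOutputBufferAvailable.

Do not submit additional input buffers after signalling the end of the input stream, unless the codec has been flushed, or stopped and restarted.

* Using an Output Surface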

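With an output Surface, data processing is nearly identical to ByteBuffer mode, except that the output buffers are not accessible and are represented as null values. When using an output Surface, you have three choices for each output buffer: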

Do not render the buffer: Call releaseOutputBuffer(bufferId, false).

Render the buffer with the default timestamp: Call releaseOutputBuffer(bufferId, true).

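Render the buffer with a specific timestamp: Call releaseOutputBuffer(bufferId, timestamp).

* Transformations When Rendering onto Surface

When the codec is configured in Surface mode, any crop rectangle, rotation, and video scaling mode are applied automatically, with one exception: before Android 6.0, software decoders may not have applied the rotation when rendering onto a Surface.

* Using an Input Surface

When using an input Surface, there are no accessible input buffers, because buffers are passed automatically from the input Surface to the codec.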

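6. Seeking & Adaptive Playback Support

Adaptive playback support in video decoders only works when the codec is configured to decode onto a Surface.

* Stream Boundary and Key Frames

The input data must start at a suitable stream boundary: the first frame submitted after start() or flush() must be a key frame.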

* For decoders that do not support adaptive playback (including when not decoding onto a Surface)

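* For decoders that support and are configured for adaptive playback

To start decoding data that is not adjacent to previously submitted data (that is, after a seek), it is not necessary to flush the decoder. However, the input data after the discontinuity must start at a suitable stream boundary or key frame.

For some video formats, namely H.264, H.265, VP8 and VP9, it is also possible to change the picture size or configuration mid-stream. To do so, package the entire new codec-specific configuration data together with the key frame into a single buffer (including any start codes) and submit it to the codec.

You will receive an INFO_OUTPUT_FORMAT_CHANGED return value from dequeueOutputBuffer just after the picture-size change has taken place and before any frames with the new size are returned.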

7. Error handling

II. Supplementary MediaCodec and Audio/Video Basics, with Programming Examples

1. A test Activity that reads the audio and video tracks from an MP4 file and plays them back

package com.cclin.jubaohe.activity.Media;

import android.media.AudioFormat;
import android.media.AudioManager;
import android.media.AudioTrack;
import android.media.MediaCodec;
import android.media.MediaExtractor;
import android.media.MediaFormat;
import android.os.Bundle;
import android.view.Surface;
import android.view.SurfaceHolder;
import android.view.SurfaceView;
import android.view.View;
import android.widget.Button;

import com.cclin.jubaohe.R;
import com.cclin.jubaohe.base.BaseActivity;
import com.cclin.jubaohe.util.CameraUtil;
import com.cclin.jubaohe.util.LogUtil;
import com.cclin.jubaohe.util.SDPathConfig;

import java.io.File;
import java.io.IOException;
import java.nio.ByteBuffer;

/**
 * Created by LinChengChun on 2018/4/14.
 */
public class MediaTestActivity extends BaseActivity implements SurfaceHolder.Callback, View.OnClickListener {

    private final static String MEDIA_FILE_PATH = SDPathConfig.LIVE_MOVIE_PATH+"/18-04-12-10:47:06-0.mp4";
    private Surface mSurface;
    private SurfaceView mSvRenderFromCamera;
    private SurfaceView mSvRenderFromFile;
    Button mBtnCameraPreview;
    Button mBtnPlayMediaFile;
    private MediaStream mMediaStream;
    private Thread mVideoDecoderThread;
    private AudioTrack mAudioTrack;
    private Thread mAudioDecoderThread;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        mBtnCameraPreview = retrieveView(R.id.btn_camera_preview);
        mBtnPlayMediaFile = retrieveView(R.id.btn_play_media_file);
        mBtnCameraPreview.setOnClickListener(this);
        mBtnPlayMediaFile.setOnClickListener(this);
        mSvRenderFromCamera = retrieveView(R.id.sv_render);
        mSvRenderFromCamera.setOnClickListener(this);
        mSvRenderFromCamera.getHolder().addCallback(this);
        mSvRenderFromFile = retrieveView(R.id.sv_display);
        mSvRenderFromFile.setOnClickListener(this);
        mSvRenderFromFile.getHolder().addCallback(this);
        init();
    }

    @Override
    protected int initLayout() {
        return R.layout.activity_media_test;
    }

    private void init() {
        File file = new File(MEDIA_FILE_PATH);
        if (!file.exists()) {
            LogUtil.e("Media file does not exist!");
            return;
        }
        LogUtil.e("Media file found.");
    }

    private void startVideoDecoder() {
        mVideoDecoderThread = new Thread("mVideoDecoderThread") {
            @Override
            public void run() {
                MediaExtractor mediaExtractor = new MediaExtractor(); // reads audio/video samples from the file
                MediaCodec codec = null;
                try {
                    mediaExtractor.setDataSource(MEDIA_FILE_PATH);
                    int numTracks = mediaExtractor.getTrackCount(); // usually 2: one video and one audio track
                    LogUtil.e("track count: " + numTracks);
                    MediaFormat mMfVideo = null;
                    String strVideoMime = null;
                    for (int i = 0; i < numTracks; i++) { // inspect the format of every track
                        MediaFormat mediaFormat = mediaExtractor.getTrackFormat(i);
                        LogUtil.e("track format: " + mediaFormat);
                        String value = mediaFormat.getString(MediaFormat.KEY_MIME);
                        if (mMfVideo == null && value != null && value.startsWith("video/")) {
                            mMfVideo = mediaFormat;
                            strVideoMime = value;
                            mediaExtractor.selectTrack(i); // only samples of the selected track are returned
                        }
                    }
                    if (mMfVideo == null) {
                        LogUtil.e("no video track found");
                        return;
                    }
                    mSurface = mSvRenderFromFile.getHolder().getSurface();
                    codec = MediaCodec.createDecoderByType(strVideoMime); // create the decoder
                    codec.configure(mMfVideo, mSurface, null, 0); // decoded frames are rendered directly onto the Surface
                    codec.setVideoScalingMode(MediaCodec.VIDEO_SCALING_MODE_SCALE_TO_FIT);
                    codec.start(); // the codec enters the Executing state
                    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo(); // metadata of each output buffer
                    boolean inputDone = false;
                    boolean outputDone = false;
                    long previewStampUs = 0L;
                    LogUtil.e("decoding started");
                    while (!outputDone && !isInterrupted()) {
                        if (!inputDone) {
                            int inputBufferId = codec.dequeueInputBuffer(10); // timeout in microseconds
                            if (inputBufferId >= 0) {
                                ByteBuffer inputBuffer = codec.getInputBuffer(inputBufferId);
                                int size = mediaExtractor.readSampleData(inputBuffer, 0); // copy one sample into the input buffer
                                if (size < 0) {
                                    // No more samples: signal end of stream with an empty buffer.
                                    codec.queueInputBuffer(inputBufferId, 0, 0, 0, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                                    inputDone = true;
                                } else {
                                    long presentationTimeUs = mediaExtractor.getSampleTime(); // sample timestamp
                                    codec.queueInputBuffer(inputBufferId, 0, size, presentationTimeUs, 0); // submit to the decoder
                                    mediaExtractor.advance(); // move to the next sample
                                }
                            }
                        }
                        // Drain the output on every iteration, whether or not an input buffer was available.
                        int outputBufferIndex = codec.dequeueOutputBuffer(bufferInfo, 10000);
                        switch (outputBufferIndex) {
                            case MediaCodec.INFO_OUTPUT_FORMAT_CHANGED:
                                // getOutputFormat(int) cannot be used here: the value is an INFO_* code, not a buffer index.
                                LogUtil.e("INFO_OUTPUT_FORMAT_CHANGED:" + codec.getOutputFormat());
                                break;
                            case MediaCodec.INFO_TRY_AGAIN_LATER:
                                LogUtil.e("timed out waiting for a decoded frame");
                                break;
                            case MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED:
                                LogUtil.e("output buffers changed");
                                break;
                            default:
                                // The frame goes straight to the Surface, so the output ByteBuffer is not needed.
                                // Pace playback: sleep for the gap between this frame's timestamp and the previous one.
                                boolean firstTime = previewStampUs == 0L;
                                long newSleepUs = -1;
                                long sleepUs = bufferInfo.presentationTimeUs - previewStampUs;
                                if (!firstTime) {
                                    long cache = 0;
                                    newSleepUs = CameraUtil.fixSleepTime(sleepUs, cache, -100000);
                                }
                                previewStampUs = bufferInfo.presentationTimeUs;
                                if (newSleepUs < 0) {
                                    newSleepUs = 0;
                                }
                                Thread.sleep(newSleepUs / 1000);
                                codec.releaseOutputBuffer(outputBufferIndex, true); // release the output buffer and render it
                                if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                                    outputDone = true;
                                }
                                break;
                        }
                    }
                    LogUtil.e("decoding finished");
                    codec.stop();
                } catch (IOException e) {
                    e.printStackTrace();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    // Release native resources even when the thread is interrupted.
                    if (codec != null) {
                        codec.release();
                    }
                    mediaExtractor.release();
                }
            }
        };
        mVideoDecoderThread.start();
    }

    private void startAudioDecoder() {
        mAudioDecoderThread = new Thread("AudioDecoderThread") {
            @Override
            public void run() {
                MediaExtractor mediaExtractor = new MediaExtractor();
                MediaCodec codec = null;
                try {
                    mediaExtractor.setDataSource(MEDIA_FILE_PATH);
                    int numTracks = mediaExtractor.getTrackCount();
                    LogUtil.e("track count: " + numTracks);
                    MediaFormat mMfAudio = null;
                    String strAudioMime = null;
                    for (int i = 0; i < numTracks; i++) {
                        MediaFormat mediaFormat = mediaExtractor.getTrackFormat(i);
                        LogUtil.e("track format: " + mediaFormat);
                        String value = mediaFormat.getString(MediaFormat.KEY_MIME);
                        if (mMfAudio == null && value != null && value.startsWith("audio/")) {
                            mMfAudio = mediaFormat;
                            strAudioMime = value;
                            mediaExtractor.selectTrack(i);
                        }
                    }
                    if (mMfAudio == null) {
                        LogUtil.e("no audio track found");
                        return;
                    }
                    // mMfAudio.setInteger(MediaFormat.KEY_IS_ADTS, 1);
                    mMfAudio.setInteger(MediaFormat.KEY_BIT_RATE, 16000);
                    codec = MediaCodec.createDecoderByType(strAudioMime);
                    codec.configure(mMfAudio, null, null, 0);
                    codec.start();
                    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
                    long previewStampUs = 0L;
                    LogUtil.e("decoding started");
                    if (mAudioTrack == null) {
                        int sample_rate = mMfAudio.getInteger(MediaFormat.KEY_SAMPLE_RATE);
                        int channels = mMfAudio.getInteger(MediaFormat.KEY_CHANNEL_COUNT);
                        int sampleRateInHz = (int) (sample_rate * 1.004);
                        int channelConfig = channels == 1 ? AudioFormat.CHANNEL_OUT_MONO : AudioFormat.CHANNEL_OUT_STEREO;
                        int audioFormat = AudioFormat.ENCODING_PCM_16BIT;
                        int bfSize = AudioTrack.getMinBufferSize(sampleRateInHz, channelConfig, audioFormat) * 4;
                        mAudioTrack = new AudioTrack(AudioManager.STREAM_MUSIC, sampleRateInHz, channelConfig, audioFormat, bfSize, AudioTrack.MODE_STREAM);
                    }
                    mAudioTrack.play();
                    boolean inputDone = false;
                    boolean outputDone = false;
                    while (!outputDone && !isInterrupted()) {
                        if (!inputDone) {
                            int inputBufferId = codec.dequeueInputBuffer(10); // timeout in microseconds
                            if (inputBufferId >= 0) {
                                ByteBuffer inputBuffer = codec.getInputBuffer(inputBufferId);
                                int size = mediaExtractor.readSampleData(inputBuffer, 0);
                                if (size < 0) {
                                    // No more samples: signal end of stream with an empty buffer.
                                    codec.queueInputBuffer(inputBufferId, 0, 0, 0, MediaCodec.BUFFER_FLAG_END_OF_STREAM);
                                    inputDone = true;
                                } else {
                                    long presentationTimeUs = mediaExtractor.getSampleTime();
                                    codec.queueInputBuffer(inputBufferId, 0, size, presentationTimeUs, 0);
                                    mediaExtractor.advance();
                                }
                            }
                        }
                        int outputBufferIndex = codec.dequeueOutputBuffer(bufferInfo, 50000);
                        switch (outputBufferIndex) {
                            case MediaCodec.INFO_OUTPUT_FORMAT_CHANGED:
                                // getOutputFormat(int) cannot be used here: the value is an INFO_* code, not a buffer index.
                                LogUtil.e("INFO_OUTPUT_FORMAT_CHANGED:" + codec.getOutputFormat());
                                break;
                            case MediaCodec.INFO_TRY_AGAIN_LATER:
                                LogUtil.e("timed out waiting for decoded audio");
                                break;
                            case MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED:
                                LogUtil.e("output buffers changed");
                                break;
                            default:
                                LogUtil.e("outputBufferIndex = " + outputBufferIndex);
                                ByteBuffer outputByteBuffer = codec.getOutputBuffer(outputBufferIndex); // decoded PCM data
                                // Read exactly the valid region described by bufferInfo.
                                outputByteBuffer.position(bufferInfo.offset);
                                outputByteBuffer.limit(bufferInfo.offset + bufferInfo.size);
                                byte[] outData = new byte[bufferInfo.size];
                                outputByteBuffer.get(outData);
                                // Pace playback by the gap between consecutive presentation timestamps.
                                boolean firstTime = previewStampUs == 0L;
                                long newSleepUs = -1;
                                long sleepUs = bufferInfo.presentationTimeUs - previewStampUs;
                                if (!firstTime) {
                                    long cache = 0;
                                    newSleepUs = CameraUtil.fixSleepTime(sleepUs, cache, -100000);
                                }
                                previewStampUs = bufferInfo.presentationTimeUs;
                                if (newSleepUs < 0) {
                                    newSleepUs = 0;
                                }
                                Thread.sleep(newSleepUs / 1000);
                                mAudioTrack.write(outData, 0, outData.length); // play the audio
                                codec.releaseOutputBuffer(outputBufferIndex, false); // return the output buffer to the codec
                                if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_END_OF_STREAM) != 0) {
                                    outputDone = true;
                                }
                                break;
                        }
                    }
                    LogUtil.e("decoding finished");
                    codec.stop();
                } catch (IOException e) {
                    e.printStackTrace();
                } catch (InterruptedException e) {
                    e.printStackTrace();
                } finally {
                    // Release native resources even when the thread is interrupted.
                    if (codec != null) {
                        codec.release();
                    }
                    if (mAudioTrack != null) {
                        mAudioTrack.stop();
                        mAudioTrack.release();
                        mAudioTrack = null;
                    }
                    mediaExtractor.release();
                }
            }
        };
        mAudioDecoderThread.start();
    }

    @Override
    public void onClick(View view) {
        switch (view.getId()) {
            case R.id.sv_render:
                if (mMediaStream != null) {
                    mMediaStream.getCamera().autoFocus(null);
                }
                break;
            case R.id.sv_display:
                break;
            case R.id.btn_camera_preview:
                break;
            case R.id.btn_play_media_file:
                break;
            default:
                break;
        }
    }

    private int getDgree() {
        int rotation = getWindowManager().getDefaultDisplay().getRotation();
        int degrees = 0;
        switch (rotation) {
            case Surface.ROTATION_0:
                degrees = 0;
                break; // Natural orientation
            case Surface.ROTATION_90:
                degrees = 90;
                break; // Landscape left
            case Surface.ROTATION_180:
                degrees = 180;
                break; // Upside down
            case Surface.ROTATION_270:
                degrees = 270;
                break; // Landscape right
        }
        return degrees;
    }

    private void onMediaStreamCreate() {
        if (mMediaStream == null)
            mMediaStream = new MediaStream(this, mSvRenderFromCamera.getHolder());
        mMediaStream.setDgree(getDgree());
        mMediaStream.createCamera();
        mMediaStream.startPreview();
    }

    private void onMediaStreamDestroy() {
        // The stream may never have been created if the camera surface was not ready.
        if (mMediaStream != null) {
            mMediaStream.release();
            mMediaStream = null;
        }
    }

    @Override
    protected void onPause() {
        super.onPause();
        onMediaStreamDestroy();
        if (mVideoDecoderThread != null)
            mVideoDecoderThread.interrupt();
        if (mAudioDecoderThread != null)
            mAudioDecoderThread.interrupt();
    }

    @Override
    protected void onResume() {
        super.onResume();
        if (isSurfaceCreated && mMediaStream == null) {
            onMediaStreamCreate();
        }
    }

    private boolean isSurfaceCreated = false;

    @Override
    public void surfaceCreated(SurfaceHolder holder) {
        LogUtil.e("surfaceCreated: " + holder);
        if (holder.getSurface() == mSvRenderFromCamera.getHolder().getSurface()) {
            isSurfaceCreated = true;
            onMediaStreamCreate();
        } else if (holder.getSurface() == mSvRenderFromFile.getHolder().getSurface()) {
            if (new File(MEDIA_FILE_PATH).exists()) {
                startVideoDecoder();
                startAudioDecoder();
            }
        }
    }

    @Override
    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        LogUtil.e("surfaceChanged: "
                + "\nholder = " + holder
                + "\nformat = " + format
                + "\nwidth = " + width
                + "\nheight = " + height);
    }

    @Override
    public void surfaceDestroyed(SurfaceHolder holder) {
        LogUtil.e("surfaceDestroyed: ");
        if (holder.getSurface() == mSvRenderFromCamera.getHolder().getSurface()) {
            isSurfaceCreated = false;
        }
    }
}

2. Encoding camera data into H.264

final int millisPerframe = 1000 / 20;
long lastPush = 0;

@Override
public void run() {
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = 0;
    byte[] mPpsSps = new byte[0];
    byte[] h264 = new byte[mWidth * mHeight];
    do {
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, 10000); // fetch encoded data from the codec
        if (outputBufferIndex == MediaCodec.INFO_TRY_AGAIN_LATER) {
            // no output available yet
        } else if (outputBufferIndex == MediaCodec.INFO_OUTPUT_BUFFERS_CHANGED) {
            // not expected for an encoder
            outputBuffers = mMediaCodec.getOutputBuffers();
        } else if (outputBufferIndex == MediaCodec.INFO_OUTPUT_FORMAT_CHANGED) {
            synchronized (HWConsumer.this) {
                newFormat = mMediaCodec.getOutputFormat();
                EasyMuxer muxer = mMuxer;
                if (muxer != null) {
                    // should happen before receiving buffers, and should only happen once
                    muxer.addTrack(newFormat, true);
                }
            }
        } else if (outputBufferIndex < 0) {
            // let's ignore it
        } else {
            ByteBuffer outputBuffer;
            if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.LOLLIPOP) {
                outputBuffer = mMediaCodec.getOutputBuffer(outputBufferIndex);
            } else {
                outputBuffer = outputBuffers[outputBufferIndex];
            }
            outputBuffer.position(bufferInfo.offset);
            outputBuffer.limit(bufferInfo.offset + bufferInfo.size);
            EasyMuxer muxer = mMuxer;
            if (muxer != null) {
                muxer.pumpStream(outputBuffer, bufferInfo, true);
            }
            boolean sync = false;
            if ((bufferInfo.flags & MediaCodec.BUFFER_FLAG_CODEC_CONFIG) != 0) { // the codec emits SPS and PPS as a config buffer
                sync = (bufferInfo.flags & MediaCodec.BUFFER_FLAG_SYNC_FRAME) != 0; // does the buffer also carry a key frame?
                if (!sync) { // pure config buffer: it holds only the SPS and PPS, so cache it
                    byte[] temp = new byte[bufferInfo.size];
                    outputBuffer.get(temp);
                    mPpsSps = temp;
                    mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
                    continue; // wait for the next buffer
                } else {
                    mPpsSps = new byte[0];
                }
            }
            sync |= (bufferInfo.flags & MediaCodec.BUFFER_FLAG_SYNC_FRAME) != 0; // is this a key frame?
            int len = mPpsSps.length + bufferInfo.size;
            if (len > h264.length) {
                h264 = new byte[len];
            }
            if (sync) { // key frame: prepend the cached SPS and PPS
                System.arraycopy(mPpsSps, 0, h264, 0, mPpsSps.length);
                outputBuffer.get(h264, mPpsSps.length, bufferInfo.size);
                mPusher.push(h264, 0, mPpsSps.length + bufferInfo.size, bufferInfo.presentationTimeUs / 1000, 1);
                if (BuildConfig.DEBUG)
                    Log.i(TAG, String.format("push i video stamp:%d", bufferInfo.presentationTimeUs / 1000));
            } else { // non-key frame: push it as is
                outputBuffer.get(h264, 0, bufferInfo.size);
                mPusher.push(h264, 0, bufferInfo.size, bufferInfo.presentationTimeUs / 1000, 1);
                if (BuildConfig.DEBUG)
                    Log.i(TAG, String.format("push video stamp:%d", bufferInfo.presentationTimeUs / 1000));
            }
            mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
        }
    } while (mVideoStarted);
}

@Override
public int onVideo(byte[] data, int format) {
    if (!mVideoStarted) return 0;
    try {
        if (lastPush == 0) {
            lastPush = System.currentTimeMillis();
        }
        long time = System.currentTimeMillis() - lastPush;
        if (time >= 0) {
            time = millisPerframe - time; // time left in the current frame interval
            if (time > 0) Thread.sleep(time / 2);
        }
        // Convert the camera frame in place to the layout fed to the encoder.
        if (format == ImageFormat.YV12) {
            JNIUtil.yV12ToYUV420P(data, mWidth, mHeight);
        } else {
            JNIUtil.nV21To420SP(data, mWidth, mHeight);
        }
        int bufferIndex = mMediaCodec.dequeueInputBuffer(0);
        if (bufferIndex >= 0) {
            ByteBuffer buffer = null;
            if (android.os.Build.VERSION.SDK_INT >= android.os.Build.VERSION_CODES.LOLLIPOP) {
                buffer = mMediaCodec.getInputBuffer(bufferIndex);
            } else {
                buffer = inputBuffers[bufferIndex];
            }
            buffer.clear();
            buffer.put(data);
            buffer.clear();
            // The timestamp is in microseconds; the key-frame flag is kept from the original code.
            mMediaCodec.queueInputBuffer(bufferIndex, 0, data.length, System.nanoTime() / 1000, MediaCodec.BUFFER_FLAG_KEY_FRAME);
        }
        if (time > 0) Thread.sleep(time / 2); // sleep for the rest of the interval to hold the frame rate
        lastPush = System.currentTimeMillis();
    } catch (InterruptedException ex) {
        ex.printStackTrace();
    }
    return 0;
}

/**
 * Initializes and starts the encoder.
 */
private void startMediaCodec() throws IOException {
    /*
                       SD (Low quality)  SD (High quality)  HD 720p        HD 1080p
     Video resolution  320 x 240 px      720 x 480 px       1280 x 720 px  1920 x 1080 px
     Video frame rate  20 fps            30 fps             30 fps         30 fps
     Video bitrate     384 Kbps          2 Mbps             4 Mbps         10 Mbps
     */
    int framerate = 20;
    // if (width == 640 || height == 640) {
    //     bitrate = 2000000;
    // } else if (width == 1280 || height == 1280) {
    //     bitrate = 4000000;
    // } else {
    //     bitrate = 2 * width * height;
    // }
    int bitrate = (int) (mWidth * mHeight * 20 * 2 * 0.05f);
    // Scale the bitrate down for larger resolutions.
    if (mWidth >= 1920 || mHeight >= 1920) bitrate *= 0.3;
    else if (mWidth >= 1280 || mHeight >= 1280) bitrate *= 0.4;
    else if (mWidth >= 720 || mHeight >= 720) bitrate *= 0.6;
    EncoderDebugger debugger = EncoderDebugger.debug(mContext, mWidth, mHeight);
    mVideoConverter = debugger.getNV21Convertor();
    mMediaCodec = MediaCodec.createByCodecName(debugger.getEncoderName());
    MediaFormat mediaFormat = MediaFormat.createVideoFormat("video/avc", mWidth, mHeight);
    mediaFormat.setInteger(MediaFormat.KEY_BIT_RATE, bitrate);
    mediaFormat.setInteger(MediaFormat.KEY_FRAME_RATE, framerate);
    mediaFormat.setInteger(MediaFormat.KEY_COLOR_FORMAT, debugger.getEncoderColorFormat());
    mediaFormat.setInteger(MediaFormat.KEY_I_FRAME_INTERVAL, 1);
    mMediaCodec.configure(mediaFormat, null, null, MediaCodec.CONFIGURE_FLAG_ENCODE);
    mMediaCodec.start();
    // Ask the encoder to produce a key frame as soon as possible.
    Bundle params = new Bundle();
    params.putInt(MediaCodec.PARAMETER_KEY_REQUEST_SYNC_FRAME, 0);
    if (Build.VERSION.SDK_INT >= Build.VERSION_CODES.KITKAT) {
        mMediaCodec.setParameters(params);
    }
}

III. Summary

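For H.264, the MediaCodec encoder emits the SPS and PPS together in a single configuration buffer; if you need them individually, you have to split that buffer yourself.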

inputBuffers = mMediaCodec.getInputBuffers();
outputBuffers = mMediaCodec.getOutputBuffers();
int bufferIndex = mMediaCodec.dequeueInputBuffer(0);
if (bufferIndex >= 0) {
    inputBuffers[bufferIndex].clear();
    mConvertor.convert(data, inputBuffers[bufferIndex]);
    mMediaCodec.queueInputBuffer(bufferIndex, 0, inputBuffers[bufferIndex].position(), System.nanoTime() / 1000, 0);
    MediaCodec.BufferInfo bufferInfo = new MediaCodec.BufferInfo();
    int outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, 0);
    while (outputBufferIndex >= 0) {
        ByteBuffer outputBuffer = outputBuffers[outputBufferIndex];
        // Restrict the buffer to the valid region described by bufferInfo.
        outputBuffer.position(bufferInfo.offset);
        outputBuffer.limit(bufferInfo.offset + bufferInfo.size);
        // String data0 = String.format("%x %x %x %x %x %x %x %x %x %x ", outData[0], outData[1], outData[2], outData[3], outData[4], outData[5], outData[6], outData[7], outData[8], outData[9]);
        // Log.e("out_data", data0);
        // NAL unit type: the low five bits of the byte that follows the 4-byte start code
        int type = outputBuffer.get(bufferInfo.offset + 4) & 0x1F;
        // LogUtil.e(TAG, String.format("type is %d", type));
        if (type == 7 || type == 8) { // SPS (7) or PPS (8): record the configuration data
            byte[] outData = new byte[bufferInfo.size];
            outputBuffer.get(outData);
            mPpsSps = outData;
            ArrayList<Integer> posLists = new ArrayList<>(2);
            for (int i = 0; i < outData.length - 3; i++) { // find every 00 00 00 01 start code
                if (outData[i] == 0 && outData[i + 1] == 0 && outData[i + 2] == 0 && outData[i + 3] == 1) {
                    posLists.add(i);
                }
            }
            int sps_pos = posLists.get(0);
            int pps_pos = posLists.get(1);
            posLists.clear();
            posLists = null;
            // csd-0: the SPS, from its start code up to the start code of the PPS
            ByteBuffer csd0 = ByteBuffer.allocate(pps_pos - sps_pos);
            csd0.put(outData, sps_pos, pps_pos - sps_pos);
            csd0.clear();
            mCSD0 = csd0;
            LogUtil.e(TAG, "CSD-0 searched!!!");
            // csd-1: the PPS, from its start code to the end of the buffer
            ByteBuffer csd1 = ByteBuffer.allocate(outData.length - pps_pos);
            csd1.put(outData, pps_pos, outData.length - pps_pos);
            csd1.clear();
            mCSD1 = csd1;
            LogUtil.e(TAG, "CSD-1 searched!!!");
            LocalBroadcastManager.getInstance(mApplicationContext).sendBroadcast(new Intent(ACTION_H264_SPS_PPS_GOT));
        } else if (type == 5) {
            // IDR (key) frame: prepend the SPS and PPS
            System.arraycopy(mPpsSps, 0, h264, 0, mPpsSps.length);
            outputBuffer.get(h264, mPpsSps.length, bufferInfo.size);
            if (isPushing)
                mEasyPusher.push(h264, 0, mPpsSps.length + bufferInfo.size, System.currentTimeMillis(), 1);
            if (mEasyMuxer != null && !isRecordPause) {
                bufferInfo.presentationTimeUs = TimeStamp.getInstance().getCurrentTimeUS();
                mEasyMuxer.pumpStream(outputBuffer, bufferInfo, true); // save the video to a local file
                isWaitKeyFrame = false; // a key frame arrived, so stop waiting for one
                // LocalBroadcastManager.getInstance(mApplicationContext).sendBroadcast(new Intent(ACTION_I_KEY_FRAME_GOT));
            }
        } else {
            outputBuffer.get(h264, 0, bufferInfo.size);
            // Request a new key frame every 3 seconds.
            if (System.currentTimeMillis() - timeStamp >= 3000) {
                timeStamp = System.currentTimeMillis();
                if (Build.VERSION.SDK_INT >= 23) {
                    Bundle params = new Bundle();
                    params.putInt(MediaCodec.PARAMETER_KEY_REQUEST_SYNC_FRAME, 0);
                    mMediaCodec.setParameters(params);
                }
            }
            if (isPushing)
                mEasyPusher.push(h264, 0, bufferInfo.size, System.currentTimeMillis(), 1);
            if (mEasyMuxer != null && !isRecordPause && !isWaitKeyFrame) {
                bufferInfo.presentationTimeUs = TimeStamp.getInstance().getCurrentTimeUS();
                mEasyMuxer.pumpStream(outputBuffer, bufferInfo, true); // save the video to a local file
            }
        }
        mMediaCodec.releaseOutputBuffer(outputBufferIndex, false);
        outputBufferIndex = mMediaCodec.dequeueOutputBuffer(bufferInfo, 0);
    }
} else {
    Log.e(TAG, "No buffer available !");
}
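The frame-type check in the code above depends on reading the H.264 NAL unit type correctly. In the one-byte NAL header, the type occupies the low five bits, so the mask has to be 0x1F; a three-bit mask such as 0x07 happens to work for SPS (7) and IDR slices (5) but maps PPS (8) to 0. The sketch below isolates that check; `NalHeader` is a hypothetical helper name, and it assumes Annex-B data that begins with a 3-byte or 4-byte start code.

```java
/** Minimal H.264 NAL header helper (hypothetical name, not an Android API). */
final class NalHeader {
    static final int TYPE_NON_IDR_SLICE = 1;
    static final int TYPE_IDR_SLICE = 5;
    static final int TYPE_SPS = 7;
    static final int TYPE_PPS = 8;

    private NalHeader() {}

    /** nal_unit_type is stored in the low five bits of the NAL header byte. */
    static int nalUnitType(byte headerByte) {
        return headerByte & 0x1F;
    }

    /**
     * Returns the type of the first NAL unit in an Annex-B buffer,
     * or -1 if the buffer does not begin with a start code.
     */
    static int firstNalUnitType(byte[] annexB) {
        if (annexB.length > 4 && annexB[0] == 0 && annexB[1] == 0 && annexB[2] == 0 && annexB[3] == 1) {
            return nalUnitType(annexB[4]); // 4-byte start code 00 00 00 01
        }
        if (annexB.length > 3 && annexB[0] == 0 && annexB[1] == 0 && annexB[2] == 1) {
            return nalUnitType(annexB[3]); // 3-byte start code 00 00 01
        }
        return -1;
    }
}
```

Where the codec sets them reliably, the BUFFER_FLAG_CODEC_CONFIG and BUFFER_FLAG_KEY_FRAME flags in MediaCodec.BufferInfo give the same information without parsing the bitstream.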