动手学深度学习(tensorflow)---学习笔记整理(十、计算机视觉篇)

有关公式、基本理论等大量内容摘自《动手学深度学习》(TF2.0版))

这一部分主要是计算机视觉内容,之前说的cnn模型也是和视觉联系很大的~

通过cnn的学习,我们了解了图片的结构和图片分类等内容,计算机视觉还有两个非常重要的内容,一个是类似在图片内对目标进行检测(目标检测),另一个是生成图片(迁移学习)。

再说上述内容之前,先了解一下计算机视觉其他的基础的一些知识。

图像增广

常见的方法:翻转、裁剪和变换颜色

原图:

翻转

剪裁

变换颜色

亮度

色调

代码如下:

import tensorflow as tf

import numpy as np

print(tf.__version__)

from matplotlib import pyplot as plt

img = plt.imread('img/girl.jpg')

print(img.shape)

plt.imshow(img)

plt.show()

#绘图函数

def show_images(imgs, num_rows, num_cols, scale=2):

figsize = (num_cols * scale, num_rows * scale)

_, axes = plt.subplots(num_rows, num_cols, figsize=figsize)

for i in range(num_rows):

for j in range(num_cols):

axes[i][j].imshow(imgs[i * num_cols + j])

axes[i][j].axes.get_xaxis().set_visible(False)

axes[i][j].axes.get_yaxis().set_visible(False)

plt.show()

return axes

#运行图像增广方法aug并展示所有的结果,默认2*4个

def apply(img, aug, num_rows=2, num_cols=4, scale=1.5):

Y = [aug(img) for _ in range(num_rows * num_cols)]

show_images(Y, num_rows, num_cols, scale)

#左右翻转

apply(img, tf.image.random_flip_left_right)

#上下翻转

apply(img, tf.image.random_flip_up_down)

#随机剪裁10%-100%,像素缩放到600*600

aug=tf.image.random_crop

num_rows=2

num_cols=4

scale=1.5

#图像尺寸

crop_size=600

Y = [aug(img, (crop_size, crop_size, 3)) for _ in range(num_rows * num_cols)]

show_images(Y, num_rows, num_cols, scale)

#变换颜色亮度,50%-150%

#tf.image.random_brightness函数实现

aug=tf.image.random_brightness

num_rows=2

num_cols=4

scale=1.5

max_delta=0.5

Y = [aug(img, max_delta) for _ in range(num_rows * num_cols)]

show_images(Y, num_rows, num_cols, scale)

#变换颜色色调

#tf.image.random_hue实现

aug=tf.image.random_hue

num_rows=2

num_cols=4

scale=1.5

max_delta=0.5

Y = [aug(img, max_delta) for _ in range(num_rows * num_cols)]

show_images(Y, num_rows, num_cols, scale)

#可以从4个方面改变图像的颜色:亮度、对比度、饱和度和色调,还有两个没介绍使用图像增广训练模型

(代码有点点小问题,后续修改)

import tensorflow as tf

import numpy as np

print(tf.__version__)

from matplotlib import pyplot as plt

#绘图函数

def show_images(imgs, num_rows, num_cols, scale=2):

figsize = (num_cols * scale, num_rows * scale)

_, axes = plt.subplots(num_rows, num_cols, figsize=figsize)

for i in range(num_rows):

for j in range(num_cols):

axes[i][j].imshow(imgs[i * num_cols + j])

axes[i][j].axes.get_xaxis().set_visible(False)

axes[i][j].axes.get_yaxis().set_visible(False)

plt.show()

return axes

#获取数据集合

(x, y), (test_x, test_y) = tf.keras.datasets.cifar10.load_data()

print(x.shape, test_x.shape)

#绘制前8个

show_images(x[0:8][0], 2, 4, scale=0.8)

#定义残差神经网络

from tensorflow.keras import layers,activations

class Residual(tf.keras.Model):

def __init__(self, num_channels, use_1x1conv=False, strides=1, **kwargs):

super(Residual, self).__init__(**kwargs)

self.conv1 = layers.Conv2D(num_channels,

padding='same',

kernel_size=3,

strides=strides)

self.conv2 = layers.Conv2D(num_channels, kernel_size=3,padding='same')

if use_1x1conv:

self.conv3 = layers.Conv2D(num_channels,

kernel_size=1,

strides=strides)

else:

self.conv3 = None

self.bn1 = layers.BatchNormalization()

self.bn2 = layers.BatchNormalization()

def call(self, X):

Y = activations.relu(self.bn1(self.conv1(X)))

Y = self.bn2(self.conv2(Y))

if self.conv3:

X = self.conv3(X)

return activations.relu(Y + X)

class ResnetBlock(tf.keras.layers.Layer):

def __init__(self,num_channels, num_residuals, first_block=False,**kwargs):

super(ResnetBlock, self).__init__(**kwargs)

self.listLayers=[]

for i in range(num_residuals):

if i == 0 and not first_block:

self.listLayers.append(Residual(num_channels, use_1x1conv=True, strides=2))

else:

self.listLayers.append(Residual(num_channels))

def call(self, X):

for layer in self.listLayers.layers:

X = layer(X)

return X

class ResNet(tf.keras.Model):

def __init__(self,num_blocks,**kwargs):

super(ResNet, self).__init__(**kwargs)

self.conv=tf.keras.layers.Conv2D(64, kernel_size=7, strides=2, padding='same')

self.bn=tf.keras.layers.BatchNormalization()

self.relu=tf.keras.layers.Activation('relu')

self.mp=tf.keras.layers.MaxPool2D(pool_size=3, strides=2, padding='same')

self.resnet_block1=ResnetBlock(64,num_blocks[0], first_block=True)

self.resnet_block2=ResnetBlock(128,num_blocks[1])

self.resnet_block3=ResnetBlock(256,num_blocks[2])

self.resnet_block4=ResnetBlock(512,num_blocks[3])

self.gap=tf.keras.layers.GlobalAvgPool2D()

self.fc=tf.keras.layers.Dense(units=10,activation=tf.keras.activations.softmax)

def call(self, x):

x=self.conv(x)

x=self.bn(x)

x=self.relu(x)

x=self.mp(x)

x=self.resnet_block1(x)

x=self.resnet_block2(x)

x=self.resnet_block3(x)

x=self.resnet_block4(x)

x=self.gap(x)

x=self.fc(x)

return x

net = ResNet([2,2,2,2])

#使用随机左右翻转的图像增广来训练模型

print(type(x))

x = np.array([tf.image.random_flip_left_right(i) for i in x])

print(type(x))

net.compile(loss='sparse_categorical_crossentropy',

optimizer=tf.keras.optimizers.Adam(),

metrics=['accuracy'])

history = net.fit(x, y,

batch_size=64,

epochs=2,

validation_split=0.2)

test_scores = net.evaluate(test_x, test_y, verbose=2)

微调

说白了就是加载别人已经训练好的,然后进行修改。原理上来说,就是别人训练好的模型可以分20个类别,你仅需要预测其中的几个类别,但是数据集不太一样,但是别人的模型已经具备识别能力了,在自己的模型上进行训练(微调即可)。

热狗识别微调代码:

import tensorflow as tf

import numpy as np

import os

import zipfile

import wget

#使用的热狗数据集是从网上抓取的,它含有1400张包含热狗的正类图像,和同样多包含其他食品的负类图像。

#各类的1000张图像被用于训练,其余则用于测试。其实就是热狗类和其他类

#我们首先将压缩后的数据集下载到路径../data之下,然后在该路径将下载好的数据集解压,得到两个文件夹hotdog/train和hotdog/test。

# 这两个文件夹下面均有hotdog和not-hotdog两个类别文件夹,每个类别文件夹里面是图像文件

def download_data():

data = os.getcwd()+'/data'

#下载压缩文件

if not os.path.exists(data+'/hotdog.zip'):

base_url = 'https://apache-mxnet.s3-accelerate.amazonaws.com/'

wget.download(

base_url + 'gluon/dataset/hotdog.zip',

data)

print("已存在")

#解压文件

with zipfile.ZipFile(data+'/hotdog.zip', 'r') as z:

z.extractall(os.getcwd())

download_data()

import pathlib

#解压后的文件路径

train_dir = 'hotdog/train'

test_dir = 'hotdog/test'

#加载文件

train_dir = pathlib.Path(train_dir)

# train_count = len(list(train_dir.glob('*/*.jpg')))

test_dir = pathlib.Path(test_dir)

# test_count = len(list(test_dir.glob('*/*.jpg')))

#获取所有便签种类

CLASS_NAMES = np.array([item.name for item in train_dir.glob('*') if item.name != 'LICENSE.txt' and item.name[0] != '.'])

#总共两类

print(len(CLASS_NAMES))

print(CLASS_NAMES)

#ImageDataGenerator是tf用于图像增广的库,下属操作将训练集和测试集归一化且调整为224*224的图片

image_generator = tf.keras.preprocessing.image.ImageDataGenerator(rescale=1./255)

BATCH_SIZE = 32

IMG_HEIGHT = 224

IMG_WIDTH = 224

train_data_gen = image_generator.flow_from_directory(directory=str(train_dir),

batch_size=BATCH_SIZE,

target_size=(IMG_HEIGHT, IMG_WIDTH),

shuffle=True,

classes = list(CLASS_NAMES))

test_data_gen = image_generator.flow_from_directory(directory=str(test_dir),

batch_size=BATCH_SIZE,

target_size=(IMG_HEIGHT, IMG_WIDTH),

shuffle=True,

classes = list(CLASS_NAMES))

import matplotlib.pyplot as plt

def show_batch(image_batch, label_batch):

plt.figure(figsize=(10,10))

for n in range(15):

ax = plt.subplot(5,5,n+1)

plt.imshow(image_batch[n])

plt.title(CLASS_NAMES[label_batch[n]==1][0].title())

plt.axis('off')

plt.show()

#train_data_gen是image_generator结构,如果要提取图片需要用next函数,返回一下

image_batch, label_batch = next(train_data_gen)

#32*224*224*3

print(image_batch.shape)

show_batch(image_batch, label_batch)

#使用在ImageNet数据集上预训练的ResNet-50作为源模型。

# 这里指定weights='imagenet'来自动下载并加载预训练的模型参数。

#一个神经网络包括两部分,一部分是features,另一部分是classifier,后者是全连接层

#仅仅加载features

ResNet50 = tf.keras.applications.resnet_v2.ResNet50V2(weights='imagenet', input_shape=(224,224,3))

#也可以只是用结构

#ResNet50 = tf.keras.applications.resnet_v2.ResNet50V2(input_shape=(224,224,3))

for layer in ResNet50.layers:

layer.trainable = False

net = tf.keras.models.Sequential()

net.add(ResNet50)

net.add(tf.keras.layers.Flatten())

net.add(tf.keras.layers.Dense(2, activation='softmax'))

net.compile(optimizer='adam',

loss='categorical_crossentropy',

metrics=['accuracy'])

history = net.fit_generator(

train_data_gen,

steps_per_epoch=10,

epochs=3,

validation_data=test_data_gen,

validation_steps=10

)

没有初始化参数的模型收敛速度更慢。微调的模型因为参数初始值更好,往往在相同迭代周期下取得更高的精度。

小结:

- 迁移学习将从源数据集学到的知识迁移到目标数据集上。微调是迁移学习的一种常用技术。

- 目标模型复制了源模型上除了输出层外的所有模型设计及其参数,并基于目标数据集微调这些参数。而目标模型的输出层需要从头训练。

- 一般来说,微调参数会使用较小的学习率,而从头训练输出层可以使用较大的学习率。

目标检测和边界框

边界框

代码实现(只是人为的弄个框,并不是自动检测出来的):

import tensorflow as tf

import os

print(tf.__version__)

import matplotlib.pyplot as plt

img = plt.imread(os.getcwd()+'/img/girl2.jpg')

plt.imshow(img)

# bbox是bounding box的缩写

dog_bbox, cat_bbox = [20, 235, 300, 790], [520, 100, 750, 790]

#绘制函数

def bbox_to_rect(bbox, color):

# 将边界框(左上x, 左上y, 右下x, 右下y)格式转换成matplotlib格式:

# ((左上x, 左上y), 宽, 高)

return plt.Rectangle(

xy=(bbox[0], bbox[1]), width=bbox[2]-bbox[0], height=bbox[3]-bbox[1],

fill=False, edgecolor=color, linewidth=2)

#进行绘制

fig = plt.imshow(img)

fig.axes.add_patch(bbox_to_rect(dog_bbox, 'blue'))

fig.axes.add_patch(bbox_to_rect(cat_bbox, 'red'))

plt.show()

锚框

说白了就是以某个像素为中心可以生成特别多的边界框,这些边界框就叫做锚框。

代码如下:

import tensorflow as tf

from tensorflow import keras

import numpy as np

import matplotlib.pyplot as plt

import math

import os

img_raw = tf.io.read_file(os.getcwd()+'/img/girl2.jpg')

print(type(img_raw))

#转numpy

img = tf.image.decode_jpeg(img_raw).numpy()

print(type(img))

h, w = img.shape[0:2]

print(h, w)

#定义生成所有锚框的函数。对于整个输入图像,我们将一共生成 wh(n+m−1) 个锚框

def MultiBoxPrior(feature_map, sizes=[0.75, 0.5, 0.25], ratios=[1, 2, 0.5]):

"""

# 按照「9.4.1. 生成多个锚框」所讲的实现, anchor表示成(xmin, ymin, xmax, ymax).

https://zh.d2l.ai/chapter_computer-vision/anchor.html

Args:

feature_map: torch tensor, Shape: [N, C, H, W].

sizes: List of sizes (0~1) of generated MultiBoxPriores.

ratios: List of aspect ratios (non-negative) of generated MultiBoxPriores.

Returns:

anchors of shape (1, num_anchors, 4). 由于batch里每个都一样, 所以第一维为1

"""

pairs = [] # pair of (size, sqrt(ratio))

for r in ratios:

pairs.append([sizes[0], np.sqrt(r)])

for s in sizes[1:]:

pairs.append([s, np.sqrt(ratios[0])])

pairs = np.array(pairs)

ss1 = pairs[:, 0] * pairs[:, 1] # size * sqrt(ration)

ss2 = pairs[:, 0] / pairs[:, 1] # size / sqrt(retion)

base_anchors = tf.stack([-ss1, -ss2, ss1, ss2], axis=1) / 2

h, w = feature_map.shape[-2:]

shifts_x = tf.divide(tf.range(0, w), w)

shifts_y = tf.divide(tf.range(0, h), h)

shift_x, shift_y = tf.meshgrid(shifts_x, shifts_y)

shift_x = tf.reshape(shift_x, (-1,))

shift_y = tf.reshape(shift_y, (-1,))

shifts = tf.stack((shift_x, shift_y, shift_x, shift_y), axis=1)

anchors = tf.add(tf.reshape(shifts, (-1,1,4)), tf.reshape(base_anchors, (1,-1,4)))

return tf.cast(tf.reshape(anchors, (1,-1,4)), tf.float32)

x = tf.zeros((1,3,h,w))

y = MultiBoxPrior(x)

print(y.shape)

#3064000=800*766*5,5=len(sizes)+len(ratios)-1

#访问以(250,250)为中心的第一个锚框。

#它有4个元素,分别是锚框左上角的xx和yy轴坐标和右下角的xx和yy轴坐标,其中xx和yy轴的坐标值分别已除以图像的宽和高,因此值域均为0和1之间。

boxes = tf.reshape(y, (h,w,5,4))

print(boxes[250,250,0,:])

#描绘图像中以某个像素为中心的所有锚框的函数

def bbox_to_rect(bbox, color):

# 将边界框(左上x, 左上y, 右下x, 右下y)格式转换成matplotlib格式:

# ((左上x, 左上y), 宽, 高)

return plt.Rectangle(

xy=(bbox[0], bbox[1]), width=bbox[2]-bbox[0], height=bbox[3]-bbox[1],

fill=False, edgecolor=color, linewidth=2)

def show_bboxes(axes, bboxes, labels=None, colors=None):

def _make_list(obj, default_values=None):

if obj is None:

obj = default_values

elif not isinstance(obj, (list, tuple)):

obj = [obj]

return obj

labels = _make_list(labels)

colors = _make_list(colors, ['b', 'g', 'r', 'm', 'c'])

for i, bbox in enumerate(bboxes):

color = colors[i % len(colors)]

rect = bbox_to_rect(bbox.numpy(), color)

axes.add_patch(rect)

if labels and len(labels) > i:

text_color = 'k' if color == 'w' else 'w'

axes.text(rect.xy[0], rect.xy[1], labels[i],

va='center', ha='center', fontsize=6,

color=text_color, bbox=dict(facecolor=color, lw=0))

plt.show()

from IPython import display

def use_svg_display():

"""Use svg format to display plot in jupyter"""

display.set_matplotlib_formats('svg')

use_svg_display()

# 设置图的尺寸

plt.rcParams['figure.figsize'] = (3.5, 2.5)

fig = plt.imshow(img)

bbox_scale = tf.constant([[w,h,w,h]], dtype=tf.float32)

show_bboxes(fig.axes, tf.multiply(boxes[200,250,:,:], bbox_scale),

['s=0.75, r=1', 's=0.75, r=2', 's=0.55, r=0.5',

's=0.5, r=1', 's=0.25, r=1'])

交并比

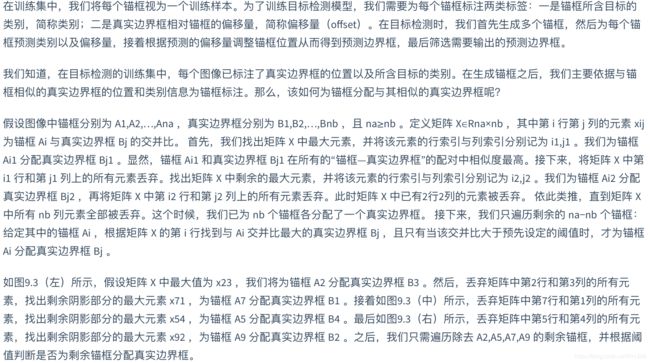

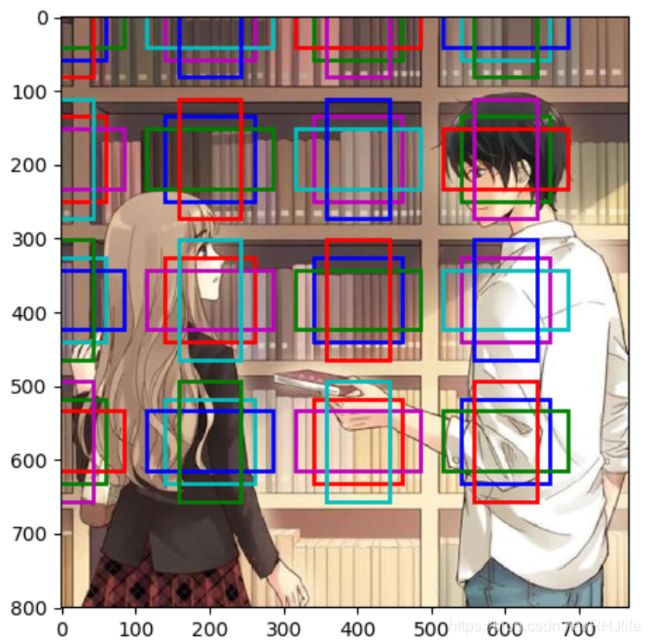

标注训练集的锚框

真实框:

生成框:

抑制后的框:

具体过程就不详细介绍了,直接看代码研究研究吧(其实我也不是很懂,大概光会用)

import tensorflow as tf

import matplotlib.pyplot as plt

import os

import numpy as np

#描绘图像中以某个像素为中心的所有锚框的函数

def bbox_to_rect(bbox, color):

# 将边界框(左上x, 左上y, 右下x, 右下y)格式转换成matplotlib格式:

# ((左上x, 左上y), 宽, 高)

return plt.Rectangle(

xy=(bbox[0], bbox[1]), width=bbox[2]-bbox[0], height=bbox[3]-bbox[1],

fill=False, edgecolor=color, linewidth=2)

def show_bboxes(axes, bboxes, labels=None, colors=None):

def _make_list(obj, default_values=None):

if obj is None:

obj = default_values

elif not isinstance(obj, (list, tuple)):

obj = [obj]

return obj

labels = _make_list(labels)

colors = _make_list(colors, ['b', 'g', 'r', 'm', 'c'])

for i, bbox in enumerate(bboxes):

color = colors[i % len(colors)]

rect = bbox_to_rect(bbox.numpy(), color)

axes.add_patch(rect)

if labels and len(labels) > i:

text_color = 'k' if color == 'w' else 'w'

axes.text(rect.xy[0], rect.xy[1], labels[i],

va='center', ha='center', fontsize=6,

color=text_color, bbox=dict(facecolor=color, lw=0))

plt.show()

set_1 = [[1,2,3,4],[5,6,7,8]]

set_2 = [[1,1,1,1],[2,2,2,2]]

lower_bounds = tf.maximum(tf.expand_dims(set_1, axis=1), tf.expand_dims(set_2, axis=0)) # (n1, n2, 2)

upper_bounds = tf.minimum(tf.expand_dims(set_1, axis=1), tf.expand_dims(set_2, axis=0)) # (n1, n2, 2)

print(tf.expand_dims(set_1, axis=1), tf.expand_dims(set_2, axis=0), lower_bounds, tf.multiply(set_1, set_2), tf.subtract(set_1, set_2))

#交并比

# 参考https://github.com/sgrvinod/a-PyTorch-Tutorial-to-Object-Detection/blob/master/utils.py#L356

def compute_intersection(set_1, set_2):

"""

计算anchor之间的交集

Args:

set_1: a tensor of dimensions (n1, 4), anchor表示成(xmin, ymin, xmax, ymax)

set_2: a tensor of dimensions (n2, 4), anchor表示成(xmin, ymin, xmax, ymax)

Returns:

intersection of each of the boxes in set 1 with respect to each of the boxes in set 2, shape: (n1, n2)

"""

# tensorflow auto-broadcasts singleton dimensions

lower_bounds = tf.maximum(tf.expand_dims(set_1[:,:2], axis=1), tf.expand_dims(set_2[:,:2], axis=0)) # (n1, n2, 2)

upper_bounds = tf.minimum(tf.expand_dims(set_1[:,2:], axis=1), tf.expand_dims(set_2[:,2:], axis=0)) # (n1, n2, 2)

# 设置最小值

intersection_dims = tf.clip_by_value(upper_bounds - lower_bounds, clip_value_min=0, clip_value_max=3) # (n1, n2, 2)

return tf.multiply(intersection_dims[:, :, 0], intersection_dims[:, :, 1]) # (n1, n2)

def compute_jaccard(set_1, set_2):

"""

计算anchor之间的Jaccard系数(IoU)

Args:

set_1: a tensor of dimensions (n1, 4), anchor表示成(xmin, ymin, xmax, ymax)

set_2: a tensor of dimensions (n2, 4), anchor表示成(xmin, ymin, xmax, ymax)

Returns:

Jaccard Overlap of each of the boxes in set 1 with respect to each of the boxes in set 2, shape: (n1, n2)

"""

# Find intersections

intersection = compute_intersection(set_1, set_2)

# Find areas of each box in both sets

areas_set_1 = tf.multiply(tf.subtract(set_1[:, 2], set_1[:, 0]), tf.subtract(set_1[:, 3], set_1[:, 1])) # (n1)

areas_set_2 = tf.multiply(tf.subtract(set_2[:, 2], set_2[:, 0]), tf.subtract(set_2[:, 3], set_2[:, 1])) # (n2)

# Find the union

union = tf.add(tf.expand_dims(areas_set_1, axis=1), tf.expand_dims(areas_set_2, axis=0)) # (n1, n2)

union = tf.subtract(union, intersection) # (n1, n2)

return tf.divide(intersection, union) #(n1, n2)

def MultiBoxPrior(feature_map, sizes=[0.75, 0.5, 0.25], ratios=[1, 2, 0.5]):

"""

# 按照「9.4.1. 生成多个锚框」所讲的实现, anchor表示成(xmin, ymin, xmax, ymax).

https://zh.d2l.ai/chapter_computer-vision/anchor.html

Args:

feature_map: torch tensor, Shape: [N, C, H, W].

sizes: List of sizes (0~1) of generated MultiBoxPriores.

ratios: List of aspect ratios (non-negative) of generated MultiBoxPriores.

Returns:

anchors of shape (1, num_anchors, 4). 由于batch里每个都一样, 所以第一维为1

"""

pairs = [] # pair of (size, sqrt(ratio))

for r in ratios:

pairs.append([sizes[0], np.sqrt(r)])

for s in sizes[1:]:

pairs.append([s, np.sqrt(ratios[0])])

pairs = np.array(pairs)

ss1 = pairs[:, 0] * pairs[:, 1] # size * sqrt(ration)

ss2 = pairs[:, 0] / pairs[:, 1] # size / sqrt(retion)

base_anchors = tf.stack([-ss1, -ss2, ss1, ss2], axis=1) / 2

h, w = feature_map.shape[-2:]

shifts_x = tf.divide(tf.range(0, w), w)

shifts_y = tf.divide(tf.range(0, h), h)

shift_x, shift_y = tf.meshgrid(shifts_x, shifts_y)

shift_x = tf.reshape(shift_x, (-1,))

shift_y = tf.reshape(shift_y, (-1,))

shifts = tf.stack((shift_x, shift_y, shift_x, shift_y), axis=1)

anchors = tf.add(tf.reshape(shifts, (-1,1,4)), tf.reshape(base_anchors, (1,-1,4)))

return tf.cast(tf.reshape(anchors, (1,-1,4)), tf.float32)

img_raw = tf.io.read_file(os.getcwd()+'/img/girl2.jpg')

img = tf.image.decode_jpeg(img_raw).numpy()

h, w = img.shape[0:2]

x = tf.zeros((1,3,h,w))

y = MultiBoxPrior(x)

print(y.shape)

boxes = tf.reshape(y, (h,w,5,4))

print(tf.expand_dims(boxes[200,250,:,:][:, :2], axis=1), tf.expand_dims(boxes[210,260,1:2,:][:, :2], axis=0)

)

print(tf.maximum(tf.expand_dims(boxes[200,250,:,:][:, :2], axis=1), tf.expand_dims(boxes[210,260,1:2,:][:, :2], axis=0))

)

#使用交并比来衡量锚框与真实边界框以及锚框与锚框之间的相似度

bbox_scale = tf.constant([[w,h,w,h]], dtype=tf.float32)

#真实框

ground_truth = tf.constant([[0, 0, 0.3, 0.39, 0.99],

[1, 0.57, 0.15, 0.99, 1]])

#生成的锚框

anchors = tf.constant([[0, 0.1, 0.2, 0.3],

[0.15, 0.2, 0.4, 0.4],

[0.63, 0.05, 0.88, 0.98],

[0.66, 0.45, 0.8, 0.8],

[0.57, 0.3, 0.92, 0.9]])

fig = plt.imshow(img)

show_bboxes(fig.axes, tf.multiply(ground_truth[:, 1:], bbox_scale),

['girl', 'boy'], 'k')

show_bboxes(fig.axes, tf.multiply(anchors, bbox_scale),

['0', '1', '2', '3', '4'])

def assign_anchor(bb, anchor, jaccard_threshold=0.5):

"""

# 按照「9.4.1. 生成多个锚框」图9.3所讲为每个anchor分配真实的bb, anchor表示成归一化(xmin, ymin, xmax, ymax).

https://zh.d2l.ai/chapter_computer-vision/anchor.html

Args:

bb: 真实边界框(bounding box), shape:(nb, 4)

anchor: 待分配的anchor, shape:(na, 4)

jaccard_threshold: 预先设定的阈值

Returns:

assigned_idx: shape: (na, ), 每个anchor分配的真实bb对应的索引, 若未分配任何bb则为-1

"""

na = anchor.shape[0]

nb = bb.shape[0]

jaccard = compute_jaccard(anchor, bb).numpy() # shape: (na, nb)

assigned_idx = np.ones(na) * -1 # 初始全为-1

# 先为每个bb分配一个anchor(不要求满足jaccard_threshold)

jaccard_cp = jaccard.copy()

for j in range(nb):

i = np.argmax(jaccard_cp[:, j])

assigned_idx[i] = j

jaccard_cp[i, :] = float("-inf") # 赋值为负无穷, 相当于去掉这一行

# 处理还未被分配的anchor, 要求满足jaccard_threshold

for i in range(na):

if assigned_idx[i] == -1:

j = np.argmax(jaccard[i, :])

if jaccard[i, j] >= jaccard_threshold:

assigned_idx[i] = j

return tf.cast(assigned_idx, tf.int32)

def xy_to_cxcy(xy):

"""

将(x_min, y_min, x_max, y_max)形式的anchor转换成(center_x, center_y, w, h)形式的.

https://github.com/sgrvinod/a-PyTorch-Tutorial-to-Object-Detection/blob/master/utils.py

Args:

xy: bounding boxes in boundary coordinates, a tensor of size (n_boxes, 4)

Returns:

bounding boxes in center-size coordinates, a tensor of size (n_boxes, 4)

"""

return tf.concat(((xy[:, 2:] + xy[:, :2]) / 2, #c_x, c_y

xy[:, 2:] - xy[:, :2]), axis=1)

def MultiBoxTarget(anchor, label):

"""

# 按照「9.4.1. 生成多个锚框」所讲的实现, anchor表示成归一化(xmin, ymin, xmax, ymax).

https://zh.d2l.ai/chapter_computer-vision/anchor.html

Args:

anchor: torch tensor, 输入的锚框, 一般是通过MultiBoxPrior生成, shape:(1,锚框总数,4)

label: 真实标签, shape为(bn, 每张图片最多的真实锚框数, 5)

第二维中,如果给定图片没有这么多锚框, 可以先用-1填充空白, 最后一维中的元素为[类别标签, 四个坐标值]

Returns:

列表, [bbox_offset, bbox_mask, cls_labels]

bbox_offset: 每个锚框的标注偏移量,形状为(bn,锚框总数*4)

bbox_mask: 形状同bbox_offset, 每个锚框的掩码, 一一对应上面的偏移量, 负类锚框(背景)对应的掩码均为0, 正类锚框的掩码均为1

cls_labels: 每个锚框的标注类别, 其中0表示为背景, 形状为(bn,锚框总数)

"""

assert len(anchor.shape) == 3 and len(label.shape) == 3

bn = label.shape[0]

def MultiBoxTarget_one(anchor, label, eps=1e-6):

"""

MultiBoxTarget函数的辅助函数, 处理batch中的一个

Args:

anchor: shape of (锚框总数, 4)

label: shape of (真实锚框数, 5), 5代表[类别标签, 四个坐标值]

eps: 一个极小值, 防止log0

Returns:

offset: (锚框总数*4, )

bbox_mask: (锚框总数*4, ), 0代表背景, 1代表非背景

cls_labels: (锚框总数, 4), 0代表背景

"""

an = anchor.shape[0]

assigned_idx = assign_anchor(label[:, 1:], anchor) ## (锚框总数, )

# 决定anchor留下或者舍去

bbox_mask = tf.repeat(tf.expand_dims(tf.cast((assigned_idx >= 0), dtype=tf.double), axis=-1), repeats=4, axis=1)

cls_labels = np.zeros(an, dtype=int) # 0表示背景

assigned_bb = np.zeros((an, 4), dtype=float) # 所有anchor对应的bb坐标

for i in range(an):

bb_idx = assigned_idx[i]

if bb_idx >= 0: # 即非背景

cls_labels[i] = label.numpy()[bb_idx, 0] + 1 # 要注意加1

assigned_bb[i, :] = label.numpy()[bb_idx, 1:]

center_anchor = tf.cast(xy_to_cxcy(anchor), dtype=tf.double) # (center_x, center_y, w, h)

center_assigned_bb = tf.cast(xy_to_cxcy(assigned_bb), dtype=tf.double) # (center_x, center_y, w, h)

offset_xy = 10.0 * (center_assigned_bb[:,:2] - center_anchor[:,:2]) / center_anchor[:,2:]

offset_wh = 5.0 * tf.math.log(eps + center_assigned_bb[:, 2:] / center_anchor[:, 2:])

offset = tf.multiply(tf.concat((offset_xy, offset_wh), axis=1), bbox_mask) # (锚框总数, 4)

return tf.reshape(offset, (-1,)), tf.reshape(bbox_mask, (-1,)), cls_labels

batch_offset = []

batch_mask = []

batch_cls_labels = []

for b in range(bn):

offset, bbox_mask, cls_labels = MultiBoxTarget_one(anchor[0, :, :], label[b,:,:])

batch_offset.append(offset)

batch_mask.append(bbox_mask)

batch_cls_labels.append(cls_labels)

batch_offset = tf.convert_to_tensor(batch_offset)

batch_mask = tf.convert_to_tensor(batch_mask)

batch_cls_labels = tf.convert_to_tensor(batch_cls_labels)

return [batch_offset, batch_mask, batch_cls_labels]

labels = MultiBoxTarget(tf.expand_dims(anchors, axis=0),tf.expand_dims(ground_truth, axis=0))

print(labels[2],labels[1],labels[0])

anchors = tf.convert_to_tensor([[0.1, 0.28, 0.38, 0.99],

[0.08, 0.2, 0.56, 0.95],

[0.15, 0.3, 0.62, 0.91],

[0.7, 0.2, 0.98, 0.96]])

offset_preds = tf.convert_to_tensor([0.0] * (4 * len(anchors)))

cls_probs = tf.convert_to_tensor([[0., 0., 0., 0.], # 背景的预测概率

[0.9, 0.6, 0.4, 0.1], # 女孩的预测概率

[0.1, 0.2, 0.3, 0.9]]) # 男孩的预测概率

print(anchors, offset_preds, cls_probs)

fig = plt.imshow(img)

show_bboxes(fig.axes, anchors * bbox_scale,

['girl=0.9', 'girl=0.6', 'girl=0.4', 'boy=0.9'])

#非极大值抑制

from collections import namedtuple

Pred_BB_Info = namedtuple("Pred_BB_Info",

["index", "class_id", "confidence", "xyxy"])

def non_max_suppression(bb_info_list, nms_threshold=0.5):

"""

非极大抑制处理预测的边界框

Args:

bb_info_list: Pred_BB_Info的列表, 包含预测类别、置信度等信息

nms_threshold: 阈值

Returns:

output: Pred_BB_Info的列表, 只保留过滤后的边界框信息

"""

output = []

# 现根据置信度从高到底排序

sorted_bb_info_list = sorted(bb_info_list,

key = lambda x: x.confidence,

reverse=True)

while len(sorted_bb_info_list) != 0:

best = sorted_bb_info_list.pop(0)

output.append(best)

if len(sorted_bb_info_list) == 0:

break

bb_xyxy = []

for bb in sorted_bb_info_list:

bb_xyxy.append(bb.xyxy)

iou = compute_jaccard(tf.convert_to_tensor(best.xyxy),

tf.squeeze(tf.convert_to_tensor(bb_xyxy), axis=1))[0] # shape: (len(sorted_bb_info_list), )

n = len(sorted_bb_info_list)

sorted_bb_info_list = [

sorted_bb_info_list[i] for i in

range(n) if iou[i] <= nms_threshold]

return output

def MultiBoxDetection(cls_prob, loc_pred, anchor, nms_threshold=0.5):

"""

# 按照「9.4.1. 生成多个锚框」所讲的实现, anchor表示成归一化(xmin, ymin, xmax, ymax).

https://zh.d2l.ai/chapter_computer-vision/anchor.html

Args:

cls_prob: 经过softmax后得到的各个锚框的预测概率, shape:(bn, 预测总类别数+1, 锚框个数)

loc_pred: 预测的各个锚框的偏移量, shape:(bn, 锚框个数*4)

anchor: MultiBoxPrior输出的默认锚框, shape: (1, 锚框个数, 4)

nms_threshold: 非极大抑制中的阈值

Returns:

所有锚框的信息, shape: (bn, 锚框个数, 6)

每个锚框信息由[class_id, confidence, xmin, ymin, xmax, ymax]表示

class_id=-1 表示背景或在非极大值抑制中被移除了

"""

assert len(cls_prob.shape) == 3 and len(loc_pred.shape) == 2 and len(anchor.shape) == 3

bn = cls_prob.shape[0]

def MultiBoxDetection_one(c_p, l_p, anc, nms_threshold=0.5):

"""

MultiBoxDetection的辅助函数, 处理batch中的一个

Args:

c_p: (预测总类别数+1, 锚框个数)

l_p: (锚框个数*4, )

anc: (锚框个数, 4)

nms_threshold: 非极大抑制中的阈值

Return:

output: (锚框个数, 6)

"""

pred_bb_num = c_p.shape[1]

# 加上偏移量

anc = tf.add(anc, tf.reshape(l_p, (pred_bb_num, 4))).numpy()

# 最大的概率

confidence = tf.reduce_max(c_p, axis=0)

# 最大概率对应的id

class_id = tf.argmax(c_p, axis=0)

confidence = confidence.numpy()

class_id = class_id.numpy()

pred_bb_info = [Pred_BB_Info(index=i,

class_id=class_id[i]-1,

confidence=confidence[i],

xyxy=[anc[i]]) # xyxy是个列表

for i in range(pred_bb_num)]

# 正类的index

obj_bb_idx = [bb.index for bb

in non_max_suppression(pred_bb_info,

nms_threshold)]

output = []

for bb in pred_bb_info:

output.append(np.append([

(bb.class_id if bb.index in obj_bb_idx

else -1.0),

bb.confidence],

bb.xyxy))

return tf.convert_to_tensor(output) # shape: (锚框个数, 6)

batch_output = []

for b in range(bn):

batch_output.append(MultiBoxDetection_one(cls_prob[b],

loc_pred[b], anchor[0],

nms_threshold))

return tf.convert_to_tensor(batch_output)

output = MultiBoxDetection(

tf.expand_dims(cls_probs, 0),

tf.expand_dims(offset_preds, 0),

tf.expand_dims(anchors, 0),

nms_threshold=0.5)

print(output)

fig = plt.imshow(img)

list_label=[]

list_anchors=[]

for i in output[0].numpy():

if i[0] == -1:

continue

if i[1]<0.5:

continue

label = ('girl=', 'boy=')[int(i[0])] + str(i[1])

list_label.append(label)

print(i,label)

list_anchors.append((i[2:]))

#show_bboxes(fig.axes, tf.multiply(i[2:], bbox_scale), label)

show_bboxes(fig.axes, list_anchors * bbox_scale,

list_label)

多尺度目标检测

将特征图的高和宽分别减半,并用更大的锚框检测更大的目标。当锚框大小设0.4时,有些锚框的区域有重合。

将特征图的高和宽进一步减半至1,并将锚框大小增至0.8。此时锚框中心即图像中心。

小结:

- 可以在多个尺度下生成不同数量和不同大小的锚框,从而在多个尺度下检测不同大小的目标。

- 特征图的形状能确定任一图像上均匀采样的锚框中心。

- 用输入图像在某个感受野区域内的信息来预测输入图像上与该区域相近的锚框的类别和偏移量。

其他内容:tf版本尚未更新,后续更新