Modeling the 2019-nCoV Outbreak (I): Data Scraping and Heat Map Drawing


- 引言

- 数据抓取

- 前期准备

- 抓取数据预览

- 抓取数据与简单处理

- 数据制表与地图绘制

- 制作国际数据集

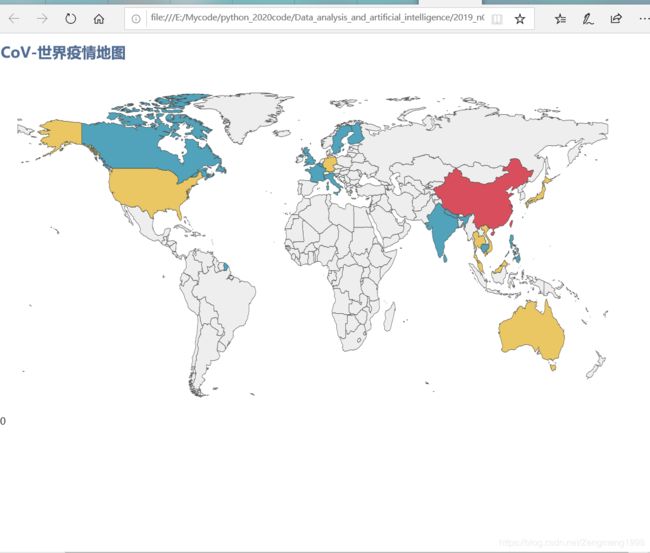

- 利用国际数据集(global_data)绘制全球热图

- 制作国内数据集

- 利用china_data绘制热图

- 用于后续建模的数据集的制作

- 手痒细化的热图

- 成果汇总

- 总结:

- 参考链接

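Introduction

With the epidemic situation so serious lately, I have been staying at home with little to do. While browsing CSDN out of boredom, I noticed that some experienced users were scraping data on the pneumonia outbreak in Wuhan and drawing maps from it. I could not resist following their example to see whether I could obtain data that would be useful for modeling. This post is the result; corrections are welcome.

Data scraping

The data comes from Tencent's live epidemic updates.

I am only a beginner at web scraping, so rather than describe the process at length and embarrass myself, I will let the code speak for itself.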

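Preparation

Besides the usual third-party libraries for machine learning, the program needs some file-reading libraries and third-party libraries for generating the epidemic maps, such as:

Map drawing library: pyecharts

(Map data packages):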

echarts-china-cities-pypkg 0.0.9

echarts-china-counties-pypkg 0.0.2

echarts-china-misc-pypkg 0.0.1

echarts-china-provinces-pypkg 0.0.3

echarts-countries-pypkg

File-reading libraries:

requests

xlrd

zipp

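When installing the libraries, I ran into downloads that failed because the connection was too slow for the larger packages. My solution was to download the third-party libraries from a mirror inside China, such as the Douban or Tsinghua University mirrors.

For example, to install numpy:

pip install -i http://pypi.douban.com/simple --trusted-host pypi.douban.com numpy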

Previewing the scraped data

First, let's simply fetch the data and look at the structure of what comes back.

This snippet was run in IDLE; everything after it was run in PyCharm.

>>> import time, json, requests
>>> url = 'https://view.inews.qq.com/g2/getOnsInfo?name=wuwei_ww_area_counts&callback=&_=%d'%int(time.time()*1000)
>>> data = json.loads(requests.get(url=url).json()['data'])
>>> print(type(data))
<class 'dict'>
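Knowing only that the result is a dict is not much to go on. A minimal sketch of one way to look a level or two deeper is shown here; the helper name `describe` and the sample dict are hypothetical stand-ins, not the actual response from the Tencent endpoint.

```python
def describe(obj, depth=0, max_depth=2):
    """Return lines summarising the keys and value types of nested data."""
    lines = []
    indent = "  " * depth
    if isinstance(obj, dict):
        for key, value in obj.items():
            lines.append(f"{indent}{key}: {type(value).__name__}")
            if depth < max_depth:
                lines.extend(describe(value, depth + 1, max_depth))
    elif isinstance(obj, list) and obj:
        # Only look at the first element; list items usually share one shape
        lines.append(f"{indent}[0]: {type(obj[0]).__name__} (of {len(obj)})")
        if depth < max_depth:
            lines.extend(describe(obj[0], depth + 1, max_depth))
    return lines


# Hypothetical sample that only imitates the shape of nested JSON data
sample = {
    "lastUpdateTime": "2020-02-03 10:00:00",
    "chinaTotal": {"confirm": 1, "dead": 0},
    "areaTree": [{"name": "A", "children": []}],
}
print("\n".join(describe(sample)))
```

Calling `describe(data)` on the real dict would list its top-level keys and the types beneath them, which is usually enough to decide which fields to pull out.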

Scraping and basic processing

Import conventions

import pandas as pd

from pyecharts.charts import Map

from pyecharts import options as opts

import time, json, requests

from pyecharts.globals import ThemeType

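The data scraping and processing functions

# Data scraping function
def catch_data():
    url = 'https://view.inews.qq.com/g2/getOnsInfo?name=disease_h5'
    response = requests.get(url=url).json()
    # The 'data' field is a JSON string; decode it and return the data dict
    data = json.loads(response['data'])
    return data

data = catch_data()
print(data.keys())

# The data comes from Tencent, which sources it from the National Health Commission.
# Question 1: can the data be made more fine-grained? (The current features are already sufficient.)
# The dataset contains: domestic totals, domestic new cases, last update time,
# detailed breakdown, daily data, daily new cases
lastUpdateTime = data['lastUpdateTime']
chinaTotal = data['chinaTotal']
chinaAdd = data['chinaAdd']
print(chinaTotal)
print(chinaAdd)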
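The response is decoded twice: `.json()` parses the outer envelope, and `json.loads` then parses the `data` field, which is itself a JSON string. A minimal sketch of that second step as a separate function, so it can be checked without network access; the name `parse_payload` and the sample envelope (including its `ret` field) are hypothetical, and the error handling is an addition rather than part of the original script.

```python
import json


def parse_payload(envelope):
    """Decode the inner JSON string stored under envelope['data']."""
    if "data" not in envelope:
        raise KeyError("response has no 'data' field")
    inner = envelope["data"]
    # The field normally holds a JSON string; pass through if already decoded
    return json.loads(inner) if isinstance(inner, str) else inner


# Hypothetical envelope imitating the outer layer of the response
envelope = {
    "ret": 0,
    "data": json.dumps({"lastUpdateTime": "2020-02-03", "chinaTotal": {"confirm": 1}}),
}
payload = parse_payload(envelope)
print(sorted(payload.keys()))
```

With live data this could be called as `parse_payload(requests.get(url, timeout=10).json())`; the timeout value is an arbitrary choice.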

# Data processing functions: x is the dict stored in a 'total' or 'today' field
def confirm(x):
    return x['confirm']

def suspect(x):
    return x['suspect']

def dead(x):
    return x['dead']

def heal(x):
    return x['heal']
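The four helpers differ only in the key they read, so they can be folded into one. A sketch of that idea, assuming each record carries the `total` and `today` fields used in this post; `flatten_counts` is a hypothetical name, and missing keys default to 0 here, which the original code does not do.

```python
FIELDS = ("confirm", "suspect", "dead", "heal")


def flatten_counts(record):
    """Flatten a record's 'total' and 'today' dicts into one flat dict."""
    flat = {}
    for field in FIELDS:
        flat[field] = record.get("total", {}).get(field, 0)
        # Daily increases get the same 'add' prefix as the columns in this post
        flat["add" + field] = record.get("today", {}).get(field, 0)
    return flat


# Hypothetical record with made-up numbers
record = {
    "name": "X",
    "total": {"confirm": 5, "suspect": 0, "dead": 1, "heal": 2},
    "today": {"confirm": 3},
}
print(flatten_counts(record))
```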

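Building tables and drawing maps

To draw the epidemic maps and continue with modeling later, scraping the data is not enough; it also needs to be split and joined into tables.

Building the international dataset

# Build the international dataset file. Question 2: where does the Chinese-English country name table come from? (Self-made.)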

global_data = pd.DataFrame(data['areaTree'])

global_data['confirm'] = global_data['total'].map(confirm)

global_data['suspect'] = global_data['total'].map(suspect)

global_data['dead'] = global_data['total'].map(dead)

global_data['heal'] = global_data['total'].map(heal)

global_data['addconfirm'] = global_data['today'].map(confirm)

global_data['addsuspect'] = global_data['today'].map(suspect)

global_data['adddead'] = global_data['today'].map(dead)

global_data['addheal'] = global_data['today'].map(heal)

world_name = pd.read_excel("世界各国中英文对照.xlsx")

global_data = pd.merge(global_data,world_name,left_on ="name",right_on = "中文",how="inner")

global_data = global_data[["name_y","中文","confirm","suspect","dead","heal","addconfirm","addsuspect","adddead","addheal"]]

#print(global_data.head())

global_data=pd.DataFrame(global_data)

#print(global_data.head())

# Write the CSV dataset

global_data.to_csv("global_data_2020_02_03.csv")

Drawing the global heat map from the international dataset (global_data)

# Draw the world epidemic map from global_data
world_map = Map(init_opts=opts.InitOpts(theme=ThemeType.WESTEROS))
world_map.add("", [list(z) for z in zip(list(global_data["name_y"]), list(global_data["confirm"]))], "world", is_map_symbol_show=False)
world_map.set_global_opts(
    title_opts=opts.TitleOpts(title="2019_nCoV-世界疫情地图"),
    visualmap_opts=opts.VisualMapOpts(
        is_piecewise=True,
        pieces=[
            {"min": 101, "label": '>100'},  # no max given, so the range has no upper bound
            {"min": 10, "max": 100, "label": '10-100'},
            {"min": 0, "max": 9, "label": '0-9'},
        ],
    ),
)
world_map.set_series_opts(label_opts=opts.LabelOpts(is_show=False))
world_map.render('20200203世界疫情地图.html')
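With `is_piecewise=True`, each value is meant to fall into one of the listed pieces. A small sketch that mimics such a lookup in plain Python can help check that the pieces leave no gaps; `find_piece` is a hypothetical helper and not part of pyecharts, and treating both bounds as inclusive is an assumption.

```python
def find_piece(value, pieces):
    """Return the label of the first piece containing value, else None."""
    for piece in pieces:
        low = piece.get("min", float("-inf"))
        high = piece.get("max", float("inf"))  # a missing max means unbounded
        if low <= value <= high:
            return piece["label"]
    return None


# The same pieces as the world map in this section
pieces = [
    {"min": 101, "label": ">100"},
    {"min": 10, "max": 100, "label": "10-100"},
    {"min": 0, "max": 9, "label": "0-9"},
]

# Integer counts from 0 to 200 should all land in some piece
uncovered = [v for v in range(0, 201) if find_piece(v, pieces) is None]
print(uncovered)  # [] means no integer in the range is left uncovered
```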

Building the domestic dataset

# Build the domestic dataset
# Step 1: the detail data has a fairly complex structure, so print it out
# step by step and understand the structure first
areaTree = data['areaTree']
china_data = areaTree[0]['children']
china_list = []
for a in range(len(china_data)):
    province = china_data[a]['name']
    province_list = china_data[a]['children']
    for b in range(len(province_list)):
        city = province_list[b]['name']
        total = province_list[b]['total']
        today = province_list[b]['today']
        china_dict = {}
        china_dict['province'] = province
        china_dict['city'] = city
        china_dict['total'] = total
        china_dict['today'] = today
        china_list.append(china_dict)

china_data = pd.DataFrame(china_list)
print(china_data.head())

# Map the helper functions over the 'total' and 'today' columns

china_data['confirm'] = china_data['total'].map(confirm)

china_data['suspect'] = china_data['total'].map(suspect)

china_data['dead'] = china_data['total'].map(dead)

china_data['heal'] = china_data['total'].map(heal)

china_data['addconfirm'] = china_data['today'].map(confirm)

china_data['addsuspect'] = china_data['today'].map(suspect)

china_data['adddead'] = china_data['today'].map(dead)

china_data['addheal'] = china_data['today'].map(heal)

china_data = china_data[["province","city","confirm","suspect","dead","heal","addconfirm","addsuspect","adddead","addheal"]]

print(china_data.head())

# Save as a CSV file

china_data.to_csv("china_data_2020_02_03.csv",encoding='utf_8_sig')
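The nested loop walks a tree of country, province, and city records. A sketch of the same traversal as a generator that iterates over the items directly instead of over indices; `iter_city_rows` and the sample tree are hypothetical, and the sample numbers are made up.

```python
def iter_city_rows(area_tree):
    """Yield one flat dict per city under the first country in the tree."""
    for province in area_tree[0]["children"]:
        for city in province.get("children", []):
            yield {
                "province": province["name"],
                "city": city["name"],
                "total": city["total"],
                "today": city["today"],
            }


# Hypothetical tree with the same nesting as data['areaTree']
sample_tree = [{
    "name": "Country",
    "children": [
        {"name": "P1", "children": [
            {"name": "C1", "total": {"confirm": 2}, "today": {"confirm": 1}},
            {"name": "C2", "total": {"confirm": 4}, "today": {"confirm": 0}},
        ]},
        {"name": "P2", "children": [
            {"name": "C3", "total": {"confirm": 7}, "today": {"confirm": 3}},
        ]},
    ],
}]
rows = list(iter_city_rows(sample_tree))
print(len(rows), rows[0]["province"], rows[-1]["city"])  # 3 P1 C3
```

The resulting list can be passed to `pd.DataFrame(rows)` in the same way as `china_list`.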

Drawing the heat map from china_data

# Draw the China epidemic map from china_data
area_data = china_data.groupby("province")["confirm"].sum().reset_index()
area_data.columns = ["province", "confirm"]
area_map = Map(init_opts=opts.InitOpts(theme=ThemeType.WESTEROS))
area_map.add("", [list(z) for z in zip(list(area_data["province"]), list(area_data["confirm"]))], "china", is_map_symbol_show=False)
area_map.set_global_opts(
    title_opts=opts.TitleOpts(title="2019_nCoV中国疫情地图"),
    visualmap_opts=opts.VisualMapOpts(
        is_piecewise=True,
        pieces=[
            {"min": 1001, "label": '>1000', "color": "#893448"},  # no max given, so the range has no upper bound
            {"min": 500, "max": 1000, "label": '500-1000', "color": "#ff585e"},
            {"min": 101, "max": 499, "label": '101-499', "color": "#fb8146"},
            {"min": 10, "max": 100, "label": '10-100', "color": "#ffb248"},
            {"min": 0, "max": 9, "label": '0-9', "color": "#fff2d1"},
        ],
    ),
)
area_map.render('20200203中国疫情地图.html')

Building the datasets for later modeling

# Daily dataset file

chinaDayList = pd.DataFrame(data['chinaDayList'])

chinaDayList = chinaDayList[['date','confirm','suspect','dead','heal']]

chinaDayList.head()

chinaDayList.to_csv("china_DailyList_2020_02_03.csv",encoding='utf_8_sig')

# Daily increase dataset

chinaDayAddList = pd.DataFrame(data['chinaDayAddList'])

chinaDayAddList = chinaDayAddList[['date','confirm','suspect','dead','heal']]

chinaDayAddList.to_csv("chinaDayAddList.csv",encoding='utf_8_sig')
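One simple check before modeling is whether a daily-increase series agrees with the differences of the cumulative series. A minimal sketch of computing those differences, assuming the cumulative values are listed in date order; `daily_increase` is a hypothetical helper and the numbers are made up.

```python
def daily_increase(cumulative):
    """Return day-over-day differences of a cumulative series."""
    values = [int(v) for v in cumulative]  # tolerate numbers stored as strings
    return [later - earlier for earlier, later in zip(values, values[1:])]


# Made-up cumulative counts for five consecutive days
cumulative_confirm = ["10", "15", "27", "40", "58"]
increase = daily_increase(cumulative_confirm)
print(increase)  # [5, 12, 13, 18]
```

The result has one element fewer than the input, because the first day has no previous day to compare against.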

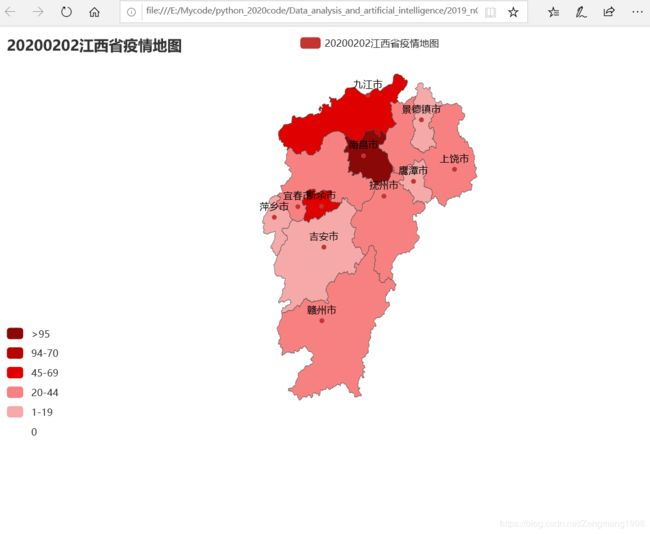

Finer-grained heat maps, just for fun

Using the datasets at hand and several official WeChat accounts for the Yichun and Hangzhou areas, I refined the maps so that I could follow the situation in the places I care about:

# Use figures published by official WeChat accounts to draw more detailed epidemic maps
# Jiangxi Province epidemic map
province_distribution = {'南昌市': 103, '九江市': 64, '新余市': 50,
                         '赣州市': 35, '宜春市': 36, '抚州市': 29,
                         '上饶市': 32, '萍乡市': 18, '吉安市': 13,
                         '鹰潭市': 8, '景德镇市': 3}
map = Map()
map.set_global_opts(
    title_opts=opts.TitleOpts(title="20200202江西省疫情地图"),
    visualmap_opts=opts.VisualMapOpts(
        max_=391, is_piecewise=True,
        pieces=[
            {"max": 120, "min": 95, "label": ">95", "color": "#8A0808"},
            {"max": 94, "min": 70, "label": "70-94", "color": "#B40404"},
            {"max": 69, "min": 45, "label": "45-69", "color": "#DF0101"},
            {"max": 44, "min": 20, "label": "20-44", "color": "#F78181"},
            {"max": 19, "min": 1, "label": "1-19", "color": "#F5A9A9"},
            {"max": 0, "min": 0, "label": "0", "color": "#FFFFFF"},
        ],
    ),  # piecewise ranges
)
map.add("20200202江西省疫情地图", data_pair=province_distribution.items(), maptype="江西", is_roam=True)
map.render('20200202江西省疫情地图.html')

# City-level epidemic map (Yichun)
city_distribution = {'靖安县': 0, '奉新县': 0, '铜鼓县': 2,
                     '宜丰县': 3, '高安市': 2, '万载县': 0,
                     '上高县': 0, '丰城市': 25, '樟树市': 1,
                     '袁州区': 3}
# maptype='china' shows only provinces and municipalities
map = Map()
map.set_global_opts(
    title_opts=opts.TitleOpts(title="20200202宜春市疫情地图"),
    visualmap_opts=opts.VisualMapOpts(
        max_=34, is_piecewise=True,
        pieces=[
            {"max": 31, "min": 20, "label": ">20", "color": "#8A0808"},
            {"max": 19, "min": 10, "label": "10-19", "color": "#B40404"},
            {"max": 9, "min": 5, "label": "5-9", "color": "#DF0101"},
            {"max": 4, "min": 2, "label": "2-4", "color": "#F78181"},
            {"max": 1, "min": 1, "label": "1", "color": "#F5A9A9"},
            {"max": 0, "min": 0, "label": "0", "color": "#FFFFFF"},
        ],
    ),  # maximum data range and piecewise ranges
)
map.add("20200202宜春市疫情地图", data_pair=city_distribution.items(), maptype="宜春", is_roam=True)
map.render('20200202宜春市疫情地图.html')

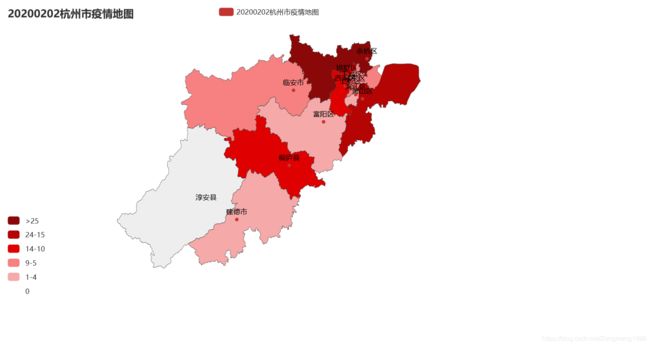

# Hangzhou epidemic map
city_distribution = {'余杭区': 28, '萧山区': 16, '桐庐县': 14,
                     '西湖区': 12, '拱墅区': 10, '江干区': 9,
                     '上城区': 8, '下城区': 5, '临安市': 5,
                     '滨江区': 4, '富阳区': 4, '钱塘新区': 2,
                     '建德市': 1}
# maptype='china' shows only provinces and municipalities
map = Map()
map.set_global_opts(
    title_opts=opts.TitleOpts(title="20200202杭州市疫情地图"),
    visualmap_opts=opts.VisualMapOpts(
        max_=118, is_piecewise=True,
        pieces=[
            {"max": 35, "min": 25, "label": ">25", "color": "#8A0808"},
            {"max": 24, "min": 15, "label": "15-24", "color": "#B40404"},
            {"max": 14, "min": 10, "label": "10-14", "color": "#DF0101"},
            {"max": 9, "min": 5, "label": "5-9", "color": "#F78181"},
            {"max": 4, "min": 1, "label": "1-4", "color": "#F5A9A9"},
            {"max": 0, "min": 0, "label": "0", "color": "#FFFFFF"},
        ],
    ),  # maximum data range and piecewise ranges
)
map.add("20200202杭州市疫情地图", data_pair=city_distribution.items(), maptype="杭州", is_roam=True)
map.render('20200202杭州市疫情地图.html')
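The three regional maps repeat the same pattern of hand-written pieces. A sketch of a helper that builds such a list from lower bounds and colors; `make_pieces` is hypothetical, and it assumes integer counts so that each piece can end one below the next lower bound.

```python
def make_pieces(lower_bounds, colors, top):
    """Build piecewise ranges, highest first, from ascending lower bounds."""
    if len(lower_bounds) != len(colors):
        raise ValueError("need one color per lower bound")
    pieces = []
    # Each range ends just below the next lower bound; the last ends at top
    uppers = [b - 1 for b in lower_bounds[1:]] + [top]
    for low, high, color in zip(lower_bounds, uppers, colors):
        label = str(low) if low == high else f"{low}-{high}"
        pieces.append({"min": low, "max": high, "label": label, "color": color})
    return pieces[::-1]


# Lower bounds and colors taken from the Yichun map in this section
pieces = make_pieces(
    [0, 1, 2, 5, 10, 20],
    ["#FFFFFF", "#F5A9A9", "#F78181", "#DF0101", "#B40404", "#8A0808"],
    top=31,
)
for piece in pieces:
    print(piece)
```

The returned list could be passed as the `pieces` argument in place of the hand-written one; the only visible difference is that the top label reads "20-31" rather than ">20".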

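The resulting epidemic maps:

1. Jiangxi Province:

2. Yichun:

Fengcheng, where I live, has become the "little Wuhan" of the Yichun area. Staying at home for the next few days looks like the safer choice.

3. Hangzhou:

When drawing the Hangzhou map, the region names in the map data package loaded by Python did not match the regions in the figures published by Hangzhou's official WeChat account.

As a result, the data for Chun'an County could not be shown on the map.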
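A mismatch like this can be detected before rendering by comparing the names in the data with the names the map knows. A minimal sketch under stated assumptions: `map_names` is a hypothetical, hand-written set, since how to read the region names from the installed map package is not covered here, and `unmatched_names` is a hypothetical helper.

```python
def unmatched_names(data, map_names):
    """Return data keys that have no region of the same name on the map."""
    return sorted(name for name in data if name not in map_names)


# Hypothetical example: two regions in the data, one of them unknown to the map
data = {"RegionA": 3, "RegionB": 5}
map_names = {"RegionA", "RegionC"}
print(unmatched_names(data, map_names))  # ['RegionB']
```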

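Results

Summary:

I used PyCharm for this work, but I noticed that several experienced authors use Jupyter Notebook for data visualization. After this exercise, my personal impression is that PyCharm is slightly weaker than Jupyter Notebook for displaying visualizations but more comfortable for writing code, so in the end I chose PyCharm as my data analysis tool. This is only my own experience; which tool to use is up to each reader's preference.

Finally, I hope the epidemic ends soon. Stay strong, China! Stay strong, Wuhan!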

Reference links

https://blog.csdn.net/weixin_43130164/article/details/104113559

https://blog.csdn.net/xufive/article/details/104093197