Writing Structured Streaming output to MySQL and Kafka

Structured Streaming arrived with Spark 2.x. In one sentence: it is a higher-level API than Spark Streaming.

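For example, in a real-time word count, each batch can be counted on its own. But what if you want the totals over all the batches received so far? In Spark Streaming you have to manage that cross-batch state yourself, for example by writing an update function for updateStateByKey. Structured Streaming handles it for you.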
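To make the difference concrete, here is a plain-Scala illustration with no Spark involved. The two batches are made-up sample data, and the names RunningCountDemo, batchCounts and merge are hypothetical. The per-batch maps are what a stateless per-batch word count gives you. The running maps are the kind of totals a Structured Streaming groupBy().count() query keeps across triggers.

```scala
object RunningCountDemo {
  // Word counts for a single batch of lines (what a stateless per-batch count produces)
  def batchCounts(batch: Seq[String]): Map[String, Long] =
    batch.flatMap(_.split(" ")).filter(_.nonEmpty).groupBy(identity).map { case (w, ws) => w -> ws.size.toLong }

  // Fold one batch's counts into the running state carried over from earlier batches
  def merge(state: Map[String, Long], batch: Map[String, Long]): Map[String, Long] =
    batch.foldLeft(state) { case (acc, (w, n)) => acc.updated(w, acc.getOrElse(w, 0L) + n) }

  def main(args: Array[String]): Unit = {
    val batches = Seq(Seq("a b a"), Seq("b c")) // two hypothetical micro-batches
    val perBatch = batches.map(batchCounts)
    // scanLeft keeps every intermediate state; drop the initial empty map
    val running = perBatch.scanLeft(Map.empty[String, Long])(merge).tail
    println(s"per batch: $perBatch")
    println(s"running:   $running")
  }
}
```

Structured Streaming keeps this state for you (in its state store, with checkpointing), so the query code only declares the aggregation.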

Once the counts are computed, how do we output and store them? Structured Streaming currently offers five kinds of sink:

1. File sink: writes the output to files.

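2. Foreach sink: runs arbitrary computation on each output record, for example saving it to MySQL. Spark has no built-in sink for external databases. It only provides the Foreach sink so you can write that logic yourself, and the official documentation does not include a complete example.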

3. Console sink: prints the output to the console; for testing only.

4. Memory sink: stores the output as an in-memory table that Spark can query; for testing only.

5. Kafka sink: a built-in Kafka sink was added in Spark 2.2, so you can use it directly. If your version is older than 2.2, you have to fall back to option 2, the Foreach sink.
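As a quick sketch of one of the test-only sinks, the following writes a streaming word count to the memory sink and then queries the resulting table with SQL. It assumes Spark 2.2 or later on the classpath. The temporary input directory, its file contents and the table name wordcounts are made up for illustration. A file source stands in for the socket source used later in this article, so the example runs without nc.

```scala
import java.nio.file.Files

import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, explode, split}

object MemorySinkDemo {
  // Streams the text files in inputDir through a word count into the memory sink,
  // then returns the in-memory table's contents as a Map
  def countWords(spark: SparkSession, inputDir: String): Map[String, Long] = {
    val counts = spark.readStream
      .text(inputDir) // fixed schema: a single string column named "value"
      .select(explode(split(col("value"), " ")).as("word"))
      .groupBy("word")
      .count()

    val query = counts.writeStream
      .format("memory")
      .queryName("wordcounts") // the in-memory table is registered under this name
      .outputMode("complete")  // the memory sink supports append and complete
      .start()

    try {
      query.processAllAvailable() // block until the files already present are processed
      spark.sql("SELECT word, `count` FROM wordcounts")
        .collect()
        .map(r => r.getString(0) -> r.getLong(1))
        .toMap
    } finally {
      query.stop()
    }
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[2]").appName("memory-sink-demo").getOrCreate()
    val dir = Files.createTempDirectory("wc-input") // hypothetical input directory
    Files.write(dir.resolve("part-0.txt"), "a b a\nc a\n".getBytes("UTF-8"))
    println(countWords(spark, dir.toString))
    spark.stop()
  }
}
```

Swapping format("memory") for format("console") prints each trigger's result instead; neither sink is meant for production.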

This article draws on the following Databricks blog post: https://databricks.com/blog/2017/04/04/real-time-end-to-end-integration-with-apache-kafka-in-apache-sparks-structured-streaming.html

The key step is to define a class JDBCSink that extends ForeachWriter and overrides its three methods: open, process and close.

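```scala
import java.sql.{Connection, DriverManager, PreparedStatement}

import org.apache.spark.sql.{ForeachWriter, Row}

class JDBCSink(url: String, userName: String, password: String) extends ForeachWriter[Row] {

  var connection: Connection = _
  var statement: PreparedStatement = _

  // Called on the executor once per partition and batch; returning true means "process this partition"
  override def open(partitionId: Long, version: Long): Boolean = {
    // Driver class of MySQL Connector/J 5.x (Connector/J 8.x uses com.mysql.cj.jdbc.Driver)
    Class.forName("com.mysql.jdbc.Driver")
    connection = DriverManager.getConnection(url, userName, password)
    // A parameterized statement avoids quoting problems and SQL injection
    statement = connection.prepareStatement("insert into webCount(word, count) values (?, ?)")
    true
  }

  // Called once for every row of the partition
  override def process(value: Row): Unit = {
    val word = value.getAs[String]("word")
    // count() produces a LongType column, so read it as Long
    val count = value.getAs[Long]("count")
    statement.setString(1, word)
    statement.setLong(2, count)
    statement.executeUpdate()
  }

  // Called when the partition is finished (errorOrNull is non-null if processing failed)
  override def close(errorOrNull: Throwable): Unit = {
    if (statement != null) statement.close()
    if (connection != null) connection.close()
  }
}
```

Now we can use our custom JDBCSink: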

import org.apache.spark.sql.SparkSession

import org.apache.spark.sql.streaming.{ProcessingTime, Trigger}

object KafkaStructedStreaming {

def main(args: Array[String]): Unit = {

val sparkSession = SparkSession.builder().master("local[2]").appName("streaming").getOrCreate()

val df = sparkSession

.readStream

.format("socket")

.option("host", "hadoop102")

.option("port", "9999")

.load()

import sparkSession.implicits._

val lines = df.selectExpr("CAST(value as STRING)").as[String]

val weblog = lines.as[String].flatMap(_.split(" "))

val wordCount = weblog.groupBy("value").count().toDF("word", "count")

val url ="jdbc:mysql://hadoop102:3306/test"

val username="root"

val password="000000"

val writer = new JDBCSink(url, username, password)

val query = wordCount.writeStream

.foreach(writer)

.outputMode("update")

.trigger(ProcessingTime("10 seconds"))

.start()

query.awaitTermination()

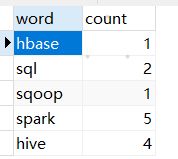

}用nc向端口9999传递一些数据后,查询mysql,发现已经保存上去。
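One thing to watch: in update mode each trigger re-emits the new total for every word whose count changed. The plain INSERT above therefore adds another row for that word each time. If you only want the latest count per word in MySQL, one option is an upsert.

The sketch below is a hypothetical variant, not part of the original approach. It assumes a UNIQUE or PRIMARY KEY on webCount.word and uses MySQL's INSERT ... ON DUPLICATE KEY UPDATE. The class name JDBCUpsertSink is made up. It also batches the rows of a partition and commits them once in close.

```scala
import java.sql.{Connection, DriverManager, PreparedStatement}

import org.apache.spark.sql.{ForeachWriter, Row}

// Hypothetical variant of JDBCSink that keeps one row per word
class JDBCUpsertSink(url: String, userName: String, password: String) extends ForeachWriter[Row] {

  // Assumes webCount.word is a UNIQUE or PRIMARY KEY column.
  // VALUES(col) is deprecated since MySQL 8.0.20 in favor of row aliases but still accepted.
  val upsertSql: String =
    "INSERT INTO webCount(word, count) VALUES (?, ?) ON DUPLICATE KEY UPDATE count = VALUES(count)"

  var connection: Connection = _
  var statement: PreparedStatement = _

  override def open(partitionId: Long, version: Long): Boolean = {
    Class.forName("com.mysql.jdbc.Driver") // Connector/J 5.x driver class, as in JDBCSink
    connection = DriverManager.getConnection(url, userName, password)
    connection.setAutoCommit(false) // commit the whole partition at once in close()
    statement = connection.prepareStatement(upsertSql)
    true
  }

  override def process(value: Row): Unit = {
    statement.setString(1, value.getAs[String]("word"))
    statement.setLong(2, value.getAs[Long]("count"))
    statement.addBatch() // buffer instead of one round trip per record
  }

  override def close(errorOrNull: Throwable): Unit = {
    try {
      if (errorOrNull == null && statement != null) {
        statement.executeBatch()
        connection.commit()
      } else if (connection != null) {
        connection.rollback() // discard this partition's rows if processing failed
      }
    } finally {
      if (statement != null) statement.close()
      if (connection != null) connection.close()
    }
  }
}
```

It is used exactly like JDBCSink, via .foreach(new JDBCUpsertSink(url, username, password)).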

In the same way, we can write the data to Kafka: define a class KafkaSink that extends ForeachWriter.

```scala
import java.util.Properties

import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}
import org.apache.spark.sql.{ForeachWriter, Row}

class KafkaSink(topic: String, servers: String) extends ForeachWriter[Row] {

  val kafkaProperties = new Properties()
  kafkaProperties.put("bootstrap.servers", servers)
  // The Databricks example uses the shaded name "kafkashaded.org.apache.kafka...";
  // with the plain kafka-clients jar the serializer lives under org.apache.kafka
  kafkaProperties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer")
  kafkaProperties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer")

  var producer: KafkaProducer[String, String] = _

  override def open(partitionId: Long, version: Long): Boolean = {
    producer = new KafkaProducer[String, String](kafkaProperties)
    true
  }

  override def process(value: Row): Unit = {
    val word = value.getAs[String]("word")
    // count is a Long column; convert it to a String for the StringSerializer
    val count = value.getAs[Long]("count").toString
    producer.send(new ProducerRecord[String, String](topic, word, count))
  }

  override def close(errorOrNull: Throwable): Unit = {
    // close() waits for buffered records to be sent
    if (producer != null) producer.close()
  }
}
```

Then use the custom KafkaSink exactly the way JDBCSink was used above.
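Concretely, the wiring might look like the sketch below. It replaces the writeStream part of KafkaStructedStreaming above. It assumes the wordCount DataFrame and the KafkaSink class from this article. The helper name startKafkaSinkQuery, the broker address hadoop102:9092 and the topic name wordcount are hypothetical placeholders.

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.streaming.{StreamingQuery, Trigger}

object KafkaSinkUsage {
  // Starts a query that sends every updated (word, count) row to Kafka through the custom KafkaSink
  def startKafkaSinkQuery(wordCount: DataFrame, topic: String, servers: String): StreamingQuery =
    wordCount.writeStream
      .foreach(new KafkaSink(topic, servers))
      .outputMode("update")
      .trigger(Trigger.ProcessingTime("10 seconds"))
      .start()
}
```

Inside main this becomes KafkaSinkUsage.startKafkaSinkQuery(wordCount, "wordcount", "hadoop102:9092").awaitTermination().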

Since Spark 2.2, writing to Kafka is much simpler and needs no custom class like the one above:

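```scala
wordCount
  .selectExpr("CAST(word AS STRING) AS key", "CAST(count AS STRING) AS value") // the sink needs a "value" column
  .writeStream
  .format("kafka")
  .option("kafka.bootstrap.servers", "host1:port1,host2:port2")
  .option("topic", "wordcount")
  .option("checkpointLocation", "/tmp/checkpoint/wordcount") // the Kafka sink needs one; placeholder path
  .outputMode("update") // an aggregation without a watermark cannot use the default append mode
  .start()
```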
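To check what arrived in the topic, you can read it back with Spark's Kafka source as a batch query. This is a sketch under several assumptions. The spark-sql-kafka-0-10 package matching your Spark version must be on the classpath; the built-in Kafka sink above needs it as well. The broker list is the same placeholder, and the object and method names are hypothetical. Because the query runs in update mode, the topic holds one record per word per update, and the latest record for a word carries its current total.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}

object ReadBackWordCount {
  // Batch-read every record currently in the topic and decode key/value as strings
  def readTopic(spark: SparkSession, servers: String, topic: String): DataFrame =
    spark.read
      .format("kafka")
      .option("kafka.bootstrap.servers", servers)
      .option("subscribe", topic)
      .option("startingOffsets", "earliest")
      .option("endingOffsets", "latest")
      .load()
      .selectExpr("CAST(key AS STRING) AS word", "CAST(value AS STRING) AS count")

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder().master("local[2]").appName("read-back").getOrCreate()
    // Placeholder broker list, as in the writeStream example
    readTopic(spark, "host1:port1,host2:port2", "wordcount").show(false)
    spark.stop()
  }
}
```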