A Guide to MNIST: Parsing the Raw Data and Implementing Fully Connected Networks with NumPy

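Contents

- Background

- Downloading the data

- Data encoding format

- Parsing the data

- Single-layer fully connected network

- Three-layer fully connected network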

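The last two sections of this article, the single-layer fully connected network and the three-layer fully connected network, are mainly code and do not include detailed derivations. For the derivations, see the following two documents; reading them alongside the code makes it easier to follow:

Derivatives of Softmax and Cross-Entropy Loss

Derivation of Fully Connected Neural Networks: Forward and Backward Passes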

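Background

MNIST, usually referred to as the handwritten digits dataset and probably pronounced /'em nist/, is the "hello world" of the CNN world.

The need to recognize handwritten digits came from the US Postal Service around the 1980s. Reading ZIP codes on envelopes by hand is tiring, error-prone, low-skill work, which created the demand for recognition by computer.

The 1998 paper by LeCun et al., Gradient-based learning applied to document recognition, a foundational work for CNNs, was based on MNIST.

With today's deep learning methods MNIST is easy to solve almost perfectly. But because it is simple, small, and easy to get started with, beginners still like to play with it, and people like to test the waters with MNIST when learning a new framework, just as they print "hello world" on the screen when learning a new programming language.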

Downloading the data

The download address is:

http://yann.lecun.com/exdb/mnist/

There are 4 files:

train-images-idx3-ubyte.gz: training set images (9912422 bytes)

train-labels-idx1-ubyte.gz: training set labels (28881 bytes)

t10k-images-idx3-ubyte.gz: test set images (1648877 bytes)

t10k-labels-idx1-ubyte.gz: test set labels (4542 bytes)

The training set has 60000 examples and the test set has 10000 examples. All digits are centered in the image and size-normalized.

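Data encoding format

Today's frameworks already provide interfaces for loading MNIST, which is to be expected for such a well-known and simple benchmark dataset. Still, reading the data as binary and parsing it byte by byte gives a clearer picture of the data. Decompress the downloaded .gz files first.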
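The decompression step can also be done from Python. The following is a minimal sketch using the standard `gzip` and `shutil` modules; the helper name `gunzip_file` and the directory `./MNIST_DATA` are assumptions made for illustration.

```python
import gzip
import os
import shutil


def gunzip_file(gz_path, out_path=None):
    """Decompress a .gz file and return the path of the output file."""
    if out_path is None:
        # strip the trailing '.gz' to get the output name
        out_path = gz_path[:-3] if gz_path.endswith('.gz') else gz_path + '.out'
    with gzip.open(gz_path, 'rb') as f_in, open(out_path, 'wb') as f_out:
        shutil.copyfileobj(f_in, f_out)
    return out_path


if __name__ == '__main__':
    # hypothetical directory; adjust to wherever the .gz files were saved
    data_dir = './MNIST_DATA'
    names = ('train-images-idx3-ubyte.gz', 'train-labels-idx1-ubyte.gz',
             't10k-images-idx3-ubyte.gz', 't10k-labels-idx1-ubyte.gz')
    for name in names:
        path = os.path.join(data_dir, name)
        if os.path.exists(path):
            print(gunzip_file(path))
```

This writes files named like train-images-idx3-ubyte. The scripts later in this article open names such as train-images.idx3-ubyte, so either rename the outputs or adjust the paths in the scripts.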

The download page also gives the encoding rules for the data:

TRAINING SET LABEL FILE (train-labels-idx1-ubyte):

    [offset] [type]          [value]          [description]
    0000     32 bit integer  0x00000801(2049) magic number (MSB first)
    0004     32 bit integer  60000            number of items
    0008     unsigned byte   ??               label
    0009     unsigned byte   ??               label
    ........
    xxxx     unsigned byte   ??               label

The labels values are 0 to 9.

TRAINING SET IMAGE FILE (train-images-idx3-ubyte):

    [offset] [type]          [value]          [description]
    0000     32 bit integer  0x00000803(2051) magic number
    0004     32 bit integer  60000            number of images
    0008     32 bit integer  28               number of rows
    0012     32 bit integer  28               number of columns
    0016     unsigned byte   ??               pixel
    0017     unsigned byte   ??               pixel
    ........
    xxxx     unsigned byte   ??               pixel

Pixels are organized row-wise. Pixel values are 0 to 255. 0 means background (white), 255 means foreground (black).

TEST SET LABEL FILE (t10k-labels-idx1-ubyte):

    [offset] [type]          [value]          [description]
    0000     32 bit integer  0x00000801(2049) magic number (MSB first)
    0004     32 bit integer  10000            number of items
    0008     unsigned byte   ??               label
    0009     unsigned byte   ??               label
    ........
    xxxx     unsigned byte   ??               label

The labels values are 0 to 9.

TEST SET IMAGE FILE (t10k-images-idx3-ubyte):

    [offset] [type]          [value]          [description]
    0000     32 bit integer  0x00000803(2051) magic number
    0004     32 bit integer  10000            number of images
    0008     32 bit integer  28               number of rows
    0012     32 bit integer  28               number of columns
    0016     unsigned byte   ??               pixel
    0017     unsigned byte   ??               pixel
    ........
    xxxx     unsigned byte   ??               pixel

Pixels are organized row-wise. Pixel values are 0 to 255. 0 means background (white), 255 means foreground (black).

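The rules are explained below using the training set:

In the TRAINING SET LABEL FILE, bytes 1-4 are a magic number with value 2049 (0x00000801). In the IDX format described on the same page, its third byte (0x08) marks the data type as unsigned byte and its fourth byte (0x01) is the number of dimensions. Bytes 5-8 are the number of items, 60000. Starting from byte 9, each byte is the label of one example, a value from 0 to 9.

In the TRAINING SET IMAGE FILE, bytes 1-4 are a magic number with value 2051 (0x00000803, that is, unsigned byte data with 3 dimensions); bytes 5-8 are the number of items, 60000; bytes 9-12 are the number of rows per image, 28; bytes 13-16 are the number of columns per image, 28. Starting from byte 17, each byte is one pixel. Pixels are stored row by row (row-wise), pixel values range from 0 to 255, 0 means background (white), and 255 means foreground (black).

NOTE: In ordinary uint8 images, 0 is black and 255 is white. For training on MNIST it does not matter which value is called black or white. The website probably describes it this way to match the figures in the paper, which show black digits on a white background.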
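The header layout described above can be checked with a few lines of code. This is only a sketch: the helper `read_idx_header` is a hypothetical name, it assumes the IDX convention that the last byte of the magic number is the number of dimensions, and the header bytes here are built in memory rather than read from a real file.

```python
import struct

# magic numbers given on the download page
MAGIC_LABELS = 2049  # 0x00000801
MAGIC_IMAGES = 2051  # 0x00000803


def read_idx_header(data):
    """Return (magic, dims) parsed from the first bytes of an IDX file.

    data: bytes object holding at least the header of the file
    """
    magic = struct.unpack('>i', data[0:4])[0]
    # assumption: the lowest byte of the magic number is the dimension count
    ndim = magic & 0xFF
    dims = struct.unpack('>%di' % ndim, data[4:4 + 4 * ndim])
    return magic, dims


# synthetic header with the values listed for the training image file
header = struct.pack('>iiii', MAGIC_IMAGES, 60000, 28, 28)
print(read_idx_header(header))
```

For a real file, the same function can be applied to the first 16 bytes read with `open(filename, 'rb')`.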

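Next we compute the sizes of the 4 files. Working out the sizes helps reinforce the encoding described above, and the results can be checked against the sizes reported by the file manager (right-click, then Properties).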

TRAINING SET LABEL FILE (train-labels-idx1-ubyte)

4 bytes: magic number

4 bytes: number of items

60000 bytes: one label per byte

----------------

Total: 4 + 4 + 60000 = 60008 bytes

TRAINING SET IMAGE FILE (train-images-idx3-ubyte)

4 bytes: magic number

4 bytes: number of items

4 bytes: number of rows

4 bytes: number of columns

28\*28\*60000 bytes: 28\*28 is the size of one image, 60000 images in total

----------------

Total: 4 + 4 + 4 + 4 + 28\*28\*60000 = 47040016 bytes

TEST SET LABEL FILE (t10k-labels-idx1-ubyte)

4 bytes: magic number

4 bytes: number of items

10000 bytes: one label per byte

----------------

Total: 4 + 4 + 10000 = 10008 bytes

TEST SET IMAGE FILE (t10k-images-idx3-ubyte)

4 bytes: magic number

4 bytes: number of items

4 bytes: number of rows

4 bytes: number of columns

28\*28\*10000 bytes: 28\*28 is the size of one image, 10000 images in total

----------------

Total: 4 + 4 + 4 + 4 + 28\*28\*10000 = 7840016 bytes
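The same arithmetic can be done in code and compared with the sizes on disk. The sketch below assumes the decompressed files sit in a hypothetical `./MNIST_DATA` directory under their original names; `expected_idx_size` is an illustrative helper, not part of any library.

```python
import os


def expected_idx_size(dims, bytes_per_item=1):
    """Expected size in bytes of an IDX file with the given dimensions."""
    # header: 4-byte magic number plus one 32-bit integer per dimension
    header = 4 + 4 * len(dims)
    count = 1
    for d in dims:
        count *= d
    return header + count * bytes_per_item


EXPECTED = {
    'train-labels-idx1-ubyte': expected_idx_size((60000,)),
    'train-images-idx3-ubyte': expected_idx_size((60000, 28, 28)),
    't10k-labels-idx1-ubyte': expected_idx_size((10000,)),
    't10k-images-idx3-ubyte': expected_idx_size((10000, 28, 28)),
}

if __name__ == '__main__':
    data_dir = './MNIST_DATA'  # hypothetical location of the files
    for name, size in EXPECTED.items():
        path = os.path.join(data_dir, name)
        actual = os.path.getsize(path) if os.path.exists(path) else None
        print(name, 'expected:', size, 'actual:', actual)
```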

Parsing the data

Once the encoding format is clear, parsing is straightforward.

Parsing uses Python's struct module, whose help text reads as follows:

    Functions to convert between Python values and C structs.
    Python bytes objects are used to hold the data representing the C struct
    and also as format strings (explained below) to describe the layout of data
    in the C struct.

    The optional first format char indicates byte order, size and alignment:
      @: native order, size & alignment (default)
      =: native order, std. size & alignment
      <: little-endian, std. size & alignment
      >: big-endian, std. size & alignment
      !: same as >

    The remaining chars indicate types of args and must match exactly;
    these can be preceded by a decimal repeat count:
      x: pad byte (no data); 1 byte
      c: char; 1 byte
      b: signed byte; 1 byte
      B: unsigned byte; 1 byte
      ?: _Bool; 1 byte
      h: short; 2 bytes
      H: unsigned short; 2 bytes
      i: int; 4 bytes
      I: unsigned int; 4 bytes
      l: long; 4 bytes
      L: unsigned long; 4 bytes
      f: float; 4 bytes
      d: double; 8 bytes
      q: long long; 8 bytes
      Q: unsigned long long; 8 bytes
    Whitespace between formats is ignored.

    ......

    unpack(format, buffer, /)
        Return a tuple containing values unpacked according to the format string.
        The buffer's size in bytes must be calcsize(format).

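We parse with the unpack function. There is a pitfall in the format string, because the data is big-endian. The download page contains this passage:

All the integers in the files are stored in the MSB first (high endian) format used by most non-Intel processors. Users of Intel processors and other low-endian machines must flip the bytes of the header.

"High endian" here means big-endian. So the unpack format should be written as ">i", ">B" and so on. To unpack several values at once, use a repeat count such as ">5B". The count can also be filled in with string formatting, for example ">%dB" % (item_number * rows * cols).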
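A small experiment shows what goes wrong with the wrong byte order. The four bytes below are the magic number of an image file as listed in the format table (0x00000803); the pixel bytes are made up for illustration.

```python
import struct

magic_bytes = b'\x00\x00\x08\x03'  # first 4 bytes of an MNIST image file

# '>' reads the most significant byte first, as the files are stored
big = struct.unpack('>i', magic_bytes)[0]
# '<' reads the same bytes in reverse order and gives a meaningless value
little = struct.unpack('<i', magic_bytes)[0]

# a repeat count unpacks several unsigned bytes at once
pixels = struct.unpack('>%dB' % 5, b'\x00\x01\x02\xfe\xff')

print(big)     # 2051
print(little)  # 50855936
print(pixels)  # (0, 1, 2, 254, 255)
```

For single bytes ("B") the byte-order prefix makes no difference to the values; it only matters for the multi-byte header integers.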

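The parsing functions are given below. To run the `__main__` part, which saves the images to disk, opencv-python is required; install it by running pip install opencv-python on the command line.

File name: parse_mnist.py (this file is used again below)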

    # -*- coding: utf-8 -*-
    """
    functions to parse the mnist dataset.
    Convert the data into png format and save to the disk in the __main__ part
    """
    import os
    import struct

    import numpy as np


    def parse_mnist_images(filename):
        """
        Descriptions:
            Parse mnist image file and output the images as a 3D numpy.array
            with a shape of (image_number, rows, cols), uint8 type
        Input:
            filename: filename of image file
        Output:
            The parsed images as a 3D numpy.array
        """
        with open(filename, 'rb') as fid:
            file_content = fid.read()
        item_number = struct.unpack('>i', file_content[4:8])[0]
        rows = struct.unpack('>i', file_content[8:12])[0]
        cols = struct.unpack('>i', file_content[12:16])[0]
        # 'item_number * rows * cols' is the number of bytes
        images = struct.unpack(
            '>%dB' % (item_number * rows * cols), file_content[16:])
        images = np.uint8(np.array(images))
        # np.reshape: the dimension assigned by -1 will be computed according
        # to the first input (images) and other dimensions (rows, cols)
        images = np.reshape(images, [-1, rows, cols])
        return images


    def parse_mnist_labels(filename):
        """
        Descriptions:
            Parse mnist label file and output the labels as a numpy.array
            with a shape of (image_number,), int32 type
        Input:
            filename: filename of label file
        Output:
            The parsed labels as a numpy.array
        """
        with open(filename, 'rb') as fid:
            file_content = fid.read()
        item_number = struct.unpack('>i', file_content[4:8])[0]
        # 'item_number' is the number of bytes
        labels = struct.unpack('>%dB' % item_number, file_content[8:])
        # set the dtype explicitly so that it matches the docstring
        labels = np.array(labels, dtype=np.int32)
        return labels


    def make_one_hot_labels(labels):
        """
        Description:
            Transform classification labels which is in terms of numbers into
            one hot type
        Inputs:
            labels in terms of number
        Outputs:
            One hot labels, composed of 0 and 1
        """
        classes = np.unique(labels)
        # the classes must be consecutive integers (compare values, not the
        # indices returned by argmax/argmin)
        assert len(classes) == classes.max() - classes.min() + 1
        labels_one_hot = (labels[:, None] == np.arange(10)).astype(np.int32)
        return labels_one_hot


    if __name__ == '__main__':
        # cv2 is only needed here, so that importing this module from the
        # training scripts does not require opencv-python
        import cv2

        # parse labels
        labels_train = parse_mnist_labels('./MNIST_DATA/train-labels.idx1-ubyte')
        labels_test = parse_mnist_labels('./MNIST_DATA/t10k-labels.idx1-ubyte')

        # parse images and save as png files
        path_train = './MNIST_DATA/train_images/'
        os.makedirs(path_train, exist_ok=True)
        images = parse_mnist_images('./MNIST_DATA/train-images.idx3-ubyte')
        for index, image in enumerate(images):
            cv2.imwrite(path_train + '%05d_%d.png' %
                        (index, labels_train[index]), image)

        path_test = './MNIST_DATA/test_images/'
        os.makedirs(path_test, exist_ok=True)
        images = parse_mnist_images('./MNIST_DATA/t10k-images.idx3-ubyte')
        for index, image in enumerate(images):
            cv2.imwrite(path_test + '%05d_%d.png' %
                        (index, labels_test[index]), image)

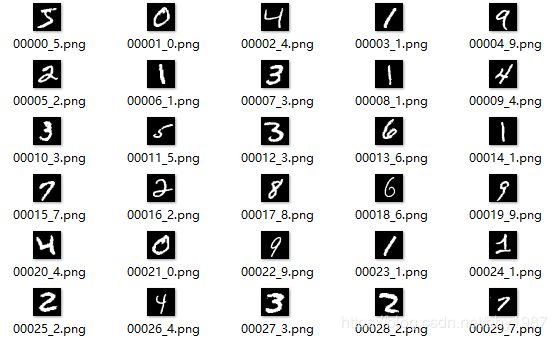

为什么要保存成png格式?因为是无损压缩,保存下来的图像如下,文件名包含了图像序号和标签并以下划线作为分隔。
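As an alternative to unpacking every pixel through `struct`, the raw bytes can be viewed directly as a NumPy array. The sketch below is one possible way to do this with `np.frombuffer`; the function name `parse_idx_images` is hypothetical and the data in the usage example is synthetic.

```python
import struct

import numpy as np


def parse_idx_images(data):
    """Parse the bytes of an IDX image file into a (n, rows, cols) array."""
    # header: four big-endian 32-bit integers
    magic, n, rows, cols = np.frombuffer(data, dtype='>i4', count=4)
    assert magic == 2051, 'not an IDX image file'
    # pixel data starts at byte offset 16, one unsigned byte per pixel
    pixels = np.frombuffer(data, dtype=np.uint8, offset=16)
    return pixels.reshape(int(n), int(rows), int(cols))


# synthetic "file": 2 images of 2 rows x 3 columns with pixel values 0..11
fake_file = struct.pack('>iiii', 2051, 2, 2, 3) + bytes(range(12))
images = parse_idx_images(fake_file)
print(images.shape)
```

Note that an array created by `np.frombuffer` from a bytes object is read-only; call `.copy()` on it if the pixels need to be modified in place.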

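Single-layer fully connected network

This section includes a brief derivation. For the details, see the two documents below. Note that "Derivation of Fully Connected Neural Networks: Forward and Backward Passes" derives a network with two hidden layers (equivalently, three fully connected layers), which is not exactly the network used here, so reading it together with the brief derivation below makes things clearer.

Derivatives of Softmax and Cross-Entropy Loss

Derivation of Fully Connected Neural Networks: Forward and Backward Passes

Two points in the code deserve some attention:

- Because we use a fully connected network here, each image must be reshaped into a row vector of length 784 (28\*28).

- The data needs to be normalized. Here we simply divide by 255 to bring it into [0, 1]; otherwise the initialization range of the weights and biases and the learning rate may need very careful tuning before training converges. A further remark: the softmax function computes exp(). If the pixel values are too large, the input to exp() becomes too large and the output overflows the floating-point range. In a simple network like this one, such overflow is a common cause of diverging training.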
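Besides normalizing the input, a common way to guard against overflow in exp() is to subtract the row maximum before exponentiating, which leaves the softmax value unchanged. The sketch below illustrates this; `softmax_stable` is a hypothetical name and is not the function used in the scripts of this article.

```python
import numpy as np


def softmax_stable(data):
    """Row-wise softmax that subtracts each row's max before exp()."""
    # after the shift the largest input to exp() is 0, so exp() <= 1
    shifted = data - np.max(data, axis=1, keepdims=True)
    exp_data = np.exp(shifted)
    return exp_data / np.sum(exp_data, axis=1, keepdims=True)


# inputs this large would overflow a plain np.exp(data)
big_logits = np.array([[1000.0, 1001.0, 1002.0]])
probs = softmax_stable(big_logits)
print(probs, probs.sum())
```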

Forward pass:

($z_0$ denotes one batch of input data. The first dimension is the batch, and the second is the flattened image data of length $28*28=784$. A dot between variables denotes matrix multiplication.)

$$
\begin{aligned} z_1 &= z_0 \cdot weight + bias\\[2ex] S(z_1) &= Softmax(z_1) \end{aligned}
$$

Backward pass:

($dx$ denotes the gradient of a quantity. Every gradient is the partial derivative of $Loss$ with respect to that variable, for example $dw = \partial Loss / \partial w$, and is derived with the chain rule. A dot between variables in the formulas below denotes matrix multiplication.)

$$
\begin{aligned} dz_1 &= S(z_1) - labels \\[2ex] dw &= z_0^T \cdot dz_1 \\[2ex] db &= mean(dz_1,\ axis=0) \end{aligned}
$$
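The first backward formula, the gradient of the loss with respect to $z_1$ being the softmax output minus the one-hot labels, can be sanity-checked numerically. The following is a sketch under the assumption that the loss is the cross entropy summed over the batch; all function names are illustrative and the data is random.

```python
import numpy as np


def softmax(z):
    shifted = z - np.max(z, axis=1, keepdims=True)
    e = np.exp(shifted)
    return e / np.sum(e, axis=1, keepdims=True)


def cross_entropy(z, labels):
    """Cross entropy of logits z against one hot labels, summed over batch."""
    return -np.sum(labels * np.log(softmax(z)))


def numerical_grad(z, labels, eps=1e-6):
    """Central-difference estimate of d(loss)/dz, one element at a time."""
    grad = np.zeros_like(z)
    for idx in np.ndindex(*z.shape):
        z_plus, z_minus = z.copy(), z.copy()
        z_plus[idx] += eps
        z_minus[idx] -= eps
        grad[idx] = (cross_entropy(z_plus, labels)
                     - cross_entropy(z_minus, labels)) / (2 * eps)
    return grad


rng = np.random.RandomState(0)
z1 = rng.randn(4, 10)                            # a batch of 4 logit rows
labels = np.eye(10)[rng.randint(0, 10, size=4)]  # random one hot labels
analytic = softmax(z1) - labels                  # the formula for dz1
numeric = numerical_grad(z1, labels)
max_diff = np.max(np.abs(analytic - numeric))
print(max_diff)
```

A very small `max_diff` indicates that the analytic expression agrees with the finite-difference estimate.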

Parameter update:

$$
\begin{aligned} w &= w - \alpha * dw \\[2ex] b &= b - \alpha * db \end{aligned}
$$

Code:

(This requires the data parsing script above, parse_mnist.py.)

    # -*- coding: utf-8 -*-
    """
    Train and test the mnist dataset.
    Using one fully connection layer and softmax activation, optimized with a
    cross entropy loss
    """
    import numpy as np

    from parse_mnist import parse_mnist_images
    from parse_mnist import parse_mnist_labels
    from parse_mnist import make_one_hot_labels


    def uniform_random(shape, min_limit, max_limit):
        """
        Description:
            Generate uniform random numbers between [min_limit, max_limit]
            with a certain shape
        Inputs:
            shape: shape of output
            min_limit: minimum of random number
            max_limit: maximum of random number
        Outputs:
            Uniform random numbers between [min_limit, max_limit] with a
            certain shape
        """
        return (max_limit - min_limit) * np.random.random(shape) + min_limit


    def shuffle_data(images, labels_one_hot):
        """
        Description:
            Random shuffle the images and labels_one_hot, note that image
            number should be placed at the first axis
        Inputs:
            images: images tensor, with number as the first axis
            labels_one_hot: one hot labels, with number as the first axis
        Outputs:
            Random shuffled images and labels_one_hot
        """
        # the same permutation is applied to images and labels
        idx_shuffle = np.arange(images.shape[0])
        np.random.shuffle(idx_shuffle)
        images_shuffle = images[idx_shuffle]
        labels_shuffle = labels_one_hot[idx_shuffle]
        return images_shuffle, labels_shuffle


    def softmax(data):
        """
        Description:
            Compute softmax of data. the first axis of data is batch_size
        Inputs:
            data: data to be activated by softmax
        Outputs:
            The softmax of data
        """
        exp_data = np.exp(data)
        sum_exp_data = np.sum(exp_data, axis=1, keepdims=True)
        return exp_data / sum_exp_data


    def evaluate(images_test, labels, weight, bias):
        """
        Description:
            Evaluate the accuracy of test set
        Inputs:
            images_test: images of test set
            labels: labels of test set
            weight: weight of fully connection layer
            bias: bias of fully connection layer
        Outputs:
            Accuracy of test set
        """
        z1 = np.matmul(images_test, weight) + bias
        softmax_z1 = softmax(z1)
        # argmax() return the index of max value
        labels_predict = softmax_z1.argmax(axis=1)
        is_right = labels_predict == labels
        return np.mean(is_right)


    # load images
    images_train = parse_mnist_images('./MNIST_DATA/train-images.idx3-ubyte')
    images_test = parse_mnist_images('./MNIST_DATA/t10k-images.idx3-ubyte')
    images_train = np.reshape(images_train, [images_train.shape[0], -1])
    images_test = np.reshape(images_test, [images_test.shape[0], -1])
    images_train = np.float32(images_train) / 255.0
    images_test = np.float32(images_test) / 255.0

    # load labels
    labels_train = parse_mnist_labels('./MNIST_DATA/train-labels.idx1-ubyte')
    labels_test = parse_mnist_labels('./MNIST_DATA/t10k-labels.idx1-ubyte')
    labels_train_one_hot = make_one_hot_labels(labels_train)

    # parameters
    BATCH_SIZE = 100
    EPOCH = 10
    LEARNING_RATE = 0.01
    num_train = images_train.shape[0]
    single_image_size = images_train.shape[1]
    weight = uniform_random([single_image_size, 10], -0.02, 0.02)
    bias = np.zeros([1, 10])

    # training and evaluation
    for ep in range(EPOCH):
        print('epoch', ep + 1)
        images_shuffle, labels_shuffle = shuffle_data(images_train,
                                                      labels_train_one_hot)
        for i in range(0, num_train, BATCH_SIZE):
            # get a batch of data. images_batch is z0 in the formula
            images_batch = images_shuffle[i:i + BATCH_SIZE, :]
            labels_batch = labels_shuffle[i:i + BATCH_SIZE, :]

            # forward propagation
            z1 = np.matmul(images_batch, weight) + bias
            softmax_z1 = softmax(z1)

            # cross entropy loss (note the log; 1e-12 avoids log(0)),
            # the loss is actually not used
            loss = -np.sum(labels_batch * np.log(softmax_z1 + 1e-12)) / BATCH_SIZE

            # backward propagation
            # dweight is summed over the batch while dbias is averaged,
            # as in the formulas above
            dz1 = softmax_z1 - labels_batch
            dweight = np.matmul(images_batch.T, dz1)
            dbias = np.mean(dz1, axis=0)

            # update parameters
            weight -= LEARNING_RATE * dweight
            bias -= LEARNING_RATE * dbias

        acc = evaluate(images_test, labels_test, weight, bias)
        print('accuracy: ', acc)

The accuracy reaches roughly 92%.
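The loss in the training loop is computed only for monitoring and does not enter the update. If you want to print it, a cross-entropy value could be computed as in the sketch below; the function name `cross_entropy_loss` and the clipping constant `eps` are assumptions made for illustration.

```python
import numpy as np


def cross_entropy_loss(probs, labels_one_hot, eps=1e-12):
    """Mean cross entropy between softmax outputs and one hot labels."""
    # clip so that log() never receives an exact zero
    probs = np.clip(probs, eps, 1.0)
    return -np.sum(labels_one_hot * np.log(probs)) / probs.shape[0]


labels = np.eye(10)[[3, 7]]         # two one hot labels
uniform = np.full((2, 10), 0.1)     # a model that knows nothing
print(cross_entropy_loss(uniform, labels))  # equals log(10) for 10 classes
print(cross_entropy_loss(labels, labels))   # close to 0 for a perfect model
```

A loss near log(10) means the network is doing no better than guessing uniformly over the 10 classes, which is a convenient reference point while training.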

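Three-layer fully connected network

This section contains only code and no formulas. For the detailed derivation, see the two documents below; the code in this section follows their derivations exactly:

Derivatives of Softmax and Cross-Entropy Loss

Derivation of Fully Connected Neural Networks: Forward and Backward Passes

"Three-layer fully connected network" means two hidden layers plus the final output layer, three fully connected layers in total. Each hidden layer has 256 nodes, and the accuracy reaches roughly 97% or more.

As the model becomes more complex, the drawback of this hand-derived procedure, which has no regularization, gradually shows. The training below is rather unstable: sometimes it reaches 97%+ and sometimes it does not, depending on luck. The activation function is relu, so compared with the single-layer case there are two more functions, relu and drelu (its derivative).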
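To make the two extra functions concrete before the full script, here is a compact sketch of relu and its derivative on a tiny input. It is an equivalent vectorized formulation, assuming the derivative at exactly 0 is taken to be 0; it is not the exact code used in the script.

```python
import numpy as np


def relu(features):
    """Element-wise max(x, 0); returns a new array (not in-place)."""
    return np.maximum(features, 0)


def drelu(features):
    """Derivative of relu: 1 where the input is positive, 0 elsewhere."""
    return (features > 0).astype(features.dtype)


z = np.array([[-1.0, 0.0, 2.0]])
print(relu(z))   # [[0. 0. 2.]]
print(drelu(z))  # [[0. 0. 1.]]
```

In backpropagation the derivative is evaluated at the pre-activation values (z), and the result multiplies the incoming gradient element-wise.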

    # -*- coding: utf-8 -*-
    """
    train mnist using three fully connection layers and relu activation, except
    for the last layer with softmax, optimized by minimizing cross entropy loss
    """
    import numpy as np

    from parse_mnist import parse_mnist_images
    from parse_mnist import parse_mnist_labels
    from parse_mnist import make_one_hot_labels


    def uniform_random(shape, min_limit, max_limit):
        """
        Description:
            Generate uniform random numbers between [min_limit, max_limit]
            with a certain shape
        Inputs:
            shape: shape of output
            min_limit: minimum of random number
            max_limit: maximum of random number
        Outputs:
            Uniform random numbers between [min_limit, max_limit] with a
            certain shape
        """
        return (max_limit - min_limit) * np.random.random(shape) + min_limit


    def shuffle_data(images, labels_one_hot):
        """
        Description:
            Random shuffle the images and labels_one_hot, note that image
            number should be placed at the first axis
        Inputs:
            images: images tensor, with number as the first axis
            labels_one_hot: one hot labels, with number as the first axis
        Outputs:
            Random shuffled images and labels_one_hot
        """
        # the same permutation is applied to images and labels
        idx_shuffle = np.arange(images.shape[0])
        np.random.shuffle(idx_shuffle)
        images_shuffle = images[idx_shuffle]
        labels_shuffle = labels_one_hot[idx_shuffle]
        return images_shuffle, labels_shuffle


    def relu(features):
        """
        Description:
            relu activation of features. NOT in-place
        Inputs:
            features: features to be activated by relu
        Outputs:
            The relu of features
        """
        output = np.copy(features)
        output[features < 0] = 0
        return output


    def drelu(features):
        """
        Description:
            derivatives of relu activation for features
        Inputs:
            features: input of derivatives
        Outputs:
            derivatives of relu activation for features
        """
        output = np.copy(features)
        output[features > 0] = 1
        output[features <= 0] = 0
        return output


    def softmax(data):
        """
        Description:
            Compute softmax of data. the first axis of data is batch_size
        Inputs:
            data: data to be activated by softmax
        Outputs:
            The softmax of data
        """
        exp_data = np.exp(data)
        sum_exp_data = np.sum(exp_data, axis=1, keepdims=True)
        return exp_data / sum_exp_data


    def evaluate(images_test, labels, w1, b1, w2, b2, w3, b3):
        """
        Description:
            Evaluate the accuracy of test set
        Inputs:
            images_test: images of test set
            labels: labels of test set
            w1, w2, w3: weights of the three fully connection layers
            b1, b2, b3: biases of the three fully connection layers
        Outputs:
            Accuracy of test set
        """
        z1 = np.matmul(images_test, w1) + b1
        a1 = relu(z1)
        z2 = np.matmul(a1, w2) + b2
        a2 = relu(z2)
        z3 = np.matmul(a2, w3) + b3
        softmax_z3 = softmax(z3)
        # argmax() return the index of max value
        labels_predict = softmax_z3.argmax(axis=1)
        is_right = labels_predict == labels
        return np.mean(is_right)


    # load images
    images_train = parse_mnist_images('./MNIST_DATA/train-images.idx3-ubyte')
    images_test = parse_mnist_images('./MNIST_DATA/t10k-images.idx3-ubyte')
    images_train = np.reshape(images_train, [images_train.shape[0], -1])
    images_test = np.reshape(images_test, [images_test.shape[0], -1])
    images_train = np.float32(images_train) / 255.0
    images_test = np.float32(images_test) / 255.0

    # load labels
    labels_train = parse_mnist_labels('./MNIST_DATA/train-labels.idx1-ubyte')
    labels_test = parse_mnist_labels('./MNIST_DATA/t10k-labels.idx1-ubyte')
    labels_train_one_hot = make_one_hot_labels(labels_train)

    # parameters
    BATCH_SIZE = 100
    EPOCH = 10
    NUM_NODES = 256
    LEARNING_RATE = 0.01
    num_train = images_train.shape[0]
    single_image_size = images_train.shape[1]
    w1 = uniform_random([single_image_size, NUM_NODES], -0.002, 0.002)
    b1 = np.zeros([1, NUM_NODES])
    w2 = uniform_random([NUM_NODES, NUM_NODES], -0.002, 0.002)
    b2 = np.zeros([1, NUM_NODES])
    w3 = uniform_random([NUM_NODES, 10], -0.02, 0.02)
    b3 = np.zeros([1, 10])

    # training and evaluation
    for ep in range(EPOCH):
        print('epoch', ep + 1)
        images_shuffle, labels_shuffle = shuffle_data(images_train,
                                                      labels_train_one_hot)
        for i in range(0, num_train, BATCH_SIZE):
            # get a batch of data
            images_batch = images_shuffle[i:i + BATCH_SIZE, :]
            labels_batch = labels_shuffle[i:i + BATCH_SIZE, :]

            # forward propagation, activation is NOT in-place
            z1 = np.matmul(images_batch, w1) + b1
            a1 = relu(z1)
            z2 = np.matmul(a1, w2) + b2
            a2 = relu(z2)
            z3 = np.matmul(a2, w3) + b3
            softmax_z3 = softmax(z3)

            # cross entropy loss (note the log; 1e-12 avoids log(0)),
            # the loss is actually not used
            loss = -np.sum(labels_batch * np.log(softmax_z3 + 1e-12)) / BATCH_SIZE

            # backward propagation
            dz3 = softmax_z3 - labels_batch
            dw3 = np.matmul(a2.T, dz3)
            db3 = np.mean(dz3, axis=0)

            da2 = np.matmul(dz3, w3.T)
            dz2 = da2 * drelu(z2)
            dw2 = np.matmul(a1.T, dz2)
            db2 = np.mean(dz2, axis=0)

            da1 = np.matmul(dz2, w2.T)
            dz1 = da1 * drelu(z1)
            dw1 = np.matmul(images_batch.T, dz1)
            db1 = np.mean(dz1, axis=0)

            # update parameters
            w1 -= LEARNING_RATE * dw1
            b1 -= LEARNING_RATE * db1
            w2 -= LEARNING_RATE * dw2
            b2 -= LEARNING_RATE * db2
            w3 -= LEARNING_RATE * dw3
            b3 -= LEARNING_RATE * db3

        acc = evaluate(images_test, labels_test, w1, b1, w2, b2, w3, b3)
        print('accuracy: ', acc)
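Part of the run-to-run variation of the three-layer network comes from the random initialization and the random shuffling. Fixing the random seed does not make training more stable, but it does make a given run repeatable, which helps when comparing settings. The sketch below shows the idea with a variant of `uniform_random` that takes an explicit generator; the extra `rng` parameter and the seed value are assumptions added for illustration.

```python
import numpy as np


def uniform_random(shape, min_limit, max_limit, rng):
    """Uniform random numbers between min_limit and max_limit drawn from rng."""
    return (max_limit - min_limit) * rng.random_sample(shape) + min_limit


SEED = 0  # arbitrary value chosen for illustration

# two generators with the same seed produce identical initial weights
rng_a = np.random.RandomState(SEED)
rng_b = np.random.RandomState(SEED)
w_a = uniform_random([784, 256], -0.002, 0.002, rng_a)
w_b = uniform_random([784, 256], -0.002, 0.002, rng_b)
print(np.array_equal(w_a, w_b))
```

With the scripts as written, which use the global `np.random` functions, calling `np.random.seed(SEED)` once at the top would have a similar effect on both the initialization and the shuffling.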