elasticsearch安装和使用ik分词器

在使用elasticsearch的时候,如果不额外安装分词器的话,在处理text字段时会使用elasticsearch自带的默认分词器,我们来一起看看默认分词器的效果;

环境信息

- 本次实战用到的elasticsearch版本是6.5.0,安装在Ubuntu 16.04.5 LTS,客户端工具是postman6.6.1;

- 如果您需要搭建elasticsearch环境,请参考《Linux环境快速搭建elasticsearch6.5.4集群和Head插件》;

- Ubuntu服务器上安装的JDK,版本是1.8.0_191;

- Ubuntu服务器上安装了maven,版本是是3.5.0;

elasticsearch为什么要用6.5.0版本

截止发布文章时间,elasticsearch官网已经提供了6.5.4版本下载,但是ik分词器的版本目前支持到6.5.0版本,因此本次实战的elasticsearch选择了6.5.0版本;

基本情况介绍

本次实战的elasticsearch环境已经搭建完毕,是由两个机器搭建的集群,并且elasticsearch-head也搭建完成:

- 一号机器,IP地址:192.168.150.128;

- 二号机器:IP地址:192.168.150.128;

- elasticsearch-head安装在一号机器,访问地址:http://192.168.150.128:9100

数据格式说明

为了便于和读者沟通,我们来约定一下如何在文章中表达请求和响应的信息:

- 假设通过Postman工具向服务器发送一个PUT类型的请求,地址是:http://192.168.150.128:9200/test001/article/1

- 请求的内容是JSON格式的,内容如下:

{

"id":1,

"title":"标题a",

"posttime":"2019-01-12",

"content":"一起来熟悉文档相关的操作"

}

对于上面的请求,我在文章中就以如下格式描述:

PUT test001/article/1

{

"id":1,

"title":"标题a",

"posttime":"2019-01-12",

"content":"一起来熟悉文档相关的操作"

}

读者您看到上述内容,就可以在postman中发起PUT请求,地址是"test001/article/1"前面加上您的服务器地址,内容是上面的JSON;

默认分词器的效果

先来看看默认的分词效果:

- 创建一个索引:

PUT test002

- 查看索引基本情况:

GET test002/_settings

收到响应如下,可见并没有分词器的信息:

{

"test002": {

"settings": {

"index": {

"creation_date": "1547863084175",

"number_of_shards": "5",

"number_of_replicas": "1",

"uuid": "izLzdwCQRdeq01tNBxajEg",

"version": {

"created": "6050499"

},

"provided_name": "test002"

}

}

}

}

- 查看分词效果:

POST test002/_analyze?pretty=true

{"text":"我们是软件工程师"}

收到响应如下,可见每个汉字都被拆分成一个词了,这样会导致词项搜索时收不到我们想要的(例如用"我们"来搜索是没有结果的):

{

"tokens": [

{

"token": "我",

"start_offset": 0,

"end_offset": 1,

"type": "" ,

"position": 0

},

{

"token": "们",

"start_offset": 1,

"end_offset": 2,

"type": "" ,

"position": 1

},

{

"token": "是",

"start_offset": 2,

"end_offset": 3,

"type": "" ,

"position": 2

},

{

"token": "软",

"start_offset": 3,

"end_offset": 4,

"type": "" ,

"position": 3

},

{

"token": "件",

"start_offset": 4,

"end_offset": 5,

"type": "" ,

"position": 4

},

{

"token": "工",

"start_offset": 5,

"end_offset": 6,

"type": "" ,

"position": 5

},

{

"token": "程",

"start_offset": 6,

"end_offset": 7,

"type": "" ,

"position": 6

},

{

"token": "师",

"start_offset": 7,

"end_offset": 8,

"type": "" ,

"position": 7

}

]

}

为了词项搜索能得到我们想要的结果,需要换一个分词器,理想的分词效果应该是"我们"、“是”、“软件”、“工程师”,ik分词器可以满足我们的要求,接下来开始实战;

注意事项

- 下面的所有操作都使用es账号来进行,不要用root账号;

- 编译ik分词器需要用到maven,如果您有docker,但是不想安装maven,可以参考《没有JDK和Maven,用Docker也能构建Maven工程》来编译工程;

下载IK分词器源码到Ubuntu

- 登录ik分词器网站:https://github.com/medcl/elasticsearch-analysis-ik

- 按照网站提供的版本对应表,确认我们要使用的分词器版本,很遗憾写文章的时候还没有匹配elasticsearch-6.5.0的版本,那就用master吧,也就是下图中的红框版本:

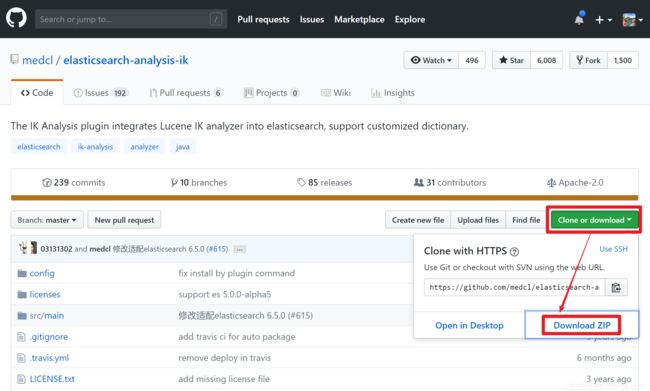

- 如下图,点击下载zip文件:

- 将下载的zip包放到Ubuntu机器上,解压后是个名为elasticsearch-analysis-ik-master的文件夹,在此文件夹下执行以下命令,即可开始构建ik分词器工程:

mvn clean package -U -DskipTests

- 等待编译完成后,在target/release目录下会生产名为elasticsearch-analysis-ik-6.5.0.zip的文件,如下所示:

$ pwd

/usr/local/work/es/elasticsearch-analysis-ik-master

$ cd target/

$ ls

archive-tmp elasticsearch-analysis-ik-6.5.0.jar generated-sources maven-status

classes elasticsearch-analysis-ik-6.5.0-sources.jar maven-archiver releases

$ cd releases/

$ ls

elasticsearch-analysis-ik-6.5.0.zip

- 停止集群中所有机器的elasticsearch进程,在所有机器上做这些操作:在elasticsearch的plugins目录下创建名为ik的目录,再将上面生成的elasticsearch-analysis-ik-6.5.0.zip文件复制到这个新创建的ik目录下;

- 在elasticsearch-analysis-ik-6.5.0.zip所在文件夹下,执行目录unzip elasticsearch-analysis-ik-6.5.0.zip进行解压;

- 确认elasticsearch-analysis-ik-6.5.0.zip已经复制到每个elasticsearch的plugins/ik目录下并解压后,将所有elasticsearch启动,可以发现控制台上会输出ik分词器被加载的信息,如下图所示:

至此,ik分词器安装完成,来验证一下吧;

验证ik分词器

- 在postman发起请求,在json中通过tokenizer指定分词器:

POST test002/_analyze?pretty=true

{

"text":"我们是软件工程师",

"tokenizer":"ik_max_word"

}

这一次得到了分词的效果:

{

"tokens": [

{

"token": "我们",

"start_offset": 0,

"end_offset": 2,

"type": "CN_WORD",

"position": 0

},

{

"token": "是",

"start_offset": 2,

"end_offset": 3,

"type": "CN_CHAR",

"position": 1

},

{

"token": "软件工程",

"start_offset": 3,

"end_offset": 7,

"type": "CN_WORD",

"position": 2

},

{

"token": "软件",

"start_offset": 3,

"end_offset": 5,

"type": "CN_WORD",

"position": 3

},

{

"token": "工程师",

"start_offset": 5,

"end_offset": 8,

"type": "CN_WORD",

"position": 4

},

{

"token": "工程",

"start_offset": 5,

"end_offset": 7,

"type": "CN_WORD",

"position": 5

},

{

"token": "师",

"start_offset": 7,

"end_offset": 8,

"type": "CN_CHAR",

"position": 6

}

]

}

可见所有可能形成的词语都被分了出来,接下试试ik分词器的另一种分词方式ik_smart;

2. 使用ik_smart方式分词的请求如下:

shellshell

POST test002/_analyze?pretty=true

{

“text”:“我们是软件工程师”,

“tokenizer”:“ik_smart”

}

这一次得到了分词的效果:

```json

{

"tokens": [

{

"token": "我们",

"start_offset": 0,

"end_offset": 2,

"type": "CN_WORD",

"position": 0

},

{

"token": "是",

"start_offset": 2,

"end_offset": 3,

"type": "CN_CHAR",

"position": 1

},

{

"token": "软件",

"start_offset": 3,

"end_offset": 5,

"type": "CN_WORD",

"position": 2

},

{

"token": "工程师",

"start_offset": 5,

"end_offset": 8,

"type": "CN_WORD",

"position": 3

}

]

}

可见ik_smart的特点是将原句做拆分,不会因为各种组合出现部分的重复,以下是来自官方的解释:

ik_max_word 和 ik_smart 什么区别?

ik_max_word: 会将文本做最细粒度的拆分,比如会将“中华人民共和国国歌”拆分为“中华人民共和国,中华人民,中华,华人,人民共和国,人民,人,民,共和国,共和,和,国国,国歌”,会穷尽各种可能的组合;

ik_smart: 会做最粗粒度的拆分,比如会将“中华人民共和国国歌”拆分为“中华人民共和国,国歌”。

验证搜索

前面通过http请求验证了分词效果,现在通过搜索来验证分词效果;

- 通过静态mapping的方式创建索引,指定了分词器和分词方式:

PUT test003

{

"mappings": {

"article": {

"dynamic": false,

"properties": {

"title": {

"type": "keyword"

},

"content": {

"type": "text",

"analyzer": "ik_max_word",

"search_analyzer": "ik_max_word"

}

}

}

}

}

创建成功会收到以下响应:

{

"acknowledged": true,

"shards_acknowledged": true,

"index": "test003"

}

- 创建一个文档:

PUT test003/article/1

{

"id":1,

"title":"文章一",

"content":"我们是软件工程师"

}

- 用工程师作为关键词查询试试:

GET test003/_search

{

"query":{

"term":{"content":"工程师"}

}

}

搜索成功:

{

"took": 111,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

},

"hits": {

"total": 1,

"max_score": 0.2876821,

"hits": [

{

"_index": "test003",

"_type": "article",

"_id": "1",

"_score": 0.2876821,

"_source": {

"id": 1,

"title": "文章一",

"content": "我们是软件工程师"

}

}

]

}

}

至此,ik分词器的安装和使用实战就完成了,希望本文能在您的使用过程中提供一些参考;

欢迎关注我的公众号:程序员欣宸

![]()