Learning to Install Ceph

To learn Ceph, first get familiar with its basic architecture, then set up a basic environment. Reference:

http://docs.ceph.org.cn/start/quick-start-preflight/

Environment:

CentOS 7 server: Linux admin-node 3.10.0-514.el7.x86_64

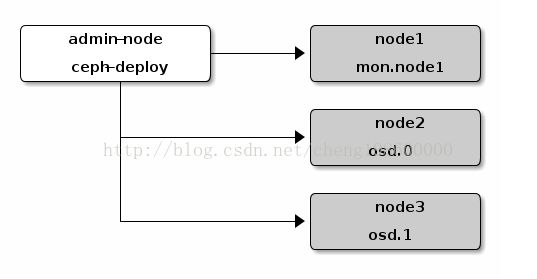

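Basic architecture:

Notes:

(1) The installation procedure on the official site runs into quite a few problems in practice, and each one has to be solved as it comes up. Do not follow the official steps blindly.

(2) Installation is done with the ceph-deploy tool. If the network is slow, the install may report a failure; rerun the install command and check the installation state on each node.

(3) Where possible, put each OSD on its own node, and install the monitor and the metadata server on separate nodes as well.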

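Installation

Preflight

(1) Set the hostname of each host: admin-node, node1, node2, node3, and ceph-client.

(2) Add every host to /etc/hosts on each machine (admin-node is used as the example here) so that all nodes can reach one another.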
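As a rough sketch of step (2), a small helper can generate the /etc/hosts lines. The function name hosts_lines and the 192.0.2.x addresses are placeholders of mine (the post does not list the real IPs), so substitute your own values.

```shell
# Hypothetical helper: print one /etc/hosts line per "name=ip" argument.
hosts_lines() {
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first '='
        ip=${pair#*=}      # text after the first '='
        printf '%s\t%s\n' "$ip" "$name"
    done
}

# Example with placeholder addresses (192.0.2.0/24 is reserved for documentation):
# hosts_lines admin-node=192.0.2.10 node1=192.0.2.11 node2=192.0.2.12 \
#             node3=192.0.2.13 ceph-client=192.0.2.14 | sudo tee -a /etc/hosts
```

Running the same command on every host keeps the name-to-address mapping identical across the cluster.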

(3) Add a non-root user on each host.

// Add the user

sudo useradd -d /home/cl-ceph -m cl-ceph

sudo passwd cl-ceph

// Grant the new user sudo privileges; run this on every node

echo "{username} ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/{username}

sudo chmod 0440 /etc/sudoers.d/{username}

// Allow admin-node to log in to the other nodes without a password

[cl-ceph@admin-node my-cluster]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/cl-ceph/.ssh/id_rsa):

Just press Enter at every prompt.

ssh-copy-id cl-ceph@node1

ssh-copy-id cl-ceph@node2

ssh-copy-id cl-ceph@node3

ssh-copy-id cl-ceph@ceph-client

Then edit ~/.ssh/config on the admin node.
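The post does not show the resulting file. Here is a minimal sketch, assuming the Host / Hostname / User layout from the official preflight guide and the cl-ceph user created above; ssh_config_stanzas is a hypothetical helper name.

```shell
# Hypothetical helper: print a ~/.ssh/config stanza for each host so that
# ssh (and therefore ceph-deploy) logs in as the given user by default.
ssh_config_stanzas() {
    user=$1
    shift
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
    done
}

# Example (appends to the admin node's config):
# ssh_config_stanzas cl-ceph node1 node2 node3 ceph-client >> ~/.ssh/config
```

With these stanzas in place, a plain `ssh node1` from admin-node should connect as cl-ceph without asking for a user name.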

(4) Disable the firewall (the official guide modifies the firewall rules instead) and SELinux

sudo systemctl stop firewalld

sudo systemctl disable firewalld

Edit /etc/selinux/config:

SELINUX=disabled
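The SELinux edit can also be scripted. This is a minimal sketch, assuming GNU sed (as shipped with CentOS 7); disable_selinux_in is a hypothetical helper name, and the path is a parameter so the edit can be tried on a copy first.

```shell
# Hypothetical helper: rewrite the SELINUX= line of the given config file.
# The pattern requires '=' right after SELINUX, so SELINUXTYPE= is untouched.
disable_selinux_in() {
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Example on the real file (keep a backup first):
# sudo cp /etc/selinux/config /etc/selinux/config.bak
# sudo sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config
# sudo setenforce 0   # permissive mode for the current boot
```

The change in /etc/selinux/config only takes effect after a reboot, which is why the example also calls setenforce for the running system.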

(5) admin-node节点上执行

sudo yuminstall -y yum-utils && sudo yum-config-manager --add-repo https://dl.fedoraproject.org/pub/epel/7/x86_64/&& sudo yum install --nogpgcheck -y epel-release && sudo rpm--import /etc/pki/rpm-gpg/RPM-GPG-KEY-EPEL-7 && sudo rm/etc/yum.repos.d/dl.fedoraproject.org*

并在

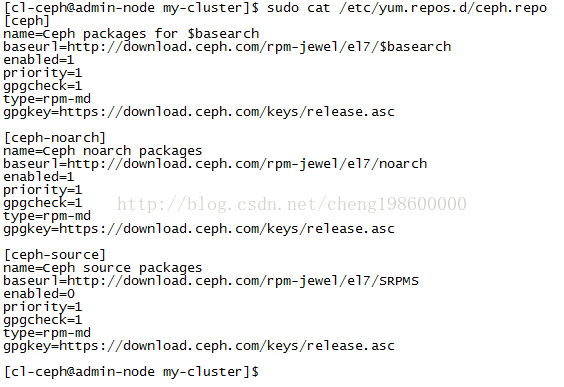

sudo vim/etc/yum.repos.d/ceph.repo 添加

//官网只给出了[ceph-noarch] 部分的内容,在后面执行过程中会报错

[ceph_deploy.conf][DEBUG] found configuration file at: /home/ceph/.cephdeploy.conf

…

[node1][WARNIN] ensuring that /etc/yum.repos.d/ceph.repo contains a high priority

[ceph_deploy][ERROR] RuntimeError: NoSectionError: No section: 'ceph'
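The error shows that ceph-deploy looks for a section named ceph in the repo file. Below is a sketch of a file with both sections. The baseurl layout and gpgkey URL are my assumption based on the public download.ceph.com repository, and ceph_repo is a hypothetical helper; the Ceph release name is left as a parameter because the post does not say which release was installed.

```shell
# Hypothetical helper: print a ceph.repo with both a [ceph] and a
# [ceph-noarch] section. $1 = Ceph release name, $2 = distro (e.g. el7).
# URL layout is an assumption; verify it against the mirror you use.
ceph_repo() {
    base="https://download.ceph.com/rpm-$1/$2"
    cat <<EOF
[ceph]
name=Ceph packages for \$basearch
baseurl=$base/\$basearch
enabled=1
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc

[ceph-noarch]
name=Ceph noarch packages
baseurl=$base/noarch
enabled=1
gpgcheck=1
gpgkey=https://download.ceph.com/keys/release.asc
EOF
}

# Example (CEPH_RELEASE must be set to the release you want):
# ceph_repo "$CEPH_RELEASE" el7 | sudo tee /etc/yum.repos.d/ceph.repo
```

The escaped \$basearch is written literally into the file so that yum, not the shell, expands it.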

Then run:

sudo yum update && sudo yum install ceph-deploy

sudo yum install yum-plugin-priorities

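Storage Cluster Installation

A storage cluster generally needs at least one monitor and two OSD daemons; once the cluster reaches the active+clean state, it can be expanded.

(1) On admin-node, as the cl-ceph user, run: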

mkdir my-cluster

cd my-cluster

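Make sure all subsequent ceph-deploy commands are run from the my-cluster directory, and do not run them with sudo or as root.

If sudo visudo shows a Defaults requiretty line, comment it out or change it to Defaults:ceph !requiretty.

(2) Create the cluster

On admin-node, run ceph-deploy new node1 (node1 is the monitor node).

This creates three files in the my-cluster directory: ceph.conf, ceph-deploy-ceph.log, and ceph.mon.keyring.

Open ceph.conf and add the following settings:

osd pool default size = 2

rbd_default_features = 3

(The CentOS 7 kernel appears to support only features 1 and 2; otherwise mapping an image can fail with the following error.)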

[root@ceph-client ~]# sudo rbd map foo --name client.admin

rbd: sysfs write failed

RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable".

In some cases useful info is found in syslog - try "dmesg | tail" or so.

rbd: map failed: (6) No such device or address
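The value of rbd_default_features is a bitmask, so 3 means bits 1 and 2 together. The sketch below decodes a mask into feature names. The bit-to-name table is written from my recollection of the Ceph RBD documentation and should be checked against your release; rbd_feature_names is a hypothetical helper.

```shell
# Hypothetical helper: decode an RBD feature bitmask into feature names.
# Bit values are an assumption based on the Ceph RBD documentation.
rbd_feature_names() {
    mask=$1
    names=""
    for pair in 1:layering 2:striping 4:exclusive-lock 8:object-map \
                16:fast-diff 32:deep-flatten 64:journaling; do
        bit=${pair%%:*}
        name=${pair#*:}
        if [ $(( mask & bit )) -ne 0 ]; then
            names="${names:+$names }$name"
        fi
    done
    printf '%s\n' "$names"
}

rbd_feature_names 3    # prints: layering striping

# For an image that already exists, the error message points at
# "rbd feature disable"; for example (not run here):
# rbd feature disable foo exclusive-lock object-map fast-diff deep-flatten
```

Setting the default in ceph.conf only affects images created afterwards, which is why an existing image needs the per-image disable command instead.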

ceph-deploy install admin-node node1 node2 node3

This step can time out depending on machine performance and network speed; if it does, simply run it again.
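Rerunning by hand works, but the retry can also be wrapped in a small loop. This is a minimal sketch; retry and the RETRY_DELAY variable are names I made up for illustration, not part of ceph-deploy.

```shell
# Hypothetical helper: run a command up to N times, stopping at the first
# success. RETRY_DELAY (seconds between attempts) defaults to 10.
retry() {
    attempts=$1
    shift
    n=1
    while [ "$n" -le "$attempts" ]; do
        if "$@"; then
            return 0
        fi
        echo "attempt $n of $attempts failed: $*" >&2
        if [ "$n" -lt "$attempts" ]; then
            sleep "${RETRY_DELAY:-10}"
        fi
        n=$((n + 1))
    done
    return 1
}

# Example:
# retry 3 ceph-deploy install admin-node node1 node2 node3
```

Even when the loop finally succeeds, it is still worth checking each node, since a timeout can leave a partial install behind.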

ceph-deploy mon create-initial

ssh node2

sudo mkdir /var/local/osd0

exit

ssh node3

sudo mkdir /var/local/osd1

exit

ceph-deploy osd prepare node2:/var/local/osd0 node3:/var/local/osd1

ceph-deploy osd activate node2:/var/local/osd0 node3:/var/local/osd1

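This step may fail with the following error:

ERROR: error creating empty object store in /var/local/osd0: (13) Permission denied

I resolved this with chmod 777 /var/local/osd0.

Some posts online say the directory owner needs to be changed instead; in my install the chmod was enough.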

ceph-deploy admin admin-node node1 node2 node3

This copies the configuration file and keys to the other Ceph nodes.

sudo chmod +r /etc/ceph/ceph.client.admin.keyring

ceph health

Use ceph -s to check the cluster status.
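Because the cluster can take a while to settle, the health check can be polled instead of typed repeatedly. This is a sketch under the assumption that ceph health prints a line starting with HEALTH_OK once the cluster is healthy; wait_for_health_ok and POLL_INTERVAL are hypothetical names.

```shell
# Hypothetical helper: run the given status command up to N times and
# succeed as soon as its output starts with HEALTH_OK.
# POLL_INTERVAL (seconds between polls) defaults to 5.
wait_for_health_ok() {
    tries=$1
    shift
    i=0
    while [ "$i" -lt "$tries" ]; do
        status=$("$@" 2>/dev/null)
        case $status in
            HEALTH_OK*) return 0 ;;
        esac
        i=$((i + 1))
        sleep "${POLL_INTERVAL:-5}"
    done
    return 1
}

# Example:
# wait_for_health_ok 60 ceph health && echo "cluster is healthy"
```

Passing the status command as arguments keeps the loop independent of how ceph is invoked (for instance with extra -m or -k options).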

Block Device Quick Start

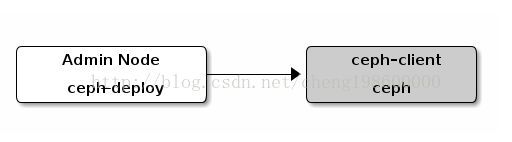

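A Ceph block device is also called an RBD or RADOS block device. Start a new VM to serve as ceph-client.

On admin-node, run:

ceph-deploy install ceph-client

ceph-deploy admin ceph-client (copies the keys)

Make sure the keyring file is readable on the ceph-client node. On ceph-client, run:

sudo chmod +r /etc/ceph/ceph.client.admin.keyring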

Configure the block device. On the ceph-client node, run:

(1) Create a block device image

sudo rbd create foo --size 4096 -m 192.168.1.135 -k /etc/ceph/ceph.client.admin.keyring

(2) Map the image to a block device

sudo rbd map foo --name client.admin -m 192.168.1.135 -k /etc/ceph/ceph.client.admin.keyring

(3) Create a file system

sudo mkfs.ext4 -m0 /dev/rbd/rbd/foo

(4) Mount the file system

sudo mkdir /mnt/ceph-block-device

sudo mount /dev/rbd/rbd/foo /mnt/ceph-block-device

cd /mnt/ceph-block-device