DPDK Series, Part 9: Introduction to F-Stack, Installation, and Network Performance Comparison

1. Introduction

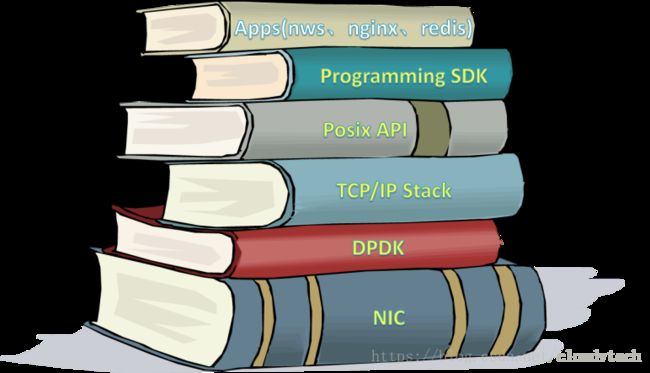

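DPDK mainly targets fast packet-level processing, which serves scenarios such as SDN and NFV well. Its support for the more complex TCP handling above that level, and for the Layer-7 applications built on it, is very limited. F-Stack fills this gap by integrating DPDK, a user-space port of the FreeBSD TCP/IP stack, a POSIX-style socket API, a micro-threading framework, and ready-made applications (such as Nginx and Redis) into a deployable end-to-end solution. It also provides guidance for porting other Layer-7 applications, so that complex Layer-7 applications can benefit from DPDK's high-speed data path as well.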

Reposted from https://blog.csdn.net/cloudvtech

2. Building and Installing F-Stack

2.1 Environment Setup

OS:

CentOS-7-x86_64-Minimal-1611.iso VM硬件:

VM1 run f-stack nginx

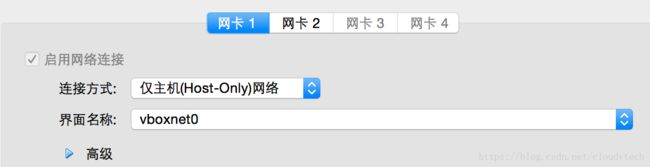

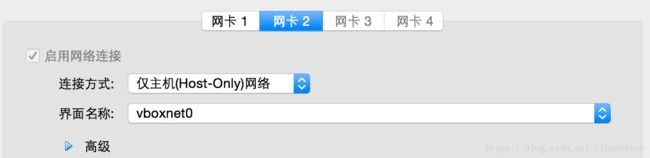

VM2 run send request to nginx in VM11 CPU, 2GB memory and 2 NICs in host-only mode 2.3 配置NIC

cat /etc/sysconfig/network-scripts/ifcfg-enp0s8

TYPE=Ethernet

BOOTPROTO=dhcp

DEFROUTE=yes

PEERDNS=yes

PEERROUTES=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_PEERDNS=yes

IPV6_PEERROUTES=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=enp0s8

UUID=4e48307c-cac0-491b-b98c-46f6fac26731

DEVICE=enp0s8

ONBOOT=yes

NIC for F-Stack (enp0s17):

cat /etc/sysconfig/network-scripts/ifcfg-enp0s17

TYPE="Ethernet"

BOOTPROTO="dhcp"

DEFROUTE="yes"

PEERDNS="yes"

PEERROUTES="yes"

IPV4_FAILURE_FATAL="no"

IPV6INIT="yes"

IPV6_AUTOCONF="yes"

IPV6_DEFROUTE="yes"

IPV6_PEERDNS="yes"

IPV6_PEERROUTES="yes"

IPV6_FAILURE_FATAL="no"

IPV6_ADDR_GEN_MODE="stable-privacy"

NAME="enp0s17"

UUID="13dedce7-1ff0-4d46-8590-43f5c6d1a151"

DEVICE="enp0s17"

ONBOOT="yes"

2.4 Install Dependencies

yum makecache

yum install -y epel-release

yum install -y git gcc openssl-devel bc wget htop net-tools nginx

wget ftp://ftp.pbone.net/mirror/ftp.scientificlinux.org/linux/scientific/7.0/x86_64/updates/security/kernel-devel-3.10.0-514.el7.x86_64.rpm

rpm -ivh kernel-devel-3.10.0-514.el7.x86_64.rpm

iptables -F

systemctl stop firewalld

systemctl disable firewalld

2.5 Build and Install
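Building DPDK here also compiles the igb_uio and rte_kni kernel modules that are loaded in 3.1, and that needs headers for the running kernel. This is why the kernel-devel package for 3.10.0-514, the kernel of the CentOS 7 1611 image, is installed in 2.4. A minimal pre-build check, assuming the module build looks in the usual /lib/modules/$(uname -r)/build location (kernel_build_dir_ok is a hypothetical helper name):

```shell
#!/usr/bin/env bash
# Sketch: confirm that headers for a kernel release are installed before
# building DPDK (the legacy make build also compiles igb_uio/rte_kni).
# Assumption: the module build uses <modules_root>/<release>/build, i.e. the
# usual /lib/modules/$(uname -r)/build symlink that kernel-devel satisfies.
# kernel_build_dir_ok is a hypothetical helper name.

kernel_build_dir_ok() {
    local release="${1:-$(uname -r)}"
    local modules_root="${2:-/lib/modules}"
    local dir="${modules_root}/${release}/build"

    # -d follows symlinks, so a dangling build link (no kernel-devel) fails.
    if [ -d "$dir" ]; then
        echo "ok: $dir"
        return 0
    fi
    echo "missing: $dir (install the matching kernel-devel package)" >&2
    return 1
}
```

On VM1, calling `kernel_build_dir_ok` with no arguments checks the running kernel; the optional arguments only exist so the check can be pointed at another release or directory.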

mkdir -p /data/f-stack/

git clone https://github.com/F-Stack/f-stack.git /data/f-stack

cd /data/f-stack/dpdk

make config T=x86_64-native-linuxapp-gcc

make

export FF_PATH=/data/f-stack

export FF_DPDK=/data/f-stack/dpdk/build

cd /data/f-stack/lib

make

cd /data/f-stack/app/nginx-1.11.10

./configure --prefix=/usr/local/nginx_fstack --with-ff_module

make

make install

cd /data/f-stack/tools

make

3. Running Nginx on F-Stack

3.1 Configure the Environment
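The `echo 1024` step in this section asks for 1024 hugepages of 2048 kB, i.e. 1024 × 2 MB = 2 GB, which equals the entire 2 GB of memory given to each VM. The kernel allocates as many pages as it can and may grant fewer than requested, so it is worth reading the pool size back before starting F-Stack. A sketch that reads /proc/meminfo (the helper names are hypothetical):

```shell
#!/usr/bin/env bash
# Sketch: check how many hugepages the kernel actually reserved.
# Reads a meminfo-style file (default /proc/meminfo); the file argument
# only exists so the helpers can be tried on a saved copy.
# hugepages_total / hugepages_mb / check_hugepages are hypothetical names.

hugepages_total() {
    awk '/^HugePages_Total:/ { print $2 }' "${1:-/proc/meminfo}"
}

# Memory held by the pool in MB: HugePages_Total * Hugepagesize(kB) / 1024.
hugepages_mb() {
    awk '
        /^HugePages_Total:/ { total = $2 }
        /^Hugepagesize:/    { size_kb = $2 }
        END { printf "%d\n", total * size_kb / 1024 }
    ' "${1:-/proc/meminfo}"
}

# Returns 1 when fewer pages than requested were allocated.
check_hugepages() {
    local requested="$1" meminfo="${2:-/proc/meminfo}" total
    total=$(hugepages_total "$meminfo")
    if [ "${total:-0}" -lt "$requested" ]; then
        echo "only ${total:-0} of ${requested} hugepages allocated" >&2
        return 1
    fi
    echo "ok: ${total} hugepages ($(hugepages_mb "$meminfo") MB)"
}
```

After the `echo 1024 > .../nr_hugepages` command, `check_hugepages 1024` reports whether the full request was honored; on a 2 GB VM a lower count (and thus a smaller request) is to be expected.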

Prepare hugepages, the DPDK kernel modules, and the F-Stack NIC:

echo 1024 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

mkdir -p /mnt/huge

mount -t hugetlbfs nodev /mnt/huge

modprobe uio

insmod /data/f-stack/dpdk/build/kmod/igb_uio.ko

insmod /data/f-stack/dpdk/build/kmod/rte_kni.ko

export myaddr=`ifconfig enp0s17 | grep "inet" | grep -v ":" | awk -F ' ' '{print $2}'`

export mymask=`ifconfig enp0s17 | grep "netmask" | awk -F ' ' '{print $4}'`

export mybc=`ifconfig enp0s17 | grep "broadcast" | awk -F ' ' '{print $6}'`

export myhw=`ifconfig enp0s17 | grep "ether" | awk -F ' ' '{print $2}'`

export mygw=`route -n | grep 0.0.0.0 | grep enp0s17 | grep UG | awk -F ' ' '{print $2}'`

export FF_PATH=/data/f-stack

export FF_DPDK=/data/f-stack/dpdk/build

ifconfig enp0s17 down

python /data/f-stack/dpdk/tools/dpdk-devbind.py --bind=igb_uio enp0s17

python /data/f-stack/dpdk/tools/dpdk-devbind.py --status

3.2 Run DPDK and Nginx
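The myaddr, mymask, mybc and mygw variables exported in 3.1 are not consumed by any of the commands shown. Presumably they are meant for the [port0] section of the config.ini listed next; the sketch below assumes exactly that and rewrites the addr, netmask, broadcast and gateway keys of [port0] in place. set_port0 is a hypothetical helper, and GNU sed (as shipped with CentOS 7) is assumed.

```shell
#!/usr/bin/env bash
# Sketch (assumption): copy the addressing captured in 3.1 into [port0].
# Only lines from "[port0]" up to the next "[section]" header are edited,
# so identically named keys in other sections stay untouched.
# set_port0 is a hypothetical helper; sed -i without a suffix is GNU sed.

set_port0() {
    local ini="$1" addr="$2" mask="$3" bcast="$4" gw="$5"
    local range='/^\[port0\]/,/^\[/'

    sed -i \
        -e "${range} s/^addr=.*/addr=${addr}/" \
        -e "${range} s/^netmask=.*/netmask=${mask}/" \
        -e "${range} s/^broadcast=.*/broadcast=${bcast}/" \
        -e "${range} s/^gateway=.*/gateway=${gw}/" \
        "$ini"
}
```

On VM1 this would be run as `set_port0 /data/f-stack/config.ini "$myaddr" "$mymask" "$mybc" "$mygw"` before config.ini is copied into the nginx directory.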

cat /data/f-stack/config.ini

[dpdk]

## Hexadecimal bitmask of cores to run on.

lcore_mask=1

channel=4

promiscuous=1

numa_on=1

## TCP segment offload, default: disabled.

tso=0

## HW vlan strip, default: enabled.

vlan_strip=1

# enabled port list

#

# EBNF grammar:

#

# exp ::= num_list {"," num_list}

# num_list ::= <num> | <range>

# range ::= <num>"-"<num>

# num ::= '0' | '1' | '2' | '3' | '4' | '5' | '6' | '7' | '8' | '9'

#

# examples

# 0-3 ports 0, 1,2,3 are enabled

# 1-3,4,7 ports 1,2,3,4,7 are enabled

port_list=0

## Port config section

## Correspond to dpdk.port_list's index: port0, port1...

[port0]

addr=10.0.2.15

netmask=255.255.255.0

broadcast=10.0.2.255

gateway=10.0.2.2

... ...

Run nginx:

cp /data/f-stack/config.ini /usr/local/nginx_fstack/

cp /data/f-stack/config.ini /usr/local/nginx_fstack/conf/f-stack.conf

/usr/local/nginx_fstack/sbin/nginx

3.3 Check the Status
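The status output in this section can be read against config.ini: lcore_mask is a hexadecimal bitmask of CPU cores, and port_list follows the EBNF given in the config comments. A sketch that decodes both into plain lists (the helper names are hypothetical):

```shell
#!/usr/bin/env bash
# Sketch: decode lcore_mask and port_list from config.ini into plain lists.
# lcore_mask_cores and expand_port_list are hypothetical helper names.

# lcore_mask: hexadecimal bitmask, bit N set => CPU core N enabled.
lcore_mask_cores() {
    local mask=$(( 16#${1#0x} )) core=0
    local -a cores=()
    while (( mask > 0 )); do
        if (( mask & 1 )); then
            cores+=("$core")
        fi
        mask=$(( mask >> 1 ))
        core=$(( core + 1 ))
    done
    echo "${cores[*]}"
}

# port_list: num_list {"," num_list}, where num_list is <num> or <num>"-"<num>.
expand_port_list() {
    local -a items ports=()
    local item lo hi p
    IFS=',' read -ra items <<< "$1"
    for item in "${items[@]}"; do
        if [[ "$item" == *-* ]]; then
            lo=${item%-*}
            hi=${item#*-}
            for (( p = lo; p <= hi; p++ )); do
                ports+=("$p")
            done
        else
            ports+=("$item")
        fi
    done
    echo "${ports[*]}"
}
```

With the values used here, `lcore_mask_cores 1` gives core 0 and `expand_port_list 0` gives port 0, which matches the single f-stack-0 interface reported by F-Stack's ifconfig. Assuming F-Stack's nginx starts one worker per enabled lcore, it also matches the single nginx worker seen in section 4.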

Processes


CPU

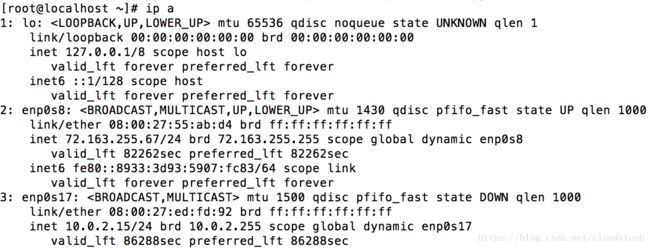

ip

[root@localhost conf]# ip a

1: lo: mtu 65536 qdisc noqueue state UNKNOWN qlen 1

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: enp0s8: mtu 1430 qdisc pfifo_fast state UP qlen 1000

link/ether 08:00:27:55:ab:d4 brd ff:ff:ff:ff:ff:ff

inet 72.163.255.67/24 brd 72.163.255.255 scope global dynamic enp0s8

valid_lft 82075sec preferred_lft 82075sec

inet6 2001:420:588b:1306:4967:a034:166:ce0f/128 scope global dynamic

valid_lft 1209443sec preferred_lft 604643sec

inet6 fe80::8933:3d93:5907:fc83/64 scope link

valid_lft forever preferred_lft forever

ifconfig

[root@localhost conf]# /data/f-stack/tools/ifconfig/ifconfig

EAL: WARNING: Address Space Layout Randomization (ASLR) is enabled in the kernel.

EAL: This may cause issues with mapping memory into secondary processes

lo0: flags=8008 metric 0 mtu 16384

options=600003

groups: lo

f-stack-0: flags=8843 metric 0 mtu 1500

ether 8:0:27:ed:fd:92

inet 10.0.2.15 netmask 0xffffff00 broadcast 10.0.2.255

netstat

[root@localhost conf]# /data/f-stack/tools/netstat/netstat -an

EAL: WARNING: Address Space Layout Randomization (ASLR) is enabled in the kernel.

EAL: This may cause issues with mapping memory into secondary processes

Active Internet connections (including servers)

Proto Recv-Q Send-Q Local Address Foreign Address (state)

tcp4 0 0 *.80 *.* LISTEN

udp4 0 0 *.* *.*

arp

[root@localhost conf]# /data/f-stack/tools/arp/arp -an

EAL: WARNING: Address Space Layout Randomization (ASLR) is enabled in the kernel.

EAL: This may cause issues with mapping memory into secondary processes

? (10.0.2.15) at 8:0:27:ed:fd:92 on f-stack-0 permanent [ethernet]

? (10.0.2.2) at 52:54:0:12:35:2 on f-stack-0 expires in 510 seconds [ethernet]

4. Access Between the VMs

VM1:

[root@fstack-dev1 ~]# ps -ef | grep nginx

root 17511 1 0 00:02 ? 00:00:00 nginx: master process /usr/local/nginx_fstack/sbin/nginx

root 17513 17511 91 00:02 ? 00:00:11 nginx: worker process

root 17535 2163 0 00:02 pts/0 00:00:00 grep --color=auto nginx

/data/f-stack/tools/ifconfig/ifconfig

EAL: WARNING: Address Space Layout Randomization (ASLR) is enabled in the kernel.

EAL: This may cause issues with mapping memory into secondary processes

lo0: flags=8008 metric 0 mtu 16384

options=600003

groups: lo

f-stack-0: flags=8843 metric 0 mtu 1500

ether 8:0:27:ed:fd:92

inet 192.168.56.101 netmask 0xffffff00 broadcast 192.168.56.255

VM2:

[root@fstack-dev2 wrk-master]# arp -an

? (192.168.56.101) at 08:00:27:ed:fd:92 [ether] on enp0s10

? (192.168.56.100) at 08:00:27:65:b2:2a [ether] on enp0s10

? (192.168.56.1) at 0a:00:27:00:00:00 [ether] on enp0s10

? (72.163.255.1) at 00:08:e3:ff:fd:98 [ether] on enp0s9

? (72.163.255.11) at 6c:41:6a:ff:1f:7f [ether] on enp0s9

Accessing the F-Stack-based nginx on VM1 from VM2:

[root@fstack-dev2 wrk-master]# curl http://192.168.56.101/

Welcome to nginx!

Welcome to nginx!

If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.

For online documentation and support please refer to

nginx.org.

Commercial support is available at

nginx.com.

Thank you for using nginx.
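In the listings above, VM2's ARP table maps 192.168.56.101 to 08:00:27:ed:fd:92, while F-Stack's ifconfig on VM1 reports f-stack-0 as 8:0:27:ed:fd:92. The FreeBSD-derived F-Stack tools drop leading zeros, so these are the same address, which confirms that VM2 is reaching the DPDK-bound NIC. A small helper for that comparison (normalize_mac and same_mac are hypothetical names):

```shell
#!/usr/bin/env bash
# Sketch: compare MAC addresses printed by F-Stack's FreeBSD-derived tools
# (leading zeros dropped, e.g. 8:0:27:ed:fd:92) with Linux arp output
# (08:00:27:ed:fd:92). normalize_mac/same_mac are hypothetical names.

normalize_mac() {
    local IFS=':' octet
    local -a out=()
    for octet in $1; do
        # printf reads the 0x-prefixed octet as hex and prints two lowercase digits.
        out+=("$(printf '%02x' "0x${octet}")")
    done
    echo "${out[*]}"   # joined with ':' because IFS is ':' in this function
}

same_mac() {
    [ "$(normalize_mac "$1")" = "$(normalize_mac "$2")" ]
}
```

For example, `same_mac 8:0:27:ed:fd:92 08:00:27:ed:fd:92` succeeds, while comparing against 08:00:27:65:b2:2a (the other host-only neighbor in VM2's ARP table) fails.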

5. Performance Test

5.1 Install wrk on VM2

git clone https://github.com/wg/wrk.git wrk

cd wrk

make

5.2 Start the Regular (Kernel-Stack) Nginx on VM1 and Test

Running wrk on VM2 gives the following results:

[root@fstack-dev2 wrk-master]# ./wrk -t10 -c 1k -d30s http://192.168.56.101/index.html

Running 30s test @ http://192.168.56.101/index.html

10 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 59.48ms 169.33ms 1.99s 92.64%

Req/Sec 1.49k 0.92k 7.89k 74.29%

432861 requests in 30.10s, 350.87MB read

Socket errors: connect 0, read 299, write 0, timeout 413

Requests/sec: 14380.66

Transfer/sec: 11.66MB

5.3 Start the F-Stack-based Nginx on VM1 and Test

start.sh

echo 1024 > /sys/kernel/mm/hugepages/hugepages-2048kB/nr_hugepages

mkdir -p /mnt/huge

mount -t hugetlbfs nodev /mnt/huge

modprobe uio

insmod /data/f-stack/dpdk/build/kmod/igb_uio.ko

insmod /data/f-stack/dpdk/build/kmod/rte_kni.ko

ifconfig enp0s17 down

python /data/f-stack/dpdk/tools/dpdk-devbind.py --bind=igb_uio enp0s17

python /data/f-stack/dpdk/tools/dpdk-devbind.py --status

/usr/local/nginx_fstack/sbin/nginx

sleep 3

ps -ef | grep nginx

Running wrk on VM2 gives the following results:

[root@fstack-dev2 wrk-master]# ./wrk -t10 -c 1k -d30s http://192.168.56.101/index.html

Running 30s test @ http://192.168.56.101/index.html

10 threads and 1000 connections

Thread Stats Avg Stdev Max +/- Stdev

Latency 24.83ms 35.61ms 175.35ms 78.34%

Req/Sec 3.40k 604.47 10.66k 76.76%

1013642 requests in 30.10s, 822.60MB read

Requests/sec: 33679.78

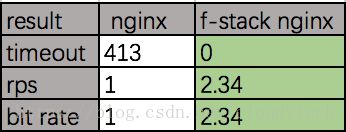

Transfer/sec: 27.33MB

5.4 Result Analysis
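From the two runs above, throughput rises from 14380.66 to 33679.78 requests/s (about 2.34x), the transfer rate from 11.66 MB/s to 27.33 MB/s, and average latency falls from 59.48 ms to 24.83 ms, with maximum latency dropping from 1.99 s to 175.35 ms. The kernel-stack run also reported socket errors (read 299, timeout 413), whereas the F-Stack run printed no Socket errors line. These figures come from a single 30-second run per configuration on 1-CPU VMs. To repeat the comparison, the ratio can be computed from saved wrk outputs; the file names and helper names below are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: compare two saved wrk runs by their "Requests/sec:" line.
# File names are hypothetical; capture a run with: ./wrk ... | tee kernel.txt
# wrk_rps/wrk_ratio are hypothetical helper names.

wrk_rps() {
    # Matching on the first field also tolerates leading whitespace.
    awk '$1 == "Requests/sec:" { print $2 }' "$1"
}

# Prints new/base throughput with two decimals.
wrk_ratio() {
    local base new
    base=$(wrk_rps "$1")
    new=$(wrk_rps "$2")
    awk -v b="$base" -v n="$new" 'BEGIN { printf "%.2f\n", n / b }'
}
```

With the kernel-stack output saved as kernel.txt and the F-Stack output as fstack.txt, `wrk_ratio kernel.txt fstack.txt` prints 2.34 for the numbers above.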
