k8s Study Notes (Kubernetes Basic Concepts, Runtime Architecture, Layered Architecture, Installation and Deployment)

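Contents

- Official Documentation

- Key Concepts

- Runtime Architecture

- Layered Architecture

- Related Commands

  - kubeadm

- Kubernetes Installation and Deployment

  - Installation Environment

  - Installation Process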

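Official Documentation

A few frequently used links:

- Home page (Chinese): https://kubernetes.io/zh/

- Documentation overview: https://kubernetes.io/zh/docs/home/

- Standardized glossary (explanations of keywords and technical terms): https://kubernetes.io/zh/docs/reference/glossary/?all=true#term-kubelet

- k8s Chinese community: https://www.kubernetes.org.cn/

- k8s Chinese community documentation: http://docs.kubernetes.org.cn/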

Key Concepts

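- Kubernetes: a portable, extensible, open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation. Kubernetes has a large, rapidly growing ecosystem, and Kubernetes services, support, and tools are widely available.

- Meaning of the name: Kubernetes comes from Greek and means "helmsman" or "pilot". It is also called k8s, because there are 8 letters between the initial k and the final s.

- Kubeadm: a tool for quickly installing Kubernetes and setting up a secure, stable cluster. kubeadm can install both the control-plane and the worker-node components.

- Kubectl: the command-line tool for communicating with the Kubernetes API server. kubectl can create, inspect, update, and delete Kubernetes objects.

- Kubernetes version numbers take the form x.y.z, where x is the major version, y the minor version, and z the patch version. The format follows Semantic Versioning.

- Advantages of Kubernetes:

  - Service discovery and load balancing: Kubernetes can expose a container using a DNS name or its own IP address. If traffic to a container is high, Kubernetes can load-balance and distribute the network traffic so that the deployment stays stable.

  - Storage orchestration: Kubernetes lets you automatically mount a storage system of your choice, such as local storage or a public cloud provider.

  - Automated rollouts and rollbacks: you describe the desired state of your deployed containers, and Kubernetes changes the actual state to the desired state at a controlled rate. For example, you can have Kubernetes create new containers for your deployment, remove existing containers, and adopt all their resources into the new containers.

  - Automatic bin packing: you tell Kubernetes how much CPU and memory (RAM) each container needs. When containers specify resource requests, Kubernetes can make better decisions about managing their resources and fitting them onto nodes.

  - Self-healing: Kubernetes restarts containers that fail, replaces containers, kills containers that don't respond to your user-defined health check, and doesn't advertise them to clients until they are ready to serve.

  - Secret and configuration management: Kubernetes lets you store and manage sensitive information such as passwords, OAuth tokens, and SSH keys. You can deploy and update secrets and application configuration without rebuilding container images and without exposing secrets in your stack configuration.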
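To make the x.y.z version format concrete, here is a minimal Bash sketch that splits a version string as it appears in the VERSION column of kubectl get node (for example v1.17.3) into its three numbers. The function name parse_k8s_version is hypothetical and not part of any Kubernetes tool, and it deliberately accepts only plain x.y.z.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of kubectl/kubeadm): split a Kubernetes
# version string such as "v1.17.3" into its x (major), y (minor) and
# z (patch) numbers. Only plain x.y.z is accepted; pre-release suffixes
# such as "-beta.1" are rejected by this sketch.
parse_k8s_version() {
  local v="${1#v}"   # drop an optional leading "v"
  if [[ ! "$v" =~ ^([0-9]+)\.([0-9]+)\.([0-9]+)$ ]]; then
    echo "invalid version: $1" >&2
    return 1
  fi
  # BASH_REMATCH[1..3] hold the captured major, minor and patch fields.
  echo "${BASH_REMATCH[1]} ${BASH_REMATCH[2]} ${BASH_REMATCH[3]}"
}

parse_k8s_version v1.17.3   # prints: 1 17 3
```

Semantic Versioning itself also allows pre-release and build suffixes; a fuller parser would have to handle those as well.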

Runtime Architecture

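- Kubernetes components fall into the following categories:

  - Master: master components provide the cluster's control plane. They make global decisions about the cluster (for example, scheduling) and detect and respond to cluster events (for example, starting a new pod when a deployment's replicas field is not satisfied). Master components can run on any node in the cluster.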

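    - kube-apiserver: the component on the master that exposes the Kubernetes API; it is the front end of the Kubernetes control plane.

    - etcd: a consistent and highly available key-value store, used as the backing store for all Kubernetes cluster data.

    - kube-scheduler: a master component that watches for newly created Pods that have no node assigned and selects a node for them to run on. Scheduling decisions take into account the resource requirements of individual Pods and groups of Pods, hardware/software/policy constraints, affinity and anti-affinity specifications, data locality, inter-workload interference, and deadlines.

    - kube-controller-manager: the master component that runs controllers. Logically, each controller is a separate process, but to reduce complexity they are all compiled into a single binary and run in a single process. These controllers include:

      - Node Controller: responsible for noticing and responding when nodes go down.

      - Replication Controller: responsible for maintaining the correct number of Pods for every replication controller object in the system.

      - Endpoints Controller: populates the Endpoints objects (that is, joins Services and Pods).

      - Service Account & Token Controllers: create default accounts and API access tokens for new namespaces.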

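    - cloud-controller-manager: runs controllers that interact with the underlying cloud provider. The cloud-specific controller loops in kube-controller-manager can be disabled by starting kube-controller-manager with the --cloud-provider flag set to external. The main controllers are:

      - Node Controller: checks the cloud provider to determine whether a node has been deleted in the cloud after it stops responding.

      - Route Controller: sets up routes in the underlying cloud infrastructure.

      - Service Controller: creates, updates, and deletes cloud provider load balancers.

      - Volume Controller: creates, attaches, and mounts volumes, and interacts with the cloud provider to orchestrate volumes.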

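  - Node: node components run on every node in the cluster, maintaining running Pods and providing the Kubernetes runtime environment.

    - kubelet: an agent that runs on each node in the cluster and makes sure that containers are running in a Pod. The kubelet takes a set of PodSpecs provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. The kubelet does not manage containers that were not created by Kubernetes.

    - kube-proxy: a network proxy that runs on each node in the cluster and implements part of the Kubernetes Service concept. kube-proxy maintains network rules on the node; these rules allow network communication to Pods from network sessions inside or outside the cluster. kube-proxy uses the operating system's packet filtering layer if there is one and it is available; otherwise, kube-proxy forwards the traffic itself.

    - Container Runtime: the software responsible for running containers. Kubernetes supports several container runtimes: Docker, containerd, cri-o, rktlet, and any implementation of the Kubernetes CRI (Container Runtime Interface).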

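  - Addons: plugins that extend the functionality of Kubernetes. Addon categories:

    - Networking and network policy

      - There are many of these; I am noting them here for later study. For details see: https://kubernetes.io/zh/docs/concepts/cluster-administration/addons/

      - Flannel is an overlay network provider that can be used with Kubernetes.

    - Service discovery

      - CoreDNS is a flexible, extensible DNS server that can be installed as the in-cluster DNS for Pods.

    - Visualization and management

      - Dashboard is a web console for Kubernetes.

      - Weave Scope is a graphical tool for viewing your containers, pods, services, and so on. Use it with a Weave Cloud account, or host the UI yourself.

    - Infrastructure

      - KubeVirt is an add-on for running virtual machines on Kubernetes, usually on bare-metal clusters.

    - Container resource monitoring: records generic time-series metrics about containers in a central database and provides a UI for browsing that data.

      - Resource metrics pipeline

      - Full metrics pipeline

    - Cluster-level logging: saves container logs to a central log store that provides a search and browsing interface.

      - Basic logging: use the kubectl logs command to get basic log output.

      - Node-level logging: anything a containerized application writes to stdout and stderr is captured by the container engine and redirected somewhere.

      - Cluster-level logging architecture: builds on node-level logging by adding a sidecar container with a logging agent that acts as the log-management backend.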

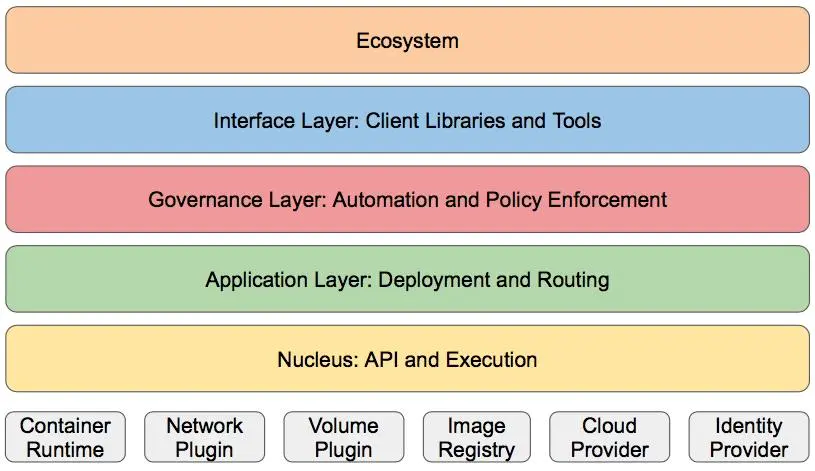
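In a kubeadm-built cluster such as the one installed later in this article, the four master components above (etcd, kube-apiserver, kube-controller-manager, kube-scheduler) run as static Pods whose manifests kubeadm writes to /etc/kubernetes/manifests, while the kubelet runs as a systemd service and kube-proxy runs as a DaemonSet. A minimal sketch that checks those manifests on a master node; the function name is hypothetical, and the directory argument exists only so the check can be tried elsewhere.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which control-plane static Pod manifests are
# present. kubeadm writes them to /etc/kubernetes/manifests by default;
# another directory can be passed as the first argument.
check_control_plane_manifests() {
  local dir="${1:-/etc/kubernetes/manifests}"
  local missing=0 c
  for c in etcd kube-apiserver kube-controller-manager kube-scheduler; do
    if [[ -f "$dir/$c.yaml" ]]; then
      echo "present: $c"
    else
      echo "missing: $c"
      missing=1
    fi
  done
  # Non-zero exit status if any manifest is absent.
  return "$missing"
}

# Usage on the master (node1): check_control_plane_manifests
```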

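Layered Architecture

The design and functionality of Kubernetes form a layered architecture similar to that of Linux:

- Core layer: the most essential Kubernetes functionality. Externally it exposes APIs for building higher-level applications; internally it provides a pluggable environment for running applications.

- Application layer: deployment (stateless applications, stateful applications, batch jobs, clustered applications, and so on) and routing (service discovery, DNS resolution, and so on).

- Management layer: system metrics (such as infrastructure, container, and network metrics), automation (such as autoscaling and dynamic provisioning), and policy management (RBAC, Quota, PSP, NetworkPolicy, and so on).

- Interface layer: the kubectl command-line tool, client SDKs, and cluster federation.

- Ecosystem: the large ecosystem of container cluster management and scheduling built on top of the interface layer, which falls into two categories:

  - Outside Kubernetes: logging, monitoring, configuration management, CI, CD, workflow, FaaS, OTS applications, ChatOps, and so on.

  - Inside Kubernetes: CRI, CNI, CVI, image registries, Cloud Provider, configuration and management of the cluster itself, and so on.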

Related Commands

kubeadm

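Usage:

kubeadm [command]

- kubeadm alpha: preview a set of new features in order to gather feedback from the community

- kubeadm config: if you initialized your cluster with kubeadm v1.7.x or earlier, you need to configure the cluster before you can use kubeadm upgrade

- kubeadm help: help information

- kubeadm init: bootstrap a Kubernetes master (control-plane) node

- kubeadm join: bootstrap a Kubernetes worker node and join it to the cluster

- kubeadm reset: revert the changes made to the node by kubeadm init or kubeadm join

- kubeadm token: manage the tokens used by kubeadm join

- kubeadm upgrade: upgrade a Kubernetes cluster to a newer version

- kubeadm version: print the kubeadm version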

Kubernetes Installation and Deployment

Installation Environment

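| Host | OS | IP | Role |
|---|---|---|---|
| node1 | rhel7.5 | 192.168.27.11 | Docker (18.09.6) installed, can access the private registry; master node of the deployment |
| node2 | rhel7.5 | 192.168.27.12 | Docker (18.09.6) installed, can access the private registry; worker node |
| node3 | rhel7.5 | 192.168.27.13 | Docker (18.09.6) installed, can access the private registry; worker node |
| repository | rhel7.5 | 192.168.27.12 | Runs a Harbor private registry, used as the image registry for cluster operations |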

Docker is installed so that Kubernetes can use it as the container runtime.

Installation Process

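- Edit daemon.json on all nodes. Docker's default cgroup driver is cgroupfs, while the driver recommended for Kubernetes is systemd; if Docker and the kubelet use different drivers, errors occur. To avoid this, both sides should use the same driver, so here Docker's driver is switched to systemd, following the official documentation.

Official documentation: https://kubernetes.io/zh/docs/setup/production-environment/container-runtimes/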

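The exec-opts entry is the key setting and must be present; the other entries can be added as needed. JSON does not allow comments, so keep notes like this one out of the file itself, otherwise Docker will fail to parse it.

[root@node1 docker]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://reg.mydocker.com"],

"exec-opts": ["native.cgroupdriver=systemd"],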

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

[root@node1 docker]# systemctl daemon-reload

[root@node1 docker]# systemctl restart docker.service
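Once Docker has been restarted, the driver it actually uses can be read back with docker info --format '{{.CgroupDriver}}'. For checking the file itself before a restart, a minimal sketch follows; the function name is hypothetical, and it is a plain text match rather than a real JSON parse.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if a Docker daemon.json requests the systemd
# cgroup driver via exec-opts. Text match only, not a full JSON parse.
daemon_json_uses_systemd() {
  local file="${1:-/etc/docker/daemon.json}"
  grep -q '"native\.cgroupdriver=systemd"' "$file"
}

# After "systemctl restart docker.service", the live value can be checked with:
#   docker info --format '{{.CgroupDriver}}'   # should print systemd
```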

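- Disable swap on every node: swapoff -a turns it off immediately, and commenting out the swap entry in /etc/fstab keeps it off after a reboot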

[root@node1 ~]# swapoff -a

[root@node1 ~]# vim /etc/fstab

#/dev/mapper/rhel-swap swap swap defaults 0 0
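Editing /etc/fstab in vim works fine on three nodes; for scripting the same step, here is a minimal sketch that comments out every active swap line (third fstab field equal to swap) and keeps a backup copy. The function name and the .bak suffix are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style
# file so swap stays disabled after a reboot (swapoff -a alone only lasts
# until then). The original file is copied to <file>.bak first.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "$fstab.bak"
  # Skip lines that are already comments; prefix "#" to lines whose
  # third field (the filesystem type) is "swap"; print everything else as-is.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}

# Usage on each node:
#   swapoff -a && comment_out_swap /etc/fstab
#   cat /proc/swaps    # only the header line should remain
```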

- On every node, set the following sysctl network parameters, which the cluster's networking requires

[root@node1 ~]# vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.ipv4.ip_forward=1

[root@node1 ~]# sysctl --system
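According to the Kubernetes documentation, the two net.bridge.* keys only exist once the br_netfilter kernel module is loaded (modprobe br_netfilter, and list it in a file under /etc/modules-load.d/ to load it at boot). A minimal sketch that checks the drop-in file sets all three keys to 1; the function name is hypothetical, and it inspects the file only, not the live kernel values.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a sysctl drop-in file sets each of the three
# keys used above to 1. It inspects the file only; live values can be read with
# e.g. "sysctl -n net.bridge.bridge-nf-call-iptables" once br_netfilter is loaded.
check_k8s_sysctl_conf() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}" key val rc=0
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.ipv4.ip_forward; do
    # Split each line on "=", strip blanks, and keep the value from the last
    # matching line (later lines in the file override earlier ones).
    val=$(awk -F= -v k="$key" '
      { gsub(/[ \t]/, "", $1); gsub(/[ \t]/, "", $2) }
      $1 == k { v = $2 }
      END { print v }' "$conf")
    if [[ "$val" != "1" ]]; then
      echo "not set to 1: $key"
      rc=1
    fi
  done
  return "$rc"
}
```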

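- On every node, configure the Aliyun yum repository (the VMs need Internet access), install the three packages kubelet, kubeadm, and kubectl, then start the kubelet service and enable it at boot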

[root@node1 ~]# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

[root@node1 ~]# yum install kubelet kubeadm kubectl -y

[root@node1 ~]# systemctl enable --now kubelet.service
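The yum install above installs whatever versions are newest in the mirror at that moment, while the outputs in this article come from v1.17.3. To keep kubelet, kubeadm, and kubectl at one matching version on every node, the packages can be requested with yum's name-version form; a small sketch, where the helper name is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print yum package specs pinned to one Kubernetes
# version, e.g. "kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3".
k8s_pinned_packages() {
  local v="${1#v}"   # accept both "v1.17.3" and "1.17.3"
  echo "kubelet-$v kubeadm-$v kubectl-$v"
}

# Usage on each node (unquoted on purpose so yum receives three arguments):
#   yum install -y $(k8s_pinned_packages v1.17.3)
#   systemctl enable --now kubelet.service
```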

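- Initialize the master node

Before initializing, the required images have to be pulled. The default image registry is hosted outside China and is usually unreachable from there, so switch to the Aliyun mirror.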

[root@node1 ~]# kubeadm config print init-defaults

……

imageRepository: k8s.gcr.io

……

# The kubeadm default configuration contains this entry: the image registry is k8s.gcr.io, which cannot be reached here

[root@node1 ~]# kubeadm config images list

W0223 00:07:22.549605 1592 validation.go:28] Cannot validate kube-proxy cotor is available

W0223 00:07:22.549654 1592 validation.go:28] Cannot validate kubelet confi is available

k8s.gcr.io/kube-apiserver:v1.17.3

k8s.gcr.io/kube-controller-manager:v1.17.3

k8s.gcr.io/kube-scheduler:v1.17.3

k8s.gcr.io/kube-proxy:v1.17.3

k8s.gcr.io/pause:3.1

k8s.gcr.io/etcd:3.4.3-0

k8s.gcr.io/coredns:1.6.5

[kubeadm@node1 add-ons]$ kubeadm config images list --image-repository registry.aliyuncs.com/google_containers

W0223 01:57:06.960018 7598 validation.go:28] Cannot validate kube-proxy config - no validator is available

W0223 01:57:06.960511 7598 validation.go:28] Cannot validate kubelet config - no validator is available

registry.aliyuncs.com/google_containers/kube-apiserver:v1.17.3

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.17.3

registry.aliyuncs.com/google_containers/kube-scheduler:v1.17.3

registry.aliyuncs.com/google_containers/kube-proxy:v1.17.3

registry.aliyuncs.com/google_containers/pause:3.1

registry.aliyuncs.com/google_containers/etcd:3.4.3-0

registry.aliyuncs.com/google_containers/coredns:1.6.5

# Check that the images on the Aliyun mirror match the ones kubeadm requires

[root@node1 ~]# kubeadm config images pull --image-repository registry.aliyuncs.com/google_containers

# Pull the required images

[root@node1 ~]# docker images

REPOSITORY TAG IMAGE ID

registry.aliyuncs.com/google_containers/kube-proxy v1.17.3 ae853e93

registry.aliyuncs.com/google_containers/kube-apiserver v1.17.3 90d27391

registry.aliyuncs.com/google_containers/kube-controller-manager v1.17.3 b0f1517c

registry.aliyuncs.com/google_containers/kube-scheduler v1.17.3 d109c082

registry.aliyuncs.com/google_containers/coredns 1.6.5 70f31187

registry.aliyuncs.com/google_containers/etcd 3.4.3-0 303ce5db

registry.aliyuncs.com/google_containers/pause 3.1 da86e6ba

# List the pulled images
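The two image lists above differ only in the registry prefix. A minimal sketch that makes that mapping explicit is below; the function name is hypothetical. The commented usage shows one alternative to the article's approach (not what was done here): pull each image from the mirror and retag it with its k8s.gcr.io name, so that the images kubeadm expects by default are already present locally.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a default kubeadm image name (k8s.gcr.io/...) to the
# Aliyun mirror used in this article. Only the registry prefix changes; the
# image name and tag are kept. Other images are returned unchanged.
to_aliyun_image() {
  local image="$1"
  local mirror="registry.aliyuncs.com/google_containers"
  if [[ "$image" == k8s.gcr.io/* ]]; then
    echo "${mirror}/${image#k8s.gcr.io/}"
  else
    echo "$image"
  fi
}

# Usage sketch for the pull-and-retag alternative (needs Docker and network):
#   kubeadm config images list | grep '^k8s.gcr.io/' | while read -r img; do
#     docker pull "$(to_aliyun_image "$img")" &&
#       docker tag "$(to_aliyun_image "$img")" "$img"
#   done
```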

[root@node1 ~]# kubeadm init --pod-network-cidr=10.244.0.0/16 --image-repository registry.aliyuncs.com/google_containers

# Initialize the control-plane node

# --pod-network-cidr=10.244.0.0/16 is chosen to match the flannel plugin that will be added later

……

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.27.11:6443 --token kb3of5.77ez8xh3pv1q2g26 \

--discovery-token-ca-cert-hash sha256:49ae0dde8ff27dddaca8ad1885aecdb24e8c2144d52cf778825345f168f12211

# The output above means the master (control-plane) node has been initialized

# Running the kubeadm join command printed above on another node joins it to the cluster, similar to Docker Swarm
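kubeadm init can also read its settings from a configuration file passed with --config instead of individual flags. The sketch below writes a file intended to be equivalent to the flags used above, assuming the kubeadm.k8s.io/v1beta2 configuration API used by kubeadm 1.17; the function name and the file name kubeadm-config.yaml are hypothetical. kubeadm refuses to combine --config with most of these flags, so pass one or the other.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a kubeadm ClusterConfiguration equivalent to
#   kubeadm init --pod-network-cidr=10.244.0.0/16 \
#     --image-repository registry.aliyuncs.com/google_containers
# Assumes the kubeadm.k8s.io/v1beta2 API used by kubeadm 1.17.
write_kubeadm_config() {
  local out="$1"
  local version="${2:-v1.17.3}"
  local repo="${3:-registry.aliyuncs.com/google_containers}"
  local pod_cidr="${4:-10.244.0.0/16}"
  cat > "$out" <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: ${version}
imageRepository: ${repo}
networking:
  podSubnet: ${pod_cidr}
EOF
}

# Usage on the master:
#   write_kubeadm_config kubeadm-config.yaml
#   kubeadm config images pull --config kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml
```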

[root@node1 ~]# kubeadm token list

TOKEN TTL EXPIRES USAGES DESCRIPTION EXTRA GROUPS

kb3of5.77ez8xh3pv1q2g26 23h 2020-02-24T00:58:12+08:00 authentication,signing The default bootstrap token generated by 'kubeadm init'. system:bootstrappers:kubeadm:default-node-token

# Bootstrap tokens are time-limited: by default a token expires after 24 hours

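Notes:

1. After a token expires, create a new one with kubeadm token create.

2. If initializing the master node fails, run kubeadm reset to clear the leftover state before trying again, to avoid conflicts.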
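Bootstrap tokens such as kb3of5.77ez8xh3pv1q2g26 above have a fixed shape: six lowercase letters or digits, a dot, then sixteen more, i.e. the regular expression [a-z0-9]{6}\.[a-z0-9]{16} given in the Kubernetes bootstrap token documentation. A minimal sketch that checks a token against that shape before pasting it into a join command; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the argument has the bootstrap-token shape
# <6 chars>.<16 chars>, using only lowercase letters and digits.
is_bootstrap_token() {
  [[ "$1" =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

if is_bootstrap_token "kb3of5.77ez8xh3pv1q2g26"; then
  echo "valid"
fi
```

When a token has expired, kubeadm token create --print-join-command creates a new token and prints a complete kubeadm join command, including the CA certificate hash.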

- Following the hints printed at the end of initialization, create a regular user, then create the directory and copy the kubeconfig file

[root@node1 ~]# useradd kubeadm

# Any user name will do

[root@node1 ~]# visudo

## Allow root to run any commands anywhere

root ALL=(ALL) ALL

kubeadm ALL=(ALL) NOPASSWD: ALL

# Add the kubeadm line below the root entry: it gives kubeadm sudo rights without a password prompt; adjust to your needs

[root@node1 ~]# su - kubeadm

# Log in as the user kubeadm

[kubeadm@node1 ~]$ mkdir -p $HOME/.kube

[kubeadm@node1 ~]$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[kubeadm@node1 ~]$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Run the commands suggested by kubeadm init

- Add the other nodes (node2, node3) to the cluster

[root@node2 ~]# kubeadm join 192.168.27.11:6443 --token kb3of5.77ez8xh3pv1q2g26 --discovery-token-ca-cert-hash sha256:49ae0dde8ff27dddaca8ad1885aecdb24e8c2144d52cf778825345f168f12211

W0223 01:20:43.573088 15204 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane s

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.17" Confi

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

[root@node3 ~]# kubeadm join 192.168.27.11:6443 --token kb3of5.77ez8xh3pv1q2g26 --discovery-token-ca-cert-hash sha256:49ae0dde8ff27dddaca8ad1885aecdb24e8c2144d52cf778825345f168f12211

# node3 prints the same output as node2

# node2 and node3 have now joined the cluster

# As the output suggests, check the nodes from the master

[kubeadm@node1 ~]$ kubectl get node

NAME STATUS ROLES AGE VERSION

node1 NotReady master 30m v1.17.3

node2 NotReady 7m38s v1.17.3

node3 NotReady 7m14s v1.17.3

kubectl command completion does not work out of the box. To enable it, add a line to ~/.bashrc by running echo "source <(kubectl completion bash)" >> ~/.bashrc, then reload the file with source ~/.bashrc.
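Running that echo command twice appends the line twice. A minimal sketch of an idempotent variant, which appends the line only if it is not already present; the function name is hypothetical. The completion script also depends on the bash-completion package (yum install bash-completion on RHEL).

```shell
#!/usr/bin/env bash
# Hypothetical helper: append the kubectl completion line to a bashrc file only
# if that exact line is not already there, so re-running it is harmless.
enable_kubectl_completion() {
  local rc="${1:-$HOME/.bashrc}"
  local line='source <(kubectl completion bash)'
  # -q quiet, -x match the whole line, -F treat the pattern as a fixed string
  grep -qxF "$line" "$rc" 2>/dev/null || echo "$line" >> "$rc"
}

# Usage: enable_kubectl_completion && source ~/.bashrc
```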

- The nodes are NotReady. Inspecting the cluster shows why: no pod network has been configured yet

[kubeadm@node1 ~]$ kubectl get ns

NAME STATUS AGE

default Active 34m

kube-node-lease Active 34m

kube-public Active 34m

kube-system Active 34m

# List the namespaces; the system components all live in kube-system

[kubeadm@node1 ~]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-9d85f5447-fktb7 0/1 Pending 0 34m

coredns-9d85f5447-wvx48 0/1 Pending 0 34m

etcd-node1 1/1 Running 0 34m

kube-apiserver-node1 1/1 Running 0 34m

kube-controller-manager-node1 1/1 Running 0 34m

kube-proxy-98v44 1/1 Running 0 12m

kube-proxy-jd6gd 1/1 Running 0 11m

kube-proxy-wggcv 1/1 Running 0 34m

kube-scheduler-node1 1/1 Running 0 34m

# The details show the network setup is incomplete: the coredns Pods stay Pending

- Add the flannel network plugin

YAML manifest: https://github.com/coreos/flannel/blob/master/Documentation/kube-flannel.yml

[kubeadm@node1 add-ons]$ \vi kube-flannel.yml

……

Paste the 400-odd lines from the URL above into the file

……

# Create the flannel YAML file

[kubeadm@node1 add-ons]$ kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created

# Apply the flannel plugin
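The Network value in the net-conf.json section of kube-flannel.yml has to match the --pod-network-cidr passed to kubeadm init (10.244.0.0/16 here), which is why that CIDR was chosen. A minimal sketch that reads the value from the local manifest and compares it; the function name is hypothetical, and the text matching assumes the manifest's "Network": "<cidr>" layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Network" value from the net-conf.json part of
# a kube-flannel.yml manifest and succeed only if it equals the expected CIDR
# (the value passed to kubeadm init --pod-network-cidr).
check_flannel_network() {
  local manifest="$1" expected="${2:-10.244.0.0/16}" actual
  # Take the first line containing "Network" and keep only the quoted value.
  actual=$(grep -m1 '"Network"' "$manifest" | sed -E 's/.*"Network": *"([^"]+)".*/\1/')
  echo "$actual"
  [[ "$actual" == "$expected" ]]
}

# Usage: check_flannel_network kube-flannel.yml 10.244.0.0/16
```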

[kubeadm@node1 add-ons]$ kubectl get pod -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-9d85f5447-fktb7 1/1 Running 0 42m

coredns-9d85f5447-wvx48 1/1 Running 0 42m

etcd-node1 1/1 Running 0 42m

kube-apiserver-node1 1/1 Running 0 42m

kube-controller-manager-node1 1/1 Running 0 42m

kube-flannel-ds-amd64-b9bhf 1/1 Running 0 64s

kube-flannel-ds-amd64-jszkh 1/1 Running 0 64s

kube-flannel-ds-amd64-l7drb 1/1 Running 0 64s

kube-proxy-98v44 1/1 Running 0 19m

kube-proxy-jd6gd 1/1 Running 0 19m

kube-proxy-wggcv 1/1 Running 0 42m

kube-scheduler-node1 1/1 Running 0 42m

# Checking again, everything is now running

[kubeadm@node1 add-ons]$ kubectl get node

NAME STATUS ROLES AGE VERSION

node1 Ready master 43m v1.17.3

node2 Ready 20m v1.17.3

node3 Ready 20m v1.17.3

# The nodes are now Ready as well
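Reading the STATUS column by eye works for three nodes. A minimal sketch that reads kubectl get node output on stdin and lists every node whose status is not exactly Ready; the function name is hypothetical. kubectl itself can also block until nodes are ready, for example kubectl wait --for=condition=Ready node --all --timeout=300s.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get node" output on stdin and print the
# names of nodes whose STATUS column is not exactly "Ready". Note that a
# cordoned node ("Ready,SchedulingDisabled") is reported as well.
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Usage on the master:
#   kubectl get node | not_ready_nodes
```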

- Optional: because there are only a few test nodes, let the master node run workloads as well by removing its master taint

[kubeadm@node1 add-ons]$ kubectl taint nodes --all node-role.kubernetes.io/master-

node/node1 untainted

taint "node-role.kubernetes.io/master" not found

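taint "node-role.kubernetes.io/master" not found

# node1 was untainted; node2 and node3 never carried the master taint, so "not found" is expected for them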