MFS Study Notes (Deployment)

Lab Environment

| Host | IP | Role | Installed packages |
|---|---|---|---|
| node1 | 192.168.27.11 | Master Server | moosefs-master, moosefs-cgi, moosefs-cgiserv, moosefs-cli |
| node2 | 192.168.27.12 | Chunk Server | moosefs-chunkserver |
| node3 | 192.168.27.13 | Chunk Server | moosefs-chunkserver |
| node4 | 192.168.27.14 | Chunk Server | moosefs-chunkserver |
| node5 | 192.168.27.15 | Chunk Server | moosefs-chunkserver |
| node6 | 192.168.27.16 | Client | moosefs-client |

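Software installation: the official site has a guide

- https://moosefs.com/download/

- It covers downloading the repo file and the package installation commands for each node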

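Deployment Process

Basic Deployment

Master Server

- Edit the hosts file

Add an entry that resolves mfsmaster to the hosts file, because the CGI service's /usr/share/mfscgi/mfs.cgi looks for a server named mfsmaster; without that entry, the service cannot find the master host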

[root@node1 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.27.11 node1 mfsmaster

#add the mfsmaster entry
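The hosts step is repeated on every node, so it can help to make it safe to run more than once. The following is only a minimal sketch: the function name `add_mfsmaster_entry` is hypothetical, and it appends a separate `IP mfsmaster` line instead of editing the existing node1 line as done above.

```shell
#!/bin/sh
# Sketch (hypothetical helper): append "IP mfsmaster" to a hosts file
# only if no non-comment line already names mfsmaster.
add_mfsmaster_entry() {
    hosts_file=$1
    master_ip=$2
    # Look for mfsmaster as a whole word after some whitespace.
    if grep -Eq '^[^#]*[[:space:]]mfsmaster([[:space:]]|$)' "$hosts_file"; then
        return 0    # already resolvable, nothing to do
    fi
    printf '%s mfsmaster\n' "$master_ip" >> "$hosts_file"
}

# Example (as root on each node):
#   add_mfsmaster_entry /etc/hosts 192.168.27.11
```

Running it twice leaves a single entry, which is the property that matters when the same script is pushed to all six nodes.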

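- Configuration files (nothing needs to be changed)

[root@node1 ~]# ls /etc/mfs/

mfsexports.cfg #specifies which client hosts may mount the MooseFS file system remotely, and which access rights each mounting client is granted

mfsmaster.cfg #main configuration file of the master service

mfstopology.cfg #MFS network topology definition; maps IP addresses to network locations (usually switch numbers). This file is optional. Leave it empty if your network has a single switch or you do not need to reduce traffic between switches.

- Start the services on the master node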

[root@node1 ~]# systemctl start moosefs-master

#start the master service

[root@node1 ~]# systemctl start moosefs-cgiserv

#start the web GUI

- Check the listening ports

[root@node1 ~]# netstat -antpl | grep ':94'

tcp 0 0 0.0.0.0:9419 0.0.0.0:* LISTEN 18853/mfsmaster

tcp 0 0 0.0.0.0:9420 0.0.0.0:* LISTEN 18853/mfsmaster

tcp 0 0 0.0.0.0:9421 0.0.0.0:* LISTEN 18853/mfsmaster

tcp 0 0 0.0.0.0:9425 0.0.0.0:* LISTEN 18819/python2
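The port check can also be automated. This is a sketch under the assumption that the input has the `netstat -antl` column layout shown above (local address in column 4, state in column 6); the function name `missing_listen_ports` is made up for this example.

```shell
#!/bin/sh
# Sketch (hypothetical helper): read netstat output on stdin and print
# every expected port that has no LISTEN line.
missing_listen_ports() {
    awk -v want="$*" '
        $6 == "LISTEN" {
            # The port is the last colon-separated part of the local address.
            n = split($4, parts, ":")
            listening[parts[n]] = 1
        }
        END {
            count = split(want, ports, " ")
            for (i = 1; i <= count; i++)
                if (!(ports[i] in listening)) print ports[i]
        }'
}

# Example: prints nothing when master (9419-9421) and CGI (9425) are all up.
#   netstat -antl | missing_listen_ports 9419 9420 9421 9425
```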

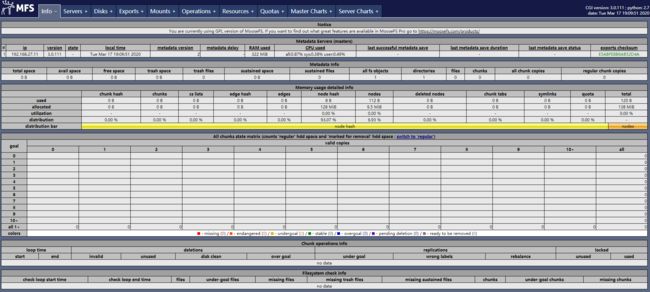

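- Web GUI: open 192.168.27.11:9425 in a browser to see the monitoring page of the distributed file system

a. Click the different tabs to view detailed information

b. The page can show the contents of several tabs at once; to monitor a given tab, click the plus sign on that tab

Chunk Server

- Edit the hosts file

Add an entry that resolves mfsmaster to the hosts file, because the chunkserver's /etc/mfs/mfschunkserver.cfg looks for a server named mfsmaster; without that entry, the chunkserver cannot connect to the master host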

[root@node2 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.27.11 node1 mfsmaster

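#add the mfsmaster entry

- Configuration files (the main config file needs no changes; one storage path must be added to mfshdd.cfg)

[root@node2 mfs]# ls /etc/mfs

mfschunkserver.cfg #main configuration file

mfshdd.cfg #list of MooseFS storage directories used by mfschunkserver

[root@node2 mfs]# vim /etc/mfs/mfshdd.cfg

…… (part of the content omitted)

/mnt/chunk_n2 #set following the samples given in the config file; no size limit is set here, the directory is managed by mounting a dedicated disk on it

- Mount a disk on the directory so storage is managed in one place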

[root@node2 mfs]# mkdir /mnt/chunk_n2

[root@node2 mfs]# chown mfs:mfs /mnt/chunk_n2/

[root@node2 mfs]# ll /mnt/chunk_n2/ -d

drwxr-xr-x 2 mfs mfs 6 Mar 18 00:02 /mnt/chunk_n2/

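#create the local chunk storage directory; make sure it is owned by the mfs user and group (once a disk is mounted here, ownership comes from the root of the mounted file system, so check it again after mounting)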

[root@node2 mfs]# mount /dev/vdb1 /mnt/chunk_n2

[root@node2 mfs]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/vdb1 1044132 32944 1011188 4% /mnt/chunk_n2

#temporary mount

[root@node2 mfs]# blkid

/dev/vdb1: UUID="28fb1a81-751c-47b1-86c2-5c3dab62e9cf" TYPE="xfs"

[root@node2 mfs]# vim /etc/fstab

UUID="28fb1a81-751c-47b1-86c2-5c3dab62e9cf" /mnt/chunk_n2 xfs defaults 0 0

[root@node2 mfs]# mount -a

#permanent mount; using the UUID is recommended
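The fstab entry can be derived from the `blkid` line instead of being typed by hand. A minimal sketch, assuming the `UUID="..." TYPE="..."` field format shown above; the function name `fstab_line_from_blkid` is hypothetical and the mount options are fixed to `defaults 0 0` as in these notes.

```shell
#!/bin/sh
# Sketch (hypothetical helper): turn blkid output on stdin into an
# /etc/fstab line for the mount point given as $1.
fstab_line_from_blkid() {
    awk -v mnt="$1" '
        {
            uuid = ""; fstype = ""
            for (i = 1; i <= NF; i++) {
                # Only fields that start with UUID= or TYPE= are used,
                # so PARTUUID= and similar fields are ignored.
                if ($i ~ /^UUID=/) { uuid = $i; gsub(/^UUID=|"/, "", uuid) }
                if ($i ~ /^TYPE=/) { fstype = $i; gsub(/^TYPE=|"/, "", fstype) }
            }
            if (uuid != "" && fstype != "")
                print "UUID=" uuid, mnt, fstype, "defaults", 0, 0
        }'
}

# Example (review the line before appending it to /etc/fstab):
#   blkid /dev/vdb1 | fstab_line_from_blkid /mnt/chunk_n2
```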

- Start the service

[root@node2 mfs]# systemctl start moosefs-chunkserver

#start the service

[root@node2 mfs]# ls /mnt/chunk_n2/

00 08 10 18 20 28 30 38 40 48 50 58 60 68 70 78 80 88 90 98 A0 A8 B0 B8 C0 C8 D0 D8 E0 E8 F0 F8

01 09 11 19 21 29 31 39 41 49 51 59 61 69 71 79 81 89 91 99 A1 A9 B1 B9 C1 C9 D1 D9 E1 E9 F1 F9

02 0A 12 1A 22 2A 32 3A 42 4A 52 5A 62 6A 72 7A 82 8A 92 9A A2 AA B2 BA C2 CA D2 DA E2 EA F2 FA

03 0B 13 1B 23 2B 33 3B 43 4B 53 5B 63 6B 73 7B 83 8B 93 9B A3 AB B3 BB C3 CB D3 DB E3 EB F3 FB

04 0C 14 1C 24 2C 34 3C 44 4C 54 5C 64 6C 74 7C 84 8C 94 9C A4 AC B4 BC C4 CC D4 DC E4 EC F4 FC

05 0D 15 1D 25 2D 35 3D 45 4D 55 5D 65 6D 75 7D 85 8D 95 9D A5 AD B5 BD C5 CD D5 DD E5 ED F5 FD

06 0E 16 1E 26 2E 36 3E 46 4E 56 5E 66 6E 76 7E 86 8E 96 9E A6 AE B6 BE C6 CE D6 DE E6 EE F6 FE

07 0F 17 1F 27 2F 37 3F 47 4F 57 5F 67 6F 77 7F 87 8F 97 9F A7 AF B7 BF C7 CF D7 DF E7 EF F7 FF

[root@node2 mfs]# ls /mnt/chunk_n2/ | wc -l

256

#after the service starts, 256 subdirectories are created automatically in the chunk storage directory to hold the chunks
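A quick way to confirm the layout without reading the listing by eye is to count only the two-character hex-named subdirectories. This is a sketch with a made-up function name, `count_hex_subdirs`; it assumes the uppercase 00..FF naming seen in the listing above.

```shell
#!/bin/sh
# Sketch (hypothetical helper): count subdirectories named with two
# uppercase hex digits (00..FF) directly under the directory in $1.
count_hex_subdirs() {
    count=0
    for d in "$1"/[0-9A-F][0-9A-F]; do
        # If nothing matches, the pattern stays literal and is not a directory.
        if [ -d "$d" ]; then
            count=$((count + 1))
        fi
    done
    echo "$count"
}

# Example: a freshly initialised chunk directory should report 256.
#   count_hex_subdirs /mnt/chunk_n2
```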

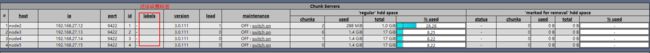

- What the master monitoring page shows

- The other mfschunkserver hosts are set up the same way; add node3 first


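On node3 I did not mount a separate disk; I created the chunk directory directly on the root file system, to compare how it is displayed with node2

The difference in the monitoring page is clear: node3 reports the size of the root file system directly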

Client

- Edit the hosts file

[root@node6 ~]# mfsmount

can't resolve master hostname and/or portname (mfsmaster:9421)

#without the hosts entry, the mount cannot find the master host

[root@node6 ~]# cat /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.27.11 node1 mfsmaster

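#add the mfsmaster entry

- Configuration file

[root@node6 ~]# ls /etc/mfs

mfsmount.cfg #client mount configuration file

[root@node6 ~]# vim /etc/mfs/mfsmount.cfg

…… (part of the content omitted)

/mnt/mfs #set following the samples given in the config file; this is the mount point

- Mount the file system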

[root@node6 ~]# mfsmount

mfsmaster accepted connection with parameters: read-write,restricted_ip,admin ; root mapped to root:root

[root@node6 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

mfsmaster:9421 18855552 1759680 17095872 10% /mnt/mfs

#mounted successfully; files can now be stored directly under the MFS mount point
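For scripts that must not write into an empty /mnt/mfs directory by mistake, the `df` output can be checked first. This is a sketch, assuming the file system column reads `mfsmaster:PORT` as in the output above; `is_mfs_mounted` is a name invented here.

```shell
#!/bin/sh
# Sketch (hypothetical helper): succeed if df output on stdin shows a
# MooseFS mount ("mfsmaster:PORT" in column 1) at the mount point in $1.
is_mfs_mounted() {
    awk -v mnt="$1" '
        $NF == mnt && $1 ~ /^mfsmaster:[0-9]+$/ { found = 1 }
        END { exit !found }'
}

# Example:
#   df | is_mfs_mounted /mnt/mfs && echo "MooseFS is mounted"
```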

Basic Deployment Complete: Verification

- Check how the components are connected

[root@node1 ~]# lsof -i | grep mfs

mfsmaster 19014 mfs 8u IPv4 74240 0t0 TCP *:9419 (LISTEN)

mfsmaster 19014 mfs 11u IPv4 74241 0t0 TCP *:9420 (LISTEN)

mfsmaster 19014 mfs 12u IPv4 74242 0t0 TCP *:9421 (LISTEN)

mfsmaster 19014 mfs 13u IPv4 79453 0t0 TCP node1:9420->node2:41484 (ESTABLISHED)

mfsmaster 19014 mfs 14u IPv4 79714 0t0 TCP node1:9420->node3:47680 (ESTABLISHED)

mfsmaster 19014 mfs 15u IPv4 81180 0t0 TCP node1:9421->node6:37142 (ESTABLISHED)

#shows the connections on each port of the master server
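The `lsof` listing is easier to read once each peer is labelled by the master port it connected to: 9419 serves metaloggers, 9420 chunkservers and 9421 clients. The sketch below assumes the `lsof -i` line layout shown above; `classify_master_peers` is a hypothetical name.

```shell
#!/bin/sh
# Sketch (hypothetical helper): read `lsof -i` output on stdin and print
# "peer role" for every ESTABLISHED mfsmaster connection.
classify_master_peers() {
    awk '
        $1 == "mfsmaster" && $NF == "(ESTABLISHED)" {
            # The field before the state looks like node1:9420->node2:41484
            split($(NF - 1), ends, "->")
            n = split(ends[1], local_end, ":")
            split(ends[2], remote_end, ":")
            port = local_end[n]
            role = "unknown"
            if (port == 9419) role = "metalogger"
            if (port == 9420) role = "chunkserver"
            if (port == 9421) role = "client"
            print remote_end[1], role
        }'
}

# Example:
#   lsof -i | classify_master_peers
```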

[root@node2 mfs]# netstat -atlp

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 node2:41484 node1:9420 ESTABLISHED 12266/mfschunkserve

[root@node3 ~]# netstat -atpl

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 node3:47680 node1:9420 ESTABLISHED 12543/mfschunkserve

#the chunkservers also look normal

[root@node6 ~]# netstat -atpl

Active Internet connections (servers and established)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 node6:37142 node1:9421 ESTABLISHED 12638/mfsmount (mou

[root@node6 ~]# ps -ef |grep 12638

root 12638 1 2 22:36 ? 00:00:11 mfsmount (mounted on: /mnt/mfs)

#result on the client

- Store files and inspect the chunk information

[root@node6 mfs]# mkdir data{1..2}

#create 2 test directories

[root@node6 mfs]# ls

data1 data2

[root@node6 mfs]# mfsgetgoal data{1..2}

data1: 2

data2: 2

#the default number of copies (goal) is 2

[root@node6 mfs]# mfssetgoal 1 data1

data1: goal: 1

#change the number of copies for data1 to 1

[root@node6 mfs]# mfsgetgoal data{1..2}

data1: 1

data2: 2

#check the result of the change

[root@node6 mfs]# echo "data1 test message" > data1/file1

[root@node6 mfs]# echo "data2 test message" > data2/file1

#create a file in each directory

[root@node6 mfs]# mfsfileinfo data{1..2}/file1

data1/file1:

chunk 0: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 192.168.27.13:9422 (status:VALID)

data2/file1:

chunk 0: 0000000000000007_00000001 / (id:7 ver:1)

copy 1: 192.168.27.12:9422 (status:VALID)

copy 2: 192.168.27.13:9422 (status:VALID)

#the chunk of file1 in data1 is stored on node3, with 1 copy

#the chunk of file1 in data2 is stored on node2 and node3, with 2 copies
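With more than a couple of files it is quicker to summarise the `mfsfileinfo` output than to read it. The sketch below assumes the output format shown above (a `name:` header line, then `chunk` and `copy` lines); `valid_copies_per_file` is a name made up here, and it sums VALID copies over all chunks of a file.

```shell
#!/bin/sh
# Sketch (hypothetical helper): read mfsfileinfo output on stdin and print
# "file valid_copies", summed over all chunks of each file.
valid_copies_per_file() {
    awk '
        /:$/ {
            # A line ending in ":" starts a new file section.
            if (name != "") print name, copies
            name = substr($0, 1, length($0) - 1)
            copies = 0
            next
        }
        $1 == "copy" && /status:VALID/ { copies++ }
        END { if (name != "") print name, copies }'
}

# Example:
#   mfsfileinfo data1/file1 data2/file1 | valid_copies_per_file
```

For the two single-chunk test files this should match the goals set with mfssetgoal.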

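- Stop one chunkserver that holds data and observe the effect

Because node3 holds both a single-copy file and a multi-copy file, stopping the service on node3 shows the effect best

[root@node3 ~]# systemctl stop moosefs-chunkserver.service

#stop the service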

[root@node6 mfs]# mfsfileinfo data{1..2}/file1

data1/file1:

chunk 0: 0000000000000006_00000001 / (id:6 ver:1)

no valid copies !!!

data2/file1:

chunk 0: 0000000000000007_00000001 / (id:7 ver:1)

copy 1: 192.168.27.12:9422 (status:VALID)

#the chunk listing has changed

[root@node6 mfs]# cat data2/file1

data2 test message

#the multi-copy file can still be opened and read

[root@node6 mfs]# cat data1/file1

^C

^C^C^C^C

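#the single-copy file cannot be opened; the process hangs and cannot be interrupted

#in my test, normal operation resumed only after the node3 service was started again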
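The hang can be anticipated before a chunkserver is stopped by listing the files that have a chunk whose only VALID copies live on that server. This is a sketch working on `mfsfileinfo` output in the format shown above; the function name `files_only_on_server` is hypothetical.

```shell
#!/bin/sh
# Sketch (hypothetical helper): read mfsfileinfo output on stdin and print
# each file that has a chunk whose VALID copies are all on the IP in $1.
files_only_on_server() {
    awk -v ip="$1" '
        function flush_chunk() {
            if (in_chunk && total > 0 && on_ip == total && name != last) {
                print name
                last = name
            }
            total = 0; on_ip = 0; in_chunk = 0
        }
        /:$/ { flush_chunk(); name = substr($0, 1, length($0) - 1); next }
        $1 == "chunk" { flush_chunk(); in_chunk = 1; next }
        $1 == "copy" && /status:VALID/ {
            total++
            split($3, addr, ":")          # $3 is ip:port
            if (addr[1] == ip) on_ip++
        }
        END { flush_chunk() }'
}

# Example: files that would become unreadable if node3 went down.
#   mfsfileinfo data1/file1 data2/file1 | files_only_on_server 192.168.27.13
```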

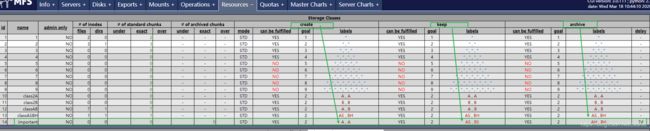

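Storage Classes

- Add the chunkserver nodes node4 and node5 in the same way as the chunkserver deployment above

- Add labels

node2 labels: A S; here A stands for server room A and S for a server with SSD disks

node3 labels: B S; here B stands for server room B and S for a server with SSD disks

node4 labels: A H; here A stands for server room A and H for a server with HDD disks

node5 labels: B H; here B stands for server room B and H for a server with HDD disks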

[root@node2 ~]# vim /etc/mfs/mfschunkserver.cfg

# labels string (default is empty - no labels)

LABELS = A S

#edit the chunkserver's main config file to enable labels; node2 gets the labels A and S

[root@node2 ~]# systemctl reload moosefs-chunkserver.service

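#the config file was changed, so reload the service configuration

#the other nodes are configured the same way, only with different label letters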
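Since the same edit is repeated on four chunkservers, it can be scripted. The following is a sketch only: `set_chunkserver_labels` is a hypothetical helper, and it assumes the file either contains a `LABELS =` line (possibly commented out) or none at all.

```shell
#!/bin/sh
# Sketch (hypothetical helper): set the LABELS line in the config file $1
# to the label string $2, replacing an existing or commented-out line.
set_chunkserver_labels() {
    cfg=$1
    labels=$2
    tmp=$(mktemp) || return 1
    awk -v labels="$labels" '
        /^[# \t]*LABELS[ \t]*=/ && !replaced {
            print "LABELS = " labels
            replaced = 1
            next
        }
        { print }
        END { if (!replaced) print "LABELS = " labels }' "$cfg" > "$tmp" &&
        cat "$tmp" > "$cfg"    # overwrite in place, keeping owner and mode
    rm -f "$tmp"
}

# Example (on node2, then reload as in the notes above):
#   set_chunkserver_labels /etc/mfs/mfschunkserver.cfg "A S"
#   systemctl reload moosefs-chunkserver.service
```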

[root@node1 ~]# mfscli -SCS

#-SCS : show connected chunk servers

+---------------------------------------------------------------------------------------------------------------------------------------------+

| Chunk Servers |

+---------+------+----+--------+---------+------+-------------+-------------------------------------+-----------------------------------------+

| | | | | | | | 'regular' hdd space | 'marked for removal' hdd space |

| ip/host | port | id | labels | version | load | maintenance +--------+---------+---------+--------+--------+--------+------+-------+--------+

| | | | | | | | chunks | used | total | % used | status | chunks | used | total | % used |

+---------+------+----+--------+---------+------+-------------+--------+---------+---------+--------+--------+--------+------+-------+--------+

| node2 | 9422 | 1 | A,S | 3.0.111 | 0 | off | 0 | 288 MiB | 1.0 GiB | 28.28% | - | 0 | 0 B | 0 B | - |

| node3 | 9422 | 2 | B,S | 3.0.111 | 0 | off | 1 | 1.4 GiB | 17 GiB | 8.25% | - | 0 | 0 B | 0 B | - |

| node4 | 9422 | 3 | A,H | 3.0.111 | 0 | off | 3 | 1.4 GiB | 17 GiB | 8.25% | - | 0 | 0 B | 0 B | - |

| node5 | 9422 | 4 | B,H | 3.0.111 | 0 | off | 5 | 1.4 GiB | 17 GiB | 8.25% | - | 0 | 0 B | 0 B | - |

+---------+------+----+--------+---------+------+-------------+--------+---------+---------+--------+--------+--------+------+-------+--------+

#all the labels have been added successfully
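To check labels across many chunkservers, the host and labels columns can be pulled out of the table. This sketch assumes the `|`-separated layout printed above, where data rows are the ones with a numeric port in the second column; `chunkserver_labels` is a name invented for this example.

```shell
#!/bin/sh
# Sketch (hypothetical helper): read the `mfscli -SCS` table on stdin and
# print "host labels" for each chunkserver row.
chunkserver_labels() {
    awk -F'|' '
        function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
        # With "|" as separator, $2 is the host, $3 the port, $5 the labels.
        trim($3) ~ /^[0-9]+$/ { print trim($2), trim($5) }'
}

# Example:
#   mfscli -SCS | chunkserver_labels
```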

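- Define storage classes

Define 4 storage layouts for testing, each keeping 2 copies:

1. Both copies on servers in server room A

2. Both copies on servers in server room B

3. One copy on a server in room A and one on a server in room B

4. One copy on an SSD server in room A and one on an HDD server in room B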

[root@node6 mfs]# mfsscadmin create 2A class2A

storage class make class2A: ok

[root@node6 mfs]# mfsscadmin create 2B class2B

storage class make class2B: ok

[root@node6 mfs]# mfsscadmin create A,B classAB

storage class make classAB: ok

[root@node6 mfs]# mfsscadmin create AS,BH classASBH

storage class make classASBH: ok

[root@node6 mfs]# mfsscadmin list

1

2

3

4

5

6

7

8

9

class2A

class2B

classAB

classASBH

#the entries 1-9 above are reserved for compatibility with the older goal-based settings
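The label expressions used above read as follows: each comma-separated term describes one copy, letters written together in a term must all be present on the server, and a leading number repeats the term. The sketch below only illustrates that reading for the forms used in these notes (`2A`, `A,B`, `AS,BH`); `expand_label_expr` is a hypothetical helper and does not cover the rest of the MooseFS expression syntax.

```shell
#!/bin/sh
# Sketch (hypothetical helper): expand a leading repeat count in each
# comma-separated term, so that one term remains per copy.
expand_label_expr() {
    printf '%s\n' "$1" | awk -F',' '
        {
            out = ""
            for (i = 1; i <= NF; i++) {
                term = $i
                count = 1
                if (match(term, /^[0-9]+/)) {
                    count = substr(term, 1, RLENGTH) + 0
                    term = substr(term, RLENGTH + 1)
                }
                for (j = 0; j < count; j++)
                    out = out (out == "" ? "" : ",") term
            }
            print out
        }'
}

# Example: class2A keeps two copies, each on a server labelled A.
#   expand_label_expr 2A
```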

- Using storage classes and observing the effect

[root@node6 mfs]# mfsfileinfo data{1..2}/file1

data1/file1:

chunk 0: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 192.168.27.13:9422 (status:VALID)

data2/file1:

chunk 0: 0000000000000007_00000001 / (id:7 ver:1)

copy 1: 192.168.27.14:9422 (status:VALID)

copy 2: 192.168.27.15:9422 (status:VALID)

#check where the files are stored to begin with

[root@node6 mfs]# mfssetgoal 2 data1

data1: goal: 2

#change the number of copies for data1 to 2

[root@node6 mfs]# mfssetsclass -r class2A data1

data1:

inodes with storage class changed: 2

inodes with storage class not changed: 0

inodes with permission denied: 0

#assign storage class class2A to data1; its files should be stored on node2 and node4

[root@node6 mfs]# mfssetsclass -r class2B data2

data2:

inodes with storage class changed: 2

inodes with storage class not changed: 0

inodes with permission denied: 0

#assign storage class class2B to data2; its files should be stored on node3 and node5

[root@node6 mfs]# mfsfileinfo data{1..2}/file1

data1/file1: #class2A

chunk 0: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 192.168.27.12:9422 (status:VALID) #node2

copy 2: 192.168.27.14:9422 (status:VALID) #node4

data2/file1: #class2B

chunk 0: 0000000000000007_00000001 / (id:7 ver:1)

copy 1: 192.168.27.13:9422 (status:VALID) #node3

copy 2: 192.168.27.15:9422 (status:VALID) #node5

#the files are now stored as their storage classes specify

[root@node6 mfs]# mfsxchgsclass -nr class2A classASBH data1

data1:

inodes with storage class changed: 2

inodes with storage class not changed: 0

inodes with permission denied: 0

#change the storage class of data1 to classASBH

[root@node6 mfs]# mfsfileinfo data{1..2}/file1

data1/file1: #classASBH

chunk 0: 0000000000000006_00000001 / (id:6 ver:1)

copy 1: 192.168.27.12:9422 (status:VALID) #node2

copy 2: 192.168.27.15:9422 (status:VALID) #node5

#the file is now stored on node2 and node5, as the storage class specifies

[root@node6 mfs]# mfsxchgsclass -r class2B classAB data2

data2:

inodes with storage class changed: 2

inodes with storage class not changed: 0

inodes with permission denied: 0

#change the storage class of data2 to classAB

[root@node6 mfs]# for i in {2..4} ;do echo "file$i test message" > data2/file$i ;done

[root@node6 mfs]# cat data2/file{1..4}

data2 test message

file2 test message

file3 test message

file4 test message

#rooms A and B each have 2 machines, and the server within a room is picked at random, so create a few more files to see the effect

[root@node6 mfs]# mfsfileinfo data2/file{1..4}

data2/file1:

chunk 0: 0000000000000007_00000001 / (id:7 ver:1)

copy 1: 192.168.27.13:9422 (status:VALID) #node3, room B

copy 2: 192.168.27.14:9422 (status:VALID) #node4, room A

data2/file2:

chunk 0: 000000000000000C_00000001 / (id:12 ver:1)

copy 1: 192.168.27.14:9422 (status:VALID) #node4, room A

copy 2: 192.168.27.15:9422 (status:VALID) #node5, room B

data2/file3:

chunk 0: 000000000000000D_00000001 / (id:13 ver:1)

copy 1: 192.168.27.13:9422 (status:VALID) #node3, room B

copy 2: 192.168.27.14:9422 (status:VALID) #node4, room A

data2/file4:

chunk 0: 000000000000000E_00000001 / (id:14 ver:1)

copy 1: 192.168.27.14:9422 (status:VALID) #node4, room A

copy 2: 192.168.27.15:9422 (status:VALID) #node5, room B

#the storage class rule holds: one copy in each of rooms A and B, with the server inside a room chosen at random
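The per-room check can be done mechanically by mapping each chunkserver IP to its room label. This is a sketch: `rooms_per_file` is a hypothetical name, the IP-to-room map is passed in by hand and mirrors the labels assigned earlier, and the input is plain `mfsfileinfo` output without the annotations added in these notes.

```shell
#!/bin/sh
# Sketch (hypothetical helper): read mfsfileinfo output on stdin and print
# "file rooms", where rooms lists the room of each VALID copy in order.
# $1 is a space-separated map such as "192.168.27.12=A 192.168.27.13=B".
rooms_per_file() {
    awk -v map="$1" '
        BEGIN {
            n = split(map, pairs, " ")
            for (i = 1; i <= n; i++) {
                split(pairs[i], kv, "=")
                room[kv[1]] = kv[2]
            }
        }
        function emit() { if (name != "") print name, rooms }
        /:$/ { emit(); name = substr($0, 1, length($0) - 1); rooms = ""; next }
        $1 == "copy" && /status:VALID/ {
            split($3, addr, ":")          # $3 is ip:port
            rooms = rooms ((addr[1] in room) ? room[addr[1]] : "?")
        }
        END { emit() }'
}

# Example: every classAB file should show one A and one B.
#   mfsfileinfo data2/file1 data2/file2 | rooms_per_file \
#       "192.168.27.12=A 192.168.27.13=B 192.168.27.14=A 192.168.27.15=B"
```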

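- About storage class settings (create, keep, archive)

create: where chunks are placed when a file is created

keep: where chunks are placed while the file is kept

archive: where chunks are placed for archiving once the specified time has passed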

[root@node6 mfs]# mfsscadmin create -C 2A -K AS,BS -A AH,BH -d 7 important

storage class make important: ok

# -C - set labels used for creation chunks - when not specified then 'keep' labels are used

# -K - set labels used for keeping chunks - must be specified

# -A - set labels used for archiving chunks - when not specified then 'keep' labels are used

# -d - set number of days used to switch labels from 'keep' to 'archive' - must be specified when '-A' option is given

#create: 2A creates the file on any 2 servers in room A

#keep: AS,BS keeps one copy on an SSD server in each of rooms A and B

#archive: AH,BH archives one copy on an HDD server in each of rooms A and B, after 7 days

#-d is given in days

#the wait is too long to observe the effect here; the configured settings can be seen in the monitoring page, as in the figure below