为什么用word1.2?

最新的word分词是1.3版本,但是用1.3的时候会出现一些Bug,产生Java.lang.OutOfMemory错误,所以还是用比较稳定的1.2版本。

在Lucene 6.1.0中,实现一个Analyzer的子类,也就是构建自己的Analyzer的时候,需要实现的方法是createComponet(String fieldName),而在Word 1.2中,没有实现这个方法(word 1.2对lucene 4.+的版本支持较好),运用ChineseWordAnalyzer运行的时候会提示:

Exception in thread "main" java.lang.AbstractMethodError: org.apache.lucene.analysis.Analyzer.createComponents(Ljava/lang/String;)Lorg/apache/lucene/analysis/Analyzer$TokenStreamComponents;

at org.apache.lucene.analysis.Analyzer.tokenStream(Analyzer.java:140)

所以要对ChineseWordAnalyzer做一些修改.

实现createComponet(String fieldName)方法

新建一个Analyzer的子类MyWordAnalyzer,根据ChinesWordAnalyzer改写:

public class MyWordAnalyzer extends Analyzer {

Segmentation segmentation = null;

public MyWordAnalyzer() {

segmentation = SegmentationFactory.getSegmentation(

SegmentationAlgorithm.BidirectionalMaximumMatching);

}

public MyWordAnalyzer(Segmentation segmentation) {

this.segmentation = segmentation;

}

@Override

protected TokenStreamComponents createComponents(String fieldName) {

Tokenizer tokenizer = new MyWordTokenizer(segmentation);

return new TokenStreamComponents(tokenizer);

}

}

其中segmentation属性可以设置分词所用的算法,默认的是双向最大匹配算法。接着要实现的是MyWordTokenizer,也是模仿ChineseWordTokenizer来写:

public class MyWordTokenizer extends Tokenizer{

private final CharTermAttribute charTermAttribute

= addAttribute(CharTermAttribute.class);

private final OffsetAttribute offsetAttribute

= addAttribute(OffsetAttribute.class);

private final PositionIncrementAttribute

positionIncrementAttribute

= addAttribute(PositionIncrementAttribute.class);

private Segmentation segmentation = null;

private BufferedReader reader = null;

private final Queue words = new LinkedTransferQueue<>();

private int startOffset=0;

public MyWordTokenizer() {

segmentation = SegmentationFactory.getSegmentation(

SegmentationAlgorithm.BidirectionalMaximumMatching);

}

public MyWordTokenizer(Segmentation segmentation) {

this.segmentation = segmentation;

}

private Word getWord() throws IOException {

Word word = words.poll();

if(word == null){

String line;

while( (line = reader.readLine()) != null ){

words.addAll(segmentation.seg(line));

}

startOffset = 0;

word = words.poll();

}

return word;

}

@Override

public final boolean incrementToken() throws IOException {

reader=new BufferedReader(input);

Word word = getWord();

if (word != null) {

int positionIncrement = 1;

//忽略停用词

while(StopWord.is(word.getText())){

positionIncrement++;

startOffset += word.getText().length();

word = getWord();

if(word == null){

return false;

}

}

charTermAttribute.setEmpty().append(word.getText());

offsetAttribute.setOffset(startOffset, startOffset

+word.getText().length());

positionIncrementAttribute.setPositionIncrement(

positionIncrement);

startOffset += word.getText().length();

return true;

}

return false;

}

}

incrementToken()是必需要实现的方法,返回true的时候表示后面还有token,返回false表示解析结束。在incrementToken()的第一行,将input的值赋给reader,input是Tokenizer为Reader的对象,在Tokenizer中还有另一个Reader对象——inputPending,在Tokenizer中源码如下:

public abstract class Tokenizer extends TokenStream {

/** The text source for this Tokenizer. */

protected Reader input = ILLEGAL_STATE_READER;

/** Pending reader: not actually assigned to input until reset() */

private Reader inputPending = ILLEGAL_STATE_READER;

input中存储的是需要解析的文本,但是文本是先放到inputPending中,直到调用了reset方法之后才将值赋给input。

reset()方法定义如下:

@Override

public void reset() throws IOException {

super.reset();

input = inputPending;

inputPending = ILLEGAL_STATE_READER;

}

在调用reset()方法之前,input里面的是没有需要解析的文本信息的,所以要在reset()之后再将input的值赋给reader(一个BufferedReader 的对象)。

做了上面的修改之后,就可以运用Word 1.2里面提供的算法进行分词了:

测试类MyWordAnalyzerTest

public class MyWordAnalyzerTest {

public static void main(String[] args) throws IOException {

String text = "乒乓球拍卖完了";

Analyzer analyzer = new MyWordAnalyzer();

TokenStream tokenStream = analyzer.tokenStream("text", text);

// 准备消费

tokenStream.reset();

// 开始消费

while (tokenStream.incrementToken()) {

// 词

CharTermAttribute charTermAttribute

= tokenStream.getAttribute(CharTermAttribute.class);

// 词在文本中的起始位置

OffsetAttribute offsetAttribute

= tokenStream.getAttribute(OffsetAttribute.class);

// 第几个词

PositionIncrementAttribute positionIncrementAttribute

= tokenStream

.getAttribute(PositionIncrementAttribute.class);

System.out.println(charTermAttribute.toString() + " "

+ "(" + offsetAttribute.startOffset() + " - "

+ offsetAttribute.endOffset() + ") "

+ positionIncrementAttribute.getPositionIncrement());

}

// 消费完毕

tokenStream.close();

}

}

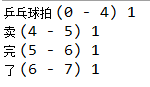

结果如下:

因为在increamToken()中,将停止词去掉了,所以分词结果中没有出现“了”。从上面的结果也可以看到,Word分词可以将句子分解为“乒乓球拍”和“卖完”,对比用SmartChineseAnalyzer():

综上Word的分词效果还是不错的。