GAN生成对抗网络合集(五):LSGan-最小二乘GAN(附代码)

首先分享一个讲得还不错的博客:

经典论文复现 | LSGAN:最小二乘生成对抗网络

1. LSGan原理

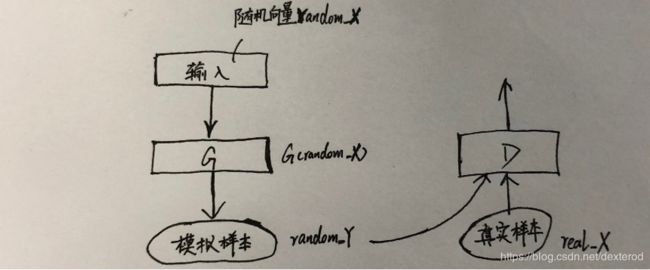

GAN是以对抗的方式逼近概率分布。但是直接使用该方法,会随着判别器越来越好而生成器无法与其对抗,进而形成梯度消失的问题。所以不论是WGAN,还是本节中的LSGAN,都是试图使用不同的距离度量(loss值),从而构建一个不仅稳定,同时还收敛迅速的生成对抗网络。

WGAN使用的是Wasserstein理论来构建度量距离。而LSGAN使用了另一个方法,即使用了更加平滑和非饱和梯度的损失函数——最小二乘来代替原来的Sigmoid交叉熵。这是由于L2正则独有的特性,在数据偏离目标时会有一个与其偏离距离成比例的惩罚,再将其拉回来,从而使数据的偏离不会越来越远。

相对于WGAN而言,LSGAN的loss简单很多。直接将传统的GAN中的loss变为平方差即可。

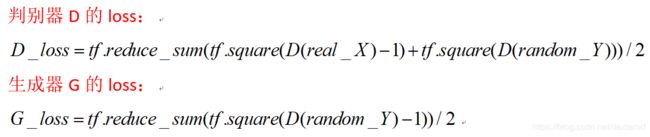

即LSGan的核心就是损失函数变为D的loss是真实样本和1作差的平方+模拟样本和0作差的平方;G的loss是模拟样本和1作差的平方(L2正则化)

而WGan的核心是损失函数变为真实值和虚拟值的差(L1正则化)

原始GAN的损失函数是D的loss都是真实样本和1作交叉熵,模拟样本和0作交叉熵;G的loss是模拟样本和1作交叉熵。(交叉熵)

为什么要除以2?和以前的原理一样,在对平方求导时会得到一个系数2,与事先的1/2运算正好等于1,使公式更加完整。

2 代码

直接修改我们之前的 GAN生成对抗网络合集(三):InfoGAN和ACGAN-指定类别生成模拟样本的GAN(附代码) 代码,将其改成LSGan。

需要改三个地方:

- 修改判别器D

将判别器的最后一层输出disc改成使用Sigmoid的激活函数。代码如下:

... ...

... ...

# 生成的数据可以分别连接不同的输出层产生不同的结果

# 1维的输出层产生判别结果1或是0

disc = slim.fully_connected(shared_tensor, num_outputs=1, activation_fn=tf.nn.sigmoid)

disc = tf.squeeze(disc, -1)

# print ("disc",disc.get_shape()) # 0 or 1

# 10维的输出层产生分类结果 (样本标签)

recog_cat = slim.fully_connected(recog_shared, num_outputs=num_classes, activation_fn=None)

# 2维输出层产生重构造的隐含维度信息

recog_cont = slim.fully_connected(recog_shared, num_outputs=num_cont, activation_fn=tf.nn.sigmoid)

return disc, recog_cat, recog_cont

- 修改loss值

将原有的loss_d与loss_g改成平方差形式,原有的y_real与y_fake不再需要了,可以删掉,其他代码不用变动。

##################################################################

# 4.定义损失函数和优化器

##################################################################

# 判别器 loss

# loss_d_r = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=disc_real, labels=y_real)) # 1

# loss_d_f = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=disc_fake, labels=y_fake)) # 0

loss_d = tf.reduce_sum(tf.square(disc_real-1) + tf.square(disc_fake-0)) / 2

# print ('loss_d', loss_d.get_shape())

# generator loss

loss_g = tf.reduce_sum(tf.square(disc_fake-1)) / 2

# categorical factor loss 分类因素损失

loss_cf = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(logits=class_fake, labels=y))

loss_cr = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(logits=class_real, labels=y))

loss_c = (loss_cf + loss_cr) / 2

# continuous factor loss 隐含信息变量的损失

loss_con = tf.reduce_mean(tf.square(con_fake - z_con))

-----------------------------------------------------------------------------------------------------------------------------------------

这里附上生成MNIST完整代码:

# !/usr/bin/env python3

# -*- coding: utf-8 -*-

__author__ = '黎明'

##################################################################

# 1.引入头文件并加载mnist数据

##################################################################

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

from scipy.stats import norm

import tensorflow.contrib.slim as slim

import time

from timer import Timer

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets("/media/S318080208/py_pictures/minist/") # ,one_hot=True)

tf.reset_default_graph() # 用于清除默认图形堆栈并重置全局默认图形

##################################################################

# 2.定义生成器与判别器

##################################################################

def generator(x): # 生成器函数 : 两个全连接+两个反卷积模拟样本的生成,每一层都有BN(批量归一化)处理

reuse = len([t for t in tf.global_variables() if t.name.startswith('generator')]) > 0 # 确认该变量作用域没有变量

# print (x.get_shape())

with tf.variable_scope('generator', reuse=reuse):

x = slim.fully_connected(x, 1024)

# print(x)

x = slim.batch_norm(x, activation_fn=tf.nn.relu)

x = slim.fully_connected(x, 7 * 7 * 128)

x = slim.batch_norm(x, activation_fn=tf.nn.relu)

x = tf.reshape(x, [-1, 7, 7, 128])

# print ('22', tf.tensor.get_shape())

x = slim.conv2d_transpose(x, 64, kernel_size=[4, 4], stride=2, activation_fn=None)

# print ('gen',x.get_shape())

x = slim.batch_norm(x, activation_fn=tf.nn.relu)

z = slim.conv2d_transpose(x, 1, kernel_size=[4, 4], stride=2, activation_fn=tf.nn.sigmoid)

# print ('genz',z.get_shape())

return z

def leaky_relu(x):

return tf.where(tf.greater(x, 0), x, 0.01 * x)

def discriminator(x, num_classes=10, num_cont=2): # 判别器函数 : 两次卷积,再接两次全连接

reuse = len([t for t in tf.global_variables() if t.name.startswith('discriminator')]) > 0

# print (reuse)

# print (x.get_shape())

with tf.variable_scope('discriminator', reuse=reuse):

x = tf.reshape(x, shape=[-1, 28, 28, 1])

x = slim.conv2d(x, num_outputs=64, kernel_size=[4, 4], stride=2, activation_fn=leaky_relu)

x = slim.conv2d(x, num_outputs=128, kernel_size=[4, 4], stride=2, activation_fn=leaky_relu)

# print ("conv2d",x.get_shape())

x = slim.flatten(x) # 输入扁平化

shared_tensor = slim.fully_connected(x, num_outputs=1024, activation_fn=leaky_relu)

recog_shared = slim.fully_connected(shared_tensor, num_outputs=128, activation_fn=leaky_relu)

# 生成的数据可以分别连接不同的输出层产生不同的结果

# 1维的输出层产生判别结果1或是0

disc = slim.fully_connected(shared_tensor, num_outputs=1, activation_fn=tf.nn.sigmoid)

disc = tf.squeeze(disc, -1)

# print ("disc",disc.get_shape()) # 0 or 1

# 10维的输出层产生分类结果 (样本标签)

recog_cat = slim.fully_connected(recog_shared, num_outputs=num_classes, activation_fn=None)

# 2维输出层产生重构造的隐含维度信息

recog_cont = slim.fully_connected(recog_shared, num_outputs=num_cont, activation_fn=tf.nn.sigmoid)

return disc, recog_cat, recog_cont

##################################################################

# 3.定义网络模型 : 定义 参数/输入/输出/中间过程(经过G/D)的输入输出

##################################################################

batch_size = 10 # 获取样本的批次大小32

classes_dim = 10 # 10 classes

con_dim = 2 # 隐含信息变量的维度, 应节点为z_con

rand_dim = 38 # 一般噪声的维度, 应节点为z_rand, 二者都是符合标准高斯分布的随机数。

n_input = 784 # 28 * 28

x = tf.placeholder(tf.float32, [None, n_input]) # x为输入真实图片images

y = tf.placeholder(tf.int32, [None]) # y为真实标签labels

z_con = tf.random_normal((batch_size, con_dim)) # 2列

z_rand = tf.random_normal((batch_size, rand_dim)) # 38列

z = tf.concat(axis=1, values=[tf.one_hot(y, depth=classes_dim), z_con, z_rand]) # 50列 shape = (10, 50)

gen = generator(z) # shape = (10, 28, 28, 1)

genout = tf.squeeze(gen, -1) # shape = (10, 28, 28)

# labels for discriminator

# y_real = tf.ones(batch_size) # 真

# y_fake = tf.zeros(batch_size) # 假

# 判别器

disc_real, class_real, _ = discriminator(x)

disc_fake, class_fake, con_fake = discriminator(gen)

pred_class = tf.argmax(class_fake, dimension=1)

##################################################################

# 4.定义损失函数和优化器

##################################################################

# 判别器 loss

# loss_d_r = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=disc_real, labels=y_real)) # 1

# loss_d_f = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=disc_fake, labels=y_fake)) # 0

loss_d = tf.reduce_sum(tf.square(disc_real-1) + tf.square(disc_fake-0)) / 2

# print ('loss_d', loss_d.get_shape())

# generator loss

loss_g = tf.reduce_sum(tf.square(disc_fake-1)) / 2

# categorical factor loss 分类因素损失

loss_cf = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(logits=class_fake, labels=y))

loss_cr = tf.reduce_mean(tf.nn.sparse_softmax_cross_entropy_with_logits(logits=class_real, labels=y))

loss_c = (loss_cf + loss_cr) / 2

# continuous factor loss 隐含信息变量的损失

loss_con = tf.reduce_mean(tf.square(con_fake - z_con))

# 获得各个网络中各自的训练参数列表

t_vars = tf.trainable_variables()

d_vars = [var for var in t_vars if 'discriminator' in var.name]

g_vars = [var for var in t_vars if 'generator' in var.name]

# 优化器

disc_global_step = tf.Variable(0, trainable=False)

gen_global_step = tf.Variable(0, trainable=False)

train_disc = tf.train.AdamOptimizer(0.0001).minimize(loss_d + loss_c + loss_con, var_list=d_vars,

global_step=disc_global_step)

train_gen = tf.train.AdamOptimizer(0.001).minimize(loss_g + loss_c + loss_con, var_list=g_vars,

global_step=gen_global_step)

##################################################################

# 5.训练与测试

# 建立session,循环中使用run来运行两个优化器

##################################################################

training_epochs = 30

display_step = 1

config = tf.ConfigProto()

config.gpu_options.per_process_gpu_memory_fraction = 0.4

with tf.Session(config=config) as sess:

sess.run(tf.global_variables_initializer())

print(time.asctime(time.localtime(time.time())))

for epoch in range(training_epochs):

# print(time.asctime(time.localtime(time.time())))

timer = Timer()

timer.tic()

avg_cost = 0.

total_batch = int(mnist.train.num_examples / batch_size) # 5500

# 遍历全部数据集

for i in range(total_batch):

batch_xs, batch_ys = mnist.train.next_batch(batch_size) # 取数据x:images, y:labels

feeds = {x: batch_xs, y: batch_ys}

# Fit training using batch data

# 输入数据,运行优化器

l_disc, _, l_d_step = sess.run([loss_d, train_disc, disc_global_step], feeds)

l_gen, _, l_g_step = sess.run([loss_g, train_gen, gen_global_step], feeds)

timer.toc()

# 显示训练中的详细信息

if epoch % display_step == 0:

print("Epoch:", '%04d' % (epoch + 1), "cost=", "{:.9f} ".format(l_disc), l_gen, 'speed:', '{:.2f}'.format(timer.average_time), 's')

print("完成!")

# 测试

print("Result: loss_d = ", loss_d.eval({x: mnist.test.images[:batch_size], y: mnist.test.labels[:batch_size]}),

"\n loss_g = ", loss_g.eval({x: mnist.test.images[:batch_size], y: mnist.test.labels[:batch_size]}))

##################################################################

# 6.可视化

##################################################################

# 根据图片模拟生成图片

show_num = 10

gensimple, d_class, inputx, inputy, con_out = sess.run(

[genout, pred_class, x, y, con_fake],

feed_dict={x: mnist.test.images[:batch_size], y: mnist.test.labels[:batch_size]})

f, a = plt.subplots(2, 10, figsize=(10, 2)) # figure 1000*20 , 分为10张子图

for i in range(show_num):

a[0][i].imshow(np.reshape(inputx[i], (28, 28)))

a[1][i].imshow(np.reshape(gensimple[i], (28, 28)))

# 输出 判决预测种类/真实输入种类/隐藏信息

print("d_class", d_class[i], "inputy", inputy[i], "con_out", con_out[i])

plt.draw()

plt.show()

# 将隐含信息分布对应的图片打印出来

my_con = tf.placeholder(tf.float32, [batch_size, 2])

myz = tf.concat(axis=1, values=[tf.one_hot(y, depth=classes_dim), my_con, z_rand])

mygen = generator(myz)

mygenout = tf.squeeze(mygen, -1)

my_con1 = np.ones([10, 2])

a = np.linspace(0.0001, 0.99999, 10)

y_input = np.ones([10])

figure = np.zeros((28 * 10, 28 * 10))

my_rand = tf.random_normal((10, rand_dim))

for i in range(10):

for j in range(10):

my_con1[j][0] = a[i]

my_con1[j][1] = a[j]

y_input[j] = j

mygenoutv = sess.run(mygenout, feed_dict={y: y_input, my_con: my_con1})

for jj in range(10):

digit = mygenoutv[jj].reshape(28, 28)

figure[i * 28: (i + 1) * 28,

jj * 28: (jj + 1) * 28] = digit

plt.figure(figsize=(10, 10))

plt.imshow(figure, cmap='Greys_r')

plt.show()

这是最后的运行结果: