Python 2 Crawler (9) -- Scrapy & BeautifulSoup: Crawling CSDN Blog Posts Again

Preface

My post Python3 Crawler (5) used basic urllib functions and regular expressions to crawl the information for all of my CSDN blog posts.

Link: Python3 Crawler (5) -- Single-Threaded Crawl of All My CSDN Blog Posts

In the previous post, Python3 Crawler (8) -- BeautifulSoup: Crawling CSDN Blog Posts Again, we re-implemented the same task with BeautifulSoup4.

Now that we know both Scrapy and BeautifulSoup, why not have them work together? Using the Scrapy framework, though, meant I had to move back to Ubuntu and Python 2.7. I do hope Scrapy supports Python 3 soon~

Hehe, yes, I'm crawling my CSDN posts yet again. Why? No special reason; I just want a simple comparison. Of course, you can crawl something else instead~

The theme of the blog home page has not changed this time, so I won't show it again; see Crawler (8).

Creating the Scrapy Project

First, create the crawler project with the command scrapy startproject csdnSpider;

Then, in the spiders directory, create the file CSDNSpider.py, which holds our main program. The directory structure is as follows:
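The original screenshot is not reproduced here. As a rough sketch only, assuming the default layout that scrapy startproject generates (the exact set of generated files can differ between Scrapy versions), the project would look something like this, with CSDNSpider.py being the one file added by hand:

```text
csdnSpider/
├── scrapy.cfg
└── csdnSpider/
    ├── __init__.py
    ├── items.py
    ├── pipelines.py
    ├── settings.py
    └── spiders/
        ├── __init__.py
        └── CSDNSpider.py
```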

Defining the Item

Find and open the items.py file and define the fields we want to scrape:

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# http://doc.scrapy.org/en/latest/topics/items.html

import scrapy
from scrapy.item import Item, Field


class CsdnspiderItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    pass


class PaperItem(Item):
    title = Field()      # post title
    link = Field()       # post link
    writeTime = Field()  # date the post was written
    readers = Field()    # number of views
    comments = Field()   # number of comments
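To see why the fields are declared up front: a Scrapy Item behaves like a dict that only accepts the declared keys, so a misspelled field name fails loudly instead of being stored silently. The sketch below imitates that behavior with the standard library only. FieldCheckedItem and try_set are hypothetical stand-ins written for this illustration, not Scrapy's implementation.

```python
class FieldCheckedItem(dict):
    """Hypothetical stand-in for scrapy.Item: a dict limited to declared keys."""

    # Same field names as PaperItem in items.py
    fields = ("title", "link", "writeTime", "readers", "comments")

    def __setitem__(self, key, value):
        if key not in self.fields:
            raise KeyError("%s does not support field: %s"
                           % (type(self).__name__, key))
        dict.__setitem__(self, key, value)


def try_set(item, key, value):
    """Return True if the assignment was accepted, False if it was rejected."""
    try:
        item[key] = value
    except KeyError:
        return False
    return True


paper = FieldCheckedItem()
accepted = try_set(paper, "title", "Hello Scrapy")
rejected = try_set(paper, "author", "yr")  # "author" was never declared
print(accepted, rejected, dict(paper))
```

With the real PaperItem, the same mistake (assigning to a field that was not declared with Field()) also raises a KeyError.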

Implementing CSDNSpider

Open the CSDNSpider.py file you created and implement the code:

# -*- coding: UTF-8 -*-
############################################################################
# Program: CSDN blog crawler
# Purpose: scrape all of my CSDN blog posts
# Date: 2016/06/01
# Author: yr
############################################################################
import json
import re
import sys

import scrapy

# Import the framework's built-in base class scrapy.spider.Spider
try:
    from scrapy.spider import Spider
except ImportError:
    from scrapy.spider import BaseSpider as Spider

# Import CrawlSpider, the class commonly used for crawling ordinary sites, and the Rule class
from scrapy.contrib.spiders import CrawlSpider, Rule
from scrapy.contrib.linkextractors.lxmlhtml import LxmlLinkExtractor
from bs4 import BeautifulSoup

from csdnSpider.items import PaperItem

# Set the default encoding (a Python 2 only workaround)
reload(sys)
sys.setdefaultencoding('utf-8')

add = 0  # running total of scraped posts


class CSDNPaperSpider(CrawlSpider):
    name = "csdnSpider"
    allowed_domains = ["csdn.net"]
    # Entry page for the crawl
    start_urls = ["http://blog.csdn.net/fly_yr/article/list/1"]
    # Custom rule: follow the paginated list pages and parse each of them
    rules = [Rule(LxmlLinkExtractor(allow=(r'/article/list/\d{,2}')), follow=True, callback='parseItem')]

    # Extract the data of one list page into Items
    def parseItem(self, response):
        global add
        items = []
        data = response.body
        soup = BeautifulSoup(data, "html5lib")
        # Find every blog post block on the page
        sites = soup.find_all('div', "list_item article_item")
        for site in sites:
            item = PaperItem()
            # Title, link, date, number of views, number of comments
            item['title'] = site.find('span', "link_title").a.get_text()
            item['link'] = site.find('span', "link_title").a.get('href')
            item['writeTime'] = site.find('span', "link_postdate").get_text()
            # The counts are the text between the parentheses
            item['readers'] = re.findall(r'\((.*?)\)', site.find('span', "link_view").get_text())[0]
            item['comments'] = re.findall(r'\((.*?)\)', site.find('span', "link_comments").get_text())[0]
            add += 1
            items.append(item)
        # Format the string so Python 2 prints text rather than a tuple
        print("The total number: %d" % add)
        return items
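The spider relies on two regular expressions: one in the Rule that decides which links to follow, and one that pulls the count out of the parentheses. The sketch below exercises both with the standard library only. The third URL and the sample span texts are made up for illustration, count_from is a hypothetical helper, and re.search is used on the assumption that the link extractor applies the allow pattern as an unanchored search.

```python
import re

LIST_PAGE = re.compile(r'/article/list/\d{,2}')  # \d{,2} means zero to two digits
COUNT_IN_PARENS = re.compile(r'\((.*?)\)')       # non-greedy: text inside ( )

urls = [
    "http://blog.csdn.net/fly_yr/article/list/1",
    "http://blog.csdn.net/fly_yr/article/list/12",
    "http://blog.csdn.net/fly_yr/article/details/1",  # made-up post URL
]
# Only the paginated list pages match the rule
followed = [u for u in urls if LIST_PAGE.search(u)]


def count_from(text):
    """Return the text inside the first pair of parentheses, or None."""
    found = COUNT_IN_PARENS.findall(text)
    return found[0] if found else None


readers = count_from("Views (1024)")         # made-up sample text
missing = count_from("no parentheses here")  # no match, so None
print(followed, readers, missing)
```

Note that parseItem indexes the findall result with [0] directly, so it would raise an IndexError on a span without parentheses; returning None as above is one way to tolerate that.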

Defining the Pipeline

Find and open the pipelines.py file and add the code:

# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

import codecs
import json


class CsdnspiderPipeline(object):
    def process_item(self, item, spider):
        return item


class JsonWithEncodingCSDNPipeline(object):
    def __init__(self):
        self.file = codecs.open('papers.json', 'w', encoding='utf-8')

    def process_item(self, item, spider):
        # Each field is written as its own JSON string;
        # ensure_ascii=False keeps Chinese text readable in the file
        writeTime = json.dumps("Date: " + str(item['writeTime']), ensure_ascii=False) + "\n"
        title = json.dumps("Title: " + str(item['title']), ensure_ascii=False) + "\n"
        link = json.dumps("Link: " + str(item['link']), ensure_ascii=False) + "\n"
        readers = json.dumps("Views: " + str(item['readers']), ensure_ascii=False) + "\t"
        comments = json.dumps("Comments: " + str(item['comments']), ensure_ascii=False) + "\n\n"
        line = writeTime + title + link + readers + comments
        self.file.write(line)
        return item

    # Scrapy calls close_spider() on a pipeline when the spider finishes;
    # a method named spider_closed() is not called unless it is connected to the signal
    def close_spider(self, spider):
        self.file.close()
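The pipeline above writes each field as a separate JSON string, which is easy to read but is not one parseable JSON document. As an alternative, and not what the original pipeline does, the sketch below writes one JSON object per line so the file can be loaded back later. The helper names write_items and read_items, the file name papers.jl, and the sample record are all assumptions made for this illustration.

```python
import io
import json
import os
import shutil
import tempfile


def write_items(path, items):
    """Write one JSON object per line, keeping non-ASCII text readable."""
    with io.open(path, "w", encoding="utf-8") as f:
        for item in items:
            line = json.dumps(item, ensure_ascii=False, sort_keys=True)
            if isinstance(line, bytes):  # only possible on Python 2
                line = line.decode("utf-8")
            f.write(line + u"\n")


def read_items(path):
    """Load the file back into a list of dicts, skipping blank lines."""
    with io.open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


# Made-up record with the same field names as PaperItem
sample = [{
    "title": u"Scrapy 入门",
    "link": u"/fly_yr/article/details/1",
    "writeTime": u"2016-06-01 10:00",
    "readers": u"10",
    "comments": u"2",
}]

tmp_dir = tempfile.mkdtemp()
path = os.path.join(tmp_dir, "papers.jl")
write_items(path, sample)
loaded = read_items(path)
shutil.rmtree(tmp_dir)  # clean up the temporary directory
print(loaded == sample)
```

Inside a pipeline, process_item could pass dict(item) to json.dumps in the same way.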

Modifying the Settings File

Find and open the settings.py file:

# -*- coding: utf-8 -*-

# Scrapy settings for csdnSpider project
#
# For simplicity, this file contains only the most important settings by
# default. All the other settings are documented here:
#
#     http://doc.scrapy.org/en/latest/topics/settings.html
#

BOT_NAME = 'csdnSpider'

SPIDER_MODULES = ['csdnSpider.spiders']
NEWSPIDER_MODULE = 'csdnSpider.spiders'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
#USER_AGENT = 'csdnSpider (+http://www.yourdomain.com)'

ITEM_PIPELINES = {
    'csdnSpider.pipelines.JsonWithEncodingCSDNPipeline': 300,
}

LOG_LEVEL = 'INFO'
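The number assigned to each pipeline in ITEM_PIPELINES sets the order in which items pass through the enabled pipelines, from the lowest value to the highest. The sketch below only illustrates that ordering with a plain dict. Enabling CsdnspiderPipeline with the value 100 is a hypothetical addition for the example; the project itself enables just one pipeline.

```python
# Hypothetical settings fragment: two pipelines enabled instead of one
ITEM_PIPELINES = {
    'csdnSpider.pipelines.JsonWithEncodingCSDNPipeline': 300,
    'csdnSpider.pipelines.CsdnspiderPipeline': 100,  # assumption, for illustration only
}

# Items go through the pipelines in ascending order of their values
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(run_order)
```

With these values, the pass-through CsdnspiderPipeline would see each item before the JSON writer does.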

Running

Run scrapy crawl csdnSpider in the project root directory to get the result:

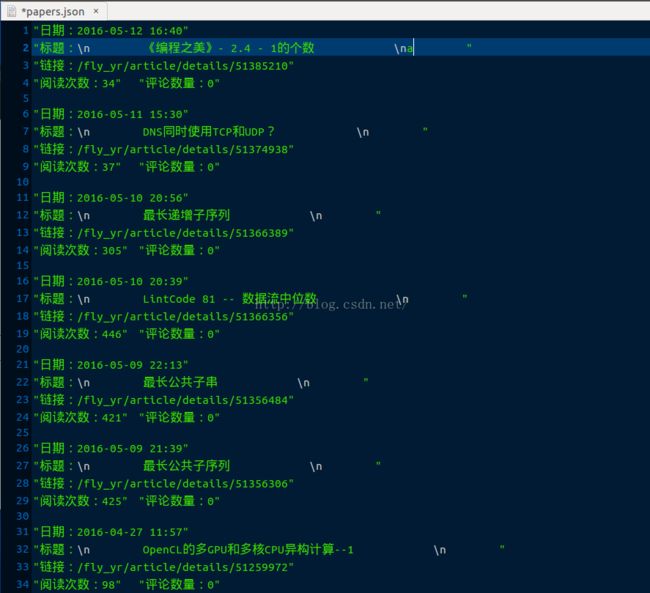

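I currently have 394 blog posts, so the result is correct. Open the papers.json file in the project root directory to view the scraped post information: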

The complete code is on GitHub, go take a look~