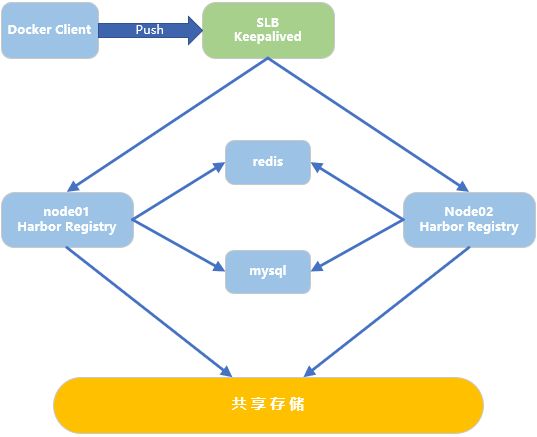

1 Introduction to high availability with multiple Harbor instances

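Shared backend storage is a fairly standard approach: multiple Harbor instances share one storage backend, so an image persisted by any instance can be read by all the others. A front-end load balancer such as Keepalived distributes requests across the instances, which provides load balancing and removes the single point of failure. The architecture is shown in the diagram.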

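Notes on the design:

Shared storage: Harbor's storage backend currently supports AWS S3, OpenStack Swift, Ceph, and others; this lab environment uses NFS.

Shared sessions: Harbor stores sessions in Redis by default. Running Redis as a separate service lets all instances share sessions. The standalone Redis can itself be made highly available with Redis Sentinel or Redis Cluster; this lab environment uses a single Redis instance.

Database high availability: multiple MySQL instances cannot share one set of MySQL data files, so Harbor's database is moved out of Harbor and all instances use one external database. The external MySQL can be made highly available with MySQL Cluster or a similar setup; this lab environment uses a single MySQL instance.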

2 Deployment

2.1 Preparation

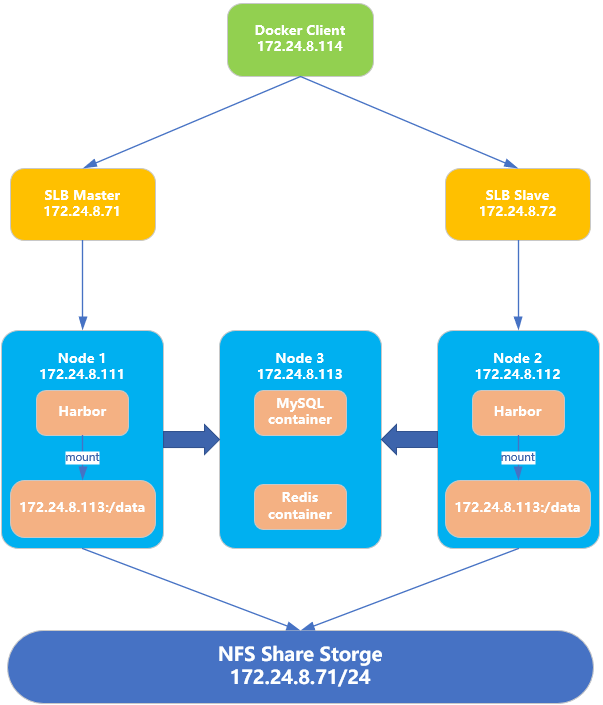

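Architecture diagram:

Prerequisites:

- Install docker and docker-compose (see 《009.Docker Compose基础使用》);

- Synchronize clocks with NTP (recommended);

- Open the required firewall and SELinux rules, or disable both;

- Add a hosts entry on the nfsslb and slb02 nodes: echo "172.24.8.200 reg.harbor.com" >> /etc/hosts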
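
The plain `echo ... >> /etc/hosts` above appends a duplicate line every time it is re-run. A minimal sketch of an idempotent variant follows; `add_host_entry` is a hypothetical helper that is not part of the original steps, and it takes the file path as a parameter so it can be tried on a scratch file first.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears as a hostname
# on a non-comment line. Hypothetical helper, for illustration only.
add_host_entry() {
    file=$1 ip=$2 name=$3
    if awk -v n="$name" '
        /^[ \t]*#/ { next }                       # skip comment lines
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit (found ? 0 : 1) }' "$file" 2>/dev/null; then
        return 0                                  # entry already present
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example (as root): add_host_entry /etc/hosts 172.24.8.200 reg.harbor.com
```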

2.2 Create the NFS shares

    [root@nfsslb ~]# yum -y install nfs-utils*
    [root@nfsslb ~]# mkdir /myimages      # shared image storage
    [root@nfsslb ~]# mkdir /mydatabase    # database data
    [root@nfsslb ~]# echo -e "/dev/vg01/lv01 /myimages ext4 defaults 0 0\n/dev/vg01/lv02 /mydatabase ext4 defaults 0 0">> /etc/fstab
    [root@nfsslb ~]# mount -a
    [root@nfsslb ~]# vi /etc/exports
    /myimages 172.24.8.0/24(rw,no_root_squash)
    /mydatabase 172.24.8.0/24(rw,no_root_squash)
    [root@nfsslb ~]# systemctl start nfs.service
    [root@nfsslb ~]# systemctl enable nfs.service

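Note: the NFS server node uses dedicated LVM volumes as the NFS export directories and configures the corresponding shares. For more on NFS configuration, see 《004.NFS配置实例》.

2.3 Mount the NFS shares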

    root@docker01:~# apt-get -y install nfs-common
    root@docker02:~# apt-get -y install nfs-common
    root@docker03:~# apt-get -y install nfs-common

    root@docker01:~# mkdir /data
    root@docker02:~# mkdir /data
    root@docker03:~# mkdir /database    # mount point must exist before mount -a

    root@docker01:~# echo "172.24.8.71:/myimages /data nfs defaults,_netdev 0 0">> /etc/fstab
    root@docker02:~# echo "172.24.8.71:/myimages /data nfs defaults,_netdev 0 0">> /etc/fstab
    root@docker03:~# echo "172.24.8.71:/mydatabase /database nfs defaults,_netdev 0 0">> /etc/fstab

    root@docker01:~# mount -a
    root@docker02:~# mount -a
    root@docker03:~# mount -a

    root@docker03:~# mkdir -p /database/mysql
    root@docker03:~# mkdir -p /database/redis
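
Before continuing, it can be worth confirming that each mount point is really backed by NFS, because `mount -a` may report nothing even when an entry was skipped. The sketch below is one way to check; `is_nfs_mount` is a hypothetical helper (not from the original article) that reads a mounts table in `/proc/mounts` format.

```shell
#!/bin/sh
# is_nfs_mount MOUNTPOINT [MOUNTS_FILE]
# Succeeds if MOUNTPOINT is listed with an NFS filesystem type (nfs, nfs4).
# MOUNTS_FILE defaults to /proc/mounts; hypothetical helper for illustration.
is_nfs_mount() {
    mnt=$1
    table=${2:-/proc/mounts}
    # Fields in /proc/mounts: device, mount point, fs type, options, ...
    awk -v m="$mnt" '
        $2 == m && $3 ~ /^nfs/ { ok = 1 }
        END { exit (ok ? 0 : 1) }' "$table"
}

# Example on docker01: is_nfs_mount /data && echo "/data is on NFS"
```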

2.4 Deploy the external MySQL and Redis

    root@docker03:~# mkdir docker_compose/
    root@docker03:~# cd docker_compose/
    root@docker03:~/docker_compose# vi docker-compose.yml
    version: '3'
    services:
      mysql-server:
        hostname: mysql-server
        restart: always
        container_name: mysql-server
        image: mysql:5.7
        volumes:
          - /database/mysql:/var/lib/mysql
        command: --character-set-server=utf8
        ports:
          - '3306:3306'
        environment:
          MYSQL_ROOT_PASSWORD: x19901123
    #    logging:
    #      driver: "syslog"
    #      options:
    #        syslog-address: "tcp://172.24.8.112:1514"
    #        tag: "mysql"
      redis:
        hostname: redis-server
        container_name: redis-server
        restart: always
        image: redis:3
        volumes:
          - /database/redis:/data
        ports:
          - '6379:6379'
    #    logging:
    #      driver: "syslog"
    #      options:
    #        syslog-address: "tcp://172.24.8.112:1514"
    #        tag: "redis"

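Tip: the log container is a Harbor service. While Harbor is not yet deployed, the logging settings must stay commented out. After Harbor is deployed, uncomment them and run up again.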

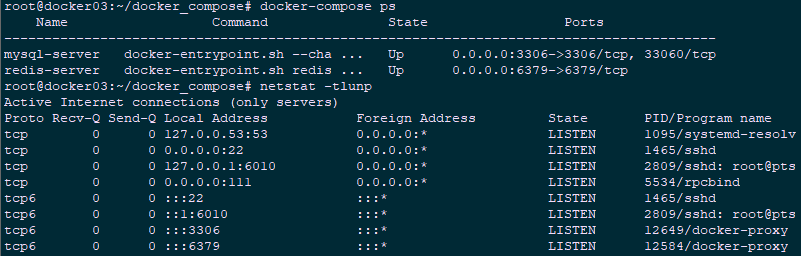

    root@docker03:~/docker_compose# docker-compose up -d
    root@docker03:~/docker_compose# docker-compose ps    # confirm the containers are up
    root@docker03:~/docker_compose# netstat -tlunp       # confirm the ports are listening
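
Reading the `netstat` listing by eye works, but the port check can also be scripted. The following is a minimal sketch, assuming the usual Linux `netstat -tln` column layout (local address in column 4, state in column 6); `port_listening` is a hypothetical helper name, not something the original article defines.

```shell
#!/bin/sh
# port_listening PORT
# Reads `netstat -tln` style output on stdin and succeeds if some socket
# in LISTEN state is bound to PORT. Hypothetical helper for illustration.
port_listening() {
    awk -v port="$1" '
        $6 == "LISTEN" {
            n = split($4, parts, ":")     # port is the last ":"-separated part
            if (parts[n] == port) found = 1
        }
        END { exit (found ? 0 : 1) }'
}

# Example on docker03:
#   netstat -tln | port_listening 3306 && echo "MySQL is listening"
#   netstat -tln | port_listening 6379 && echo "Redis is listening"
```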

2.5 Download Harbor

    root@docker01:~# wget https://storage.googleapis.com/harbor-releases/harbor-offline-installer-v1.5.4.tgz
    root@docker01:~# tar xvf harbor-offline-installer-v1.5.4.tgz

Tip: repeat the same steps on the docker02 node.

2.6 Import the registry tables

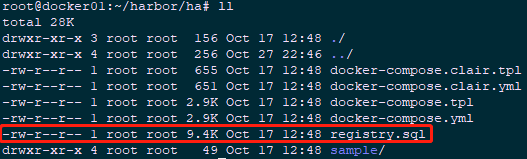

    root@docker01:~# apt-get -y install mysql-client
    root@docker01:~# cd harbor/ha/
    root@docker01:~/harbor/ha# ll

    root@docker01:~/harbor/ha# mysql -h172.24.8.113 -uroot -p
    mysql> set session sql_mode='STRICT_TRANS_TABLES,ERROR_FOR_DIVISION_BY_ZERO,NO_AUTO_CREATE_USER,NO_ENGINE_SUBSTITUTION';    # sql_mode must be changed first
    mysql> source ./registry.sql    # import the registry tables into the external database
    mysql> exit

Tip: the import only needs to be done once.

2.7 Modify the Harbor configuration

    root@docker01:~/harbor/ha# cd /root/harbor/
    root@docker01:~/harbor# vi harbor.cfg    # edit the Harbor configuration file
    hostname = 172.24.8.111
    db_host = 172.24.8.113
    db_password = x19901123
    db_port = 3306
    db_user = root
    redis_url = 172.24.8.113:6379
    root@docker01:~/harbor# vi prepare
    empty_subj = "/C=/ST=/L=/O=/CN=/"
    # change it to:
    empty_subj = "/C=US/ST=California/L=Palo Alto/O=VMware, Inc./OU=Harbor/CN=notarysigner"
    root@docker01:~/harbor# ./prepare    # apply the configuration

Tip: apply the same configuration on docker02.

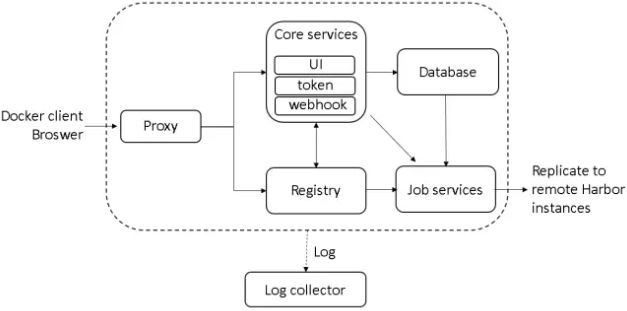

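Because MySQL and Redis are external, and the architecture diagram shows that the database-related components are UI and jobservice, their configuration must be changed accordingly. Running the prepare command automatically syncs the database parameters into ./common/config/ui/env and ./common/config/adminserver/env.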

    root@docker01:~/harbor# cat ./common/config/ui/env    # verify
    _REDIS_URL=172.24.8.113:6379
    root@docker01:~/harbor# cat ./common/config/adminserver/env | grep MYSQL    # verify
    MYSQL_HOST=172.24.8.113
    MYSQL_PORT=3306
    MYSQL_USR=root
    MYSQL_PWD=x19901123
    MYSQL_DATABASE=registry
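
The same verification can be scripted so that both Harbor nodes are checked the same way. This is only a sketch: `env_value` and `check_env` are hypothetical helpers, and they assume the env files contain plain `KEY=value` lines as in the output above.

```shell
#!/bin/sh
# env_value FILE KEY: print the value of the first KEY=... line in FILE.
env_value() {
    sed -n "s/^$2=//p" "$1" | head -n 1
}

# check_env FILE KEY EXPECTED: compare the stored value with EXPECTED.
check_env() {
    actual=$(env_value "$1" "$2")
    if [ "$actual" = "$3" ]; then
        echo "OK   $2=$actual"
    else
        echo "FAIL $2: expected '$3', got '$actual'"
        return 1
    fi
}

# Example, run from ~/harbor after ./prepare:
#   check_env ./common/config/adminserver/env MYSQL_HOST 172.24.8.113
#   check_env ./common/config/ui/env _REDIS_URL 172.24.8.113:6379
```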

2.8 Deploy with docker-compose

    root@docker01:~/harbor# cp docker-compose.yml docker-compose.yml.bak
    root@docker01:~/harbor# cp ha/docker-compose.yml .
    root@docker01:~/harbor# vi docker-compose.yml
    log:
      ports:
        - 1514:10514    # the log service must also serve the external Redis and MySQL, so only this entry needs changing
    root@docker01:~/harbor# ./install.sh

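Tip: because Redis and MySQL are deployed externally, the redis and mysql services must be removed or commented out in docker-compose.yml, along with the other services' dependencies on them. The official Harbor package already ships a docker-compose file modified this way, in the ha directory.

Deploy Harbor on the docker02 node by following sections 2.5 to 2.8 (the import in 2.6 only needs to run once).

2.9 Recreate the external Redis and MySQL

Uncomment the logging settings.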

    root@docker03:~/docker_compose# docker-compose up -d
    root@docker03:~/docker_compose# docker-compose ps    # confirm the containers are up
    root@docker03:~/docker_compose# netstat -tlunp       # confirm the ports are listening

2.10 Install Keepalived

    [root@nfsslb ~]# yum -y install gcc gcc-c++ make kernel-devel kernel-tools kernel-tools-libs kernel libnl libnl-devel libnfnetlink-devel openssl-devel
    [root@nfsslb ~]# cd /tmp/
    [root@nfsslb tmp]# tar -zxvf keepalived-2.0.8.tar.gz
    [root@nfsslb tmp]# cd keepalived-2.0.8/
    [root@nfsslb keepalived-2.0.8]# ./configure --sysconf=/etc --prefix=/usr/local/keepalived
    [root@nfsslb keepalived-2.0.8]# make && make install

Tip: repeat the same steps on the slb02 node.

2.11 Configure Keepalived

    [root@nfsslb ~]# cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
    root@docker01:~# scp harbor/ha/sample/active_active/keepalived_active_active.conf root@172.24.8.71:/etc/keepalived/keepalived.conf
    root@docker01:~# scp harbor/ha/sample/active_active/check.sh root@172.24.8.71:/usr/local/bin/check.sh
    root@docker01:~# scp harbor/ha/sample/active_active/check.sh root@<slb02-IP>:/usr/local/bin/check.sh
    [root@nfsslb ~]# chmod u+x /usr/local/bin/check.sh
    [root@slb02 ~]# chmod u+x /usr/local/bin/check.sh
    [root@nfsslb ~]# vi /etc/keepalived/keepalived.conf
    global_defs {
      router_id haborlb
    }
    vrrp_sync_groups VG1 {
      group {
        VI_1
      }
    }
    vrrp_instance VI_1 {
      interface eth0

      track_interface {
        eth0
      }

      state MASTER
      virtual_router_id 51
      priority 10

      virtual_ipaddress {
        172.24.8.200
      }
      advert_int 1
      authentication {
        auth_type PASS
        auth_pass d0cker
      }

    }
    virtual_server 172.24.8.200 80 {
      delay_loop 15
      lb_algo rr
      lb_kind DR
      protocol TCP
      nat_mask 255.255.255.0
      persistence_timeout 10

      real_server 172.24.8.111 80 {
        weight 10
        MISC_CHECK {
          misc_path "/usr/local/bin/check.sh 172.24.8.111"
          misc_timeout 5
        }
      }

      real_server 172.24.8.112 80 {
        weight 10
        MISC_CHECK {
          misc_path "/usr/local/bin/check.sh 172.24.8.112"
          misc_timeout 5
        }
      }
    }
    [root@nfsslb ~]# scp /etc/keepalived/keepalived.conf root@<slb02-IP>:/etc/keepalived/keepalived.conf    # copy the Keepalived configuration to slb02
    [root@slb02 ~]# vi /etc/keepalived/keepalived.conf
      state BACKUP
      priority 8

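Tip: the official Harbor package already provides the Keepalived configuration file and the check script, which can be used as they are.

The slb02 node is set to BACKUP with a priority lower than the MASTER's; everything else keeps its default.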
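
Instead of editing the copied file by hand on slb02, the two differing settings can be rewritten with `sed`. The sketch below is one possible way to do that; `to_backup_conf` is a hypothetical helper, and it assumes the configuration contains a single `state MASTER` line and a single `priority` line, as in the file shown above.

```shell
#!/bin/sh
# to_backup_conf PRIORITY
# Filter: reads a MASTER keepalived.conf on stdin and writes a BACKUP
# variant with the given priority on stdout. Indentation is preserved.
to_backup_conf() {
    sed -e 's/^\([[:space:]]*state[[:space:]]\{1,\}\)MASTER/\1BACKUP/' \
        -e "s/^\([[:space:]]*priority[[:space:]]\{1,\}\)[0-9]\{1,\}/\1$1/"
}

# Example on nfsslb (the output file name is arbitrary):
#   to_backup_conf 8 < /etc/keepalived/keepalived.conf > /tmp/keepalived.conf.slb02
```

Generating the BACKUP file from the MASTER file keeps the two configurations identical everywhere except `state` and `priority`.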

2.12 Configure LVS on the slb nodes

    [root@nfsslb ~]# yum -y install ipvsadm
    [root@nfsslb ~]# vi ipvsadm.sh
    #!/bin/sh
    #****************************************************************#
    # ScriptName: ipvsadm.sh
    # Author: xhy
    # Create Date: 2018-10-28 02:40
    # Modify Author: xhy
    # Modify Date: 2018-10-28 02:40
    # Version:
    #***************************************************************#
    sudo ifconfig eth0:0 172.24.8.200 broadcast 172.24.8.200 netmask 255.255.255.255 up
    sudo route add -host 172.24.8.200 dev eth0:0
    sudo echo "1" > /proc/sys/net/ipv4/ip_forward
    sudo ipvsadm -C
    sudo ipvsadm -A -t 172.24.8.200:80 -s rr
    sudo ipvsadm -a -t 172.24.8.200:80 -r 172.24.8.111:80 -g
    sudo ipvsadm -a -t 172.24.8.200:80 -r 172.24.8.112:80 -g
    sudo ipvsadm
    sudo sysctl -p
    [root@nfsslb ~]# chmod u+x ipvsadm.sh
    [root@nfsslb ~]# echo "source /root/ipvsadm.sh" >> /etc/rc.local    # run at boot
    [root@nfsslb ~]# ./ipvsadm.sh

Explanation of the example:

ipvsadm -A -t 172.24.8.200:80 -s rr -p 600

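This adds a virtual server with IP 172.24.8.200 to the kernel's virtual server table, sets its service port to 80 and its scheduling policy to round robin, and sets a persistence time of 600 seconds, during which requests from the same client keep going to the same Real Server.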

ipvsadm -a -t 172.24.8.200:80 -r 172.24.8.111:80 -g

This adds a new Real Server record to the virtual server 172.24.8.200, with the virtual server working in direct routing (DR) mode.

Tip: apply the same configuration on the slb02 node. For more on LVS, see https://www.cnblogs.com/itzgr/category/1367969.html.
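
When the set of Real Servers changes, the `ipvsadm` lines can be generated rather than typed. The sketch below only prints the commands (a dry run) so they can be reviewed before being piped to a shell; `lvs_dr_rules` is a hypothetical helper, and it uses only the `-A`, `-a`, `-t`, `-s rr`, `-r`, and `-g` options already explained above.

```shell
#!/bin/sh
# lvs_dr_rules VIP PORT REAL_SERVER...
# Prints the ipvsadm commands for one round-robin virtual service in
# direct routing (-g) mode. Nothing is executed; hypothetical helper.
lvs_dr_rules() {
    vip=$1 port=$2
    shift 2
    echo "ipvsadm -A -t ${vip}:${port} -s rr"
    for rs in "$@"; do
        echo "ipvsadm -a -t ${vip}:${port} -r ${rs}:${port} -g"
    done
}

# Example: review first, then apply as root.
#   lvs_dr_rules 172.24.8.200 80 172.24.8.111 172.24.8.112
#   lvs_dr_rules 172.24.8.200 80 172.24.8.111 172.24.8.112 | sh
```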

2.13 Configure the VIP on the Harbor nodes

    root@docker01:~# vi /etc/init.d/lvsrs
    #!/bin/bash
    # description:Script to start LVS DR real server.
    #
    . /etc/rc.d/init.d/functions
    VIP=172.24.8.200
    # set this to your VIP
    case "$1" in
        start)
        # Start LVS-DR mode for the real server on this machine and tune ARP handling for the VIP.
        echo "Start LVS of Real Server!"
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
        echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/ifconfig lo:0 $VIP broadcast $VIP netmask 255.255.255.255 up
        /sbin/route add -host $VIP dev lo:0
        sudo sysctl -p
        ;;
        stop)
        # Stop the LVS-DR real server loopback device(s).
        echo "Close LVS Director Server!"
        /sbin/ifconfig lo:0 down
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
        sudo sysctl -p
        ;;
        status)
        # Status of LVS-DR real server.
        islothere=`/sbin/ifconfig lo:0 | grep $VIP`
        isrothere=`netstat -rn | grep "lo:0" | grep $VIP`
        if [ ! "$islothere" -o ! "$isrothere" ];then
            # Either the route or the lo:0 device
            # not found.
            echo "LVS-DR real server Stopped!"
        else
            echo "LVS-DR real server Running..."
        fi
        ;;
        *)
        # Invalid entry.
        echo "$0: Usage: $0 {start|status|stop}"
        exit 1
        ;;
    esac
    root@docker01:~# chmod u+x /etc/init.d/lvsrs
    root@docker02:~# chmod u+x /etc/init.d/lvsrs

2.14 Start the services

    root@docker01:~# service lvsrs start
    root@docker02:~# service lvsrs start
    [root@nfsslb ~]# systemctl start keepalived.service
    [root@nfsslb ~]# systemctl enable keepalived.service
    [root@slb02 ~]# systemctl start keepalived.service
    [root@slb02 ~]# systemctl enable keepalived.service

2.15 Verify

    root@docker01:~# ip addr    # verify that the VIP is active on docker01/02 and on the slb node
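
To avoid scanning the `ip addr` listing by eye on every node, the check can be reduced to a yes/no answer. This is a minimal sketch, assuming the usual `inet ADDRESS/PREFIX ...` lines in `ip addr` output; `has_vip` is a hypothetical helper, not part of the original article.

```shell
#!/bin/sh
# has_vip VIP
# Reads `ip addr` output on stdin and succeeds if VIP is configured as
# an IPv4 address on any interface. Hypothetical helper for illustration.
has_vip() {
    awk -v vip="$1" '
        $1 == "inet" {
            split($2, a, "/")             # strip the /prefix length
            if (a[1] == vip) ok = 1
        }
        END { exit (ok ? 0 : 1) }'
}

# Example on each node:
#   ip addr | has_vip 172.24.8.200 && echo "VIP present" || echo "VIP missing"
```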

3 Testing

    root@docker04:~# vi /etc/hosts
    172.24.8.200 reg.harbor.com
    root@docker04:~# vi /etc/docker/daemon.json
    {
      "insecure-registries": ["http://reg.harbor.com"]
    }
    root@docker04:~# systemctl restart docker.service
    # If the registry uses a certificate issued by a trusted CA, remove this setting from daemon.json.
    root@docker04:~# docker login reg.harbor.com    # log in to the registry
    Username: admin
    Password: Harbor12345

Tip: a public registry can be pulled from without logging in, but pushing still requires a login; a private registry requires a login for both pull and push.

    root@docker04:~# docker pull hello-world
    root@docker04:~# docker tag hello-world:latest reg.harbor.com/library/hello-world:xhy
    root@docker04:~# docker push reg.harbor.com/library/hello-world:xhy

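Tip: the new tag must point to a project that already exists, and you must have the appropriate permissions on it.

Browse to https://reg.harbor.com and log in with the default account admin/Harbor12345.

Reference: https://www.cnblogs.com/breezey/p/9444231.html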