hive学习(3)--- 较大数据下的hql执行速度

生成一个20000000条数据的wetherdata4.txt文件,追加到weather表中,形成20600000条数据下的查询性能情况分析:

第一条hql语句:

select cy.number,wh.*,pm.pmlevel

from cityinfo cy join weather wh on (cy.name=wh.city)

join pminfo pm on (pm.pmvalue=wh.pmvalue)

where wh.city='hangzhou' and wh.weath='fine' and wh.minTemperat in

( -18,25,43) order by maxTemperat DESC limit 20;

三表联合,有判断条件,有in,有排序

执行过程:

hive> select cy.number,wh.*,pm.pmlevel

> from cityinfo cy join weather wh on (cy.name=wh.city)

> join pminfo pm on (pm.pmvalue=wh.pmvalue)

> where wh.city='hangzhou' and wh.weath='fine' and wh.minTemperat in

> ( -18,25,43) order by maxTemperat DESC limit 20;

Total MapReduce jobs = 3

Launching Job 1 out of 3

Number of reduce tasks not specified. Estimated from input data size: 2

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_1400798880449_0059, Tracking URL = http://Master.Hadoop:8088/proxy/application_1400798880449_0059/

Kill Command = /usr/hadoop/bin/hadoop job -kill job_1400798880449_0059

Hadoop job information for Stage-1: number of mappers: 5; number of reducers: 2

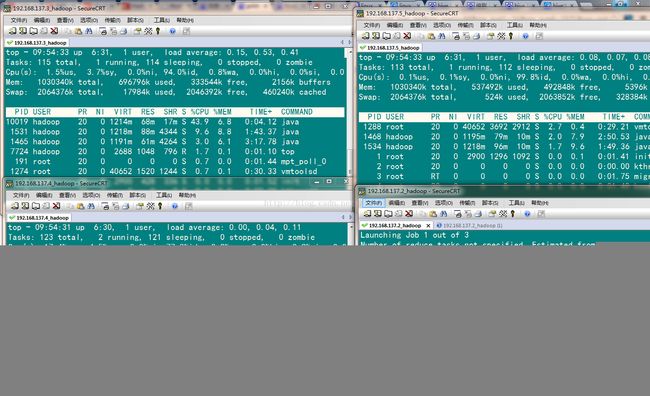

2014-05-24 09:54:29,453 Stage-1 map = 0%, reduce = 0%

2014-05-24 09:54:36,505 Stage-1 map = 20%, reduce = 0%, Cumulative CPU 1.32 sec

2014-05-24 09:54:37,528 Stage-1 map = 20%, reduce = 0%, Cumulative CPU 1.32 sec

2014-05-24 09:54:38,551 Stage-1 map = 20%, reduce = 0%, Cumulative CPU 1.32 sec

2014-05-24 09:54:39,948 Stage-1 map = 20%, reduce = 0%, Cumulative CPU 13.56 sec

2014-05-24 09:54:41,309 Stage-1 map = 21%, reduce = 0%, Cumulative CPU 17.58 sec

2014-05-24 09:54:42,509 Stage-1 map = 35%, reduce = 0%, Cumulative CPU 21.57 sec

2014-05-24 09:54:44,675 Stage-1 map = 35%, reduce = 0%, Cumulative CPU 26.09 sec

2014-05-24 09:54:46,488 Stage-1 map = 43%, reduce = 0%, Cumulative CPU 33.4 sec

2014-05-24 09:54:47,899 Stage-1 map = 49%, reduce = 0%, Cumulative CPU 35.77 sec

2014-05-24 09:54:48,957 Stage-1 map = 49%, reduce = 0%, Cumulative CPU 35.77 sec

2014-05-24 09:54:49,997 Stage-1 map = 87%, reduce = 0%, Cumulative CPU 46.21 sec

2014-05-24 09:54:51,026 Stage-1 map = 87%, reduce = 0%, Cumulative CPU 46.21 sec

2014-05-24 09:54:52,049 Stage-1 map = 87%, reduce = 7%, Cumulative CPU 47.25 sec

2014-05-24 09:54:53,085 Stage-1 map = 100%, reduce = 7%, Cumulative CPU 49.46 sec

2014-05-24 09:54:54,109 Stage-1 map = 100%, reduce = 57%, Cumulative CPU 51.61 sec

2014-05-24 09:54:55,141 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 53.67 sec

2014-05-24 09:54:56,177 Stage-1 map = 100%, reduce = 100%, Cumulative CPU 53.67 sec

MapReduce Total cumulative CPU time: 53 seconds 670 msec

Ended Job = job_1400798880449_0059

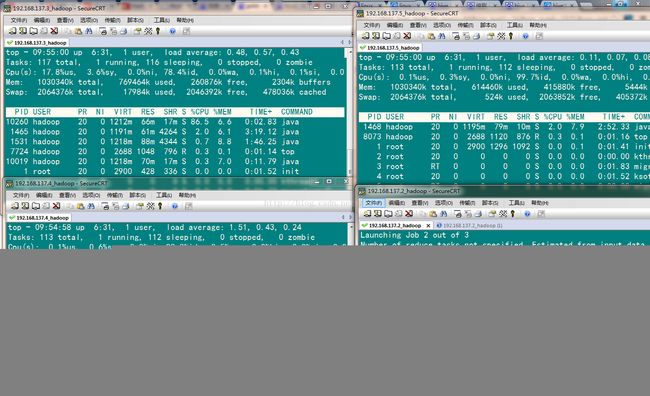

Launching Job 2 out of 3

Number of reduce tasks not specified. Estimated from input data size: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_1400798880449_0060, Tracking URL = http://Master.Hadoop:8088/proxy/application_1400798880449_0060/

Kill Command = /usr/hadoop/bin/hadoop job -kill job_1400798880449_0060

Hadoop job information for Stage-2: number of mappers: 2; number of reducers: 1

2014-05-24 09:54:59,962 Stage-2 map = 0%, reduce = 0%

2014-05-24 09:55:04,109 Stage-2 map = 50%, reduce = 0%, Cumulative CPU 0.94 sec

2014-05-24 09:55:05,130 Stage-2 map = 50%, reduce = 0%, Cumulative CPU 0.94 sec

2014-05-24 09:55:06,164 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 3.34 sec

2014-05-24 09:55:07,187 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 3.34 sec

2014-05-24 09:55:08,212 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 3.34 sec

2014-05-24 09:55:09,237 Stage-2 map = 100%, reduce = 0%, Cumulative CPU 3.34 sec

2014-05-24 09:55:10,258 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 5.39 sec

2014-05-24 09:55:11,294 Stage-2 map = 100%, reduce = 100%, Cumulative CPU 5.39 sec

MapReduce Total cumulative CPU time: 5 seconds 390 msec

Ended Job = job_1400798880449_0060

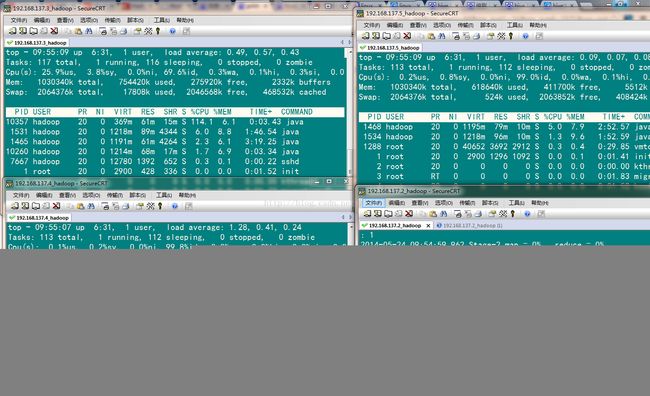

Launching Job 3 out of 3

Number of reduce tasks determined at compile time: 1

In order to change the average load for a reducer (in bytes):

set hive.exec.reducers.bytes.per.reducer=

In order to limit the maximum number of reducers:

set hive.exec.reducers.max=

In order to set a constant number of reducers:

set mapred.reduce.tasks=

Starting Job = job_1400798880449_0061, Tracking URL = http://Master.Hadoop:8088/proxy/application_1400798880449_0061/

Kill Command = /usr/hadoop/bin/hadoop job -kill job_1400798880449_0061

Hadoop job information for Stage-3: number of mappers: 1; number of reducers: 1

2014-05-24 09:55:15,223 Stage-3 map = 0%, reduce = 0%

2014-05-24 09:55:19,362 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.27 sec

2014-05-24 09:55:20,404 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.27 sec

2014-05-24 09:55:21,425 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.27 sec

2014-05-24 09:55:22,446 Stage-3 map = 100%, reduce = 0%, Cumulative CPU 1.27 sec

2014-05-24 09:55:23,534 Stage-3 map = 100%, reduce = 100%, Cumulative CPU 1.83 sec

2014-05-24 09:55:24,571 Stage-3 map = 100%, reduce = 100%, Cumulative CPU 1.83 sec

2014-05-24 09:55:25,603 Stage-3 map = 100%, reduce = 100%, Cumulative CPU 1.83 sec

MapReduce Total cumulative CPU time: 1 seconds 830 msec

Ended Job = job_1400798880449_0061

MapReduce Jobs Launched:

Job 0: Map: 5 Reduce: 2 Cumulative CPU: 53.67 sec HDFS Read: 1061735802 HDFS Write: 6918992 SUCCESS

Job 1: Map: 2 Reduce: 1 Cumulative CPU: 5.39 sec HDFS Read: 6923192 HDFS Write: 7232163 SUCCESS

Job 2: Map: 1 Reduce: 1 Cumulative CPU: 1.83 sec HDFS Read: 7232532 HDFS Write: 1126 SUCCESS

Total MapReduce CPU Time Spent: 1 minutes 0 seconds 890 msec

OK

从上面的日志看,一共执行了3次job,经过尝试发现,hive规划的job的次数取决于hql语句的复杂程度,具体规则是什么样的,有待后续研究,此处举一个例子:

select *

from cityinfo join weather on (cityinfo.name=weather.city)

where city='hangzhou' and weath='fine' and minTemperat=-16

上面这句语句,hql只会生成一个job,当然可能包括N个mapper和M个reducer

但是如果后面跟上排序的功能,就会变成2个job:

select *

from cityinfo join weather on (cityinfo.name=weather.city)

where city='hangzhou' and weath='fine' and minTemperat=-16

order by maxTemperat

执行过程中的数据倾斜于top信息: