Paper reading notes: "Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform"

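This post contains my study notes on the paper "Recovering Realistic Texture in Image Super-resolution by Deep Spatial Feature Transform", i.e. SFTGAN. Paper link: https://arxiv.org/pdf/1804.02815.pdf. I wrote these notes while reading. They follow my own, somewhat jumpy, train of thought and are mainly meant to help me understand the paper.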

Besides describing SFTGAN, this post also analyzes several loss functions used in SR.

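"Recovering Realistic Texture" means the goal is perceptual quality. The paper wants sharper, more realistic images, closer to what perceptual metrics such as NIQE reward, rather than the high PSNR that conventional SR methods focus on (the paper aims for "more realistic and visually pleasing textures"). "Spatial Feature Transform" refers to transforming features spatially. This is presumably the core of the paper: using spatial feature transformation to recover texture details.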

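In this paper, we show that it is possible to recover textures faithful to semantic classes. Does recovering textures according to semantic classes mean that textures are recovered differently for specific regions? Let's read on.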

we only need to modulate features of a few intermediate layers in a single network conditioned on semantic segmentation probability maps. The affine transformation parameters used for this spatial feature modulation are generated by Spatial Feature Transform (SFT) layers.

Conventional SR methods are trained with an MSE loss. This is a pixel-wise loss, and it tends to produce blurry, overly smoothed images ("conventional pixel-wise mean squared error (MSE) loss that tends to encourage blurry and overly-smoothed results"; it "encourage[s] the network to find an average of many plausible solutions and lead[s] to overly-smooth results"). Perceptual losses (Johnson et al.; Bruna et al., see the references below), which SRGAN also adopts, instead optimize in a feature space rather than in pixel space ("optimize a super-resolution model in a feature space instead of pixel space"; they "are proposed to enhance the visual quality by minimizing the error in a feature space"). An additional adversarial loss ("generating images with more natural details") makes the generated images look more natural. A local texture matching loss further helps, "partly reducing visually unpleasant artifacts".
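The "average of many plausible solutions" argument can be made concrete with a toy sketch. This is my own illustration, not code from the paper. Two equally plausible sharp 1-D edges stand in for the possible HR solutions of a single LR input. The prediction that minimizes the expected pixel-wise MSE over both targets is their blurry average, not either sharp edge.

```python
import numpy as np

# Two equally plausible HR signals for the same LR input (toy 1-D "edges"):
# a sharp edge starting at index 3 and a sharp edge starting at index 4.
hr_a = np.array([0, 0, 0, 1, 1, 1, 1, 1], dtype=float)
hr_b = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)
candidates = np.stack([hr_a, hr_b])

def expected_mse(pred, candidates):
    """Pixel-wise MSE of `pred`, averaged over all plausible HR targets."""
    return float(np.mean((candidates - pred) ** 2))

# The minimizer of the expected MSE is the pixel-wise mean: a half-step "blurred" edge.
mse_optimal = candidates.mean(axis=0)

print(expected_mse(hr_a, candidates))         # 0.0625: committing to one sharp edge
print(expected_mse(mse_optimal, candidates))  # 0.03125: the blurry average scores better
```

The MSE objective therefore actively prefers the over-smoothed output, which is what perceptual and adversarial losses try to counteract.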

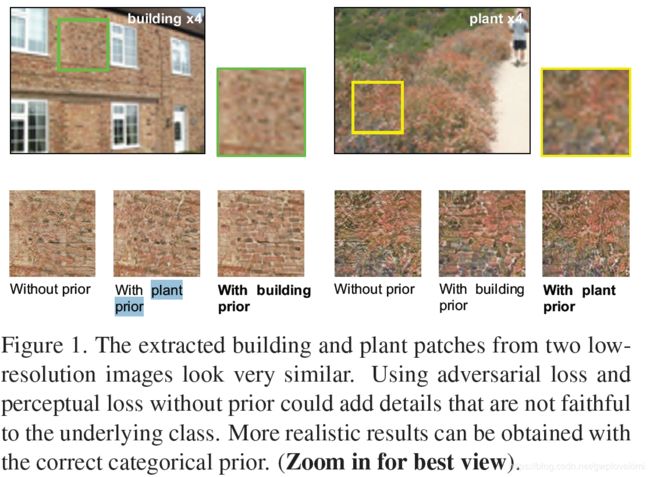

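Next is the problem of texture recovery, shown in the figure below. Different HR patches can have very similar LR patches. The "without prior" results in the figure are obtained with perceptual and adversarial losses, and their textures are not faithful enough, so additional guidance is needed. The authors therefore train two CNNs, one on a plant dataset and one on a building dataset, and show that they recover better texture details. Training specialized models for each semantic category shows that a semantic prior can change the SR result.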
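The many-to-one nature of downsampling can be sketched in a few lines. This sketch assumes 2x average pooling as the degradation, whereas SR benchmarks usually use bicubic downsampling. A high-frequency checkerboard texture and a flat gray patch produce exactly the same LR patch, so the LR input alone cannot tell the network which texture to generate.

```python
import numpy as np

def downsample2x(img):
    """2x average-pool downsampling (a simple stand-in for e.g. bicubic)."""
    h, w = img.shape
    return img.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

# Two very different 4x4 HR patches: a fine checkerboard and a flat gray patch.
checker = (np.indices((4, 4)).sum(axis=0) % 2).astype(float)  # alternating 0/1
flat = np.full((4, 4), 0.5)

lr_checker = downsample2x(checker)
lr_flat = downsample2x(flat)
print(np.allclose(lr_checker, lr_flat))  # True: both collapse to the same LR patch
```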

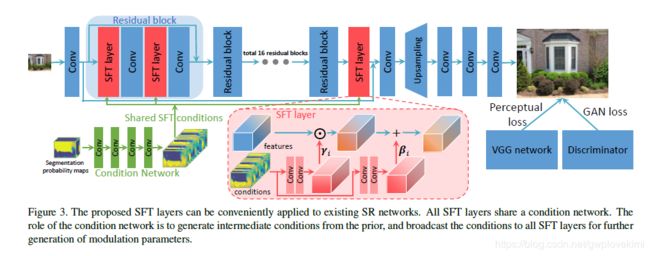

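The paper therefore investigates class-conditional image super-resolution with CNNs, i.e. SR conditioned on class priors. This has to work even when an image contains multiple semantic classes. SFT layers change the behavior of the SR network by transforming the features of some of its intermediate layers. An SFT layer is conditioned on semantic segmentation probability maps. From these maps it generates a pair of modulation parameters, which apply a spatial affine transformation to the network's feature maps. Because only the intermediate features of a single network are transformed, a single forward pass is enough to reconstruct an HR image with rich semantic regions. The paper also uses the local texture matching loss, together with categorical priors.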

assume multiple categorical classes to co-exist in an image, and propose an effective layer that enables an SR network to generate rich and realistic textures in a single forward pass conditioned on the prior provided up to the pixel level.

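The paper notes that "Conditional Normalization (CN) applies a learned function of some conditions to replace parameters for feature-wise affine transformation in BN." In other words, a CN layer replaces the fixed feature-wise affine parameters of a BN layer with the output of a learned function of some condition.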
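Below is a minimal NumPy sketch of conditional normalization as described above. The linear maps `w_gamma` and `w_beta` are hypothetical stand-ins for the learned functions of the condition, and the condition is assumed to be one vector per image. The resulting γ and β have one value per channel and are broadcast over all spatial positions, so the transformation is feature-wise only.

```python
import numpy as np

def conditional_norm(x, cond, w_gamma, w_beta, eps=1e-5):
    """Conditional normalization sketch.

    x:       features, shape (N, C, H, W)
    cond:    one condition vector per sample, shape (N, D)
    w_gamma, w_beta: hypothetical linear maps of shape (D, C) standing in for
             the learned functions that produce the affine parameters.
    """
    # BN-style normalization: statistics over (N, H, W) for each channel.
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    x_hat = (x - mean) / np.sqrt(var + eps)
    # Plain BN would use fixed learned vectors here. In CN they depend on the
    # condition. Shape (N, C, 1, 1): one value per channel, broadcast over H and W.
    gamma = (cond @ w_gamma)[:, :, None, None]
    beta = (cond @ w_beta)[:, :, None, None]
    return gamma * x_hat + beta

rng = np.random.default_rng(0)
x = rng.normal(size=(2, 3, 4, 4))
cond = np.array([[1.0, 0.0], [0.0, 1.0]])  # e.g. two different one-hot class labels
w_gamma = rng.normal(size=(2, 3))
w_beta = rng.normal(size=(2, 3))
y = conditional_norm(x, cond, w_gamma, w_beta)
print(y.shape)  # (2, 3, 4, 4)
```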

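The SFT layer goes a step further. It takes spatial conditions and uses them not only for feature-wise manipulation but also for spatial-wise transformation ("It is capable of converting spatial conditions for not only feature-wise manipulation but also spatial-wise transformation").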

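The paper uses semantic segmentation in two ways. First, it uses semantic maps to guide texture recovery for the different regions of the SR output. Second, it uses probability maps rather than simple image segments (hard segmentation masks) to capture fine texture distinctions.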
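A small sketch shows the difference between a probability map and a hard segmentation. The logits are made up for illustration, standing in for the output of a segmentation network. The per-pixel softmax keeps the uncertainty at an ambiguous boundary, while the argmax (one-hot) map throws it away.

```python
import numpy as np

def softmax(logits, axis=0):
    """Softmax over the class axis, shifted by the max for numerical stability."""
    z = logits - logits.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

# Hypothetical logits for 2 classes over a 1x4 strip of pixels (shape: classes, H, W);
# the two middle pixels lie on an ambiguous boundary between the classes.
logits = np.array([[[4.0, 0.5, -0.5, -4.0]],    # class 0
                   [[-4.0, -0.5, 0.5, 4.0]]])   # class 1

prob_map = softmax(logits, axis=0)  # soft map: sums to 1 at every pixel
hard_map = np.eye(2)[prob_map.argmax(axis=0)].transpose(2, 0, 1)  # one-hot, same shape

print(prob_map[0, 0].round(3))  # class-0 probability: close to 1, then fading to 0
print(hard_map[0, 0])           # class-0 mask: only 0s and 1s, boundary uncertainty lost
```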

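The SFT layer learns a mapping function $\mathcal{M}$ that outputs a modulation parameter pair $(\gamma, \beta)$ based on some prior condition $\Psi$, i.e. $(\gamma, \beta) = \mathcal{M}(\Psi)$. The learned parameter pair adaptively influences the output by spatially applying an affine transformation to each intermediate feature map $F$ of the SR network, $\mathrm{SFT}(F \mid \gamma, \beta) = \gamma \odot F + \beta$, where $\odot$ denotes element-wise multiplication. The prior is thus modeled by a pair of affine transformation parameters.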
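Below is a minimal NumPy sketch of $\mathrm{SFT}(F \mid \gamma, \beta) = \gamma \odot F + \beta$. The mapping $\mathcal{M}$ is replaced here by a single hypothetical 1x1 convolution (`w_gamma`, `w_beta`). The paper learns this mapping with its own small layers, so treat this as an assumption. The key point is that γ and β have the same spatial size as the features, so every pixel gets its own affine parameters, unlike the per-channel parameters of CN.

```python
import numpy as np

def sft_layer(feat, cond, w_gamma, w_beta):
    """Spatial Feature Transform sketch: SFT(F | gamma, beta) = gamma * F + beta.

    feat: SR-network features F, shape (C, H, W)
    cond: conditions derived from the prior Psi, shape (D, H, W)
    w_gamma, w_beta: hypothetical 1x1-conv weights of shape (C, D) standing in
          for the learned mapping M: Psi -> (gamma, beta).
    """
    # A 1x1 convolution is a matrix product over the channel axis at every pixel,
    # so gamma and beta come out with shape (C, H, W): spatial-wise modulation.
    gamma = np.einsum("cd,dhw->chw", w_gamma, cond)
    beta = np.einsum("cd,dhw->chw", w_beta, cond)
    return gamma * feat + beta

# Toy prior: left half of the image is class 0, right half is class 1 (one-hot maps).
cond = np.zeros((2, 4, 4))
cond[0, :, :2] = 1.0
cond[1, :, 2:] = 1.0
feat = np.ones((3, 4, 4))
w_gamma = np.array([[1.0, 2.0]] * 3)  # class 0 -> scale 1, class 1 -> scale 2
w_beta = np.zeros((3, 2))
out = sft_layer(feat, cond, w_gamma, w_beta)
print(out[0])  # columns 0-1 stay 1.0, columns 2-3 become 2.0
```

The same feature map is scaled differently in the two semantic regions, which is exactly what a per-channel (CN-style) transform cannot do.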

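SFTGAN consists of two parts, a condition network and an SR network (see the architecture figure in the paper). The condition network takes the segmentation probability maps as input and processes them with four convolution layers. This produces intermediate conditions that are shared by all SFT layers.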
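The data flow can be sketched as follows: four conv layers in the condition network, with conditions computed once and shared by every SFT layer. This is a simplified NumPy sketch, not the authors' implementation. The 1x1 kernels, leaky ReLU, channel sizes, random weights and the single-conv mapping inside each SFT layer are all assumptions made to keep it short. The residual blocks that sit between SFT layers in the real SR network are omitted.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv1x1(x, w, b):
    """1x1 convolution: x (Cin, H, W), w (Cout, Cin), b (Cout,)."""
    return np.einsum("oi,ihw->ohw", w, x) + b[:, None, None]

def leaky_relu(x, slope=0.1):
    return np.where(x > 0, x, slope * x)

def make_layer(cin, cout):
    """Random weights standing in for trained parameters."""
    return rng.normal(scale=0.1, size=(cout, cin)), np.zeros(cout)

N_CLASSES, COND_CH, FEAT_CH = 8, 32, 64  # illustrative sizes, not the paper's

# Condition network: four conv layers applied to the segmentation probability maps.
cond_net = [make_layer(N_CLASSES, COND_CH)] + [make_layer(COND_CH, COND_CH) for _ in range(3)]

def condition_network(prob_maps):
    x = prob_maps
    for i, (w, b) in enumerate(cond_net):
        x = conv1x1(x, w, b)
        if i < len(cond_net) - 1:  # no activation after the last layer
            x = leaky_relu(x)
    return x  # intermediate conditions shared by all SFT layers

class SFT:
    """One SFT layer with its own (hypothetical) mapping from shared conditions to (gamma, beta)."""
    def __init__(self):
        self.w_gamma, self.b_gamma = make_layer(COND_CH, FEAT_CH)
        self.w_beta, self.b_beta = make_layer(COND_CH, FEAT_CH)

    def __call__(self, feat, cond):
        gamma = conv1x1(cond, self.w_gamma, self.b_gamma)
        beta = conv1x1(cond, self.w_beta, self.b_beta)
        return gamma * feat + beta

H = W = 8
prob_maps = rng.dirichlet(np.ones(N_CLASSES), size=(H, W)).transpose(2, 0, 1)  # (classes, H, W)
shared = condition_network(prob_maps)  # computed once per image ...
feat = rng.normal(size=(FEAT_CH, H, W))
for sft in [SFT() for _ in range(3)]:  # ... and reused by every SFT layer
    feat = sft(feat, shared)
print(shared.shape, feat.shape)  # (32, 8, 8) (64, 8, 8)
```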

Supplement

Perceptual Loss

References

J. Johnson, A. Alahi, and L. Fei-Fei. Perceptual losses for real-time style transfer and super-resolution. In ECCV, 2016.

J. Bruna, P. Sprechmann, and Y. LeCun. Super-resolution with deep convolutional sufficient statistics. In ICLR, 2015.
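The idea of a perceptual loss is to compute the MSE on features $\phi(\cdot)$ of a fixed network instead of on pixels. Below is a toy sketch in which `phi` is a hypothetical feature extractor (absolute local gradients). Johnson et al. use activations of a pretrained VGG network instead. Two predictions with the same pixel MSE get very different perceptual losses. The over-smoothed one is penalized heavily, while the one that keeps the stripe texture is penalized much less.

```python
import numpy as np

def phi(x):
    """Hypothetical fixed feature extractor: absolute local gradients of a 1-D signal."""
    return np.abs(np.diff(x))

def pixel_mse(sr, hr):
    return float(np.mean((sr - hr) ** 2))

def perceptual_loss(sr, hr, features=phi):
    """MSE measured in feature space instead of pixel space."""
    return float(np.mean((features(sr) - features(hr)) ** 2))

hr = np.array([0, 1, 0, 1, 0, 1, 0, 1], dtype=float)        # fine stripe texture
blurry = np.full(8, 0.5)                                     # over-smoothed prediction
textured = np.array([1, 0, 0, 1, 0, 1, 0, 1], dtype=float)  # texture kept, 2 pixels wrong

print(pixel_mse(blurry, hr), pixel_mse(textured, hr))              # 0.25 0.25
print(perceptual_loss(blurry, hr), perceptual_loss(textured, hr))  # 1.0 and 1/7 (about 0.143)
```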

Adversarial Loss

C. Ledig, L. Theis, F. Huszár, J. Caballero, A. Cunningham, A. Acosta, A. Aitken, A. Tejani, J. Totz, Z. Wang, et al. Photo-realistic single image super-resolution using a generative adversarial network. In CVPR, 2017.

M. S. Sajjadi, B. Schölkopf, and M. Hirsch. EnhanceNet: Single image super-resolution through automated texture synthesis. In ICCV, 2017.
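The adversarial losses can be sketched from raw discriminator logits, with $D = \mathrm{sigmoid}(\text{logit})$. This is a generic GAN formulation written with a numerically stable softplus, not code from SRGAN or SFTGAN. The generator term uses the non-saturating form $-\log D(G(I^{LR}))$, which SRGAN uses instead of minimizing $\log(1 - D(G(I^{LR})))$.

```python
import numpy as np

def softplus(x):
    """log(1 + exp(x)), computed stably via logaddexp."""
    return np.logaddexp(0.0, x)

def discriminator_loss(real_logits, fake_logits):
    # -log D(x_real) - log(1 - D(x_fake)); note -log(sigmoid(z)) = softplus(-z)
    # and -log(1 - sigmoid(z)) = softplus(z).
    return float(np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits)))

def generator_adv_loss(fake_logits):
    # Non-saturating generator loss: -log D(G(x_lr)).
    return float(np.mean(softplus(-fake_logits)))

# The generator's loss drops as the discriminator rates its SR outputs as more "real".
print(generator_adv_loss(np.array([-3.0])), generator_adv_loss(np.array([3.0])))
```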

Local Texture Matching Loss

M. S. Sajjadi, B. Schölkopf, and M. Hirsch. EnhanceNet: Single image super-resolution through automated texture synthesis. In ICCV, 2017.
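A local texture matching loss compares texture statistics (Gram matrices of feature maps) patch by patch instead of pixel by pixel. Below is a simplified sketch in the spirit of EnhanceNet. The feature maps are random arrays standing in for the VGG features EnhanceNet uses, and the patch size and Gram normalization are illustrative choices. Rearranging pixels inside each patch leaves the texture loss at (numerically) zero even though the pixel error is nonzero.

```python
import numpy as np

def gram(feat):
    """Gram matrix of a feature patch: (C, h, w) -> (C, C), normalized by h*w."""
    c = feat.shape[0]
    f = feat.reshape(c, -1)
    return f @ f.T / f.shape[1]

def local_texture_loss(feat_sr, feat_hr, patch=4):
    """Mean squared Gram-matrix difference over non-overlapping patches."""
    _, h, w = feat_sr.shape
    losses = []
    for i in range(0, h - patch + 1, patch):
        for j in range(0, w - patch + 1, patch):
            g_sr = gram(feat_sr[:, i:i + patch, j:j + patch])
            g_hr = gram(feat_hr[:, i:i + patch, j:j + patch])
            losses.append(np.mean((g_sr - g_hr) ** 2))
    return float(np.mean(losses))

rng = np.random.default_rng(0)
feat_hr = rng.normal(size=(2, 4, 8))  # stand-in feature maps: 2 channels, two 4x4 patches
feat_sr = feat_hr[:, ::-1, :]         # rows flipped: same per-patch statistics, new layout

print(local_texture_loss(feat_sr, feat_hr))   # ~0, up to floating-point error
print(np.mean((feat_sr - feat_hr) ** 2) > 0)  # True: the pixel-wise error is not zero
```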