Comparing AlexNet's CPU and GPU Compute Performance with TensorFlow

Code and comments

from datetime import datetime

import math

import time

import tensorflow as tf

batch_size = 32

num_batches = 100

# Print each layer's op name and output tensor shape to show the network structure
def print_activations(t):
    print(t.op.name, ' ', t.get_shape().as_list())

# `with tf.name_scope('conv1') as scope` automatically names variables created
# inside the scope conv1/xxx, which makes the components easy to tell apart
def inference(images):
    parameters = []

    # First convolutional layer
    with tf.name_scope('conv1') as scope:
        # Convolution kernel, initialized from a truncated normal distribution
        kernel = tf.Variable(tf.truncated_normal([11, 11, 3, 64],
                                                 dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(images, kernel, [1, 4, 4, 1], padding='SAME')
        # Trainable biases
        biases = tf.Variable(tf.constant(0.0, shape=[64], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(bias, name=scope)
        print_activations(conv1)
        parameters += [kernel, biases]

        # Add LRN and a max-pooling layer. Apart from AlexNet, LRN has largely been
        # abandoned: reportedly its benefit is small and it slows things down.
        # These ops stay inside the scope, which is why the output shows conv1/pool1.
        lrn1 = tf.nn.lrn(conv1, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name='lrn1')
        pool1 = tf.nn.max_pool(lrn1, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID', name='pool1')
        print_activations(pool1)

    # Second convolutional layer; only some of the parameters differ
    with tf.name_scope('conv2') as scope:
        kernel = tf.Variable(tf.truncated_normal([5, 5, 64, 192], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(pool1, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[192], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv2)

        # LRN and max pooling again
        lrn2 = tf.nn.lrn(conv2, 4, bias=1.0, alpha=0.001 / 9, beta=0.75, name='lrn2')
        pool2 = tf.nn.max_pool(lrn2, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID', name='pool2')
        print_activations(pool2)

    # Third convolutional layer
    with tf.name_scope('conv3') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 192, 384], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(pool2, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[384], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv3 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv3)

    # Fourth convolutional layer
    with tf.name_scope('conv4') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 384, 256], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(conv3, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv4 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv4)

    # Fifth convolutional layer
    with tf.name_scope('conv5') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 256], dtype=tf.float32, stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(conv4, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32), trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv5 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv5)

        # A final max-pooling layer follows
        pool5 = tf.nn.max_pool(conv5, ksize=[1, 3, 3, 1], strides=[1, 2, 2, 1], padding='VALID', name='pool5')
        print_activations(pool5)

    return pool5, parameters

# The fully connected layers would come next; they are left out of this benchmark

# Measure the time taken by each iteration. Arguments: the TensorFlow Session,
# the op to evaluate, and a label for the test.
# The first few iterations are affected by GPU memory loading, cache misses and
# so on, so only the iterations after the first 10 are counted.
def time_tensorflow_run(session, target, info_string):
    num_steps_burn_in = 10
    total_duration = 0.0
    total_duration_squared = 0.0

    # Run num_batches + num_steps_burn_in iterations, timing each one with
    # time.time(); durations are reported only once the warm-up is over
    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s:step %d, duration = %.3f' % (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration

    # Mean and standard deviation of the per-iteration time, computed once after the loop
    mn = total_duration / num_batches
    vr = total_duration_squared / num_batches - mn * mn
    sd = math.sqrt(vr)
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' % (datetime.now(), info_string, num_batches, mn, sd))
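The timing logic above does not depend on TensorFlow, so it can be tried in isolation. The following is a minimal sketch under that assumption: `mean_and_sd` and `time_callable` are hypothetical helper names, not part of the original script, and `time.perf_counter()` is swapped in for `time.time()` because it is better suited to measuring short intervals. The `max(..., 0.0)` guard is also an addition, protecting `math.sqrt` from a slightly negative variance caused by rounding.

```python
import math
import time


def mean_and_sd(durations):
    """Mean and standard deviation from a sum and a sum of squares,
    the same formula time_tensorflow_run uses."""
    n = len(durations)
    total = sum(durations)
    total_squared = sum(d * d for d in durations)
    mean = total / n
    variance = total_squared / n - mean * mean
    # Guard against a tiny negative value caused by floating-point rounding
    return mean, math.sqrt(max(variance, 0.0))


def time_callable(fn, num_batches=100, burn_in=10):
    """Hypothetical helper: call fn() burn_in + num_batches times and
    return (mean, sd) over the calls made after the warm-up."""
    durations = []
    for i in range(num_batches + burn_in):
        start = time.perf_counter()
        fn()
        elapsed = time.perf_counter() - start
        if i >= burn_in:
            durations.append(elapsed)
    return mean_and_sd(durations)


# Example: time a small pure-Python workload instead of session.run(target)
mean, sd = time_callable(lambda: sum(range(10000)), num_batches=20, burn_in=5)
print('%.6f +/- %.6f sec / call' % (mean, sd))
```

Collecting the durations in a list keeps the statistics separate from the measurement loop, so the summary can only be computed once all measured iterations are in.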

def run_benchmark():
    # Define the default Graph first
    with tf.Graph().as_default():
        # ImageNet is not used for training here; random images are used only to measure compute time
        image_size = 224
        images = tf.Variable(tf.random_normal([batch_size, image_size, image_size, 3], dtype=tf.float32, stddev=1e-1))
        pool5, parameters = inference(images)
        init = tf.global_variables_initializer()
        sess = tf.Session()
        sess.run(init)

        # Time the forward pass by evaluating pool5 directly (there are no fully connected layers).
        # This only goes through the motions; it is not real training.
        time_tensorflow_run(sess, pool5, "Forward")

        # A made-up stand-in objective, so that gradients can be computed
        objective = tf.nn.l2_loss(pool5)
        grad = tf.gradients(objective, parameters)
        time_tensorflow_run(sess, grad, "Forward-backward")

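run_benchmark()

CPU results (the summary line was printed on every iteration in this run, including the 10 warm-up steps, so the intermediate values are running totals divided by 100; only the last summary line of each phase is the final figure):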

conv1 [32, 56, 56, 64]

conv1/pool1 [32, 27, 27, 64]

conv2 [32, 27, 27, 192]

conv2/pool2 [32, 13, 13, 192]

conv3 [32, 13, 13, 384]

conv4 [32, 13, 13, 256]

conv5 [32, 13, 13, 256]

conv5/pool5 [32, 6, 6, 256]
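The shapes printed above can be checked by hand. The sketch below assumes TensorFlow's usual padding rules, where `SAME` gives `ceil(in / stride)` and `VALID` gives `floor((in - kernel) / stride) + 1`. `out_size`, `LAYERS` and `trace_shapes` are hypothetical names introduced for illustration; the kernel sizes, strides and channel counts are copied from `inference()`.

```python
import math


def out_size(size, kernel, stride, padding):
    """Spatial output size of a conv or pooling layer for a given padding mode."""
    if padding == 'SAME':
        return math.ceil(size / stride)
    if padding == 'VALID':
        return (size - kernel) // stride + 1
    raise ValueError('unknown padding: %r' % padding)


# (name, kernel, stride, padding, output channels), copied from inference()
LAYERS = [
    ('conv1', 11, 4, 'SAME', 64),
    ('pool1', 3, 2, 'VALID', 64),
    ('conv2', 5, 1, 'SAME', 192),
    ('pool2', 3, 2, 'VALID', 192),
    ('conv3', 3, 1, 'SAME', 384),
    ('conv4', 3, 1, 'SAME', 256),
    ('conv5', 3, 1, 'SAME', 256),
    ('pool5', 3, 2, 'VALID', 256),
]


def trace_shapes(batch=32, size=224):
    """Return [(layer name, [batch, height, width, channels]), ...]."""
    shapes = []
    for name, kernel, stride, padding, channels in LAYERS:
        size = out_size(size, kernel, stride, padding)
        shapes.append((name, [batch, size, size, channels]))
    return shapes


for name, shape in trace_shapes():
    print(name, shape)
```

The spatial size goes 224 → 56 → 27 → 27 → 13 → 13 → 13 → 13 → 6, which agrees with the printed tensor shapes.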

2018-04-07 22:04:02.078231: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:03.381361: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:04.645201: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:05.934559: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:07.261441: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:08.600831: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:09.864171: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:11.132014: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:12.440383: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:13.715730: Forward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:04:15.047116:step 0, duration = 1.330

2018-04-07 22:04:15.047616: Forward across 100 steps, 0.013 +/- 0.132 sec / batch

2018-04-07 22:04:16.427534: Forward across 100 steps, 0.027 +/- 0.190 sec / batch

2018-04-07 22:04:17.825463: Forward across 100 steps, 0.041 +/- 0.234 sec / batch

2018-04-07 22:04:19.149843: Forward across 100 steps, 0.054 +/- 0.266 sec / batch

2018-04-07 22:04:20.465219: Forward across 100 steps, 0.067 +/- 0.294 sec / batch

2018-04-07 22:04:21.931704: Forward across 100 steps, 0.082 +/- 0.325 sec / batch

2018-04-07 22:04:23.300113: Forward across 100 steps, 0.096 +/- 0.349 sec / batch

2018-04-07 22:04:24.705568: Forward across 100 steps, 0.110 +/- 0.373 sec / batch

2018-04-07 22:04:26.021455: Forward across 100 steps, 0.123 +/- 0.391 sec / batch

2018-04-07 22:04:27.387037: Forward across 100 steps, 0.137 +/- 0.410 sec / batch

2018-04-07 22:04:28.670890:step 10, duration = 1.283

2018-04-07 22:04:28.671891: Forward across 100 steps, 0.149 +/- 0.425 sec / batch

2018-04-07 22:04:29.951741: Forward across 100 steps, 0.162 +/- 0.440 sec / batch

2018-04-07 22:04:31.253607: Forward across 100 steps, 0.175 +/- 0.454 sec / batch

2018-04-07 22:04:32.656039: Forward across 100 steps, 0.189 +/- 0.470 sec / batch

2018-04-07 22:04:33.946397: Forward across 100 steps, 0.202 +/- 0.482 sec / batch

2018-04-07 22:04:35.237774: Forward across 100 steps, 0.215 +/- 0.493 sec / batch

2018-04-07 22:04:36.508665: Forward across 100 steps, 0.228 +/- 0.504 sec / batch

2018-04-07 22:04:37.887082: Forward across 100 steps, 0.242 +/- 0.516 sec / batch

2018-04-07 22:04:39.174097: Forward across 100 steps, 0.254 +/- 0.526 sec / batch

2018-04-07 22:04:40.512485: Forward across 100 steps, 0.268 +/- 0.536 sec / batch

2018-04-07 22:04:41.811973:step 20, duration = 1.299

2018-04-07 22:04:41.812475: Forward across 100 steps, 0.281 +/- 0.545 sec / batch

2018-04-07 22:04:43.105834: Forward across 100 steps, 0.294 +/- 0.554 sec / batch

2018-04-07 22:04:44.386699: Forward across 100 steps, 0.307 +/- 0.561 sec / batch

2018-04-07 22:04:45.649539: Forward across 100 steps, 0.319 +/- 0.569 sec / batch

2018-04-07 22:04:46.935394: Forward across 100 steps, 0.332 +/- 0.576 sec / batch

2018-04-07 22:04:48.270282: Forward across 100 steps, 0.345 +/- 0.583 sec / batch

2018-04-07 22:04:49.543627: Forward across 100 steps, 0.358 +/- 0.589 sec / batch

2018-04-07 22:04:50.811971: Forward across 100 steps, 0.371 +/- 0.595 sec / batch

2018-04-07 22:04:52.119853: Forward across 100 steps, 0.384 +/- 0.601 sec / batch

2018-04-07 22:04:53.490264: Forward across 100 steps, 0.398 +/- 0.608 sec / batch

2018-04-07 22:04:54.818654:step 30, duration = 1.328

2018-04-07 22:04:54.819154: Forward across 100 steps, 0.411 +/- 0.613 sec / batch

2018-04-07 22:04:56.146036: Forward across 100 steps, 0.424 +/- 0.619 sec / batch

2018-04-07 22:04:57.543481: Forward across 100 steps, 0.438 +/- 0.625 sec / batch

2018-04-07 22:04:58.914893: Forward across 100 steps, 0.452 +/- 0.630 sec / batch

2018-04-07 22:05:00.318326: Forward across 100 steps, 0.466 +/- 0.635 sec / batch

2018-04-07 22:05:01.642206: Forward across 100 steps, 0.479 +/- 0.639 sec / batch

2018-04-07 22:05:02.951076: Forward across 100 steps, 0.492 +/- 0.643 sec / batch

2018-04-07 22:05:04.296471: Forward across 100 steps, 0.506 +/- 0.646 sec / batch

2018-04-07 22:05:05.792464: Forward across 100 steps, 0.520 +/- 0.652 sec / batch

2018-04-07 22:05:07.362509: Forward across 100 steps, 0.536 +/- 0.658 sec / batch

2018-04-07 22:05:08.714419:step 40, duration = 1.351

2018-04-07 22:05:08.714920: Forward across 100 steps, 0.550 +/- 0.661 sec / batch

2018-04-07 22:05:10.368039: Forward across 100 steps, 0.566 +/- 0.667 sec / batch

2018-04-07 22:05:11.925074: Forward across 100 steps, 0.582 +/- 0.672 sec / batch

2018-04-07 22:05:13.389365: Forward across 100 steps, 0.596 +/- 0.675 sec / batch

2018-04-07 22:05:14.829647: Forward across 100 steps, 0.611 +/- 0.678 sec / batch

2018-04-07 22:05:16.287116: Forward across 100 steps, 0.625 +/- 0.680 sec / batch

2018-04-07 22:05:17.707072: Forward across 100 steps, 0.640 +/- 0.682 sec / batch

2018-04-07 22:05:19.012940: Forward across 100 steps, 0.653 +/- 0.682 sec / batch

2018-04-07 22:05:20.430382: Forward across 100 steps, 0.667 +/- 0.683 sec / batch

2018-04-07 22:05:21.800792: Forward across 100 steps, 0.680 +/- 0.683 sec / batch

2018-04-07 22:05:23.106659:step 50, duration = 1.305

2018-04-07 22:05:23.107161: Forward across 100 steps, 0.694 +/- 0.682 sec / batch

2018-04-07 22:05:24.418033: Forward across 100 steps, 0.707 +/- 0.681 sec / batch

2018-04-07 22:05:25.709418: Forward across 100 steps, 0.720 +/- 0.680 sec / batch

2018-04-07 22:05:27.040803: Forward across 100 steps, 0.733 +/- 0.679 sec / batch

2018-04-07 22:05:28.389215: Forward across 100 steps, 0.746 +/- 0.678 sec / batch

2018-04-07 22:05:29.674569: Forward across 100 steps, 0.759 +/- 0.676 sec / batch

2018-04-07 22:05:30.958422: Forward across 100 steps, 0.772 +/- 0.673 sec / batch

2018-04-07 22:05:32.305644: Forward across 100 steps, 0.785 +/- 0.671 sec / batch

2018-04-07 22:05:33.569985: Forward across 100 steps, 0.798 +/- 0.668 sec / batch

2018-04-07 22:05:34.848835: Forward across 100 steps, 0.811 +/- 0.665 sec / batch

2018-04-07 22:05:36.199232:step 60, duration = 1.350

2018-04-07 22:05:36.199733: Forward across 100 steps, 0.824 +/- 0.662 sec / batch

2018-04-07 22:05:37.558151: Forward across 100 steps, 0.838 +/- 0.659 sec / batch

2018-04-07 22:05:38.840033: Forward across 100 steps, 0.851 +/- 0.655 sec / batch

2018-04-07 22:05:40.112378: Forward across 100 steps, 0.863 +/- 0.651 sec / batch

2018-04-07 22:05:41.405738: Forward across 100 steps, 0.876 +/- 0.646 sec / batch

2018-04-07 22:05:42.745129: Forward across 100 steps, 0.890 +/- 0.642 sec / batch

2018-04-07 22:05:44.070010: Forward across 100 steps, 0.903 +/- 0.637 sec / batch

2018-04-07 22:05:45.362869: Forward across 100 steps, 0.916 +/- 0.632 sec / batch

2018-04-07 22:05:46.695755: Forward across 100 steps, 0.929 +/- 0.626 sec / batch

2018-04-07 22:05:48.006666: Forward across 100 steps, 0.942 +/- 0.620 sec / batch

2018-04-07 22:05:49.291521:step 70, duration = 1.284

2018-04-07 22:05:49.292021: Forward across 100 steps, 0.955 +/- 0.614 sec / batch

2018-04-07 22:05:50.568369: Forward across 100 steps, 0.968 +/- 0.607 sec / batch

2018-04-07 22:05:51.916775: Forward across 100 steps, 0.981 +/- 0.600 sec / batch

2018-04-07 22:05:53.247160: Forward across 100 steps, 0.995 +/- 0.593 sec / batch

2018-04-07 22:05:54.513500: Forward across 100 steps, 1.007 +/- 0.585 sec / batch

2018-04-07 22:05:55.794351: Forward across 100 steps, 1.020 +/- 0.577 sec / batch

2018-04-07 22:05:57.115230: Forward across 100 steps, 1.033 +/- 0.568 sec / batch

2018-04-07 22:05:58.434607: Forward across 100 steps, 1.047 +/- 0.560 sec / batch

2018-04-07 22:05:59.707954: Forward across 100 steps, 1.059 +/- 0.550 sec / batch

2018-04-07 22:06:01.056851: Forward across 100 steps, 1.073 +/- 0.540 sec / batch

2018-04-07 22:06:02.388235:step 80, duration = 1.331

2018-04-07 22:06:02.389236: Forward across 100 steps, 1.086 +/- 0.530 sec / batch

2018-04-07 22:06:03.818185: Forward across 100 steps, 1.100 +/- 0.520 sec / batch

2018-04-07 22:06:05.277789: Forward across 100 steps, 1.115 +/- 0.509 sec / batch

2018-04-07 22:06:06.727272: Forward across 100 steps, 1.129 +/- 0.498 sec / batch

2018-04-07 22:06:08.093180: Forward across 100 steps, 1.143 +/- 0.485 sec / batch

2018-04-07 22:06:09.585633: Forward across 100 steps, 1.158 +/- 0.472 sec / batch

2018-04-07 22:06:11.111158: Forward across 100 steps, 1.173 +/- 0.459 sec / batch

2018-04-07 22:06:12.512989: Forward across 100 steps, 1.187 +/- 0.444 sec / batch

2018-04-07 22:06:13.805348: Forward across 100 steps, 1.200 +/- 0.428 sec / batch

2018-04-07 22:06:15.113332: Forward across 100 steps, 1.213 +/- 0.411 sec / batch

2018-04-07 22:06:16.451307:step 90, duration = 1.337

2018-04-07 22:06:16.452307: Forward across 100 steps, 1.227 +/- 0.392 sec / batch

2018-04-07 22:06:17.779690: Forward across 100 steps, 1.240 +/- 0.373 sec / batch

2018-04-07 22:06:19.064052: Forward across 100 steps, 1.253 +/- 0.351 sec / batch

2018-04-07 22:06:20.342401: Forward across 100 steps, 1.266 +/- 0.328 sec / batch

2018-04-07 22:06:21.650772: Forward across 100 steps, 1.279 +/- 0.302 sec / batch

2018-04-07 22:06:22.920115: Forward across 100 steps, 1.291 +/- 0.274 sec / batch

2018-04-07 22:06:24.254011: Forward across 100 steps, 1.305 +/- 0.241 sec / batch

2018-04-07 22:06:25.709478: Forward across 100 steps, 1.319 +/- 0.202 sec / batch

2018-04-07 22:06:27.372083: Forward across 100 steps, 1.336 +/- 0.156 sec / batch

2018-04-07 22:06:28.808038: Forward across 100 steps, 1.350 +/- 0.081 sec / batch

2018-04-07 22:06:35.461935: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:06:41.997287: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:06:48.182993: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:06:54.358695: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:07:00.541870: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:07:06.823545: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:07:12.998150: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:07:19.139292: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:07:25.350639: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:07:31.543266: Forward-backward across 100 steps, 0.000 +/- 0.000 sec / batch

2018-04-07 22:07:37.788917:step 0, duration = 6.245

2018-04-07 22:07:37.789417: Forward-backward across 100 steps, 0.062 +/- 0.621 sec / batch

2018-04-07 22:07:43.974582: Forward-backward across 100 steps, 0.124 +/- 0.870 sec / batch

2018-04-07 22:07:50.103804: Forward-backward across 100 steps, 0.186 +/- 1.055 sec / batch

2018-04-07 22:07:56.308428: Forward-backward across 100 steps, 0.248 +/- 1.213 sec / batch

2018-04-07 22:08:02.566087: Forward-backward across 100 steps, 0.310 +/- 1.352 sec / batch

2018-04-07 22:08:08.795247: Forward-backward across 100 steps, 0.372 +/- 1.474 sec / batch

2018-04-07 22:08:14.924900: Forward-backward across 100 steps, 0.434 +/- 1.581 sec / batch

2018-04-07 22:08:21.144569: Forward-backward across 100 steps, 0.496 +/- 1.682 sec / batch

2018-04-07 22:08:27.448335: Forward-backward across 100 steps, 0.559 +/- 1.778 sec / batch

2018-04-07 22:08:33.655970: Forward-backward across 100 steps, 0.621 +/- 1.863 sec / batch

2018-04-07 22:08:40.002033:step 10, duration = 6.346

2018-04-07 22:08:40.003033: Forward-backward across 100 steps, 0.684 +/- 1.947 sec / batch

2018-04-07 22:08:46.189682: Forward-backward across 100 steps, 0.746 +/- 2.021 sec / batch

2018-04-07 22:08:52.435864: Forward-backward across 100 steps, 0.809 +/- 2.092 sec / batch

2018-04-07 22:08:58.665004: Forward-backward across 100 steps, 0.871 +/- 2.159 sec / batch

2018-04-07 22:09:04.900665: Forward-backward across 100 steps, 0.933 +/- 2.222 sec / batch

2018-04-07 22:09:11.080890: Forward-backward across 100 steps, 0.995 +/- 2.280 sec / batch

2018-04-07 22:09:17.550010: Forward-backward across 100 steps, 1.060 +/- 2.342 sec / batch

2018-04-07 22:09:23.804166: Forward-backward across 100 steps, 1.122 +/- 2.396 sec / batch

2018-04-07 22:09:30.818193: Forward-backward across 100 steps, 1.193 +/- 2.464 sec / batch

2018-04-07 22:09:37.286491: Forward-backward across 100 steps, 1.257 +/- 2.516 sec / batch

2018-04-07 22:09:44.119077:step 20, duration = 6.832

2018-04-07 22:09:44.120579: Forward-backward across 100 steps, 1.326 +/- 2.573 sec / batch

2018-04-07 22:09:51.168940: Forward-backward across 100 steps, 1.396 +/- 2.632 sec / batch

2018-04-07 22:09:58.243589: Forward-backward across 100 steps, 1.467 +/- 2.688 sec / batch

2018-04-07 22:10:04.727410: Forward-backward across 100 steps, 1.532 +/- 2.729 sec / batch

2018-04-07 22:10:11.002081: Forward-backward across 100 steps, 1.594 +/- 2.765 sec / batch

2018-04-07 22:10:17.775683: Forward-backward across 100 steps, 1.662 +/- 2.808 sec / batch

2018-04-07 22:10:24.408629: Forward-backward across 100 steps, 1.728 +/- 2.846 sec / batch

2018-04-07 22:10:30.710613: Forward-backward across 100 steps, 1.791 +/- 2.877 sec / batch

2018-04-07 22:10:37.306567: Forward-backward across 100 steps, 1.857 +/- 2.910 sec / batch

2018-04-07 22:10:43.785874: Forward-backward across 100 steps, 1.922 +/- 2.940 sec / batch

2018-04-07 22:10:50.352258:step 30, duration = 6.566

2018-04-07 22:10:50.352759: Forward-backward across 100 steps, 1.988 +/- 2.970 sec / batch

2018-04-07 22:10:56.846428: Forward-backward across 100 steps, 2.053 +/- 2.996 sec / batch

2018-04-07 22:11:03.307729: Forward-backward across 100 steps, 2.117 +/- 3.021 sec / batch

2018-04-07 22:11:09.610920: Forward-backward across 100 steps, 2.180 +/- 3.042 sec / batch

2018-04-07 22:11:15.950465: Forward-backward across 100 steps, 2.244 +/- 3.062 sec / batch

2018-04-07 22:11:22.532876: Forward-backward across 100 steps, 2.310 +/- 3.083 sec / batch

2018-04-07 22:11:28.878169: Forward-backward across 100 steps, 2.373 +/- 3.100 sec / batch

2018-04-07 22:11:35.081804: Forward-backward across 100 steps, 2.435 +/- 3.114 sec / batch

2018-04-07 22:11:42.013672: Forward-backward across 100 steps, 2.504 +/- 3.136 sec / batch

2018-04-07 22:11:48.819330: Forward-backward across 100 steps, 2.572 +/- 3.155 sec / batch

2018-04-07 22:11:55.479284:step 40, duration = 6.659

2018-04-07 22:11:55.480285: Forward-backward across 100 steps, 2.639 +/- 3.170 sec / batch

2018-04-07 22:12:01.803794: Forward-backward across 100 steps, 2.702 +/- 3.180 sec / batch

2018-04-07 22:12:08.027946: Forward-backward across 100 steps, 2.764 +/- 3.187 sec / batch

2018-04-07 22:12:14.246596: Forward-backward across 100 steps, 2.827 +/- 3.194 sec / batch

2018-04-07 22:12:20.853037: Forward-backward across 100 steps, 2.893 +/- 3.203 sec / batch

2018-04-07 22:12:27.755235: Forward-backward across 100 steps, 2.962 +/- 3.214 sec / batch

2018-04-07 22:12:34.547499: Forward-backward across 100 steps, 3.030 +/- 3.222 sec / batch

2018-04-07 22:12:41.893929: Forward-backward across 100 steps, 3.103 +/- 3.236 sec / batch

2018-04-07 22:12:48.495318: Forward-backward across 100 steps, 3.169 +/- 3.240 sec / batch

2018-04-07 22:12:54.999182: Forward-backward across 100 steps, 3.234 +/- 3.241 sec / batch

2018-04-07 22:13:01.428955:step 50, duration = 6.429

2018-04-07 22:13:01.429456: Forward-backward across 100 steps, 3.298 +/- 3.240 sec / batch

2018-04-07 22:13:07.911265: Forward-backward across 100 steps, 3.363 +/- 3.238 sec / batch

2018-04-07 22:13:15.010983: Forward-backward across 100 steps, 3.434 +/- 3.241 sec / batch

2018-04-07 22:13:21.574445: Forward-backward across 100 steps, 3.500 +/- 3.237 sec / batch

2018-04-07 22:13:28.327446: Forward-backward across 100 steps, 3.567 +/- 3.234 sec / batch

2018-04-07 22:13:35.170995: Forward-backward across 100 steps, 3.636 +/- 3.230 sec / batch

2018-04-07 22:13:43.325071: Forward-backward across 100 steps, 3.717 +/- 3.240 sec / batch

2018-04-07 22:13:50.248083: Forward-backward across 100 steps, 3.787 +/- 3.234 sec / batch

2018-04-07 22:13:57.226529: Forward-backward across 100 steps, 3.856 +/- 3.227 sec / batch

2018-04-07 22:14:05.166786: Forward-backward across 100 steps, 3.936 +/- 3.229 sec / batch

2018-04-07 22:14:13.147299:step 60, duration = 7.980

2018-04-07 22:14:13.148299: Forward-backward across 100 steps, 4.016 +/- 3.229 sec / batch

2018-04-07 22:14:19.532873: Forward-backward across 100 steps, 4.079 +/- 3.212 sec / batch

2018-04-07 22:14:25.992300: Forward-backward across 100 steps, 4.144 +/- 3.194 sec / batch

2018-04-07 22:14:32.404073: Forward-backward across 100 steps, 4.208 +/- 3.175 sec / batch

2018-04-07 22:14:38.651726: Forward-backward across 100 steps, 4.271 +/- 3.153 sec / batch

2018-04-07 22:14:45.506017: Forward-backward across 100 steps, 4.339 +/- 3.134 sec / batch

2018-04-07 22:14:52.723815: Forward-backward across 100 steps, 4.411 +/- 3.116 sec / batch

2018-04-07 22:14:59.618563: Forward-backward across 100 steps, 4.480 +/- 3.094 sec / batch

2018-04-07 22:15:06.859808: Forward-backward across 100 steps, 4.553 +/- 3.073 sec / batch

2018-04-07 22:15:13.477717: Forward-backward across 100 steps, 4.619 +/- 3.045 sec / batch

2018-04-07 22:15:20.112636:step 70, duration = 6.634

2018-04-07 22:15:20.113135: Forward-backward across 100 steps, 4.685 +/- 3.016 sec / batch

2018-04-07 22:15:27.965407: Forward-backward across 100 steps, 4.764 +/- 2.995 sec / batch

2018-04-07 22:15:34.965559: Forward-backward across 100 steps, 4.834 +/- 2.964 sec / batch

2018-04-07 22:15:42.242897: Forward-backward across 100 steps, 4.906 +/- 2.934 sec / batch

2018-04-07 22:15:49.095452: Forward-backward across 100 steps, 4.975 +/- 2.898 sec / batch

2018-04-07 22:15:55.880514: Forward-backward across 100 steps, 5.043 +/- 2.860 sec / batch

2018-04-07 22:16:03.602515: Forward-backward across 100 steps, 5.120 +/- 2.827 sec / batch

2018-04-07 22:16:11.750931: Forward-backward across 100 steps, 5.201 +/- 2.796 sec / batch

2018-04-07 22:16:18.997248: Forward-backward across 100 steps, 5.274 +/- 2.754 sec / batch

2018-04-07 22:16:25.345089: Forward-backward across 100 steps, 5.337 +/- 2.704 sec / batch

2018-04-07 22:16:31.857926:step 80, duration = 6.512

2018-04-07 22:16:31.858927: Forward-backward across 100 steps, 5.402 +/- 2.653 sec / batch

2018-04-07 22:16:38.345797: Forward-backward across 100 steps, 5.467 +/- 2.598 sec / batch

2018-04-07 22:16:44.743050: Forward-backward across 100 steps, 5.531 +/- 2.541 sec / batch

2018-04-07 22:16:51.504735: Forward-backward across 100 steps, 5.599 +/- 2.482 sec / batch

2018-04-07 22:16:58.363358: Forward-backward across 100 steps, 5.668 +/- 2.421 sec / batch

2018-04-07 22:17:04.801136: Forward-backward across 100 steps, 5.732 +/- 2.354 sec / batch

2018-04-07 22:17:11.422538: Forward-backward across 100 steps, 5.798 +/- 2.284 sec / batch

2018-04-07 22:17:18.029753: Forward-backward across 100 steps, 5.864 +/- 2.209 sec / batch

2018-04-07 22:17:24.838779: Forward-backward across 100 steps, 5.932 +/- 2.131 sec / batch

2018-04-07 22:17:31.531255: Forward-backward across 100 steps, 5.999 +/- 2.047 sec / batch

2018-04-07 22:17:38.791928:step 90, duration = 7.260

2018-04-07 22:17:38.792429: Forward-backward across 100 steps, 6.072 +/- 1.960 sec / batch

2018-04-07 22:17:47.367134: Forward-backward across 100 steps, 6.157 +/- 1.878 sec / batch

2018-04-07 22:17:55.087142: Forward-backward across 100 steps, 6.235 +/- 1.780 sec / batch

2018-04-07 22:18:02.064306: Forward-backward across 100 steps, 6.304 +/- 1.667 sec / batch

2018-04-07 22:18:08.902851: Forward-backward across 100 steps, 6.373 +/- 1.543 sec / batch

2018-04-07 22:18:15.971768: Forward-backward across 100 steps, 6.443 +/- 1.405 sec / batch

2018-04-07 22:18:23.112095: Forward-backward across 100 steps, 6.515 +/- 1.248 sec / batch

2018-04-07 22:18:30.340400: Forward-backward across 100 steps, 6.587 +/- 1.065 sec / batch

2018-04-07 22:18:36.829714: Forward-backward across 100 steps, 6.652 +/- 0.834 sec / batch

2018-04-07 22:18:43.282010: Forward-backward across 100 steps, 6.717 +/- 0.500 sec / batch

Afterword

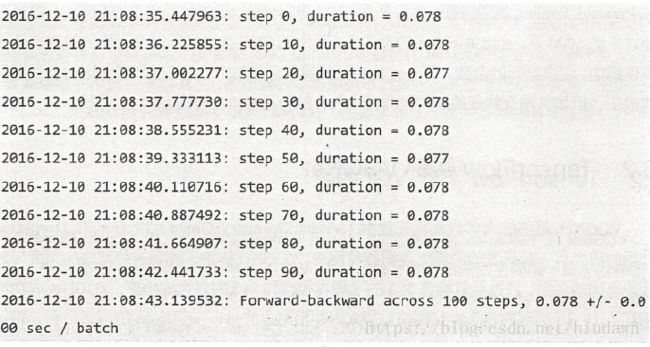

A 50x gap between CPU and GPU, so I might as well call it a night. (2018-04-07: I still don't have a GPU.) (2018-05-06: I still don't.)

AlexNet used GPUs, ReLU, Dropout, LRN, and other tricks. It was a turning point.