sparkR调用R的执行分布式计算

-

环境spark2.4.5,R3.6, install.package("SparkR"),默认sparkR提供的函数支持对应的版本为spark2.4.5不支持2.4.0

-

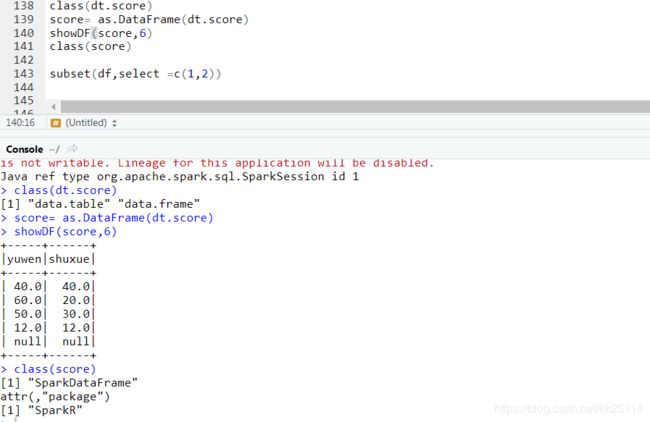

如:将data.table,data.frame dt.score数据集转化成sparkR中的dataframe时可以执行sparkR中提供的方法

-

sparkR默认会覆盖掉R中的方法

-

如需要调用R中的方法需要指定调用

-

dataframe作为R和sparkR中的桥梁,不同的是sparkR可以进行分布式计算

-

使用R中色方法直接调用spark中的方法则会s4接口异常

The following objects are masked from ‘package:jsonlite’:

flatten, toJSON

The following objects are masked from ‘package:plyr’:

arrange, count, desc, join, mutate, rename, summarize, take

The following object is masked from ‘package:MASS’:

select

The following object is masked from ‘package:nlme’:

gapply

The following objects are masked from ‘package:data.table’:

between, cube, first, hour, last, like, minute, month, quarter, rollup, second, tables, year

The following objects are masked from ‘package:dplyr’:

arrange, between, coalesce, collect, contains, count, cume_dist, dense_rank, desc, distinct, explain, expr, filter, first, group_by,

intersect, lag, last, lead, mutate, n, n_distinct, ntile, percent_rank, rename, row_number, sample_frac, select, slice, sql,

summarize, union

The following object is masked from ‘package:RMySQL’:

summary

The following objects are masked from ‘package:stats’:

cov, filter, lag, na.omit, predict, sd, var, window

The following objects are masked from ‘package:base’:

as.data.frame, colnames, colnames<-, drop, endsWith, intersect, rank, rbind, sample, startsWith, subset, summary, transform, union