This post covers a regularization technique for LSTMs: dropout.

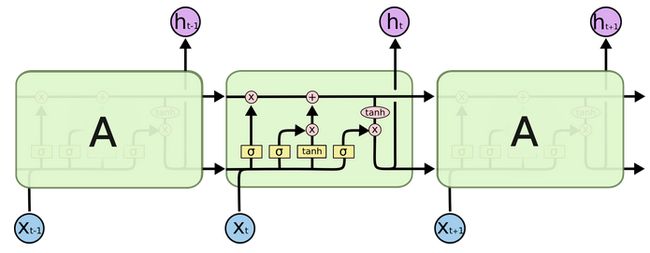

LSTM:

Notation:
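The equations and notation did not survive in this copy; they were probably in an image. As a hedged stand-in, one common way to write layer $l$ of a stacked LSTM at time step $t$ is shown below. The notation is ours and may differ from the original figure. The dropout operator $\mathbf{D}$ is included where the scheme described in the next paragraph places it.

$$
\begin{aligned}
\begin{pmatrix} i \\ f \\ o \\ g \end{pmatrix}
&= \begin{pmatrix} \sigma \\ \sigma \\ \sigma \\ \tanh \end{pmatrix}
   T_{2n,4n} \begin{pmatrix} \mathbf{D}\left(h_t^{l-1}\right) \\ h_{t-1}^{l} \end{pmatrix} \\
c_t^{l} &= f \odot c_{t-1}^{l} + i \odot g \\
h_t^{l} &= o \odot \tanh\left(c_t^{l}\right)
\end{aligned}
$$

Here $h_t^l \in \mathbb{R}^n$ is the hidden state of layer $l$ at time step $t$ (with $h_t^0 = x_t$, the input), and $c_t^l \in \mathbb{R}^n$ is the memory cell. $i$, $f$, $o$ are the input, forget, and output gates and $g$ is the candidate cell update. $T_{2n,4n}$ is an affine map (weights plus bias) from $\mathbb{R}^{2n}$ to $\mathbb{R}^{4n}$, $\sigma$ is the logistic sigmoid, and $\odot$ is the element-wise product. $\mathbf{D}$ zeros a random subset of its argument's entries. It is applied only to $h_t^{l-1}$, which comes from the layer below at the same time step. It is never applied to $h_{t-1}^l$ or $c_{t-1}^l$, which come from the previous time step.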

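Regularizing an LSTM has two requirements. First, it must actually regularize the model. Second, it must not destroy the LSTM's ability to remember information across time steps. Masking the recurrent state at every step would repeatedly corrupt the information the LSTM carries forward. For this reason, dropout is applied only between layers at the same time step (the non-recurrent connections). No dropout is applied on the connection from time step t-1 to t (the recurrent connections).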

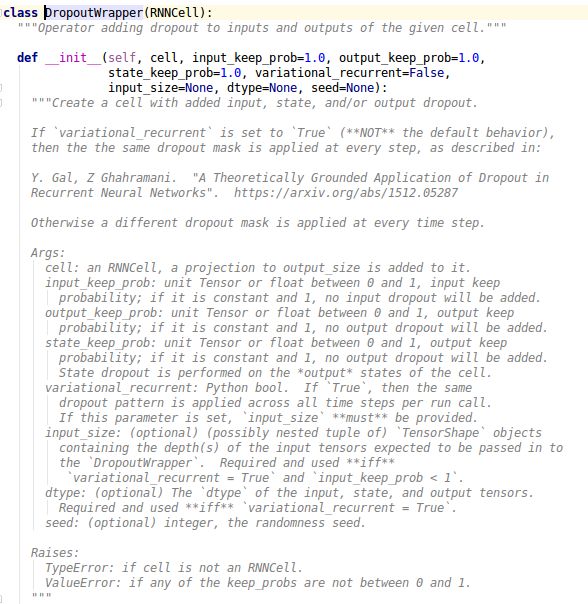
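To make this placement concrete, here is a minimal pure-Python sketch of a two-layer LSTM unrolled over a short sequence. Everything in it is a hypothetical illustration, not TensorFlow code: `LSTMLayer`, `run_stacked`, the tiny random weights, and the inverted-dropout helper. The sketch shows only where the masks go. Dropout is applied to the vector coming up from the layer below, and the recurrent `h` and `c` carried from t-1 to t are never masked. As an assumption, it also masks the top layer's output, since that is another non-recurrent connection.

```python
import math
import random


def dropout(xs, keep_prob, rng):
    """Inverted dropout: zero each element with probability 1 - keep_prob
    and scale the survivors by 1 / keep_prob."""
    return [x / keep_prob if rng.random() < keep_prob else 0.0 for x in xs]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class LSTMLayer:
    """One LSTM layer with hypothetical small random weights."""

    def __init__(self, n_in, n_hidden, rng):
        self.n = n_hidden
        cols = n_in + n_hidden + 1  # input, previous hidden state, bias
        # 4 * n_hidden rows: input gate, forget gate, output gate, candidate g
        self.w = [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                  for _ in range(4 * n_hidden)]

    def step(self, x, h_prev, c_prev):
        v = list(x) + list(h_prev) + [1.0]
        z = [sum(w * a for w, a in zip(row, v)) for row in self.w]
        n = self.n
        i = [sigmoid(a) for a in z[:n]]
        f = [sigmoid(a) for a in z[n:2 * n]]
        o = [sigmoid(a) for a in z[2 * n:3 * n]]
        g = [math.tanh(a) for a in z[3 * n:]]
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h, c


def run_stacked(layers, inputs, keep_prob, rng):
    """Unroll a stack of LSTM layers; dropout only on non-recurrent connections."""
    states = [([0.0] * layer.n, [0.0] * layer.n) for layer in layers]
    outputs = []
    for x in inputs:                      # time steps t = 1, 2, ...
        below = x                         # the input x_t plays the role of h_t^0
        for idx, layer in enumerate(layers):
            h_prev, c_prev = states[idx]  # recurrent input from t-1: NOT dropped
            h, c = layer.step(dropout(below, keep_prob, rng), h_prev, c_prev)
            states[idx] = (h, c)
            below = h                     # feeds the next layer up at the same t
        outputs.append(dropout(below, keep_prob, rng))  # top output, non-recurrent
    return outputs, states


rng = random.Random(0)
layers = [LSTMLayer(3, 4, rng), LSTMLayer(4, 4, rng)]
seq = [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5], [0.0, 1.0, 0.0]]
train_out, _ = run_stacked(layers, seq, keep_prob=0.5, rng=rng)  # training: masks active
eval_out, _ = run_stacked(layers, seq, keep_prob=1.0, rng=rng)   # evaluation: no dropout
```

Setting `keep_prob=1.0` at evaluation time turns every mask into the identity. Because the sketch uses inverted dropout, no rescaling is needed at that point.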

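The corresponding TensorFlow API is `tf.nn.rnn_cell.DropoutWrapper` (TensorFlow 1.x), which wraps a cell and takes the following parameters: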

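`input_keep_prob`: keep probability (the probability of keeping each unit, i.e. 1 minus the dropout rate) for the dropout applied to the input before it enters the cell.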

`output_keep_prob`: keep probability for the dropout applied to the cell's output.

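The wrapper also provides `state_keep_prob`. It applies dropout to the state the cell passes to the next time step, which is exactly the t-1 → t connection. To follow the scheme above, leave it at its default of 1.0.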
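The three keep probabilities can be illustrated with a small pure-Python stand-in. `DropoutWrapperSketch` and `toy_cell` are hypothetical names, not TensorFlow code, and the toy cell is not a real LSTM. The sketch only shows where each mask is applied. Like `tf.nn.dropout`, it uses inverted dropout, so surviving values are scaled by 1/keep_prob. For the state, it masks only `h` and passes `c` through unchanged. That is our understanding of `DropoutWrapper`'s default behaviour for LSTM state tuples, but treat it as an assumption.

```python
import math
import random


def dropout(xs, keep_prob, rng):
    """Inverted dropout; keep_prob == 1.0 means no dropout at all."""
    if keep_prob >= 1.0:
        return list(xs)
    return [x / keep_prob if rng.random() < keep_prob else 0.0 for x in xs]


def toy_cell(x, state):
    """Stand-in for an LSTM cell: state is (c, h); returns (output, (c, h))."""
    c, h = state
    new_c = [0.5 * ci + xi for ci, xi in zip(c, x)]
    new_h = [math.tanh(ci) + 0.1 * hi for ci, hi in zip(new_c, h)]
    return new_h, (new_c, new_h)


class DropoutWrapperSketch:
    """Hypothetical wrapper showing where each keep probability acts."""

    def __init__(self, cell, input_keep_prob=1.0, output_keep_prob=1.0,
                 state_keep_prob=1.0, seed=0):
        self.cell = cell
        self.input_keep_prob = input_keep_prob
        self.output_keep_prob = output_keep_prob
        self.state_keep_prob = state_keep_prob
        self.rng = random.Random(seed)

    def __call__(self, x, state):
        x = dropout(x, self.input_keep_prob, self.rng)             # before the cell
        output, (c, h) = self.cell(x, state)
        output = dropout(output, self.output_keep_prob, self.rng)  # on the cell output
        # On the state handed to the next time step (the t -> t+1 connection).
        # Only h is masked here; c is passed through unchanged (assumed default).
        h = dropout(h, self.state_keep_prob, self.rng)
        return output, (c, h)


# Dropout on inputs and outputs only; the recurrent state is left alone.
cell = DropoutWrapperSketch(toy_cell, input_keep_prob=0.8, output_keep_prob=0.8)
state = ([0.0] * 3, [0.0] * 3)
for x in [[1.0, 0.0, -1.0], [0.5, 0.5, 0.5]]:
    out, state = cell(x, state)
```

Leaving `state_keep_prob` at 1.0, as in the usage lines, keeps the t-1 → t path free of dropout. This matches the scheme described earlier in the post.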

Reference: http://colah.github.io/posts/2015-08-Understanding-LSTMs/