FFmpeg Study Notes, Part 2 (YUV Video Rendering)

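- YUV overview

- 1. What YUV is

- 2. YUV sampling schemes

- 3. YUV storage layouts

- 4. YUV formats

- YUV video rendering

- 1. YUV video rendering on iOS

- 1.1 Rendering YUV video on iOS with OpenGL ES textures

- 1.1.1 Workflow

- 1.1.2 Full code 1: a custom KYLGLRender class

- 1.1.3 Full code 2: subclassing GLKViewController

- 1.2 Rendering YUV video on iOS with third-party frameworks

- 2. YUV video rendering on Android

- 3. YUV video rendering in Qt

- 4. YUV video rendering in VC++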

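YUV overview

1. What YUV is

YUV is a color encoding scheme used mainly in television systems and analog video. It separates the luminance information (Y) from the color information (UV). An image can still be displayed in full without the UV information, only in black and white, and this design neatly solved the compatibility problem between color and black-and-white television sets.

Unlike traditional RGB, YUV does not require three independent video signals to be transmitted at the same time, so transmitting video as YUV uses less bandwidth.

Historically, YUV and Y'UV referred to specific analog encodings of color information in television systems, while YCbCr referred to the digital encoding of color information, typically used for compressing and transmitting video and still images (MPEG, JPEG).

Today, the term YUV is commonly used in the computer industry to describe file formats encoded with YCbCr.

Y: luminance (luma), i.e. the grayscale value.

U and V: chrominance (chroma), which describes the hue and saturation of the image.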

2.yuv采集方式

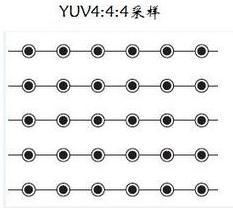

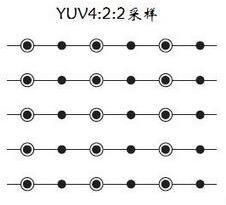

如下图:实心圆圈代表Y,空心圆圈代表UV

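3. YUV storage layouts

In the figures above, each solid circle is one Y component and each hollow circle is one pair of UV components. There are three sampling schemes: one Y per UV pair (4:4:4), two Y sharing one UV pair (4:2:2), and four Y sharing one UV pair (4:2:0).

Taking YUV 4:2:0 as an example, it is further divided into YUV420P and YUV420SP; both are YUV420 formats.

- YUV420P, planar mode (three planes: Y, U, V): the Y, U, and V components are each packed into their own plane and stored one after another. The I420 layout is shown below.

I420 : YYYYYYYY UU VV

YV12 : YYYYYYYY VV UU

- YUV420SP (NV12/NV21), two-plane mode: Y and UV are split into two planes, with U and V (CbCr) interleaved in the second plane instead of being stored as separate planes. The NV12 layout is shown below.

NV12: YYYYYYYY UVUV

NV21: YYYYYYYY VUVU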

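Based on the two layouts above, take an image with a resolution of 640*480 as an example, assuming 8 bits per sample. The Y plane is width(640) * height(480) bytes, since every pixel has its own Y sample. Because every four Y samples share one UV pair, the U plane and the V plane are each width(640) * height(480) * (1 / 4) bytes. The full image therefore takes Y + U + V = 3 / 2 * (width(640) * height(480)) bytes.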
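The size arithmetic above can be checked with a few lines of code. This is a minimal sketch in plain C++, assuming 8-bit samples, even dimensions, and no row padding; `Yuv420Sizes` and `yuv420FrameSize` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper type: byte sizes of the planes of an 8-bit YUV 4:2:0 frame.
struct Yuv420Sizes {
    std::size_t y;      // one luma byte per pixel
    std::size_t u;      // one U byte per 2x2 block of pixels
    std::size_t v;      // one V byte per 2x2 block of pixels
    std::size_t total;  // y + u + v = width * height * 3 / 2
};

// Assumes even width and height, so the chroma planes divide exactly.
Yuv420Sizes yuv420FrameSize(std::size_t width, std::size_t height) {
    assert(width % 2 == 0 && height % 2 == 0);
    Yuv420Sizes s{};
    s.y = width * height;
    s.u = (width / 2) * (height / 2);
    s.v = s.u;
    s.total = s.y + s.u + s.v;
    return s;
}
```

For 640*480 this gives 307200 bytes of Y, 76800 bytes each of U and V, and 460800 bytes in total. The same totals apply to I420, YV12, NV12, and NV21, since they differ only in how the chroma bytes are ordered.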

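In a program, for an image with a resolution of 640*480 in YUV420P format, the Y, U, and V components are easy to locate. Suppose the image is stored in a byte array byte[] src. For I420, the three planes occupy these index ranges:

Y = src[0 .. width * height)

U = src[width * height .. width * height * 5 / 4)

V = src[width * height * 5 / 4 .. width * height * 3 / 2)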
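The plane layout described above maps directly onto pointer arithmetic. A minimal C++ sketch, assuming a tightly packed I420 buffer with even dimensions and no row padding; `I420Planes` and `splitI420` are hypothetical names, not part of FFmpeg or iOS.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical view over a tightly packed I420 buffer (no row padding assumed).
struct I420Planes {
    const std::uint8_t* y;  // width * height bytes
    const std::uint8_t* u;  // (width / 2) * (height / 2) bytes
    const std::uint8_t* v;  // (width / 2) * (height / 2) bytes
};

I420Planes splitI420(const std::uint8_t* src, std::size_t width, std::size_t height) {
    assert(src != nullptr);
    const std::size_t ySize = width * height;
    const std::size_t chromaSize = (width / 2) * (height / 2);
    I420Planes p{};
    p.y = src;                 // Y starts at the beginning of the buffer
    p.u = src + ySize;         // U follows the Y plane
    p.v = p.u + chromaSize;    // V follows the U plane
    return p;
}
```

For YV12 the same offsets apply with the U and V pointers swapped, because YV12 stores the V plane before the U plane.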

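4. YUV formats

YUV formats fall into two broad categories: packed and planar.

- Packed: the Y, U, and V components are stored in a single array, usually with a few adjacent pixels grouped into one macro-pixel.

- Planar: the Y, U, and V components are stored in three separate arrays, one plane per component.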
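To make the packed versus planar distinction concrete, here is a small C++ sketch that unpacks a packed YUYV (YUY2) buffer into three planes. YUYV is chosen as the example packed format; in it every 4 bytes form one macro-pixel `Y0 U Y1 V` covering two horizontally adjacent pixels. The names `Planar422` and `unpackYUYV` are hypothetical, and the code assumes an even width and no row padding.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Planar 4:2:2 result: full-size Y plane, half-width U and V planes.
struct Planar422 {
    std::vector<std::uint8_t> y, u, v;
};

// Split each "Y0 U Y1 V" macro-pixel into the three planes.
Planar422 unpackYUYV(const std::uint8_t* packed, std::size_t width, std::size_t height) {
    assert(width % 2 == 0);
    Planar422 out;
    const std::size_t macroPixels = (width / 2) * height;
    out.y.reserve(macroPixels * 2);
    out.u.reserve(macroPixels);
    out.v.reserve(macroPixels);
    for (std::size_t i = 0; i < macroPixels; ++i) {
        const std::uint8_t* mp = packed + i * 4;
        out.y.push_back(mp[0]);  // Y of the left pixel
        out.u.push_back(mp[1]);  // U shared by both pixels
        out.y.push_back(mp[2]);  // Y of the right pixel
        out.v.push_back(mp[3]);  // V shared by both pixels
    }
    return out;
}
```

A planar layout like the result here is what the texture-based renderer later in this post expects, since each plane can be uploaded as its own single-channel texture.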

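For more background on YUV, see the following references:

A Detailed Explanation of YUV Data Formats (详解YUV数据格式)

An Illustrated Guide to the YUV420 Data Format (图文详解YUV420数据格式)

YUV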

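YUV video rendering

1. YUV video rendering on iOS

1.1 Rendering YUV video on iOS with OpenGL ES textures

Apple deprecated OpenGL ES in 2018 and moved its low-level rendering to its own engine, Metal. New projects are better off rendering with Metal, which is built to take full advantage of Apple hardware and is generally more efficient than OpenGL.

OpenGL ES is a trimmed-down version of OpenGL aimed at embedded devices such as phones and game consoles. It provides a software interface to the device's graphics hardware, and by driving that hardware directly it lets us draw efficiently. In the iOS architecture OpenGL ES sits in the media layer, alongside Quartz (Core Graphics); it is a relatively low-level technology that gives control over how every frame is drawn. Because rendering is done by the graphics hardware (GPU) rather than the CPU, it can reach higher frame rates without stalling under heavy load.

OpenGL renders a YUV video stream mainly through textures: the YUV data is uploaded as textures and then drawn.

Here is a brief introduction to textures.

- Textures

We load the YUV data into OpenGL as textures, then map the textures onto a rectangle to complete the drawing.

Each vertex is assigned a texture coordinate, and OpenGL interpolates the texture coordinates to obtain the values of the pixels inside the shape. OpenGL texture coordinates are normalized to the range 0 to 1, with the origin at the bottom-left corner.

A texture target is a handle defined by the graphics API that points to the GPU memory the current operation applies to.

A texture object is the GPU memory we create to hold a texture; in practice we work with the ID returned when it is created.

Texture units are the slots through which a shader can sample textures. Their number depends on the GPU: OpenGL ES 2.0 guarantees at least 8, and desktop OpenGL 3.x and later guarantees at least 16. After a texture unit is activated, texture targets refer to that unit; GL_TEXTURE0 is active by default.

Think of a revolver: the texture target is the single chamber currently lined up with the barrel, a texture object is a bullet, and the texture units are the six chambers of the cylinder. The code below shows how they relate.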

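// An array of texture objects; it holds the texture object IDs

GLuint texture[3];

// Create the texture objects: the first argument is how many to create, the second is the base address of the array

glGenTextures(3, texture);

// Activate texture unit GL_TEXTURE0 for the texture sampling that follows

glActiveTexture(GL_TEXTURE0);

// Bind texture object texture[0] to the texture target GL_TEXTURE_2D; subsequent operations on the target apply to this object

glBindTexture(GL_TEXTURE_2D, texture[0]);

// Upload the image: sampling happens through GL_TEXTURE0, and the image data is stored in the object bound to GL_TEXTURE_2D, i.e. texture[0]

glTexImage2D(GL_TEXTURE_2D, ...);

// Unbind: further operations on GL_TEXTURE_2D no longer affect texture[0], and the memory of texture[0] is not released

glBindTexture(GL_TEXTURE_2D, 0);

// New texture objects can keep being created until GPU memory runs out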

Download the iOS YUV video rendering demo

1.1.1 Workflow

- Create the EAGLContext object

- Create the OpenGL preview layer

CAEAGLLayer *eaglLayer = (CAEAGLLayer *)self.layer;

eaglLayer.opaque = YES;

eaglLayer.drawableProperties = @{kEAGLDrawablePropertyRetainedBacking : [NSNumber numberWithBool:NO],

kEAGLDrawablePropertyColorFormat : kEAGLColorFormatRGBA8};

- Create the OpenGL context object

EAGLContext *context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];

[EAGLContext setCurrentContext:context];

- Set up the context's render buffers

- (void)setupBuffersWithContext:(EAGLContext *)context width:(int *)width height:(int *)height colorBufferHandle:(GLuint *)colorBufferHandle frameBufferHandle:(GLuint *)frameBufferHandle {

glDisable(GL_DEPTH_TEST);

glEnableVertexAttribArray(ATTRIB_VERTEX);

glVertexAttribPointer(ATTRIB_VERTEX, 2, GL_FLOAT, GL_FALSE, 2 * sizeof(GLfloat), 0);

glEnableVertexAttribArray(ATTRIB_TEXCOORD);

glVertexAttribPointer(ATTRIB_TEXCOORD, 2, GL_FLOAT, GL_FALSE, 2 * sizeof(GLfloat), 0);

glGenFramebuffers(1, frameBufferHandle);

glBindFramebuffer(GL_FRAMEBUFFER, *frameBufferHandle);

glGenRenderbuffers(1, colorBufferHandle);

glBindRenderbuffer(GL_RENDERBUFFER, *colorBufferHandle);

[context renderbufferStorage:GL_RENDERBUFFER fromDrawable:(CAEAGLLayer *)self.layer];

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_WIDTH , width);

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_HEIGHT, height);

glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_RENDERBUFFER, *colorBufferHandle);

}

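- Load the shaders

- Modify the shaders

If you are unfamiliar with GLSL syntax or with YUV, see the articles "The OpenGL Shading Language: GLSL" and "An Analysis of YUV Color Encoding".

(1) Vertex shader

// vertex shader

attribute vec4 position;

attribute mediump vec2 textureCoordinate; // input texture coordinate

varying mediump vec2 coordinate; // texture coordinate passed to the fragment shader; it is interpolated automatically

void main(void) {

gl_Position = position;

coordinate = textureCoordinate;

}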

(2) Fragment shader

// fragment shader

precision mediump float;

uniform sampler2D SamplerY; // sampler2D uniform used to read the Y plane of I420 data

uniform sampler2D SamplerU; // U plane

uniform sampler2D SamplerV; // V plane

uniform sampler2D SamplerNV12_Y; // Y plane of NV12 data

uniform sampler2D SamplerNV12_UV; // UV plane of NV12 data

varying highp vec2 coordinate; // texture coordinate

uniform int yuvType; // 0 means I420, 1 means NV12

// Offsets and coefficient rows for the YUV --> RGB conversion

const vec3 delyuv = vec3(-0.0/255.0,-128.0/255.0,-128.0/255.0);

const vec3 matYUVRGB1 = vec3(1.0,0.0,1.402);

const vec3 matYUVRGB2 = vec3(1.0,-0.344,-0.714);

const vec3 matYUVRGB3 = vec3(1.0,1.772,0.0);

void main()

{

vec3 CurResult;

highp vec3 yuv;

if (yuvType == 0){

yuv.x = texture2D(SamplerY, coordinate).r; // each texture holds a single YUV plane, so the sampled r, g, and b values are identical

yuv.y = texture2D(SamplerU, coordinate).r;

yuv.z = texture2D(SamplerV, coordinate).r;

}

else{

yuv.x = texture2D(SamplerNV12_Y, coordinate).r;

yuv.y = texture2D(SamplerNV12_UV, coordinate).r; // NV12 has two planes; the UV plane is uploaded with a format that puts U in r, g, b and V in a

yuv.z = texture2D(SamplerNV12_UV, coordinate).a; // this is explained further below

}

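yuv += delyuv; // sampled values are normalized from 0-255 to 0-1; subtracting 128/255 from U and V re-centers them on zero

// Use dot products in place of a matrix multiplication to convert to RGB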

CurResult.x = dot(yuv,matYUVRGB1);

CurResult.y = dot(yuv,matYUVRGB2);

CurResult.z = dot(yuv,matYUVRGB3);

// Write the resulting pixel color

gl_FragColor = vec4(CurResult.rgb, 1);

}
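The conversion the fragment shader performs can be reproduced on the CPU, which is handy for checking shader output against known pixel values. This is a minimal C++ sketch that uses the same coefficients as the shader above (1.402, 0.344, 0.714, 1.772) but works on 0-255 bytes instead of normalized floats; `Rgb8`, `clampToByte`, and `yuvToRgb` are hypothetical names, and full-range input is assumed.

```cpp
#include <algorithm>
#include <cmath>
#include <cstdint>

struct Rgb8 { std::uint8_t r, g, b; };

// Clamp to [0, 255] and round to the nearest integer.
static std::uint8_t clampToByte(float x) {
    return static_cast<std::uint8_t>(std::lround(std::min(255.0f, std::max(0.0f, x))));
}

// Same math as the shader: re-center U and V on zero, then take three
// dot products with the coefficient rows.
Rgb8 yuvToRgb(std::uint8_t y, std::uint8_t u, std::uint8_t v) {
    const float Y = static_cast<float>(y);
    const float U = static_cast<float>(u) - 128.0f;
    const float V = static_cast<float>(v) - 128.0f;
    Rgb8 out;
    out.r = clampToByte(Y + 1.402f * V);
    out.g = clampToByte(Y - 0.344f * U - 0.714f * V);
    out.b = clampToByte(Y + 1.772f * U);
    return out;
}
```

With U = V = 128 the chroma terms vanish and the result is a gray whose level equals Y, which matches the earlier remark that an image without UV information is still a complete black-and-white image.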

- Load the shader

- (void)loadShaderWithBufferType:(KYLPixelBufferType)type {

GLuint vertShader, fragShader;

NSURL *vertShaderURL, *fragShaderURL;

NSString *shaderName;

GLuint program;

program = glCreateProgram();

if (type == KYLPixelBufferTypeNV12) {

shaderName = @"KYLPreviewNV12Shader";

_nv12Program = program;

} else if (type == KYLPixelBufferTypeRGB) {

shaderName = @"KYLPreviewRGBShader";

_rgbProgram = program;

}

vertShaderURL = [[NSBundle mainBundle] URLForResource:shaderName withExtension:@"vsh"];

if (![self compileShader:&vertShader type:GL_VERTEX_SHADER URL:vertShaderURL]) {

log4cplus_error(kModuleName, "Failed to compile vertex shader");

return;

}

fragShaderURL = [[NSBundle mainBundle] URLForResource:shaderName withExtension:@"fsh"];

if (![self compileShader:&fragShader type:GL_FRAGMENT_SHADER URL:fragShaderURL]) {

log4cplus_error(kModuleName, "Failed to compile fragment shader");

return;

}

glAttachShader(program, vertShader);

glAttachShader(program, fragShader);

glBindAttribLocation(program, ATTRIB_VERTEX , "position");

glBindAttribLocation(program, ATTRIB_TEXCOORD, "inputTextureCoordinate");

if (![self linkProgram:program]) {

if (vertShader) {

glDeleteShader(vertShader);

vertShader = 0;

}

if (fragShader) {

glDeleteShader(fragShader);

fragShader = 0;

}

if (program) {

glDeleteProgram(program);

program = 0;

}

return;

}

if (type == KYLPixelBufferTypeNV12) {

uniforms[UNIFORM_Y] = glGetUniformLocation(program , "luminanceTexture");

uniforms[UNIFORM_UV] = glGetUniformLocation(program, "chrominanceTexture");

uniforms[UNIFORM_COLOR_CONVERSION_MATRIX] = glGetUniformLocation(program, "colorConversionMatrix");

} else if (type == KYLPixelBufferTypeRGB) {

_displayInputTextureUniform = glGetUniformLocation(program, "inputImageTexture");

}

if (vertShader) {

glDetachShader(program, vertShader);

glDeleteShader(vertShader);

}

if (fragShader) {

glDetachShader(program, fragShader);

glDeleteShader(fragShader);

}

}

- (BOOL)compileShader:(GLuint *)shader type:(GLenum)type URL:(NSURL *)URL {

NSError *error;

NSString *sourceString = [[NSString alloc] initWithContentsOfURL:URL

encoding:NSUTF8StringEncoding

error:&error];

if (sourceString == nil) {

log4cplus_error(kModuleName, "Failed to load vertex shader: %s", [error localizedDescription].UTF8String);

return NO;

}

GLint status;

const GLchar *source;

source = (GLchar *)[sourceString UTF8String];

*shader = glCreateShader(type);

glShaderSource(*shader, 1, &source, NULL);

glCompileShader(*shader);

glGetShaderiv(*shader, GL_COMPILE_STATUS, &status);

if (status == 0) {

glDeleteShader(*shader);

return NO;

}

return YES;

}

- (BOOL)linkProgram:(GLuint)prog {

GLint status;

glLinkProgram(prog);

glGetProgramiv(prog, GL_LINK_STATUS, &status);

if (status == 0) {

return NO;

}

return YES;

}

- Create the video texture cache

if (!*videoTextureCache) {

CVReturn err = CVOpenGLESTextureCacheCreate(kCFAllocatorDefault, NULL, context, NULL, videoTextureCache);

if (err != noErr)

log4cplus_error(kModuleName, "Error at CVOpenGLESTextureCacheCreate %d",err);

}

- Load the YUV data into texture objects

// Create the texture objects; three are needed, one per plane

-(void)setupTexture{

_planarTextureHandles = (GLuint *)malloc(3*sizeof(GLuint));

glGenTextures(3, _planarTextureHandles);

}

-(void)feedTextureWithImageData:(Byte*)imageData imageSize:(CGSize)imageSize type:(NSInteger)type{

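// Compute the base address of each plane from the I420 layout

// imageSize holds floating-point values, so convert to an integer byte count before doing pointer arithmetic

size_t ySize = (size_t)imageSize.width * (size_t)imageSize.height;

Byte * yPlane = imageData;

Byte * uPlane = imageData + ySize;

Byte * vPlane = imageData + ySize * 5 / 4;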

if (type == 0) {

[self textureYUV:yPlane widthType:imageSize.width heightType:imageSize.height index:0];

[self textureYUV:uPlane widthType:imageSize.width/2 heightType:imageSize.height/2 index:1];

[self textureYUV:vPlane widthType:imageSize.width/2 heightType:imageSize.height/2 index:2];

}else{

[self textureYUV:yPlane widthType:imageSize.width heightType:imageSize.height index:0];

[self textureNV12:uPlane widthType:imageSize.width/2 heightType:imageSize.height/2 index:1];

}

}

- (void) textureYUV: (Byte*)imageData widthType: (int) width heightType: (int) height index: (int) index

{

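// Bind the texture object to the texture target

glBindTexture(GL_TEXTURE_2D, _planarTextureHandles[index]);

// Use linear filtering for both minification and magnification

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR );

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR );

// Clamp to the edge texels on both the S (x) and T (y) axes

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

// Upload the image data. GL_LUMINANCE says the pixel format is luminance only. The third and seventh arguments are both GL_LUMINANCE,

// but they mean different things: the former is the internal format of the texture object, the latter is the pixel format of the source data

// In the resulting texture the r, g, and b values of every texel equal the luminance of the source pixel, which here is the sample value of one YUV plane

glTexImage2D( GL_TEXTURE_2D, 0, GL_LUMINANCE, width, height, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, imageData );

// Unbind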

glBindTexture(GL_TEXTURE_2D, 0);

}

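- Draw on a timer with CADisplayLink

The YUV data can now be loaded into texture objects. The next step is to rework the render method so that it draws them to the screen. CADisplayLink can call render periodically, which ties the frame rate of the YUV video stream to the screen refresh rate.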

- (void)render {

// Clear to a black background

glClearColor(0, 0, 0, 1.0);

glClear(GL_COLOR_BUFFER_BIT);

// Get the screen scale factor

CGFloat scale = [[UIScreen mainScreen] scale];

CGFloat width = _frame.size.width*scale;

CGFloat height = _frame.size.height*scale;

// Set the OpenGL viewport

glViewport(0, 0,width,height);

[self drawTexture];

// EAGLContext presents the image drawn by OpenGL to the CAEAGLLayer

[_context presentRenderbuffer:GL_RENDERBUFFER];

}

// Sampler uniform locations in the fragment shader

GLint sampleHandle[3];

// Draw the textures

- (void) drawTexture{

// Pass the texture coordinates to the shader

glVertexAttribPointer([AVGLShareInstance shareInstance].texCoordAttributeLocation, 2, GL_FLOAT, GL_FALSE,

sizeof(Vertex), (void*)offsetof(Vertex, TexCoord));

glEnableVertexAttribArray([AVGLShareInstance shareInstance].texCoordAttributeLocation);

// Pass the pixel format to the fragment shader: look up the uniform location, then set it to the format type

GLint yuvTypeLocation = glGetUniformLocation(_programHandle, "yuvType");

glUniform1i(yuvTypeLocation, (GLint)type);

// type: 0 is I420, 1 is NV12

int planarCount = 0;

if (type == 0) {

planarCount = 3; // I420 has three planes

sampleHandle[0] = glGetUniformLocation(_programHandle, "SamplerY");

sampleHandle[1] = glGetUniformLocation(_programHandle, "SamplerU");

sampleHandle[2] = glGetUniformLocation(_programHandle, "SamplerV");

}else{

planarCount = 2; // NV12 has two planes

sampleHandle[0] = glGetUniformLocation(_programHandle, "SamplerNV12_Y");

sampleHandle[1] = glGetUniformLocation(_programHandle, "SamplerNV12_UV");

}

for (int i=0; i<planarCount; i++){

glActiveTexture(GL_TEXTURE0+i);

glBindTexture(GL_TEXTURE_2D, _planarTextureHandles[i]);

glUniform1i(sampleHandle[i], i);

}

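// Draw call: build the geometry from triangles. The vertex indices live in a buffer object (VBO), so the last argument is 0

// After this call the vertex shader runs once per vertex and then the fragment shader runs once per pixel; the result is stored in the render buffer.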

glDrawElements(GL_TRIANGLES, 6,GL_UNSIGNED_BYTE, 0);

}

- Render the pixelBuffer to the screen

- Clear the cached textures before rendering

- (void)cleanUpTextures {

if (_lumaTexture) {

CFRelease(_lumaTexture);

_lumaTexture = NULL;

}

if (_chromaTexture) {

CFRelease(_chromaTexture);

_chromaTexture = NULL;

}

if (_renderTexture) {

CFRelease(_renderTexture);

_renderTexture = NULL;

}

CVOpenGLESTextureCacheFlush(_videoTextureCache, 0);

}

- Determine the video data type from the pixelBuffer format

KYLPixelBufferType bufferType;

if (CVPixelBufferGetPixelFormatType(pixelBuffer) == kCVPixelFormatType_420YpCbCr8BiPlanarFullRange || CVPixelBufferGetPixelFormatType(pixelBuffer) == kCVPixelFormatType_420YpCbCr8BiPlanarVideoRange) {

bufferType = KYLPixelBufferTypeNV12;

} else if (CVPixelBufferGetPixelFormatType(pixelBuffer) == kCVPixelFormatType_32BGRA) {

bufferType = KYLPixelBufferTypeRGB;

}else {

log4cplus_error(kModuleName, "Not support current format.");

return;

}

- Create CVOpenGLESTexture objects from the current pixelBuffer

CVOpenGLESTextureRef lumaTexture,chromaTexture,renderTexture;

if (bufferType == KYLPixelBufferTypeNV12) {

// Y

glActiveTexture(GL_TEXTURE0);

error = CVOpenGLESTextureCacheCreateTextureFromImage(kCFAllocatorDefault,

videoTextureCache,

pixelBuffer,

NULL,

GL_TEXTURE_2D,

GL_LUMINANCE,

frameWidth,

frameHeight,

GL_LUMINANCE,

GL_UNSIGNED_BYTE,

0,

&lumaTexture);

if (error) {

log4cplus_error(kModuleName, "Error at CVOpenGLESTextureCacheCreateTextureFromImage %d", error);

}else {

_lumaTexture = lumaTexture;

}

glBindTexture(CVOpenGLESTextureGetTarget(lumaTexture), CVOpenGLESTextureGetName(lumaTexture));

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

// UV

glActiveTexture(GL_TEXTURE1);

error = CVOpenGLESTextureCacheCreateTextureFromImage(kCFAllocatorDefault,

videoTextureCache,

pixelBuffer,

NULL,

GL_TEXTURE_2D,

GL_LUMINANCE_ALPHA,

frameWidth / 2,

frameHeight / 2,

GL_LUMINANCE_ALPHA,

GL_UNSIGNED_BYTE,

1,

&chromaTexture);

if (error) {

log4cplus_error(kModuleName, "Error at CVOpenGLESTextureCacheCreateTextureFromImage %d", error);

}else {

_chromaTexture = chromaTexture;

}

glBindTexture(CVOpenGLESTextureGetTarget(chromaTexture), CVOpenGLESTextureGetName(chromaTexture));

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

} else if (bufferType == KYLPixelBufferTypeRGB) {

// RGB

glActiveTexture(GL_TEXTURE0);

error = CVOpenGLESTextureCacheCreateTextureFromImage(kCFAllocatorDefault,

videoTextureCache,

pixelBuffer,

NULL,

GL_TEXTURE_2D,

GL_RGBA,

frameWidth,

frameHeight,

GL_BGRA,

GL_UNSIGNED_BYTE,

0,

&renderTexture);

if (error) {

log4cplus_error(kModuleName, "Error at CVOpenGLESTextureCacheCreateTextureFromImage %d", error);

}else {

_renderTexture = renderTexture;

}

glBindTexture(CVOpenGLESTextureGetTarget(renderTexture), CVOpenGLESTextureGetName(renderTexture));

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameterf(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

}

- Select the OpenGL program

glBindFramebuffer(GL_FRAMEBUFFER, frameBufferHandle);

glViewport(0, 0, backingWidth, backingHeight);

glClearColor(0.1f, 0.0f, 0.0f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT);

if (bufferType == KYLPixelBufferTypeNV12) {

if (self.lastBufferType != bufferType) {

glUseProgram(nv12Program);

glUniform1i(uniforms[UNIFORM_Y], 0);

glUniform1i(uniforms[UNIFORM_UV], 1);

glUniformMatrix3fv(uniforms[UNIFORM_COLOR_CONVERSION_MATRIX], 1, GL_FALSE, preferredConversion);

}

} else if (bufferType == KYLPixelBufferTypeRGB) {

if (self.lastBufferType != bufferType) {

glUseProgram(rgbProgram);

glUniform1i(displayInputTextureUniform, 0);

}

}

- Compute the non-full-screen size

static CGSize normalizedSamplingSize;

if (self.lastFullScreen != self.isFullScreen || self.pixelbufferWidth != frameWidth || self.pixelbufferHeight != frameHeight

|| normalizedSamplingSize.width == 0 || normalizedSamplingSize.height == 0 || self.screenWidth != [UIScreen mainScreen].bounds.size.width) {

normalizedSamplingSize = [self getNormalizedSamplingSize:CGSizeMake(frameWidth, frameHeight)];

self.lastFullScreen = self.isFullScreen;

self.pixelbufferWidth = frameWidth;

self.pixelbufferHeight = frameHeight;

self.screenWidth = [UIScreen mainScreen].bounds.size.width;

quadVertexData[0] = -1 * normalizedSamplingSize.width;

quadVertexData[1] = -1 * normalizedSamplingSize.height;

quadVertexData[2] = normalizedSamplingSize.width;

quadVertexData[3] = -1 * normalizedSamplingSize.height;

quadVertexData[4] = -1 * normalizedSamplingSize.width;

quadVertexData[5] = normalizedSamplingSize.height;

quadVertexData[6] = normalizedSamplingSize.width;

quadVertexData[7] = normalizedSamplingSize.height;

}
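The helper getNormalizedSamplingSize used above is not shown in this post. As a rough idea of what such a helper might compute, here is a C++ sketch of an aspect-fit calculation: it returns the fraction of the view, per axis, that the frame should occupy so that it fits entirely without distortion. This is an assumption about the intent, not the author's implementation; `Size2f`, `aspectFitNormalizedSize`, and `fillQuad` are hypothetical names, and all sizes are assumed to be positive.

```cpp
#include <cassert>

struct Size2f { float width, height; };

// Hypothetical stand-in for getNormalizedSamplingSize (assumes aspect-fit).
Size2f aspectFitNormalizedSize(Size2f frame, Size2f view) {
    assert(frame.width > 0 && frame.height > 0 && view.width > 0 && view.height > 0);
    const float frameAspect = frame.width / frame.height;
    const float viewAspect = view.width / view.height;
    Size2f n{1.0f, 1.0f};
    if (frameAspect > viewAspect) {
        // Frame is wider than the view: full width, reduced height (letterbox).
        n.height = viewAspect / frameAspect;
    } else {
        // Frame is taller than the view: full height, reduced width (pillarbox).
        n.width = frameAspect / viewAspect;
    }
    return n;
}

// Fill a triangle-strip quad in the same vertex order as quadVertexData above.
void fillQuad(Size2f n, float quad[8]) {
    quad[0] = -n.width; quad[1] = -n.height;  // bottom-left
    quad[2] =  n.width; quad[3] = -n.height;  // bottom-right
    quad[4] = -n.width; quad[5] =  n.height;  // top-left
    quad[6] =  n.width; quad[7] =  n.height;  // top-right
}
```

For example, a square frame shown in a view twice as wide as it is tall yields a normalized size of (0.5, 1.0), so the quad spans half of the clip-space width and the full height.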

- Render the frame

glVertexAttribPointer(ATTRIB_VERTEX, 2, GL_FLOAT, 0, 0, quadVertexData);

glEnableVertexAttribArray(ATTRIB_VERTEX);

glVertexAttribPointer(ATTRIB_TEXCOORD, 2, GL_FLOAT, 0, 0, quadTextureData);

glEnableVertexAttribArray(ATTRIB_TEXCOORD);

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

glBindRenderbuffer(GL_RENDERBUFFER, colorBufferHandle);

if ([EAGLContext currentContext] == context) {

[context presentRenderbuffer:GL_RENDERBUFFER];

}

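1.1.2 Full code 1: a custom KYLGLRender class

- Approach 1:

Abstract the rendering into a class

Only a single KYLGLRender class needs to be defined

Download the complete YUV rendering demo

- The header file KYLGLRender.h: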

//

// KYLGLRender.h

// yuvShowKYLDemo

//

// Created by yulu kong on 2019/7/27.

// Copyright © 2019 yulu kong. All rights reserved.

//

#ifndef __KYLGLRENDER_H_

#define __KYLGLRENDER_H_

#import <Foundation/Foundation.h>

#import <QuartzCore/QuartzCore.h>

#import <OpenGLES/EAGL.h>

#import <OpenGLES/ES2/gl.h>

#import <OpenGLES/ES2/glext.h>

#import <QuartzCore/CAMediaTiming.h>

#import <QuartzCore/CATransform3D.h>

#import <Foundation/NSObject.h>

typedef GLint EGLint;

typedef struct KYLYUVData

{

unsigned int width;

unsigned int height;

uint8_t * data[3];

} *PYUVData;

struct KYLScreenParam

{

unsigned int width;

unsigned int height;

};

class GLRender

{

typedef enum

{

VERTICAL = 0,

HORIZONTAL

}ORIENT_T;

public:

GLRender();

~GLRender();

public:

int nativeGLRender(PYUVData &yuvdata);

void nativeGLRender(char *pFrame);

int digitalRegionZoom(int bootom_x , int bootom_y, int top_x, int top_y);

int close();

bool setGLSurface(const int p_nWidth, const int p_nHeight, CAEAGLLayer *layer);

private:

void render(const void *data);

void render(PYUVData &yuvdata);

void configGL();

GLuint loadShader(GLenum shaderType, const char* pSource);

GLuint createProgram(const char* pVertexSource, const char* pFragmentSource);

bool setupGraphics(EGLint w, EGLint h) ;

void setTexture(GLuint texture);

void setupBuffers();

void createBuffers();

void releaseBuffers();

private:

NSCondition *m_lock;

int mWidth, mGLSurfaceWidth;

int mHeight, mGLSurfaceHeight;

bool surface_ok;

ORIENT_T mOrient;

GLfloat *mSquareVertices;

private:

EAGLContext *mContext;

GLuint mViewRenderbuffer;

GLuint mViewFramebuffer;

CAEAGLLayer *mSurface;

GLuint mGlProgram;

GLuint m_texturePlanarY;

GLuint m_texturePlanarU;

GLuint m_texturePlanarV;

GLint mPositionLoc;

GLint mTexCoordLoc;

GLint mSamplerY;

GLint mSamplerU;

GLint mSamplerV;

int mCurrentLayerWidth;

int mCurrentLayerHeight;

};

#endif

- The implementation file:

//

// KYLGLRender.m

// yuvShowKYLDemo

//

// Created by yulu kong on 2019/7/27.

// Copyright © 2019 yulu kong. All rights reserved.

//

#import "KYLGLRender.h"

#define LOGI printf("GLRender: "); printf

#define LOGE printf("GLRender: "); printf

#define eglGetError glGetError

#import <OpenGLES/EAGLDrawable.h>

#import <QuartzCore/QuartzCore.h>

typedef GLint EGLint;

#define EGL_SUCCESS GL_NO_ERROR

const int VERTEX_STRIDE = 6 * sizeof(GLfloat);

const GLfloat squareVertices[] = {

1.0f, -1.0f, 0.0f, 1.0f, // Position 0

1.0f, 1.0f, // TexCoord 0

1.0f, 1.f, 0.0f, 1.0f, // Position 1

1.0f, 0.0f, // TexCoord 1

-1.0f, -1.0f, 0.0f, 1.0f, // Position 2

0.0f, 1.0f, // TexCoord 2

-1.0f, 1.f, 0.0f, 1.0f, // Position 3

0.0f, 0.0f, // TexCoord 3

};

//Vertex shader language

static const char gVertexShader[] =

"attribute vec4 a_Position;\n"

"attribute vec2 a_texCoord;\n"

"varying vec2 v_texCoord;\n"

"void main() {\n"

" gl_Position = a_Position;\n"

" v_texCoord = a_texCoord;\n"

"}\n";

//Fragment shader language

static const char gFragmentShader[] =

"varying lowp vec2 v_texCoord;\n"

"uniform sampler2D SamplerY;\n"

"uniform sampler2D SamplerU;\n"

"uniform sampler2D SamplerV;\n"

"void main(void)\n"

"{\n"

"mediump vec3 yuv;\n"

"lowp vec3 rgb;\n"

"yuv.x = texture2D(SamplerY, v_texCoord).r;\n"

"yuv.y = texture2D(SamplerU, v_texCoord).r - 0.5;\n"

"yuv.z = texture2D(SamplerV, v_texCoord).r - 0.5;\n"

"rgb = mat3( 1, 1, 1,\n"

"0, -0.39465, 2.03211,\n"

"1.13983, -0.58060, 0) * yuv;\n"

"gl_FragColor = vec4(rgb, 1);\n"

"}\n";

GLRender::GLRender()

:surface_ok(false)

,mContext(NULL)

,mOrient(VERTICAL)

,mViewRenderbuffer(0)

,mViewFramebuffer(0)

{

mSquareVertices = (GLfloat *)malloc(sizeof(squareVertices));

memcpy(mSquareVertices,squareVertices,sizeof(squareVertices));

m_lock = [[NSCondition alloc] init];

}

GLRender::~GLRender()

{

this->releaseBuffers();

free(mSquareVertices);

}

int GLRender::close()

{

[m_lock lock];

if (surface_ok)

{

if (mContext)

{

glClearColor(0, 0, 0, 0);

glClear(GL_COLOR_BUFFER_BIT|GL_DEPTH_BUFFER_BIT);

[mContext presentRenderbuffer:GL_RENDERBUFFER];

[EAGLContext setCurrentContext:mContext];

this->releaseBuffers();

[EAGLContext setCurrentContext:nil];

if (mContext){

mContext = nil;

}

}

mSurface = NULL;

surface_ok = false ;

}

[m_lock unlock];

return 0;

}

GLuint GLRender::loadShader(GLenum shaderType, const char* pSource) {

GLuint shader = glCreateShader(shaderType);

if (shader) {

glShaderSource(shader, 1, &pSource, NULL);

glCompileShader(shader);

GLint compiled = 0;

glGetShaderiv(shader, GL_COMPILE_STATUS, &compiled);

if (!compiled) {

GLint infoLen = 0;

glGetShaderiv(shader, GL_INFO_LOG_LENGTH, &infoLen);

if (infoLen) {

char* buf = (char*) malloc(infoLen);

if (buf != NULL) {

glGetShaderInfoLog(shader, infoLen, NULL, buf);

LOGE("Could not compile shader %d:\n%s\n", shaderType, buf);

free(buf);

}

glDeleteShader(shader);

shader = 0;

}

}

}

return shader;

}

GLuint GLRender::createProgram(const char* pVertexSource, const char* pFragmentSource)

{

GLuint program = glCreateProgram();

if (program) {

GLuint vertexShader = loadShader(GL_VERTEX_SHADER, pVertexSource);

if (!vertexShader) {

return 0;

}else{

glAttachShader(program, vertexShader);

}

GLuint pixelShader = loadShader(GL_FRAGMENT_SHADER, pFragmentSource);

if (!pixelShader) {

return 0;

}else{

glAttachShader(program, pixelShader);

}

glLinkProgram(program);

GLint linkStatus = GL_FALSE;

glGetProgramiv(program, GL_LINK_STATUS, &linkStatus);

if (linkStatus != GL_TRUE) {

GLint bufLength = 0;

glGetProgramiv(program, GL_INFO_LOG_LENGTH, &bufLength);

if (bufLength) {

char* buf = (char*) malloc(bufLength);

if (buf) {

glGetProgramInfoLog(program, bufLength, NULL, buf);

LOGE("Could not link program:\n%s\n", buf);

free(buf);

}

}

glDeleteProgram(program);

program = 0;

}

}

return program;

}

void GLRender::setupBuffers()

{

glDisable(GL_DEPTH_TEST);

mPositionLoc = glGetAttribLocation(mGlProgram, "a_Position");

glEnableVertexAttribArray(mPositionLoc);

glVertexAttribPointer(mPositionLoc, 4, GL_FLOAT,GL_FALSE, VERTEX_STRIDE, mSquareVertices);

mTexCoordLoc = glGetAttribLocation(mGlProgram, "a_texCoord");

glEnableVertexAttribArray(mTexCoordLoc);

glVertexAttribPointer(mTexCoordLoc, 2, GL_FLOAT, GL_FALSE, VERTEX_STRIDE, &mSquareVertices[4]);

this->createBuffers();

}

void GLRender::createBuffers()

{

[EAGLContext setCurrentContext:mContext];

//Create render buffer

glGenRenderbuffers(1, &mViewRenderbuffer);

glBindRenderbuffer(GL_RENDERBUFFER, mViewRenderbuffer);

//Create frame buffer

glGenFramebuffers(1, &mViewFramebuffer);

glBindFramebuffer(GL_FRAMEBUFFER, mViewFramebuffer);

//Create viewport

[mContext renderbufferStorage:GL_RENDERBUFFER fromDrawable:mSurface];

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_WIDTH, &mGLSurfaceWidth);

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_HEIGHT, &mGLSurfaceHeight);

glViewport(0.0, 0.0, mGLSurfaceWidth, mGLSurfaceHeight);

glClearColor(0.0, 0.0, 0.0, 1.0);

glPixelStorei(GL_UNPACK_ALIGNMENT, 1);

glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_RENDERBUFFER, mViewRenderbuffer);

if(glCheckFramebufferStatus(GL_FRAMEBUFFER) != GL_FRAMEBUFFER_COMPLETE){

NSLog(@"Failure with framebuffer generation");

}

}

void GLRender::releaseBuffers()

{

if (mViewFramebuffer){

glDeleteFramebuffers(1, &mViewFramebuffer);

mViewFramebuffer = 0;

}

if (mViewRenderbuffer){

glDeleteRenderbuffers(1, &mViewRenderbuffer);

mViewRenderbuffer = 0;

}

}

bool GLRender::setupGraphics(EGLint w, EGLint h)

{

mGlProgram = createProgram(gVertexShader, gFragmentShader);

if (!mGlProgram) {

LOGE("Could not create program.");

return false;

}

// Get sampler location

mSamplerY = glGetUniformLocation(mGlProgram, "SamplerY");

mSamplerU = glGetUniformLocation(mGlProgram, "SamplerU");

mSamplerV = glGetUniformLocation(mGlProgram, "SamplerV");

glUseProgram(mGlProgram);

glGenTextures(1, &m_texturePlanarY);

glGenTextures(1, &m_texturePlanarU);

glGenTextures(1, &m_texturePlanarV);

glActiveTexture(GL_TEXTURE0);

setTexture(m_texturePlanarY);

glUniform1i(mSamplerY, 0);

glActiveTexture(GL_TEXTURE1);

setTexture(m_texturePlanarU);

glUniform1i(mSamplerU, 1);

glActiveTexture(GL_TEXTURE2);

setTexture(m_texturePlanarV);

glUniform1i(mSamplerV, 2);

surface_ok = true;

return true;

}

void GLRender::nativeGLRender(char *pFrame)

{

[m_lock lock];

this->configGL();

if (surface_ok)

{

glViewport(0, 0, mGLSurfaceWidth, mGLSurfaceHeight);

mPositionLoc = glGetAttribLocation(mGlProgram, "a_Position");

mTexCoordLoc = glGetAttribLocation(mGlProgram, "a_texCoord");

glEnableVertexAttribArray(mPositionLoc);

glVertexAttribPointer(mPositionLoc, 4, GL_FLOAT,GL_FALSE, VERTEX_STRIDE, mSquareVertices);

// Load the texture coordinate

glEnableVertexAttribArray(mTexCoordLoc);

glVertexAttribPointer(mTexCoordLoc, 2, GL_FLOAT, GL_FALSE, VERTEX_STRIDE, &mSquareVertices[4]);

glDisable(GL_BLEND);

glActiveTexture(GL_TEXTURE0);

setTexture(m_texturePlanarY);

glUniform1i(mSamplerY, 0);

glActiveTexture(GL_TEXTURE1);

setTexture(m_texturePlanarU);

glUniform1i(mSamplerU, 1);

glActiveTexture(GL_TEXTURE2);

setTexture(m_texturePlanarV);

glUniform1i(mSamplerV, 2);

render(pFrame);

}

[m_lock unlock];

}

int GLRender::nativeGLRender(PYUVData &yuvdata)

{

[m_lock lock];

if(mCurrentLayerHeight != mSurface.bounds.size.height && mCurrentLayerWidth != mSurface.bounds.size.width){

mCurrentLayerWidth = mSurface.bounds.size.width;

mCurrentLayerHeight = mSurface.bounds.size.height;

if((mCurrentLayerWidth > mWidth - 5 && mCurrentLayerWidth < mWidth + 5)){

surface_ok = false ;

}

}

this->configGL();

if (surface_ok){

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_WIDTH, &mGLSurfaceWidth);

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_HEIGHT, &mGLSurfaceHeight);

glBindRenderbuffer(GL_RENDERBUFFER, mViewRenderbuffer);

mPositionLoc = glGetAttribLocation(mGlProgram, "a_Position");

mTexCoordLoc = glGetAttribLocation(mGlProgram, "a_texCoord");

glVertexAttribPointer(mPositionLoc, 4, GL_FLOAT,GL_FALSE, VERTEX_STRIDE, mSquareVertices);

glEnableVertexAttribArray(mPositionLoc);

glVertexAttribPointer(mTexCoordLoc, 2, GL_FLOAT, GL_FALSE, VERTEX_STRIDE, &mSquareVertices[4]);

glEnableVertexAttribArray(mTexCoordLoc);

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_WIDTH, &mGLSurfaceWidth);

glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_HEIGHT, &mGLSurfaceHeight);

glViewport(0, 0, mGLSurfaceWidth, mGLSurfaceHeight);

render(yuvdata);

}else{

LOGE("NativeGLRender error.");

[m_lock unlock];

return -1;

}

[m_lock unlock];

return 0;

}

void GLRender::setTexture(GLuint texture)

{

glBindTexture ( GL_TEXTURE_2D, texture);

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_MIN_FILTER, GL_LINEAR );

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_MAG_FILTER, GL_LINEAR );

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE );

glTexParameteri( GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE );

}

void GLRender::render(PYUVData &yuvdata)

{

const uint8_t *src_y = (uint8_t *)yuvdata->data[0];

const uint8_t *src_u = (uint8_t *)yuvdata->data[1];

const uint8_t *src_v = (uint8_t *)yuvdata->data[2];

glBindTexture ( GL_TEXTURE_2D, m_texturePlanarY);

glTexImage2D ( GL_TEXTURE_2D, 0, GL_LUMINANCE, mWidth, mHeight, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, src_y);

glBindTexture( GL_TEXTURE_2D, m_texturePlanarU );

glTexImage2D ( GL_TEXTURE_2D, 0, GL_LUMINANCE, mWidth/2, mHeight/2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, src_u);

glBindTexture ( GL_TEXTURE_2D,m_texturePlanarV );

glTexImage2D ( GL_TEXTURE_2D, 0, GL_LUMINANCE, mWidth/2, mHeight/2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, src_v);

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

// Present after drawing so that the frame just rendered is the one shown

glBindRenderbuffer(GL_RENDERBUFFER, mViewRenderbuffer);

[mContext presentRenderbuffer:GL_RENDERBUFFER];

}

void GLRender::render( const void *data)

{

const uint8_t *src_y = (const uint8_t *)data;

const uint8_t *src_u = (const uint8_t *)data + mWidth * mHeight;

const uint8_t *src_v = src_u + (mWidth / 2 * mHeight / 2);

glBindTexture ( GL_TEXTURE_2D, m_texturePlanarY);

glTexImage2D ( GL_TEXTURE_2D, 0, GL_LUMINANCE, mWidth, mHeight, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, src_y);

glBindTexture( GL_TEXTURE_2D, m_texturePlanarU );

glTexImage2D ( GL_TEXTURE_2D, 0, GL_LUMINANCE, mWidth/2, mHeight/2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, src_u);

glBindTexture ( GL_TEXTURE_2D,m_texturePlanarV );

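glTexImage2D ( GL_TEXTURE_2D, 0, GL_LUMINANCE, mWidth/2, mHeight/2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, src_v);

// Draw the quad and present it; without these calls the uploaded frame never reaches the screen

glDrawArrays(GL_TRIANGLE_STRIP, 0, 4);

glBindRenderbuffer(GL_RENDERBUFFER, mViewRenderbuffer);

[mContext presentRenderbuffer:GL_RENDERBUFFER];

}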

void GLRender::configGL()

{

if ((mSurface != NULL) && (!surface_ok)){

if (mContext){

mContext = nil;

}

memcpy(mSquareVertices,squareVertices,sizeof(squareVertices));

if(!mContext){

mContext = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];

if (!mContext || ![EAGLContext setCurrentContext:mContext]) {

return;

}

}

this->releaseBuffers();

this->setupBuffers();

setupGraphics(mGLSurfaceWidth,mGLSurfaceHeight);

}

}

int GLRender::digitalRegionZoom(int bootom_x , int bootom_y, int top_x, int top_y)

{

#define GET_SCALE(x) (x/100.00)

#define CHECK_VALID(x) (x<0||x>100)

int stride = 6;

if (CHECK_VALID(bootom_x) || CHECK_VALID(bootom_y) || CHECK_VALID(top_x) || CHECK_VALID(top_y)){

return -1;

}

GLfloat zoom_textures[4][2]={

static_cast<GLfloat>GET_SCALE(bootom_x),static_cast<GLfloat>(GET_SCALE(bootom_y)),

static_cast<GLfloat>GET_SCALE( top_x ),static_cast<GLfloat>GET_SCALE(bootom_y),

static_cast<GLfloat>GET_SCALE( bootom_x ), static_cast<GLfloat>GET_SCALE(top_y),

static_cast<GLfloat>GET_SCALE(top_x), static_cast<GLfloat>GET_SCALE(top_y)

};

[m_lock lock];

for(int i = 0; i < 4; i++){

mSquareVertices[i*stride+4]= zoom_textures[i][0];

mSquareVertices[i*stride+4+1] = zoom_textures[i][1];

}

[m_lock unlock];

return 0;

}
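`digitalRegionZoom` takes a rectangle in percent (0 to 100) and writes it into the texture-coordinate slots of the interleaved vertex array. The same idea can be sketched without macros or GL types. `applyRegionZoom` and the `k...` constants are hypothetical names; the layout (4 vertices, 6 floats per vertex, texture coordinates at offsets 4 and 5) is taken from the loop in the listing, and everything else about the vertex format is an assumption.

```cpp
#include <array>
#include <cassert>

// Layout inferred from the listing: 4 vertices, 6 floats each,
// texture coordinates stored at offsets 4 and 5 of every vertex.
constexpr int kVertexCount = 4;
constexpr int kStride = 6;
constexpr int kTexOffset = 4;

// Hypothetical standalone version of the zoom update.
// Inputs are percentages in [0, 100]. Returns false and leaves
// `vertices` untouched when any input is out of range.
inline bool applyRegionZoom(std::array<float, kVertexCount * kStride>& vertices,
                            int bottomX, int bottomY, int topX, int topY) {
    auto outOfRange = [](int v) { return v < 0 || v > 100; };
    if (outOfRange(bottomX) || outOfRange(bottomY) ||
        outOfRange(topX) || outOfRange(topY)) {
        return false;
    }
    auto scale = [](int v) { return static_cast<float>(v) / 100.0f; };
    // Same corner order as the listing.
    const float tex[kVertexCount][2] = {
        {scale(bottomX), scale(bottomY)},
        {scale(topX), scale(bottomY)},
        {scale(bottomX), scale(topY)},
        {scale(topX), scale(topY)},
    };
    for (int i = 0; i < kVertexCount; ++i) {
        const int base = i * kStride + kTexOffset;
        assert(base + 1 < kVertexCount * kStride);
        vertices[base] = tex[i][0];
        vertices[base + 1] = tex[i][1];
    }
    return true;
}
```

Calling `applyRegionZoom(v, 25, 25, 75, 75)` samples only the central quarter of the texture, so the picture appears magnified 2x. In the listing the write is wrapped in `m_lock` because the render thread reads the same array; a real implementation would need equivalent locking.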

bool GLRender::setGLSurface(const int p_nWidth, const int p_nHeight, CAEAGLLayer *layer)

{

mWidth = p_nWidth;

mHeight = p_nHeight;

mSurface = layer;

mCurrentLayerWidth = layer.bounds.size.width;

mCurrentLayerHeight = layer.bounds.size.height;

return true;

}

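1.1.3 Complete code 2: subclassing GLKViewController

This version renders YUV frames by subclassing GLKViewController, a class from the iOS system framework GLKit.

Click here to download the complete YUV rendering demo

The complete code is as follows: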

//

// KYLGLKController.m

// yuvShowKYLDemo

//

// Created by yulu kong on 2019/7/27.

// Copyright © 2019 yulu kong. All rights reserved.

//

#import "KYLGLKController.h"

#import "kyldefine.h"

#define BUFFER_OFFSET(i) ((char *)NULL + (i))

// Uniform index.

enum

{

KYLGLK_UNIFORM_MODELVIEWPROJECTION_MATRIX,

KYLGLK_UNIFORM_NORMAL_MATRIX,

KYLGLK_NUM_UNIFORMS

};

GLint uniforms[KYLGLK_NUM_UNIFORMS];

// Attribute index.

enum

{

KYLGLK_ATTRIB_VERTEX,

KYLGLK_ATTRIB_NORMAL,

KYLGLK_NUM_ATTRIBUTES

};

@interface KYLGLKController () {

GLuint _program;

NSInteger m_nVideoWidth;

NSInteger m_nVideoHeight;

NSInteger m_nWidth;

NSInteger m_nHeight;

Byte* m_pYUVData;

NSCondition *m_YUVDataLock;

GLuint _testTxture[3];

BOOL m_bNeedSleep;

CGPoint gestureStartPoint;

NSInteger minGestureLength;

NSInteger maxVariance;

CGSize minZoom;

CGSize maxZoom;

CGFloat fScale;

//BOOL _bShowVideo;

}

@property (strong, nonatomic) EAGLContext *context;

@property (strong, nonatomic) CADisplayLink* displayLink;

- (void)setupGL;

- (void)tearDownGL;

- (BOOL)loadShaders;

- (BOOL)compileShader:(GLuint *)shader type:(GLenum)type file:(NSString *)file;

- (BOOL)linkProgram:(GLuint)prog;

- (BOOL)validateProgram:(GLuint)prog;

@end

@implementation KYLGLKController

- (void)dealloc

{

NSLog(@"dealloc() in %s", __FUNCTION__);

[self stopTimerUpdate];

[self tearDownGL];

// Release the frame buffer

[m_YUVDataLock lock];

if (m_pYUVData != NULL) {

delete []m_pYUVData;

m_pYUVData = NULL;

}

[m_YUVDataLock unlock];

}

- (void)writeYUVFrame:(Byte *)pYUV Len:(NSInteger)length width:(NSInteger)width height:(NSInteger)height

{

[m_YUVDataLock lock];

if(m_nVideoWidth != width || height != m_nVideoHeight)

{

if (m_pYUVData != NULL) {

delete []m_pYUVData;

m_pYUVData = NULL;

}

m_pYUVData = new Byte[length];

}

if (m_pYUVData == NULL) {

[m_YUVDataLock unlock];

return;

}

memcpy(m_pYUVData, pYUV, length);

m_nWidth = width;

m_nHeight = height;

m_bNeedSleep = NO;

dispatch_async(dispatch_get_main_queue(), ^{ // switch back to the main thread

[self renderView];

});

[m_YUVDataLock unlock];

}
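`writeYUVFrame:` compares the incoming size with `m_nVideoWidth` and `m_nVideoHeight`, but in the code shown here those two fields are only ever set to -1 in `initOpenGL`, so the buffer is freed and reallocated on every frame. A minimal sketch of the intended "reallocate only when the size changes" behaviour follows. `LatestFrame` is a hypothetical class, not part of the demo, and it uses `std::vector` and `std::mutex` in place of `new[]` and `NSCondition`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <mutex>
#include <vector>

// Hypothetical holder for the most recent I420 frame.
class LatestFrame {
public:
    // Copies one frame in. Returns true when the stored dimensions changed.
    bool write(const std::uint8_t* data, std::size_t length, int width, int height) {
        assert(data != nullptr && length > 0);
        std::lock_guard<std::mutex> guard(mutex_);
        const bool resized = (width != width_) || (height != height_);
        if (resized) {
            // Remember the new size so later frames of the same size reuse the buffer.
            width_ = width;
            height_ = height;
        }
        // resize() does not reallocate when the capacity already suffices.
        buffer_.resize(length);
        std::memcpy(buffer_.data(), data, length);
        return resized;
    }

    // Number of bytes currently stored.
    std::size_t size() const {
        std::lock_guard<std::mutex> guard(mutex_);
        return buffer_.size();
    }

private:
    mutable std::mutex mutex_;
    std::vector<std::uint8_t> buffer_;
    int width_ = -1;   // -1 means "no frame received yet"
    int height_ = -1;
};
```

Because the vector tracks its own length, a frame whose `length` differs from the previous one is still copied safely, which the raw `new Byte[length]` version would only guarantee if it also stored the allocated length.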

-(void) viewWillDisappear:(BOOL)animated

{

[super viewWillDisappear:animated];

}

-(void) viewDidDisappear:(BOOL)animated

{

[super viewDidDisappear:animated];

}

-(void) viewWillAppear:(BOOL)animated

{

[super viewWillAppear:animated];

}

- (void)viewDidLoad

{

[super viewDidLoad];

[self.view setBackgroundColor:[UIColor blackColor]];

[self initOpenGL];

}

-(void) initOpenGL{

m_nVideoHeight = -1;

m_nVideoWidth = -1;

[self.view setUserInteractionEnabled:NO];

m_bNeedSleep = YES;

m_nHeight = 0;

m_nWidth = 0;

m_pYUVData = NULL;

m_YUVDataLock = [[NSCondition alloc] init];

self.context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];

//kEAGLRenderingAPIOpenGLES1

//self.context = [[[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES3] autorelease];

if (!self.context) {

NSLog(@"Failed to create ES context");

return; // nothing below can work without a GL context

}

GLKView *view = (GLKView *)self.view;

view.context = self.context;

view.drawableDepthFormat = GLKViewDrawableDepthFormatNone;

view.drawableColorFormat = GLKViewDrawableColorFormatRGB565;

[EAGLContext setCurrentContext:self.context];

GLuint vertShader, fragShader;

NSString *vertShaderPathname, *fragShaderPathname;

// Create shader program.

_program = glCreateProgram();

// Create and compile vertex shader.

vertShaderPathname = [[NSBundle mainBundle] pathForResource:@"Shader" ofType:@"vsh"];

//NSLog(@"vertShaderPathname: %@", vertShaderPathname);

if (![self compileShader:&vertShader type:GL_VERTEX_SHADER file:vertShaderPathname]) {

//if (![self compileShader:&vertShader type:GL_VERTEX_SHADER file:@"ShaderTest.vsh"]) {

NSLog(@"Failed to compile vertex shader");

return ;

}

// Create and compile fragment shader.

fragShaderPathname = [[NSBundle mainBundle] pathForResource:@"Shader" ofType:@"fsh"];

//NSLog(@"fragShaderPathname: %@", fragShaderPathname);

if (![self compileShader:&fragShader type:GL_FRAGMENT_SHADER file:fragShaderPathname]) {

NSLog(@"Failed to compile fragment shader");

return ;

}

// Attach vertex shader to program.

glAttachShader(_program, vertShader);

// Attach fragment shader to program.

glAttachShader(_program, fragShader);

// Link program.

if (![self linkProgram:_program]) {

NSLog(@"Failed to link program: %d", _program);

if (vertShader) {

glDeleteShader(vertShader);

vertShader = 0;

}

if (fragShader) {

glDeleteShader(fragShader);

fragShader = 0;

}

if (_program) {

glDeleteProgram(_program);

_program = 0;

}

return ;

}

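// glEnable(GL_TEXTURE_2D) is omitted: in OpenGL ES 2.0 texturing is controlled by the shaders, and that call raises GL_INVALID_ENUM.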

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glUseProgram(_program);

glGenTextures(3, _testTxture);

glActiveTexture(GL_TEXTURE0);

glBindTexture(GL_TEXTURE_2D, _testTxture[0]);

//glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, 640, 480, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, y);

glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MAG_FILTER,GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MIN_FILTER,GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glActiveTexture(GL_TEXTURE1);

glBindTexture(GL_TEXTURE_2D, _testTxture[1]);

//glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, 320, 240, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, u);

glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MAG_FILTER,GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MIN_FILTER,GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glActiveTexture(GL_TEXTURE2);

glBindTexture(GL_TEXTURE_2D, _testTxture[2]);

//glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, 320, 240, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, v);

glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MAG_FILTER,GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D,GL_TEXTURE_MIN_FILTER,GL_LINEAR);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_2D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

// glGetUniformLocation returns a GLint, and -1 when the uniform is not found.

GLint i;

glActiveTexture(GL_TEXTURE1);

i=glGetUniformLocation(_program,"Utex");// glUniform1i(i,1); /* Bind Utex to texture unit 1 */

//NSLog(@"i: %d", i);

// glBindTexture(GL_TEXTURE_2D, _textureU);

glUniform1i(i,1);

/* Select texture unit 2 as the active unit and bind the V texture. */

glActiveTexture(GL_TEXTURE2);

i=glGetUniformLocation(_program,"Vtex");

//NSLog(@"i: %d", i);

//glBindTexture(GL_TEXTURE_2D, _textureV);

glUniform1i(i,2); /* Bind Vtext to texture unit 2 */

/* Select texture unit 0 as the active unit and bind the Y texture. */

glActiveTexture(GL_TEXTURE0);

i=glGetUniformLocation(_program,"Ytex");

//NSLog(@"i: %d", i);

//glBindTexture(GL_TEXTURE_2D, _textureY);

glUniform1i(i,0); /* Bind Ytex to texture unit 0 */

}
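`initOpenGL` binds three samplers, `Ytex`, `Utex` and `Vtex`, to texture units 0, 1 and 2; the fragment shader (`Shader.fsh`, not shown in this article) is then expected to combine one sample from each into an RGB colour. As a CPU-side reference for that per-pixel step, here is a minimal sketch. It assumes full-range BT.601 coefficients, which is a common choice but not confirmed by the source; `Rgb`, `clampToByte` and `yuvToRgb` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstdint>

struct Rgb {
    std::uint8_t r, g, b;
};

// Clamp to [0, 255] and round to the nearest integer.
inline std::uint8_t clampToByte(float v) {
    return static_cast<std::uint8_t>(std::lround(std::min(255.0f, std::max(0.0f, v))));
}

// Assumption: full-range BT.601. The real shader may use other
// coefficients (for example limited-range BT.601 or BT.709).
inline Rgb yuvToRgb(std::uint8_t y, std::uint8_t u, std::uint8_t v) {
    const float yf = static_cast<float>(y);
    const float uf = static_cast<float>(u) - 128.0f;  // centre chroma on zero
    const float vf = static_cast<float>(v) - 128.0f;
    return Rgb{
        clampToByte(yf + 1.402f * vf),
        clampToByte(yf - 0.344136f * uf - 0.714136f * vf),
        clampToByte(yf + 1.772f * uf),
    };
}
```

With neutral chroma (U = V = 128) the result is a grey whose level equals Y, which is the "Y alone gives a black-and-white picture" property described in the introduction.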

- (void) startTimerUpdate {

self.displayLink = [CADisplayLink displayLinkWithTarget:self selector:@selector(render:)];

[self.displayLink addToRunLoop:[NSRunLoop currentRunLoop] forMode:NSRunLoopCommonModes];

}

- (void) stopTimerUpdate{

[self.displayLink invalidate];

self.displayLink = nil;

}

- (void)render:(CADisplayLink*)displayLink {

[self renderView];

}

- (void) renderView{

GLKView* view = (GLKView*)self.view;

[view display];

}

- (void)viewDidUnload

{

[super viewDidUnload];

//[self tearDownGL];

}

- (void)didReceiveMemoryWarning

{

[super didReceiveMemoryWarning];

// Release any cached data, images, etc. that aren't in use.

}

- (BOOL)shouldAutorotateToInterfaceOrientation:(UIInterfaceOrientation)interfaceOrientation

{

// Return YES for supported orientations

if ([[UIDevice currentDevice] userInterfaceIdiom] == UIUserInterfaceIdiomPhone) {

return (interfaceOrientation != UIInterfaceOrientationPortraitUpsideDown);

} else {

return YES;

}

}

- (void)setupGL

{

[EAGLContext setCurrentContext:self.context];

}

- (void)tearDownGL

{

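// GL objects can only be deleted while their context is current,

// so make the context current first and release it last.

[EAGLContext setCurrentContext:self.context];

if (_program) {

glDeleteProgram(_program);

_program = 0;

}

glDeleteTextures(3, _testTxture);

if ([EAGLContext currentContext] == self.context) {

[EAGLContext setCurrentContext:nil];

}

self.context = nil;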

}

#pragma mark - GLKView and GLKViewController delegate methods

- (BOOL)drawYUV

{

[m_YUVDataLock lock];

if (m_bNeedSleep) {

usleep(10000);

}

m_bNeedSleep = YES;

if (m_pYUVData == NULL) {

[m_YUVDataLock unlock];

return NO;

}

Byte *y = m_pYUVData;

Byte *u = m_pYUVData + m_nHeight * m_nWidth;

Byte *v = m_pYUVData + m_nHeight * m_nWidth * 5 / 4;

glActiveTexture(GL_TEXTURE0);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, m_nWidth, m_nHeight, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, y);

glActiveTexture(GL_TEXTURE1);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, m_nWidth / 2, m_nHeight / 2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, u);

glActiveTexture(GL_TEXTURE2);

glTexImage2D(GL_TEXTURE_2D, 0, GL_LUMINANCE, m_nWidth / 2, m_nHeight / 2, 0, GL_LUMINANCE, GL_UNSIGNED_BYTE, v);

[m_YUVDataLock unlock];

return YES;

}

- (void)glkView:(GLKView *)view drawInRect:(CGRect)rect

{

glClearColor(0.0f, 0.0f, 0.0f, 1.0f);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

if ([self drawYUV] == NO) {

return;

}

GLfloat varray[] = {

-1.0f, 1.0f, 0.0f,

-1.0f, -1.0f, 0.0f,

1.0f, -1.0f, 0.0f,

1.0f, 1.0f, 0.0f,

-1.0f, 1.0f, 0.0f

};

GLfloat triangleTexCoords[] = {

// S, T (texture coordinates, one pair per vertex)

0.0f, 0.0f,

0.0f, 1.0f,

1.0f, 1.0f,

1.0f, 0.0f,

0.0f, 0.0f

};

glEnable(GL_DEPTH_TEST);

GLuint i;

i = glGetAttribLocation(_program, "vPosition");

glVertexAttribPointer(i, 3, GL_FLOAT, GL_FALSE, 3 * sizeof(float), varray);

glEnableVertexAttribArray(i);

i = glGetAttribLocation(_program, "myTexCoord");

glVertexAttribPointer(i, 2, GL_FLOAT, GL_FALSE,

2 * sizeof(float),

triangleTexCoords);

glEnableVertexAttribArray(i);

glDrawArrays(GL_TRIANGLE_STRIP, 0, 5);

}
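The draw call above submits five vertices (the four corners in loop order, with the first corner repeated) as a `GL_TRIANGLE_STRIP`. A strip of n vertices produces n - 2 triangles, so this draws three overlapping triangles. It still covers the whole quad, but a four-vertex strip in "Z" order covers it with two. The sketch below checks this by summing triangle areas on the CPU; `Vec2`, `stripCoveredArea`, `loopOrderQuad` and `zOrderQuad` are hypothetical names used only for illustration.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec2 {
    float x, y;
};

// Area of one triangle via the 2D cross product.
inline float triangleArea(Vec2 a, Vec2 b, Vec2 c) {
    return std::fabs((b.x - a.x) * (c.y - a.y) - (c.x - a.x) * (b.y - a.y)) * 0.5f;
}

// Sum of the areas of the triangles a GL_TRIANGLE_STRIP would draw:
// one triangle for every window of three consecutive vertices.
inline float stripCoveredArea(const std::vector<Vec2>& strip) {
    assert(strip.size() >= 3);
    float total = 0.0f;
    for (std::size_t i = 0; i + 2 < strip.size(); ++i) {
        total += triangleArea(strip[i], strip[i + 1], strip[i + 2]);
    }
    return total;
}

// Vertex order used in the listing: TL, BL, BR, TR, TL.
inline std::vector<Vec2> loopOrderQuad() {
    return {{-1.0f, 1.0f}, {-1.0f, -1.0f}, {1.0f, -1.0f}, {1.0f, 1.0f}, {-1.0f, 1.0f}};
}

// "Z" order for a strip: TL, BL, TR, BR.
inline std::vector<Vec2> zOrderQuad() {
    return {{-1.0f, 1.0f}, {-1.0f, -1.0f}, {1.0f, 1.0f}, {1.0f, -1.0f}};
}
```

The full-screen quad has an area of 4. The loop-order strip sums to 6, meaning part of the quad is rasterized twice, while the Z-order strip sums to exactly 4. Switching the listing to the four-vertex form would also require reordering `triangleTexCoords` to match and passing 4 as the vertex count to `glDrawArrays`.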

#pragma mark - OpenGL ES 2 shader compilation

- (BOOL)loadShaders

{

GLuint vertShader, fragShader;

NSString *vertShaderPathname, *fragShaderPathname;

// Create shader program.

_program = glCreateProgram();

// Create and compile vertex shader.

vertShaderPathname = [[NSBundle mainBundle] pathForResource:@"Shader" ofType:@"vsh"];

if (![self compileShader:&vertShader type:GL_VERTEX_SHADER file:vertShaderPathname]) {

NSLog(@"Failed to compile vertex shader");

return NO;

}

// Create and compile fragment shader.

fragShaderPathname = [[NSBundle mainBundle] pathForResource:@"Shader" ofType:@"fsh"];

if (![self compileShader:&fragShader type:GL_FRAGMENT_SHADER file:fragShaderPathname]) {

NSLog(@"Failed to compile fragment shader");

return NO;

}

// Attach vertex shader to program.

glAttachShader(_program, vertShader);

// Attach fragment shader to program.

glAttachShader(_program, fragShader);

// Bind attribute locations.

// This needs to be done prior to linking.

glBindAttribLocation(_program, KYLGLK_ATTRIB_VERTEX, "position");

glBindAttribLocation(_program, KYLGLK_ATTRIB_NORMAL, "normal");

// Link program.

if (![self linkProgram:_program]) {

NSLog(@"Failed to link program: %d", _program);

if (vertShader) {

glDeleteShader(vertShader);

vertShader = 0;

}

if (fragShader) {

glDeleteShader(fragShader);

fragShader = 0;

}

if (_program) {

glDeleteProgram(_program);

_program = 0;

}

return NO;

}

// Get uniform locations.

uniforms[KYLGLK_UNIFORM_MODELVIEWPROJECTION_MATRIX] = glGetUniformLocation(_program, "modelViewProjectionMatrix");

uniforms[KYLGLK_UNIFORM_NORMAL_MATRIX] = glGetUniformLocation(_program, "normalMatrix");

// Release vertex and fragment shaders.

if (vertShader) {

glDetachShader(_program, vertShader);

glDeleteShader(vertShader);

}

if (fragShader) {

glDetachShader(_program, fragShader);

glDeleteShader(fragShader);

}

return YES;

}

- (BOOL)compileShader:(GLuint *)shader type:(GLenum)type file:(NSString *)file

{

GLint status;

const GLchar *source;

source = (GLchar *)[[NSString stringWithContentsOfFile:file encoding:NSUTF8StringEncoding error:nil] UTF8String];

if (!source) {

NSLog(@"Failed to load shader source: %@", file);

return NO;

}

*shader = glCreateShader(type);

glShaderSource(*shader, 1, &source, NULL);

glCompileShader(*shader);

#if defined(DEBUG)

GLint logLength;

glGetShaderiv(*shader, GL_INFO_LOG_LENGTH, &logLength);

if (logLength > 0) {

GLchar *log = (GLchar *)malloc(logLength);

glGetShaderInfoLog(*shader, logLength, &logLength, log);

NSLog(@"Shader compile log:\n%s", log);

free(log);

}

#endif

glGetShaderiv(*shader, GL_COMPILE_STATUS, &status);

if (status == 0) {

glDeleteShader(*shader);

return NO;

}

return YES;

}

- (BOOL)linkProgram:(GLuint)prog

{

GLint status;

glLinkProgram(prog);

#if defined(DEBUG)

GLint logLength;

glGetProgramiv(prog, GL_INFO_LOG_LENGTH, &logLength);

if (logLength > 0) {

GLchar *log = (GLchar *)malloc(logLength);

glGetProgramInfoLog(prog, logLength, &logLength, log);

NSLog(@"Program link log:\n%s", log);

free(log);

}

#endif

glGetProgramiv(prog, GL_LINK_STATUS, &status);

if (status == 0) {

return NO;

}

return YES;

}

- (BOOL)validateProgram:(GLuint)prog

{

GLint logLength, status;

glValidateProgram(prog);

glGetProgramiv(prog, GL_INFO_LOG_LENGTH, &logLength);

if (logLength > 0) {

GLchar *log = (GLchar *)malloc(logLength);

glGetProgramInfoLog(prog, logLength, &logLength, log);

NSLog(@"Program validate log:\n%s", log);

free(log);

}

glGetProgramiv(prog, GL_VALIDATE_STATUS, &status);

if (status == 0) {

return NO;

}

return YES;

}

@end