编程作业(python)| 吴恩达机器学习(4)反向传播算法(BP-神经网络)

8道编程作业及解析见:Coursera吴恩达机器学习编程作业

编程环境:Jupyter Notebook

本次作业的理论部分:笔记 | 反向传播算法(BP-神经网络)

Programming Exercise 4:Neural Networks Learning

本章目录

- Programming Exercise 4:Neural Networks Learning

- 题目

- 1. 构造数据集

- 2. 前向传播

- 3. 代价函数

- 3.1 无正则化的代价函数

- 3.2 有正则化的代价函数

- 4. 反向传播——梯度矩阵 D D D

- 4.1 无正则化的梯度

- 4.2 有正则化的梯度

- 5. 神经网络训练过程

- 6. 可视化隐藏层

题目

跟上次作业一样,还是手写数字识别,上次我们是把已有的训练好的参数直接用于神经网络,这次我们将利用神经网络的反向传播来训练参数并得到最终的优化模型。

数据集:ex4data1.mat

初始参数:ex4weights.mat

1. 构造数据集

-

导入库与数据集:

import numpy as np # 科学计算库,处理多维数组,进行数据分析 import matplotlib.pyplot as plt # 提供一个类似 Matlab 的绘图框架 import scipy.io as sio # 数据输入输出,用于读入.mat文件。scipy一个高级的科学计算库,它和Numpy联系很密切 from scipy.optimize import minimize # 优化函数 data = sio.loadmat('ex3data1.mat') -

获取输入变量 X X X:

raw_X = data['X'] X = np.insert(raw_X,0,values=1,axis=1) >>> X.shape (5000, 401) -

获取输出变量 y y y: 注意需要对y进行独热编码处理(one-hot编码)

raw_y = data['y'] def one_hot_encoder(raw_y): result = [] for i in raw_y: # 1-10 y_temp = np.zeros(10) y_temp[i-1] = 1 result.append(y_temp) return np.array(result) y = one_hot_encoder(raw_y) >>> y.shape (5000, 10) -

权重参数 θ \theta θ:

# 1.获取训练参数 theta = sio.loadmat('ex4weights.mat') >>> theta.keys() dict_keys(['__header__', '__version__', '__globals__', 'Theta1', 'Theta2']) theta1 = theta['Theta1'] # (25, 401) theta2 = theta['Theta2'] # (10, 26) # 2.序列化权重参数:将θ1和θ2合并转化为一维数组 def serialize(a,b): return np.append(a.flatten(),b.flatten()) theta_serialize = serialize(theta1,theta2) >>> theta_serialize.shape (10285,) # 25*401+10*26 # 3.解序列化权重参数:还原上一步的操作 def deserialize(theta_serialize): theta1 = theta_serialize[:25*401].reshape(25,401) theta2 = theta_serialize[25*401:].reshape(10,26) return theta1,theta2

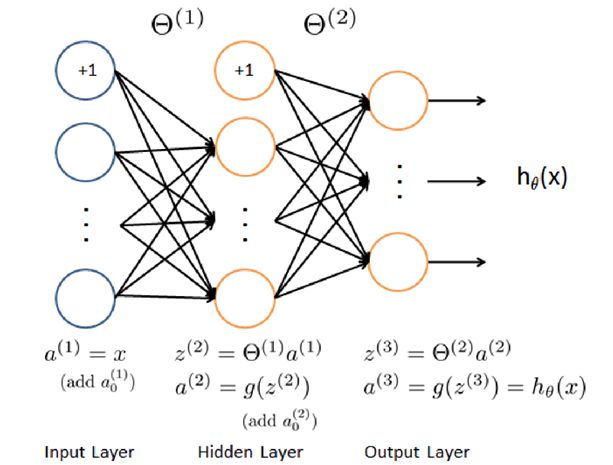

2. 前向传播

与上次作业一样

def sigmoid(z):

return 1 / (1 + np.exp(-z))

def feed_forward(theta_serialize,X):

theta1,theta2 = deserialize(theta_serialize)

a1 = X

z2 = a1 @ theta1.T

a2 = sigmoid(z2)

a2 = np.insert(a2,0,values=1,axis=1)

z3 = a2 @ theta2.T

h = sigmoid(z3)

return a1,z2,a2,z3,h

3. 代价函数

点我看公式推导: 2.3 神经网络的代价函数

3.1 无正则化的代价函数

J ( θ ) = − 1 m ∑ i = 1 m ∑ k = 1 K [ y k ( i ) log ( h θ ( x ( i ) ) ) k + ( 1 − y k ( i ) ) log ( 1 − ( h θ ( x ( i ) ) ) k ) ] J(\theta)=-\frac{1}{m} \sum_{i=1}^{m}\sum_{k=1}^{K} \left [ y_k^{(i)}\log{(h_θ( x^{(i)}))_k}+(1-y_k^{(i)})\log{(1-(h_θ( x^{(i)}))_k)}\right ] J(θ)=−m1i=1∑mk=1∑K[yk(i)log(hθ(x(i)))k+(1−yk(i))log(1−(hθ(x(i)))k)]其中 h θ ( x ) ∈ R K h_\theta(x)∈R^K hθ(x)∈RK , y ∈ R K y∈R^K y∈RK

- ( h θ ( x ( i ) ) ) k (h_\theta(x^{(i)}))_k (hθ(x(i)))k表示第 i i i 个训练实例的第 k k k 个假设

- y k ( i ) y_k^{(i)} yk(i)表示第 i i i 个训练实例的第 k k k 个实际输出

简化表示为:

J ( θ ) = − 1 m S U M [ y ∗ log ( h θ ( x ) ) + ( 1 − y ) ∗ log ( 1 − h θ ( x ) ) ] J(θ)=-\displaystyle\frac{1}{m} SUM\left[y*\log(h_\theta(x))+(1-y)*\log(1-h_\theta(x))\right] J(θ)=−m1SUM[y∗log(hθ(x))+(1−y)∗log(1−hθ(x))] S U M SUM SUM 表示对向量的所有项求和,最终得一个标量值。

def cost(theta_serialize,X,y):

a1,z2,a2,z3,h = feed_forward(theta_serialize,X)

J = -np.sum(y*np.log(h)+(1-y)*np.log(1-h)) / len(X)

return J

3.2 有正则化的代价函数

J ( θ ) = − 1 m [ ∑ i = 1 m ∑ k = 1 K y k ( i ) log ( h θ ( x ( i ) ) ) k + ( 1 − y k ( i ) ) log ( 1 − ( h θ ( x ( i ) ) ) k ) ] + λ 2 m ∑ l = 1 L − 1 ∑ i = 1 s l ∑ j = 1 s l + 1 ( θ j i ( l ) ) 2 = − 1 m [ ∑ i = 1 m ∑ k = 1 K y k ( i ) log ( h θ ( x ( i ) ) ) k + ( 1 − y k ( i ) ) log ( 1 − ( h θ ( x ( i ) ) ) k ) ] + λ 2 m [ ∑ i = 1 400 ∑ j = 1 25 ( θ j i ( 1 ) ) 2 + ∑ i = 1 25 ∑ j = 1 10 ( θ j i ( 2 ) ) 2 ] \begin{aligned} J(\theta)&=-\frac{1}{m} \left [ \sum_{i=1}^{m}\sum_{k=1}^{K} y_k^{(i)}\log{(h_θ( x^{(i)}))_k}+(1-y_k^{(i)})\log{(1-(h_θ( x^{(i)}))_k)}\right ] +\frac{\lambda}{2m} \sum_{l=1}^{L-1} \sum_{i=1}^{s_l} \sum_{j=1}^{s_{l+1}} (\theta_{ji}^{(l)})^2 \\ &=-\frac{1}{m} \left [ \sum_{i=1}^{m}\sum_{k=1}^{K} y_k^{(i)}\log{(h_θ( x^{(i)}))_k}+(1-y_k^{(i)})\log{(1-(h_θ( x^{(i)}))_k)}\right ] +\frac{\lambda}{2m}\left [ \sum_{i=1}^{400}\sum_{j=1}^{25}(\theta_{ji}^{(1)})^2 +\sum_{i=1}^{25}\sum_{j=1}^{10}(\theta_{ji}^{(2)})^2 \right ] \end{aligned} J(θ)=−m1[i=1∑mk=1∑Kyk(i)log(hθ(x(i)))k+(1−yk(i))log(1−(hθ(x(i)))k)]+2mλl=1∑L−1i=1∑slj=1∑sl+1(θji(l))2=−m1[i=1∑mk=1∑Kyk(i)log(hθ(x(i)))k+(1−yk(i))log(1−(hθ(x(i)))k)]+2mλ[i=1∑400j=1∑25(θji(1))2+i=1∑25j=1∑10(θji(2))2]正则化项 ∑ l = 1 L − 1 ∑ i = 1 s l ∑ j = 1 s l + 1 ( θ j i ( l ) ) 2 \displaystyle\sum_{l=1}^{L-1} \displaystyle\sum_{i=1}^{s_l} \displaystyle\sum_{j=1}^{s_{l+1}} (\theta_{ji}^{(l)})^2 l=1∑L−1i=1∑slj=1∑sl+1(θji(l))2 是每一层排除 θ 0 \theta_0 θ0后的所有权值 θ \theta θ 的平方和。

def reg_cost(theta_serialize,X,y,lamda):

sum1 = np.sum(np.power(theta1[:,1:],2)) # 注意从1开始

sum2 = np.sum(np.power(theta2[:,1:],2))

reg = (sum1 + sum2) * lamda / (2*len(X))

return reg + cost(theta_serialize,X,y) # 注意返回值加上了cost()

4. 反向传播——梯度矩阵 D D D

以下公式较为复杂,推导请参阅 BP-神经网络 4.公式推导

4.1 无正则化的梯度

∂ J ( θ ) ∂ θ ( 2 ) = ∂ J ∂ a ( 3 ) ∂ a ( 3 ) ∂ z ( 3 ) ∂ z ( 3 ) ∂ θ ( 2 ) = ( h − y ) a ( 2 ) = d ( 3 ) a ( 2 ) \frac{\partial J(\theta)}{\partial \theta^{(2)}} = \frac{\partial J}{\partial a^{(3)}} \frac{\partial a^{(3)}}{\partial z^{(3)}} \ \ \frac{\partial z^{(3)}}{\partial \theta^{(2)}} =(h-y) a^{(2)}=d^{(3)} a^{(2)} ∂θ(2)∂J(θ)=∂a(3)∂J∂z(3)∂a(3) ∂θ(2)∂z(3)=(h−y)a(2)=d(3)a(2) ∂ J ( θ ) ∂ θ ( 1 ) = ∂ J ∂ a ( 3 ) ∂ a ( 3 ) ∂ z ( 3 ) ∂ z ( 3 ) ∂ a ( 2 ) ∂ a ( 2 ) ∂ z ( 2 ) ∂ z ( 2 ) ∂ θ ( 1 ) = ( h − y ) θ ( 2 ) g ′ ( z ( 2 ) ) a ( 1 ) = d ( 2 ) a ( 1 ) \begin{aligned} \frac{\partial J(\theta)}{\partial \theta^{(1)}} =\frac{\partial J}{\partial a^{(3)}} \frac{\partial a^{(3)}}{\partial z^{(3)}} \ \ \frac{\partial z^{(3)}}{\partial a^{(2)}} \ \frac{\partial a^{(2)}}{\partial z^{(2)}} \ \frac{\partial z^{(2)}}{\partial \theta^{(1)}} = (h-y)\ \theta^{(2)}\ g'(z^{(2)})\ a^{(1)}=d^{(2)} a^{(1)} \end{aligned} ∂θ(1)∂J(θ)=∂a(3)∂J∂z(3)∂a(3) ∂a(2)∂z(3) ∂z(2)∂a(2) ∂θ(1)∂z(2)=(h−y) θ(2) g′(z(2)) a(1)=d(2)a(1)

# sigmoid函数求导

def sigmoid_gradient(z):

return sigmoid(z)*(1-sigmoid(z))

# 对照公式即可

def gradient(theta_serialize,X,y):

theta1,theta2 = deserialize(theta_serialize)

a1,z2,a2,z3,h = feed_forward(theta_serialize,X)

d3 = h - y

d2 = d3 @ theta2[:,1:] * sigmoid_gradient(z2)

D2 = (d3.T @ a2) / len(X)

D1 = (d2.T @ a1) / len(X)

return serialize(D1,D2)

4.2 有正则化的梯度

D i j ( l ) = { 1 m Δ i j ( l ) + λ m θ i j ( l ) if j ≠ 0 1 m Δ i j ( l ) if j = 0 D_{ij}^{(l)}= \begin{cases} \displaystyle\frac{1}{m}Δ_{ij}^{(l)}+\frac{\lambda}{m}\theta_{ij}^{(l)}\ \ & \text{if $j≠0$} \\ \\ \displaystyle\frac{1}{m}Δ_{ij}^{(l)} & \text{if $j=0$ } \end{cases} Dij(l)=⎩⎪⎪⎪⎨⎪⎪⎪⎧m1Δij(l)+mλθij(l) m1Δij(l)if j=0if j=0

# 对照公式即可

def reg_gradient(theta_serialize,X,y,lamda):

D = gradient(theta_serialize,X,y)

D1,D2 = deserialize(D)

theta1,theta2 = deserialize(theta_serialize)

D1[:,1:] = D1[:,1:] + theta1[:,1:] * lamda / len(X)

D2[:,1:] = D2[:,1:] + theta2[:,1:] * lamda / len(X)

return serialize(D1,D2)

5. 神经网络训练过程

-

定义优化函数

from scipy.optimize import minimize def nn_training(X,y): init_theta = np.random.uniform(-0.5,0.5,10285) # 权值随机初始化 res = minimize(fun =reg_cost, x0 = init_theta, args = (X,y,lamda), method='TNC', jac = reg_gradient, # 无正则化就用gradient options = {'maxiter':300}) # 可选项:最大迭代次数,选择为300次 return res -

计算网络准确率

lamda=10 res = nn_training(X,y) raw_y = data['y'].reshape(5000,) _,_,_,_,h = feed_forward(res.x,X) y_pred = np.argmax(h,axis=1) + 1 acc = np.mean(y_pred == raw_y) >>> acc 0.9408

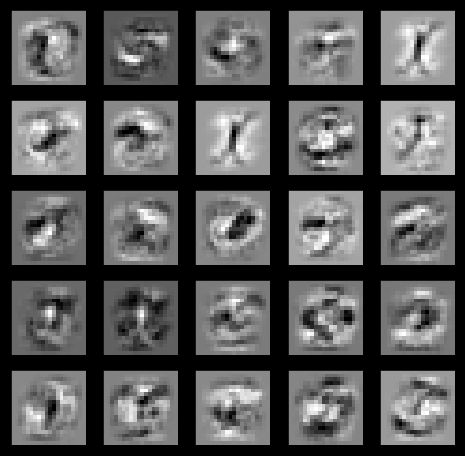

6. 可视化隐藏层

def plot_hidden_layer(theta):

theta1,_ = deserialize(theta)

hidden_layer = theta1[:,1:] # 25,400

fig,ax = plt.subplots(ncols=5,nrows=5,figsize=(8,8),sharex=True,sharey=True)

for r in range(5):

for c in range(5):

ax[r,c].imshow(hidden_layer[5 * r + c].reshape(20,20).T,cmap='gray_r')

plt.xticks([])

plt.yticks([])

plt.show

plot_hidden_layer(res.x)

关于隐藏层的意义: (莫烦 Python) 科普: 神经网络的黑盒不黑

若将隐藏层简化为3个特征,就是用3个信息来代表整张手写数字图片的所有像素点,然后计算机利用这3个特征来区分不同数字。

换言之,神经网络每一层的输出都相当于一个我们人类看不懂但计算机能看懂的特征描述子。而这个神经网络本身则相当于一个提取特征的算法。