HBase - Kylin: a summary of problems encountered when building a cube

1. A single non-empty row is read as null values, causing an error

Vertex failed, vertexName=Map 1, vertexId=vertex_1494251465823_0017_1_01, diagnostics=[Task failed, taskId=task_1494251465823_0017_1_01_000021, diagnostics=[TaskAttempt 0 failed, info=[Error: Failure while running task:java.lang.RuntimeException: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row {"weibo_id":"3959784874733088","content":null,"json_file":null,"geohash":null,"user_id":"3190257607","time_id":null,"city_id":null,"province_id":null,"country_id":null,"unix_time":null,"pic_url":null,"lat":null,"lon":null}

at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:173)

at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.run(TezProcessor.java:139)

at org.apache.tez.runtime.LogicalIOProcessorRuntimeTask.run(LogicalIOProcessorRuntimeTask.java:347)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:194)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:185)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:185)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:181)

at org.apache.tez.common.CallableWithNdc.call(CallableWithNdc.java:36)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row {"weibo_id":"3959784874733088","content":null,"json_file":null,"geohash":null,"user_id":"3190257607","time_id":null,"city_id":null,"province_id":null,"country_id":null,"unix_time":null,"pic_url":null,"lat":null,"lon":null}

at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.processRow(MapRecordSource.java:91)

at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.pushRecord(MapRecordSource.java:68)

at org.apache.hadoop.hive.ql.exec.tez.MapRecordProcessor.run(MapRecordProcessor.java:325)

at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:150)

... 14 more

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row {"weibo_id":"3959784874733088","content":null,"json_file":null,"geohash":null,"user_id":"3190257607","time_id":null,"city_id":null,"province_id":null,"country_id":null,"unix_time":null,"pic_url":null,"lat":null,"lon":null}

at org.apache.hadoop.hive.ql.exec.MapOperator.process(MapOperator.java:565)

at org.apache.hadoop.hive.ql.exec.tez.MapRecordSource.processRow(MapRecordSource.java:83)

... 17 more

Querying Hive directly, however, shows that this Weibo record is not empty:

select * from check_in_table where weibo_id = '3959784874733088';

result:

3959784874733088 #清明祭英烈#今天的和平安定是先烈们用生命换来的,我们要珍惜今天的和平生活,努力学习,早日实现中国梦。 http://t.cn/R2dLEhU {...} wtn901f5q32n 3190257607 1459526400000 1901 19 00 2016-04-02 12:02:26 0 28.31096 121.64364

The problem was solved by modifying the star schema.

2. The Kylin build fails with an HBase scan timeout error

The error is as follows:

Vertex re-running, vertexName=Map 2, vertexId=vertex_1494251465823_0016_1_00

Vertex failed, vertexName=Map 1, vertexId=vertex_1494251465823_0016_1_01, diagnostics=[Task failed, taskId=task_1494251465823_0016_1_01_000015, diagnostics=[TaskAttempt 0 failed, info=[Error: Failure while running task:java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: java.io.IOException: org.apache.hadoop.hbase.client.ScannerTimeoutException: 425752ms passed since the last invocation, timeout is currently set to 60000

at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.initializeAndRunProcessor(TezProcessor.java:173)

at org.apache.hadoop.hive.ql.exec.tez.TezProcessor.run(TezProcessor.java:139)

at org.apache.tez.runtime.LogicalIOProcessorRuntimeTask.run(LogicalIOProcessorRuntimeTask.java:347)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:194)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable$1.run(TezTaskRunner.java:185)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:185)

at org.apache.tez.runtime.task.TezTaskRunner$TaskRunnerCallable.callInternal(TezTaskRunner.java:181)

at org.apache.tez.common.CallableWithNdc.call(CallableWithNdc.java:36)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: java.io.IOException: org.apache.hadoop.hbase.client.ScannerTimeoutException: 425752ms passed since the last invocation, timeout is currently set to 60000

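at ...

Solutions:

Method 1: change the setting through conf in code

Configuration conf = HBaseConfiguration.create();

conf.setLong(HConstants.HBASE_REGIONSERVER_LEASE_PERIOD_KEY, 120000);

This changes the timeout in code. However, the value is configured in the client application, and in my tests it was not passed on to the remote region servers, so the change had no effect. I do not know whether anyone has gotten this approach to work.

Method 2: edit the configuration file directly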

<property>

<name>hbase.regionserver.lease.period</name>

<value>900000</value>

</property>

<property>

<name>hbase.rpc.timeout</name>

<value>900000</value>

</property>

3. "Too high cardinality" at #4 Step Name: Build Dimension Dictionary

java.lang.RuntimeException: Failed to create dictionary on WEIBODATA.CHECK_IN_TABLE.USER_ID

at org.apache.kylin.dict.DictionaryManager.buildDictionary(DictionaryManager.java:325)

at org.apache.kylin.cube.CubeManager.buildDictionary(CubeManager.java:222)

at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:50)

at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:41)

at org.apache.kylin.engine.mr.steps.CreateDictionaryJob.run(CreateDictionaryJob.java:54)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.kylin.engine.mr.common.HadoopShellExecutable.doWork(HadoopShellExecutable.java:63)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:113)

at org.apache.kylin.job.execution.DefaultChainedExecutable.doWork(DefaultChainedExecutable.java:57)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:113)

at org.apache.kylin.job.impl.threadpool.DefaultScheduler$JobRunner.run(DefaultScheduler.java:136)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.IllegalArgumentException: Too high cardinality is not suitable for dictionary -- cardinality: 11824431

at org.apache.kylin.dict.DictionaryGenerator.buildDictionary(DictionaryGenerator.java:96)

at org.apache.kylin.dict.DictionaryGenerator.buildDictionary(DictionaryGenerator.java:73)

at org.apache.kylin.dict.DictionaryManager.buildDictionary(DictionaryManager.java:321)

... 14 more

result code:2

Solution:

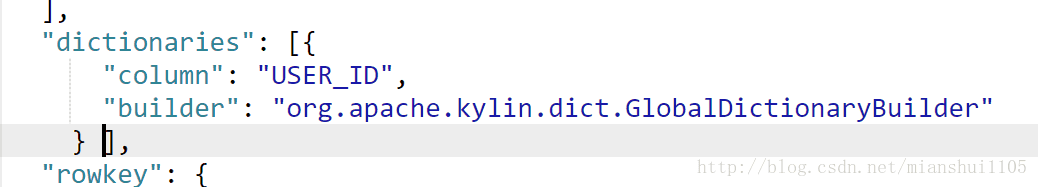

Manually edit the Cube (JSON).

Without this change, the exact Count Distinct measure uses the Default dictionary to store the encoded user_id. The Default dictionary has a maximum capacity of 5 million entries, and a separate Default dictionary is generated for each Segment, so UV analysis across days produces wrong results. If a single day has more than 5 million distinct user_id values, the build fails with an error like this:

java.lang.IllegalArgumentException: Too high cardinality is not suitable for dictionary -- cardinality: 43377845

at org.apache.kylin.dict.DictionaryGenerator.buildDictionary(DictionaryGenerator.java:96)

at org.apache.kylin.dict.DictionaryGenerator.buildDictionary(DictionaryGenerator.java:73)

The limit is controlled by the parameter kylin.dictionary.max.cardinality. You could raise it to 100 million, but the build may then run out of memory and bring down the Kylin Server.

For this kind of requirement we therefore have to enable the Global Dictionary manually. As the name suggests, it is a global dictionary that is not split by Segment: the same user_id has exactly one ID in the global dictionary.

Because the Global Dictionary is built on bitmaps, its maximum capacity is Integer.MAX_VALUE, a little over 2.1 billion. If the accumulated number of values in the global dictionary exceeds Integer.MAX_VALUE, the build fails.

Configure everything else according to your actual business requirements.

Reference: "Apache Kylin中对上亿字符串的精确Count_Distinct示例" (an example of exact Count_Distinct over hundreds of millions of strings in Apache Kylin).
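To make the difference between per-Segment dictionaries and a global dictionary concrete, here is a minimal Python sketch. It is only an illustrative model, not Kylin's actual dictionary code: the names build_segment_dict and GlobalDict and the sample user IDs are hypothetical.

```python
# Illustrative model only; this is not Kylin's dictionary implementation.
INT_MAX = 2**31 - 1  # Java Integer.MAX_VALUE, the capacity limit described above


def build_segment_dict(user_ids):
    """Per-segment dictionary: integer IDs start again from 0 in every segment."""
    return {uid: i for i, uid in enumerate(sorted(set(user_ids)))}


class GlobalDict:
    """Append-only dictionary shared by all segments (hypothetical helper)."""

    def __init__(self):
        self.ids = {}

    def encode(self, uid):
        if uid not in self.ids:
            if len(self.ids) >= INT_MAX:
                raise OverflowError("global dictionary is full")
            self.ids[uid] = len(self.ids)
        return self.ids[uid]


# Hypothetical sample data: two daily segments sharing one user ("u3").
day1 = ["u1", "u2", "u3"]
day2 = ["u3", "u4"]

# Per-segment encoding: the same integer stands for different users per segment,
# so merging the encoded sets undercounts.
d1, d2 = build_segment_dict(day1), build_segment_dict(day2)
per_segment_uv = len({d1[u] for u in day1} | {d2[u] for u in day2})

# Global encoding: one integer per user, so merged sets count correctly.
gd = GlobalDict()
global_uv = len({gd.encode(u) for u in day1} | {gd.encode(u) for u in day2})

true_uv = len(set(day1) | set(day2))
print(per_segment_uv, global_uv, true_uv)
```

In this toy model the merged per-segment sets undercount the distinct users, while the globally encoded sets match the true count.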

4. The "Dup key found" problem

java.lang.IllegalStateException: Dup key found, key=[24], value1=[1456243200000,2016,02,24,0,1456272000000], value2=[1458748800000,2016,03,24,0,1458777600000]

at org.apache.kylin.dict.lookup.LookupTable.initRow(LookupTable.java:85)

at org.apache.kylin.dict.lookup.LookupTable.init(LookupTable.java:68)

at org.apache.kylin.dict.lookup.LookupStringTable.init(LookupStringTable.java:79)

at org.apache.kylin.dict.lookup.LookupTable.<init>(LookupTable.java:56)

at org.apache.kylin.dict.lookup.LookupStringTable.<init>(LookupStringTable.java:65)

at org.apache.kylin.cube.CubeManager.getLookupTable(CubeManager.java:674)

at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:60)

at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:41)

at org.apache.kylin.engine.mr.steps.CreateDictionaryJob.run(CreateDictionaryJob.java:54)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.kylin.engine.mr.common.HadoopShellExecutable.doWork(HadoopShellExecutable.java:63)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:113)

at org.apache.kylin.job.execution.DefaultChainedExecutable.doWork(DefaultChainedExecutable.java:57)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:113)

at org.apache.kylin.job.impl.threadpool.DefaultScheduler$JobRunner.run(DefaultScheduler.java:136)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

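result code:2

Solution: modify the model structure so that the lookup table goes from a form with duplicate rows to one without duplicate rows. In the error above, key 24 maps to two different rows (one for 2016-02-24 and one for 2016-03-24), so the lookup key is not unique.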
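Before rebuilding, it can help to check which column of the lookup table is actually unique. The following is a minimal Python sketch under stated assumptions: the two rows are copied from the exception message above, the column positions are inferred from it (the column names are unknown), and find_duplicate_keys is a hypothetical helper, not part of Kylin.

```python
from collections import defaultdict


def find_duplicate_keys(rows, key_index):
    """Group rows by the key column and return only keys seen in more than one row."""
    by_key = defaultdict(list)
    for row in rows:
        by_key[row[key_index]].append(row)
    return {key: group for key, group in by_key.items() if len(group) > 1}


# The two rows reported by the exception; the key "24" is the fourth column (index 3).
rows = [
    ("1456243200000", "2016", "02", "24", "0", "1456272000000"),
    ("1458748800000", "2016", "03", "24", "0", "1458777600000"),
]

dups_by_day = find_duplicate_keys(rows, key_index=3)    # column used as the key
dups_by_first = find_duplicate_keys(rows, key_index=0)  # first column instead

print(sorted(dups_by_day))    # keys that would trigger "Dup key found"
print(sorted(dups_by_first))  # empty when the column is unique
```

Running the same check over the full lookup table would show whether the redesigned key column is free of duplicates.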

5. Building the global dict for USER_ID fails with "/dict/WEIBODATA.USER_TABLE/USER_ID should have 0 or 1 append dict but 2"

java.lang.RuntimeException: Failed to create dictionary on WEIBODATA.CHECK_IN_TABLE.USER_ID

at org.apache.kylin.dict.DictionaryManager.buildDictionary(DictionaryManager.java:325)

at org.apache.kylin.cube.CubeManager.buildDictionary(CubeManager.java:222)

at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:50)

at org.apache.kylin.cube.cli.DictionaryGeneratorCLI.processSegment(DictionaryGeneratorCLI.java:41)

at org.apache.kylin.engine.mr.steps.CreateDictionaryJob.run(CreateDictionaryJob.java:54)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:90)

at org.apache.kylin.engine.mr.common.HadoopShellExecutable.doWork(HadoopShellExecutable.java:63)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:113)

at org.apache.kylin.job.execution.DefaultChainedExecutable.doWork(DefaultChainedExecutable.java:57)

at org.apache.kylin.job.execution.AbstractExecutable.execute(AbstractExecutable.java:113)

at org.apache.kylin.job.impl.threadpool.DefaultScheduler$JobRunner.run(DefaultScheduler.java:136)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.IllegalStateException: GlobalDict /dict/WEIBODATA.USER_TABLE/USER_ID should have 0 or 1 append dict but 2

at org.apache.kylin.dict.GlobalDictionaryBuilder.build(GlobalDictionaryBuilder.java:68)

at org.apache.kylin.dict.DictionaryGenerator.buildDictionary(DictionaryGenerator.java:81)

at org.apache.kylin.dict.DictionaryManager.buildDictionary(DictionaryManager.java:323)

... 14 more

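result code:2

This website mentions the problem, and one person who solved it used the following approach:

1. Check the metadata

scan 'kylin_metadata', {STARTROW=>'/dict/WEIBODATA.USER_TABLE/USER_ID', ENDROW=> '/dict/WEIBODATA.USER_TABLE/USER_ID', FILTER=>"KeyOnlyFilter()"}

This scan checks the dict metadata. According to the website, his scan returned two metadata entries, and the error went away after he cleaned them up.

But when I ran the scan, the result was: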

hbase(main):011:0> scan 'kylin_metadata', {STARTROW=>'/dict/WEIBODATA.USER_TABLE/USER_ID', ENDROW=> '/dict/WEIBODATA.USER_TABLE/USER_ID', FILTER=>"KeyOnlyFilter()"}

ROW COLUMN+CELL

0 row(s) in 0.0220 seconds

and

hbase(main):010:0> scan 'kylin_metadata', {STARTROW=>'/dict/WEIBODATA.USER_TABLE/USER_ID', ENDROW=> '/dict/WEIBODATA.USER_TABLE/USER_ID'}

ROW COLUMN+CELL

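0 row(s) in 0.0190 seconds

The row did not even appear.

Next I tried the cleanup job that ships with Kylin:

${KYLIN_HOME}/bin/kylin.sh org.apache.kylin.storage.hbase.util.StorageCleanupJob --delete false

${KYLIN_HOME}/bin/kylin.sh org.apache.kylin.storage.hbase.util.StorageCleanupJob --delete true

Rebuilding afterwards had no effect.

That web page also mentions that USER_ID was used in a distinct count measure, which matches my setup, so I removed the count measure and rebuilt again.

That had no effect either, which shows that this is not the cause.

For now this problem remains unsolved.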

6. OOM in the Reduce phase

2017-05-24 06:31:27,282 ERROR [main] org.apache.kylin.engine.mr.KylinReducer:

java.lang.OutOfMemoryError: GC overhead limit exceeded

at org.apache.kylin.dict.TrieDictionaryBuilder$Node.reset(TrieDictionaryBuilder.java:60)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValueR(TrieDictionaryBuilder.java:125)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValueR(TrieDictionaryBuilder.java:155)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValueR(TrieDictionaryBuilder.java:155)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValueR(TrieDictionaryBuilder.java:155)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValueR(TrieDictionaryBuilder.java:155)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValueR(TrieDictionaryBuilder.java:155)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValueR(TrieDictionaryBuilder.java:155)

at org.apache.kylin.dict.TrieDictionaryBuilder.addValue(TrieDictionaryBuilder.java:92)

at org.apache.kylin.dict.TrieDictionaryForestBuilder.addValue(TrieDictionaryForestBuilder.java:97)

at org.apache.kylin.dict.TrieDictionaryForestBuilder.addValue(TrieDictionaryForestBuilder.java:78)

at org.apache.kylin.dict.DictionaryGenerator$StringTrieDictForestBuilder.addValue(DictionaryGenerator.java:212)

at org.apache.kylin.engine.mr.steps.FactDistinctColumnsReducer.doReduce(FactDistinctColumnsReducer.java:197)

at org.apache.kylin.engine.mr.steps.FactDistinctColumnsReducer.doReduce(FactDistinctColumnsReducer.java:60)

at org.apache.kylin.engine.mr.KylinReducer.reduce(KylinReducer.java:48)

at org.apache.hadoop.mapreduce.Reducer.run(Reducer.java:171)

at org.apache.hadoop.mapred.ReduceTask.runNewReducer(ReduceTask.java:627)

at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:389)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:168)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:162)

2017-05-24 06:31:44,672 ERROR [main] org.apache.kylin.engine.mr.KylinReducer:

java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.util.Arrays.copyOf(Arrays.java:3210)

at java.util.Arrays.copyOf(Arrays.java:3181)

at java.util.ArrayList.toArray(ArrayList.java:376)

at java.util.LinkedList.addAll(LinkedList.java:408)

at java.util.LinkedList.addAll(LinkedList.java:387)

at java.util.LinkedList.<init>(LinkedList.java:119)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:384)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.buildTrieBytes(TrieDictionaryBuilder.java:424)

at org.apache.kylin.dict.TrieDictionaryBuilder.build(TrieDictionaryBuilder.java:418)

at org.apache.kylin.dict.TrieDictionaryForestBuilder.build(TrieDictionaryForestBuilder.java:109)

at org.apache.kylin.dict.DictionaryGenerator$StringTrieDictForestBuilder.build(DictionaryGenerator.java:218)

at org.apache.kylin.engine.mr.steps.FactDistinctColumnsReducer.doCleanup(FactDistinctColumnsReducer.java:231)

at org.apache.kylin.engine.mr.KylinReducer.cleanup(KylinReducer.java:71)

at org.apache.hadoop.mapreduce.Reducer.run(Reducer.java:179)

at org.apache.hadoop.mapred.ReduceTask.runNewReducer(ReduceTask.java:627)

at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:389)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:168)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:162)

2017-05-24 06:31:44,801 FATAL [main] org.apache.hadoop.mapred.YarnChild: Error running child : java.lang.OutOfMemoryError: GC overhead limit exceeded

at java.util.Arrays.copyOf(Arrays.java:3210)

at java.util.Arrays.copyOf(Arrays.java:3181)

at java.util.ArrayList.toArray(ArrayList.java:376)

at java.util.LinkedList.addAll(LinkedList.java:408)

at java.util.LinkedList.addAll(LinkedList.java:387)

at java.util.LinkedList.<init>(LinkedList.java:119)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:384)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.checkOverflowParts(TrieDictionaryBuilder.java:400)

at org.apache.kylin.dict.TrieDictionaryBuilder.buildTrieBytes(TrieDictionaryBuilder.java:424)

at org.apache.kylin.dict.TrieDictionaryBuilder.build(TrieDictionaryBuilder.java:418)

at org.apache.kylin.dict.TrieDictionaryForestBuilder.build(TrieDictionaryForestBuilder.java:109)

at org.apache.kylin.dict.DictionaryGenerator$StringTrieDictForestBuilder.build(DictionaryGenerator.java:218)

at org.apache.kylin.engine.mr.steps.FactDistinctColumnsReducer.doCleanup(FactDistinctColumnsReducer.java:231)

at org.apache.kylin.engine.mr.KylinReducer.cleanup(KylinReducer.java:71)

at org.apache.hadoop.mapreduce.Reducer.run(Reducer.java:179)

at org.apache.hadoop.mapred.ReduceTask.runNewReducer(ReduceTask.java:627)

at org.apache.hadoop.mapred.ReduceTask.run(ReduceTask.java:389)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:168)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:422)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1724)

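at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:162)

Causes of OOM in the Reduce phase:

Data skew

Data skew is one cause. When keys are unevenly distributed, a single reducer has to process more data than expected, which makes the JVM run GC frequently.

Too many or too large value objects

When too many value objects pile up in a single reducer, the JVM also runs GC frequently.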
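As a rough way to see how an uneven key distribution overloads one reducer, here is a minimal Python sketch. It loosely models hash partitioning (key hash modulo the number of reducers); the function names and the sample keys are hypothetical and are not taken from the failing job.

```python
from collections import Counter


def partition(key, num_reducers):
    """Loosely models hash partitioning: non-negative hash modulo reducer count."""
    return (hash(key) & 0x7FFFFFFF) % num_reducers


def reducer_loads(keys, num_reducers):
    """Count how many records each reducer would receive."""
    loads = [0] * num_reducers
    for key, count in Counter(keys).items():
        loads[partition(key, num_reducers)] += count
    return loads


def skew_ratio(loads):
    """Heaviest reducer relative to the average load (1.0 means perfectly even)."""
    mean = sum(loads) / len(loads)
    return max(loads) / mean if mean else 0.0


# Hypothetical data: one hot key (0) with 90 records plus ten keys with 1 record each.
# Small non-negative integers are used so that hash(key) == key and the run is repeatable.
keys = [0] * 90 + list(range(1, 11))

loads = reducer_loads(keys, num_reducers=4)
print(loads, round(skew_ratio(loads), 2))
```

A ratio far above 1.0 means one reducer carries most of the records, which is the situation described above.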

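Solutions:

- Set the reduce memory to 3.5 GB.

- Increase the number of reducers: set mapred.reduce.tasks=300;

- Set the child JVM options in hive-site.xml or in the Hive shell: set mapred.child.java.opts=-Xmx512m;

Alternatively, raise only the reducers' maximum heap to 2 GB and switch the garbage collector to the concurrent mark-sweep (CMS) collector. This noticeably reduces GC pauses at the cost of slightly more CPU:

set mapred.reduce.child.java.opts=-Xmx2g -XX:+UseConcMarkSweepGC;

- Use a map join instead of a common join: set hive.auto.convert.join=true;

- Set hive.optimize.skewjoin=true to deal with data skew.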

7. The Decimal problem

2017-06-06 03:19:03,528 ERROR [pool-7-thread-1] org.apache.kylin.cube.inmemcubing.DoggedCubeBuilder: Dogged Cube Build error

java.io.IOException: java.lang.NumberFormatException

at org.apache.kylin.cube.inmemcubing.DoggedCubeBuilder$BuildOnce.abort(DoggedCubeBuilder.java:197)

at org.apache.kylin.cube.inmemcubing.DoggedCubeBuilder$BuildOnce.checkException(DoggedCubeBuilder.java:169)

at org.apache.kylin.cube.inmemcubing.DoggedCubeBuilder$BuildOnce.build(DoggedCubeBuilder.java:116)

at org.apache.kylin.cube.inmemcubing.DoggedCubeBuilder.build(DoggedCubeBuilder.java:75)

at org.apache.kylin.cube.inmemcubing.AbstractInMemCubeBuilder$1.run(AbstractInMemCubeBuilder.java:82)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.lang.NumberFormatException

at java.math.BigDecimal.<init>(BigDecimal.java:550)

at java.math.BigDecimal.<init>(BigDecimal.java:383)

at java.math.BigDecimal.<init>(BigDecimal.java:806)

at org.apache.kylin.measure.basic.BigDecimalIngester.valueOf(BigDecimalIngester.java:39)

at org.apache.kylin.measure.basic.BigDecimalIngester.valueOf(BigDecimalIngester.java:29)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilderInputConverter.buildValueOf(InMemCubeBuilderInputConverter.java:122)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilderInputConverter.buildValue(InMemCubeBuilderInputConverter.java:94)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilderInputConverter.convert(InMemCubeBuilderInputConverter.java:70)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilder$InputConverter$1.next(InMemCubeBuilder.java:552)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilder$InputConverter$1.next(InMemCubeBuilder.java:532)

at org.apache.kylin.gridtable.GTAggregateScanner.iterator(GTAggregateScanner.java:141)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilder.createBaseCuboid(InMemCubeBuilder.java:346)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilder.build(InMemCubeBuilder.java:172)

at org.apache.kylin.cube.inmemcubing.InMemCubeBuilder.build(InMemCubeBuilder.java:141)

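at org.apache.kylin.cube.inmemcubing.DoggedCubeBuilder$SplitThread.run(DoggedCubeBuilder.java:287)

This is usually caused by choosing the wrong data format when defining a measure. Go back to the cube and redesign the measure to fix it.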
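The stack trace shows the failure comes from constructing a java.math.BigDecimal from a string, so one way to find the offending column is to check sample values against the textual format BigDecimal accepts. The following is a minimal Python sketch under stated assumptions: the regular expression approximates that format (optional sign, digits with an optional fraction, optional exponent, no surrounding whitespace), and the sample values are hypothetical.

```python
import re

# Approximation of the string format accepted by java.math.BigDecimal(String):
# optional sign, digits with an optional fraction, optional exponent.
# Whitespace, empty strings and markers such as "N/A" are rejected.
_DECIMAL_RE = re.compile(r"[+-]?(\d+(\.\d*)?|\.\d+)([eE][+-]?\d+)?")


def is_decimal(text):
    """Return True if the whole string looks like a valid decimal literal."""
    return _DECIMAL_RE.fullmatch(text) is not None


def bad_decimal_values(values):
    """Return the values that would fail to parse as a decimal measure."""
    return [v for v in values if not is_decimal(v)]


# Hypothetical sample of raw column values taken from the source table.
sample = ["12.50", "-3", "1e5", "", "N/A", " 7 ", "\\N"]
bad = bad_decimal_values(sample)
print(bad)
```

If a column yields any such values, a decimal measure on it is a poor fit, and the measure's return type or the source data needs to change.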

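8. Kylin crashes suddenly

Usually the 4 GB of memory initially allocated to Kylin is not enough, and Kylin crashes amid continuous Full GCs. When this happens, change Kylin's memory allocation and garbage collection policy in ${KYLIN_HOME}/bin/setenv.sh: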

export KYLIN_JVM_SETTINGS="-Xms1024M -Xmx4096M -Xss1024K -XX:MaxPermSize=128M -verbose:gc -XX:+PrintGCDetails -XX:+PrintGCDateStamps -Xloggc:$KYLIN_HOME/logs/kylin.gc.$$ -XX:+UseGCLogFileRotation -XX:NumberOfGCLogFiles=10 -XX:GCLogFileSize=64M"

# export KYLIN_JVM_SETTINGS="-Xms16g -Xmx16g -XX:MaxPermSize=512m -XX:NewSize=3g -XX:MaxNewSize=3g -XX:SurvivorRatio=4 -XX:+CMSClassUnloadingEnabled -XX:+CMSParallelRemarkEnabled -XX:+UseConcMarkSweepGC -XX:+CMSIncrementalMode -XX:CMSInitiatingOccupancyFraction=70 -XX:+DisableExplicitGC -XX:+HeapDumpOnOutOfMemoryError"

The first line above is the initial setting. The second is the modified setting, which takes effect once it is uncommented and the first line is commented out.