Setting up Fluentd + Elasticsearch (ES) on k8s

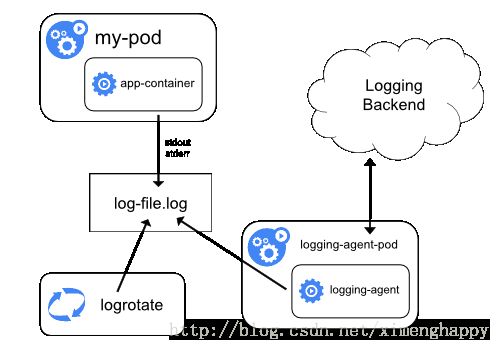

How it works:

1. Install the services:

Installing fluentd

https://github.com/heheliu321/kubernetes/tree/master/cluster/addons/fluentd-elasticsearch

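Create the ConfigMap resource (via kubectl apply --filename, i.e. -f).

In fluentd-es-configmap.yaml, change only the ES address (host and port) and leave everything else as it is: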

```yaml
output.conf: |-
  <match **>
    @id elasticsearch
    @type elasticsearch
    @log_level info
    type_name _doc
    include_tag_key true
    host 192.168.13.1
    port 9200
    logstash_format true
    <buffer>
      @type file
      path /var/log/fluentd-buffers/kubernetes.system.buffer
      flush_mode interval
      retry_type exponential_backoff
      flush_thread_count 2
      flush_interval 5s
      retry_forever
      retry_max_interval 30
      chunk_limit_size 2M
      queue_limit_length 8
      overflow_action block
    </buffer>
  </match>
```
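The buffer settings use retry_type exponential_backoff together with retry_forever and retry_max_interval 30. This means a failed flush is retried indefinitely, and the wait grows until it hits a cap. The sketch below shows the nominal wait schedule. It assumes fluentd's documented defaults retry_wait 1s and retry_exponential_backoff_base 2, since neither is set in this config. It also ignores the random jitter fluentd adds by default (retry_randomize), so real intervals will vary somewhat.

```python
def backoff_schedule(retries, retry_wait=1.0, base=2.0, max_interval=30.0):
    """Nominal wait in seconds before each of the first `retries` retries.

    Sketch only: assumes wait_k = retry_wait * base**k, capped at max_interval,
    and ignores fluentd's default random jitter (retry_randomize).
    """
    return [min(retry_wait * base ** k, max_interval) for k in range(retries)]


if __name__ == "__main__":
    # max_interval=30 mirrors "retry_max_interval 30" in output.conf
    for k, wait in enumerate(backoff_schedule(8)):
        print(f"retry {k}: wait {wait:g}s")
```

With these values the wait doubles until it reaches the 30 s cap at the sixth retry. Because of retry_forever, fluentd never gives up on a chunk. With overflow_action block, a full buffer queue should make fluentd hold back new input rather than drop records.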

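```
root@ubuntu-128:~# kubectl apply -f fluentd-es-configmap.yaml
```

The ConfigMap is how this configuration gets loaded into the fluentd pods: the DaemonSet mounts it into each pod. Next, deploy the DaemonSet itself:

```
root@ubuntu-128:~# kubectl apply -f fluentd-es-ds.yaml
```

fluentd runs from the image quay.io/fluentd_elasticsearch/fluentd:v2.6.0. This image provides the Elasticsearch plugin that uploads the pod logs to ES. It can be pulled in advance with:

```
docker pull quay.io/fluentd_elasticsearch/fluentd:v2.6.0
```

Checking the kube-system pods shows that fluentd is running successfully on ubuntu-130: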

```
root@ubuntu-128:/home/itcast/working/fluentd# kubectl get pods --namespace=kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
coredns-59b69b999c-drvmt 1/1 Running 1 118m 10.244.0.31 ubuntu-128
coredns-59b69b999c-jhx29 1/1 Running 0 34m 10.244.1.124 ubuntu-130
etcd-ubuntu-128 1/1 Running 8 4d1h 192.168.13.128 ubuntu-128
fluentd-es-v2.6.0-cl6f8 1/1 Running 0 20m 10.244.1.125 ubuntu-130
kube-apiserver-ubuntu-128 1/1 Running 12 4d1h 192.168.13.128 ubuntu-128
kube-controller-manager-ubuntu-128 1/1 Running 72 23m 192.168.13.128 ubuntu-128
kube-flannel-ds-amd64-6fnlw 1/1 Running 0 20m 192.168.13.128 ubuntu-128
kube-flannel-ds-amd64-7b54h 1/1 Terminating 5 4d 192.168.13.129 ubuntu-129
kube-flannel-ds-amd64-cxwsg 1/1 Running 14 4d 192.168.13.130 ubuntu-130
kube-proxy-6hb9w 1/1 Running 8 4d1h 192.168.13.128 ubuntu-128
kube-proxy-wgk4w 1/1 Running 4 4d 192.168.13.129 ubuntu-129
kube-proxy-xqgrw 1/1 Running 10 4d 192.168.13.130 ubuntu-130
kube-scheduler-ubuntu-128 1/1 Running 66 4d1h 192.168.13.128 ubuntu-128
tiller-deploy-7b9c5bf9c4-zvxq5 1/1 Running 7 2d2h 10.244.1.111 ubuntu-130
```

Testing

A word on the log details. The fluentd log records a great deal of operating-system detail, so it is worth reading carefully:

```
root@ubuntu-128:/home/itcast/working/fluentd# kubectl logs fluentd-es-v2.6.0-cl6f8 --namespace=kube-system
2019-08-16 15:27:16 +0000 [warn]: [elasticsearch] failed to flush the buffer. retry_time=0 next_retry_seconds=2019-08-16 15:27:17 +0000 chunk="5903d9f6da39eac27f4f331803e25de2" error_class=Fluent::Plugin::ElasticsearchOutput::RecoverableRequestFailure error="could not push logs to Elasticsearch cluster ({:host=>\"192.168.13.1\", :port=>9200, :scheme=>\"http\"}): read timeout reached"
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluent-plugin-elasticsearch-3.5.2/lib/fluent/plugin/out_elasticsearch.rb:757:in `rescue in send_bulk'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluent-plugin-elasticsearch-3.5.2/lib/fluent/plugin/out_elasticsearch.rb:733:in `send_bulk'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluent-plugin-elasticsearch-3.5.2/lib/fluent/plugin/out_elasticsearch.rb:627:in `block in write'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluent-plugin-elasticsearch-3.5.2/lib/fluent/plugin/out_elasticsearch.rb:626:in `each'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluent-plugin-elasticsearch-3.5.2/lib/fluent/plugin/out_elasticsearch.rb:626:in `write'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluentd-1.5.1/lib/fluent/plugin/output.rb:1125:in `try_flush'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluentd-1.5.1/lib/fluent/plugin/output.rb:1425:in `flush_thread_run'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluentd-1.5.1/lib/fluent/plugin/output.rb:454:in `block (2 levels) in start'
2019-08-16 15:27:16 +0000 [warn]: /var/lib/gems/2.3.0/gems/fluentd-1.5.1/lib/fluent/plugin_helper/thread.rb:78:in `block in thread_create'
2019-08-16 15:27:17 +0000 [warn]: [elasticsearch] retry succeeded. chunk_id="5903d9f6da39eac27f4f331803e25de2"
2019-08-16 15:28:20 +0000 [warn]: dump an error event: error_class=Fluent::Plugin::ConcatFilter::TimeoutError error="Timeout flush: kernel:default" location=nil tag="kernel" time=2019-08-16 15:28:20.403295017 +0000 record={"boot_id"=>"417c6389e89a4793bc868854e9608715", "machine_id"=>"7e7d8472ba514c0daf3ab2ad126bcf6e", "hostname"=>"ubuntu-130", "source_monotonic_timestamp"=>"0", "transport"=>"kernel", "priority"=>"5", "syslog_facility"=>"0", "syslog_identifier"=>"kernel", "message"=>"random: get_random_bytes called from start_kernel+0x42/0x50d with crng_init=0Linux version 4.13.0-36-generic (buildd@lgw01-amd64-033) (gcc version 5.4.0 20160609 (Ubuntu 5.4.0-6ubuntu1~16.04.9)) #40~16.04.1-Ubuntu SMP Fri Feb 16 23:25:58 UTC 2018 (Ubuntu 4.13.0-36.40~16.04.1-generic 4.13.13)Command line: BOOT_IMAGE=/boot/vmlinuz-4.13.0-36-generic root=UUID=9d46940e-386b-4c36-aa05-c1974cb537ac ro quiet splashKERNEL supported cpus: Intel GenuineIntel AMD AuthenticAMD Centaur CentaurHaulsDisabled fast string operationsx86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]: 256x86/fpu: Enabled xstate features 0x7, context size is 832 bytes, using 'standard' format.e820: BIOS-provided physical RAM map:BIOS-e820: [mem 0x0000000000000000-0x000000000009e7ff] usableBIOS-e820: [mem 0x000000000009e800-0x000000000009ffff] reservedBIOS-e820: [mem 0x00000000000dc000-0x00000000000fffff] reservedBIOS-e820: [mem 0x0000000000100000-0x000000007fedffff] usableBIOS-e820: [mem 0x000000007fee0000-0x000000007fefefff] ACPI dataBIOS-e820: [mem 0x000000007feff000-0x000000007fefffff] ACPI NVSBIOS-e820: [mem 0x000000007ff00000-0x000000007fffffff] usableBIOS-e820: [mem 0x00000000f0000000-0x00000000f7ffffff] reservedBIOS-e820: [mem 0x00000000fec00000-0x00000000fec0ffff] reservedBIOS-e820: [mem 0x00000000fee00000-0x00000000fee00fff] reservedBIOS-e820: [mem 0x00000000fffe0000-0x00000000ffffffff] reservedNX (Execute Disable) protection: activerandom: fast init doneSMBIOS 2.7 present.DMI: VMware, Inc. VMware Virtual Platform/440BX Desktop Reference Platform, BIOS 6.00 07/02/2015Hypervisor detected: VMwarevmware: TSC freq read from hypervisor : 2494.420 MHzvmware: Host bus clock speed read from hypervisor : 66000000 Hzvmware: using sched offset of 6062854211 nse820: update [mem 0x00000000-0x00000fff] usable ==> reservede820: remove [mem 0x000a0000-0x000fffff] usablee820: last_pfn = 0x80000 max_arch_pfn = 0x400000000MTRR default type: uncachableMTRR fixed ranges enabled: 00000-9FFFF write-back A0000-BFFFF uncachable C0000-CFFFF write-protect D0000-EFFFF uncachable F0000-FFFFF write-protectMTRR variable ranges enabled: 0 base 000C0000000 mask 3FFC0000000 uncachable 1 base 00000000000 mask 3FF00000000 write-back 2 disabled 3 disabled 4 disabled 5 disabled 6 disabled 7 disabledx86/PAT: Configuration [0-7]: WB WC UC- UC WB WC UC- WT total RAM covered: 3072MFound optimal setting for mtrr clean up gran_size: 64K \tchunk_size: 64K \tnum_reg: 2 \tlose cover RAM: 0Gfound SMP MP-table at [mem 0x000f6a80-0x000f6a8f] mapped at [ffff927b800f6a80]Scanning 1 areas for low memory corruptionBase memory trampoline at [ffff927b80098000] 98000 size 24576BRK [0x3d72b000, 0x3d72bff
9-08-16 15:35:07 +0000 [warn]: [elasticsearch] retry succeeded. chunk_id="5903dbb635ba93a5b7402539ca7ba3bd"
2019-08-16 15:45:50 +0000 [warn]: [elasticsearch] failed to flush the buffer. retry_time=0 next_retry_seconds=2019-08-16 15:45:51 +0000 chunk="5903de18c168d1484e7409f0d90e36c3" error_class=Fluent::Plugin::ElasticsearchOutput::RecoverableRequestFailure error="could not push logs to Elasticsearch cluster ({:host=>\"192.168.13.1\", :port=>9200, :scheme=>\"http\"}): read timeout reached"
2019-08-16 15:45:50 +0000 [warn]: suppressed same stacktrace
2019-08-16 15:45:59 +0000 [warn]: [elasticsearch] failed to flush the buffer. retry_time=1 next_retry_seconds=2019-08-16 15:45:59 +0000 chunk="5903de1d5506de45bc10846751a5e79f" error_class=Fluent::Plugin::ElasticsearchOutput::RecoverableRequestFailure error="could not push logs to Elasticsearch cluster ({:host=>\"192.168.13.1\", :port=>9200, :scheme=>\"http\"}): read timeout reached"
2019-08-16 15:45:59 +0000 [warn]: suppressed same stacktrace
2019-08-16 15:45:59 +0000 [warn]: [elasticsearch] failed to flush the buffer. retry_time=2 next_retry_seconds=2019-08-16 15:46:01 +0000 chunk="5903de18c168d1484e7409f0d90e36c3" error_class=Fluent::Plugin::ElasticsearchOutput::RecoverableRequestFailure error="could not push logs to Elasticsearch cluster ({:host=>\"192.168.13.1\", :port=>9200, :scheme=>\"http\"}): read timeout reached"
2019-08-16 15:45:59 +0000 [warn]: suppressed same stacktrace
2019-08-16 15:46:02 +0000 [warn]: [elasticsearch] retry succeeded. chunk_id="5903de18c168d1484e7409f0d90e36c3"
```
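Several chunks fail with "read timeout reached" and are later reported as "retry succeeded". To check over a longer log whether every failed chunk was eventually delivered, the warnings can be grouped by chunk ID. The sketch below does this. The two regular expressions are written against the message formats visible in this log and are assumptions, not a documented fluentd log format.

```python
import re
from collections import defaultdict

# Patterns for the two fluent-plugin-elasticsearch warnings seen in the log above.
FAIL_RE = re.compile(r'failed to flush the buffer\..*?chunk="([0-9a-f]+)"')
OK_RE = re.compile(r'retry succeeded\. chunk_id="([0-9a-f]+)"')


def summarize_flushes(lines):
    """Map chunk id -> {"failures": n, "recovered": bool} from fluentd log lines."""
    summary = defaultdict(lambda: {"failures": 0, "recovered": False})
    for line in lines:
        failed = FAIL_RE.search(line)
        if failed:
            summary[failed.group(1)]["failures"] += 1
            continue
        ok = OK_RE.search(line)
        if ok:
            summary[ok.group(1)]["recovered"] = True
    return dict(summary)


def unrecovered(summary):
    """Chunk ids that failed at least once and never logged 'retry succeeded'."""
    return sorted(c for c, s in summary.items() if s["failures"] and not s["recovered"])
```

Usage: save the log with `kubectl logs fluentd-es-v2.6.0-cl6f8 --namespace=kube-system > fluentd.log`, where the file name is just an example. Then run `unrecovered(summarize_flushes(open("fluentd.log")))`. A chunk that is still being retried when the log ends will also be listed, so re-check it later before assuming data was lost.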

On ubuntu-130, tcpdump shows fluentd pushing the logs to ES:

```
root@ubuntu-130:~# tcpdump -i any port 9200 -s 0
tcpdump: verbose output suppressed, use -v or -vv for full protocol decode
listening on any, link-type LINUX_SLL (Linux cooked), capture size 262144 bytes
23:52:21.601934 IP 10.244.1.125.57142 > 192.168.13.1.9200: Flags [S], seq 927584757, win 28200, options [mss 1410,sackOK,TS val 4040995733 ecr 0,nop,wscale 7], length 0
23:52:21.601989 IP 10.244.1.125.57142 > 192.168.13.1.9200: Flags [S], seq 927584757, win 28200, options [mss 1410,sackOK,TS val 4040995733 ecr 0,nop,wscale 7], length 0
23:52:21.602018 IP ubuntu-130.57142 > 192.168.13.1.9200: Flags [S], seq 927584757, win 28200, options [mss 1410,sackOK,TS val 4040995733 ecr 0,nop,wscale 7], length 0
23:52:21.602515 IP 192.168.13.1.9200 > ubuntu-130.57142: Flags [S.], seq 1478163250, ack 927584758, win 8192, options [mss 1460,nop,wscale 8,sackOK,TS val 1042016 ecr 4040995733], length 0
23:52:21.602543 IP 192.168.13.1.9200 > 10.244.1.125.57142: Flags [S.], seq 1478163250, ack 927584758, win 8192, options [mss 1460,nop,wscale 8,sackOK,TS val 1042016 ecr 4040995733], length 0
23:52:21.602550 IP 192.168.13.1.9200 > 10.244.1.125.57142: Flags [S.], seq 1478163250, ack 927584758, win 8192, options [mss 1460,nop,wscale 8,sackOK,TS val 1042016 ecr 4040995733], length 0
23:52:21.602617 IP 10.244.1.125.57142 > 192.168.13.1.9200: Flags [.], ack 1, win 221, options [nop,nop,TS val 4040995734 ecr 1042016], length 0
23:52:21.623993 IP 192.168.13.1.9200 > ubuntu-130.57142: Flags [P.], seq 1:550, ack 2137, win 256, options [nop,nop,TS val 1042018 ecr 4040995738], length 549
23:52:21.624050 IP 192.168.13.1.9200 > 10.244.1.125.57142: Flags [P.], seq 1:550, ack 2137, win 256, options [nop,nop,TS val 1042018 ecr 4040995738], length 549
23:52:21.624057 IP 192.168.13.1.9200 > 10.244.1.125.57142: Flags [P.], seq 1:550, ack 2137, win 256, options [nop,nop,TS val 1042018 ecr 4040995738], length 549
23:52:21.624110 IP 10.244.1.125.57142 > 192.168.13.1.9200: Flags [.], ack 550, win 229, options [nop,nop,TS val 4040995755 ecr 1042018], length 0
23:52:21.624119 IP 10.244.1.125.57142 > 192.168.13.1.9200: Flags [.], ack 550, win 229, options [nop,nop,TS val 4040995755 ecr 1042018], length 0
23:52:21.624131 IP ubuntu-130.57142 > 192.168.13.1.9200: Flags [.], ack 550, win 229, options [nop,nop,TS val 4040995755 ecr 1042018], length 0
23:52:21.626041 IP 10.244.1.125.57142 > 192.168.13.1.9200: Flags [F.], seq 2137, ack 550, win 229, options [nop,nop,TS val 4040995757 ecr 1042018], length 0
23:52:21.626089 IP 10.244.1.125.57142 > 192.168.13.1.9200: Flags [F.], seq 2137, ack 550, win 229, options [nop,nop,TS val 4040995757 ecr 1042018], length 0
23:52:21.626117 IP ubuntu-130.57142 > 192.168.13.1.9200: Flags [F.], seq 2137, ack 550, win 229, options [nop,nop,TS val 4040995757 ecr 1042018], length 0
23:52:21.626136 IP 192.168.13.1.9200 > ubuntu-130.57144: Flags [P.], seq 1846136596:1846137571, ack 3099668854, win 256, options [nop,nop,TS val 1042018 ecr 4040995738], length 975
23:52:21.626144 IP 192.168.13.1.9200 > 10.244.1.125.57144: Flags [P.], seq 1846136596:1846137571, ack 3099668854, win 256, options [nop,nop,TS val 1042018 ecr 4040995738], length 975
23:52:21.626147 IP 192.168.13.1.9200 > 10.244.1.125.57144: Flags [P.], seq 0:975, ack 1, win 256, options [nop,nop,TS val 1042018 ecr 4040995738], length 975
23:52:21.626373 IP 10.244.1.125.57144 > 192.168.13.1.9200: Flags [.], ack 975, win 236, options [nop,nop,TS val 4040995758 ecr 1042018], length 0
23:52:21.626394 IP 10.244.1.125.57144 > 192.168.13.1.9200: Flags [.], ack 975, win 236, options [nop,nop,TS val 4040995758 ecr 1042018], length 0
23:52:21.626404 IP ubuntu-130.57144 > 192.168.13.1.9200: Flags [.], ack 975, win 236, options [nop,nop,TS val 4040995758 ecr 1042018], length 0
23:52:21.626523 IP 192.168.13.1.9200 > ubuntu-130.57142: Flags [.], ack 2138, win 256, options [nop,nop,TS val 1042018 ecr 4040995757], length 0
23:52:21.626538 IP 192.168.13.1.9200 > 10.244.1.125.57142: Flags [.], ack 2138, win 256, options [nop,nop,TS val 1042018 ecr 4040995757], length 0
23:52:21.626542 IP 192.168.13.1.9200 > 10.244.1.125.57142: Flags [.], ack 2138, win 256, options [nop,nop,TS val 1042018 ecr 4040995757], length 0
23:52:21.626994 IP 192.168.13.1.9200 > ubuntu-130.57142: Flags [R.], seq 550, ack 2138, win 0, length 0
23:52:21.629256 IP 10.244.1.125.57144 > 192.168.13.1.9200: Flags [F.], seq 1, ack 975, win 236, options [nop,nop,TS val 4040995761 ecr 1042018], length 0
23:52:21.629300 IP 10.244.1.125.57144 > 192.168.13.1.9200: Flags [F.], seq 1, ack 975, win 236, options [nop,nop,TS val 4040995761 ecr 1042018], length 0
23:52:21.629326 IP ubuntu-130.57144 > 192.168.13.1.9200: Flags [F.], seq 1, ack 975, win 236, options [nop,nop,TS val 4040995761 ecr 1042018], length 0
23:52:21.630715 IP 192.168.13.1.9200 > ubuntu-130.57144: Flags [.], ack 2, win 256, options [nop,nop,TS val 1042018 ecr 4040995761], length 0
23:52:21.630800 IP 192.168.13.1.9200 > 10.244.1.125.57144: Flags [.], ack 2, win 256, options [nop,nop,TS val 1042018 ecr 4040995761], length 0
23:52:21.630808 IP 192.168.13.1.9200 > 10.244.1.125.57144: Flags [.], ack 2, win 256, options [nop,nop,TS val 1042018 ecr 4040995761], length 0
23:52:21.630814 IP 192.168.13.1.9200 > ubuntu-130.57144: Flags [R.], seq 975, ack 2, win 0, length 0
23:52:21.630823 IP 192.168.13.1.9200 > 10.244.1.125.57144: Flags [R.], seq 975, ack 2, win 0, length 0
23:52:21.630825 IP 192.168.13.1.9200 > 10.244.1.125.57144: Flags [R.], seq 975, ack 2, win 0, length 0
```
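In the capture, the fluentd pod (10.244.1.125) completes a TCP handshake with 192.168.13.1:9200 and exchanges data. Because of `-i any`, each packet is most likely seen once per interface it crosses. It appears with the pod address inside the node, and as ubuntu-130 after the node NATs it on the way out. When fluentd logs "read timeout reached", a quick first check is whether the ES port is reachable at all from that node. The sketch below does that with the Python standard library only. A successful TCP connect does not rule out an HTTP read timeout on a slow bulk request.

```python
import socket


def es_port_reachable(host, port=9200, timeout=3.0):
    """Return True if a TCP connection to host:port completes within `timeout` seconds.

    Only the TCP handshake is tested (the SYN / SYN-ACK / ACK seen in tcpdump);
    an HTTP-level read timeout can still happen afterwards.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or socket.timeout (an OSError subclass)
        return False
```

Usage: run `es_port_reachable("192.168.13.1")` from ubuntu-130, the node that runs fluentd, so the network path matches the one in the capture.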

List the indices on the ES node:

http://192.168.13.1:9200/_cat/indices?v

```
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
yellow open logstash-2019.08.16 y7KXQWwXQP6EZ-r5MMGzrQ 5 1 10399 0 5.9mb 5.9mb
```
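The index health is yellow. This means all primary shards are allocated but at least one replica is not. That is expected here if ES runs as a single node (an assumption), since the index asks for one replica (rep 1). The sketch below reads the `_cat` output programmatically. It assumes the whitespace-separated layout that `?v` produces and that no cell is empty. The `_cat` APIs also accept `format=json`, which is the sturdier choice for scripts.

```python
from urllib.request import urlopen


def parse_cat_table(text):
    """Turn `_cat/...?v` output (header row + whitespace-separated rows) into dicts."""
    rows = [line.split() for line in text.strip().splitlines() if line.strip()]
    header, body = rows[0], rows[1:]
    return [dict(zip(header, row)) for row in body]


def fetch_indices(base_url="http://192.168.13.1:9200"):
    """Fetch and parse _cat/indices?v from the ES address used in this post."""
    with urlopen(f"{base_url}/_cat/indices?v", timeout=5) as resp:
        return parse_cat_table(resp.read().decode("utf-8"))
```

Usage: `for idx in fetch_indices(): print(idx["index"], idx["health"], idx["docs.count"])`. Because logstash_format true creates one index per day, this is a quick way to confirm that a new logstash-YYYY.MM.DD index appears and keeps growing.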

Query the data in the logstash-2019.08.16 index:

http://192.168.13.1:9200/logstash-2019.08.16/_search

```json
{
  "took": 2,
  "timed_out": false,
  "_shards": {
    "total": 5,
    "successful": 5,
    "skipped": 0,
    "failed": 0
  },
  "hits": {
    "total": 10441,
    "max_score": 1,
    "hits": [
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "8wIIm2wBYm28bAuFxTQo",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": "discoveryRefreshDelay: 1s\n",
          "@timestamp": "2019-08-16T15:25:31.383692784+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "-AIIm2wBYm28bAuFxTQo",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": "\n",
          "@timestamp": "2019-08-16T15:25:31.387587739+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "_QIIm2wBYm28bAuFxTQo",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " address:\n",
          "@timestamp": "2019-08-16T15:25:31.393629656+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "AwIIm2wBYm28bAuFxTUo",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " stats_tags:\n",
          "@timestamp": "2019-08-16T15:25:31.397690383+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "FgIIm2wBYm28bAuFxTUo",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " connect_timeout: 0.250s\n",
          "@timestamp": "2019-08-16T15:25:31.522235510+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "GQIIm2wBYm28bAuFxTUo",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " - socket_address:\n",
          "@timestamp": "2019-08-16T15:25:31.523743382+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "GwIIm2wBYm28bAuFxTUp",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " address: 127.0.0.1\n",
          "@timestamp": "2019-08-16T15:25:31.524735918+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "HwIIm2wBYm28bAuFxTUp",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " - max_connections: 100000\n",
          "@timestamp": "2019-08-16T15:25:31.527531467+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "IAIIm2wBYm28bAuFxTUp",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " max_pending_requests: 100000\n",
          "@timestamp": "2019-08-16T15:25:31.528025002+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      },
      {
        "_index": "logstash-2019.08.16",
        "_type": "_doc",
        "_id": "JQIIm2wBYm28bAuFxTUp",
        "_score": 1,
        "_source": {
          "stream": "stdout",
          "docker": {
            "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
          },
          "kubernetes": {
            "container_name": "istio-proxy",
            "namespace_name": "istio-system",
            "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
            "container_image": "istio/proxyv2:1.0.3",
            "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
            "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
            "host": "ubuntu-130",
            "labels": {
              "app": "policy",
              "istio": "mixer",
              "istio-mixer-type": "policy",
              "pod-template-hash": "7bc45bb8f9"
            },
            "master_url": "https://10.96.0.1:443/api",
            "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
          },
          "message": " - pipe:\n",
          "@timestamp": "2019-08-16T15:25:31.532554059+00:00",
          "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
        }
      }
    ]
  }
}
```
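Every hit carries a `tag` such as `kubernetes.var.log.containers.<pod>_<namespace>_<container>-<container id>.log`. This is the container's log file under /var/log/containers with the path turned into a tag. The pod, namespace and container can be read back out of that name. The kubernetes_metadata filter in the fluentd-es config does something similar before asking the API server (`master_url`) for labels and IDs. The sketch below shows only the parsing step. The regular expression is my own approximation of the file-name layout, not the filter's exact pattern.

```python
import re

# Assumed layout of the kubelet's symlinks in /var/log/containers:
#   <pod_name>_<namespace>_<container_name>-<64-hex container id>.log
# Pod and namespace names cannot contain "_", so "_" works as a separator.
TAG_RE = re.compile(
    r"^kubernetes\.var\.log\.containers\."
    r"(?P<pod_name>[^_]+)_(?P<namespace_name>[^_]+)_"
    r"(?P<container_name>.+)-(?P<container_id>[0-9a-f]{64})\.log$"
)


def parse_container_tag(tag):
    """Split a fluentd container-log tag into pod, namespace, container and container id."""
    m = TAG_RE.match(tag)
    if m is None:
        raise ValueError(f"not a container log tag: {tag!r}")
    return m.groupdict()
```

Applied to the tag in the hits above, this yields pod istio-policy-7bc45bb8f9-4lwjp, namespace istio-system, container istio-proxy, and the same container ID as `docker.container_id`. Non-container tags such as `kernel` raise ValueError.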

http://192.168.13.1:9200/logstash-2019.08.16/_doc/HwIIm2wBYm28bAuFxTUp

```json
{
  "_index": "logstash-2019.08.16",
  "_type": "_doc",
  "_id": "HwIIm2wBYm28bAuFxTUp",
  "_version": 1,
  "found": true,
  "_source": {
    "stream": "stdout",
    "docker": {
      "container_id": "f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba"
    },
    "kubernetes": {
      "container_name": "istio-proxy",
      "namespace_name": "istio-system",
      "pod_name": "istio-policy-7bc45bb8f9-4lwjp",
      "container_image": "istio/proxyv2:1.0.3",
      "container_image_id": "docker-pullable://istio/proxyv2@sha256:947348e2039b8b0e356e843ae263dc0c3d50abbf6cfe9d676446353f85b9ccb7",
      "pod_id": "29054276-bea1-11e9-ad52-000c295a7d67",
      "host": "ubuntu-130",
      "labels": {
        "app": "policy",
        "istio": "mixer",
        "istio-mixer-type": "policy",
        "pod-template-hash": "7bc45bb8f9"
      },
      "master_url": "https://10.96.0.1:443/api",
      "namespace_id": "8b3de99f-be97-11e9-9d80-000c295a7d67"
    },
    "message": " - max_connections: 100000\n",
    "@timestamp": "2019-08-16T15:25:31.527531467+00:00",
    "tag": "kubernetes.var.log.containers.istio-policy-7bc45bb8f9-4lwjp_istio-system_istio-proxy-f57a82ec41244abc0aba2b846070494f021250d44789fd0a81bc5f2ae92d1fba.log"
  }
}
```

Judging from the results, fluentd appeared to collect only logs from system namespaces such as kube-system (the hits above come from istio-system). It did not collect application logs, for example from the nginx Deployment. On reflection, I suspected this was because the nginx Deployment ran in the default namespace.

```
root@ubuntu-128:/home/itcast/working/deployment# kubectl get pod --namespace=kube-system -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
coredns-59b69b999c-drvmt 1/1 Running 18 13h 10.244.0.35 ubuntu-128
coredns-59b69b999c-qllxs 1/1 Running 0 140m 10.244.1.145 ubuntu-130
etcd-ubuntu-128 1/1 Running 12 4d12h 192.168.13.128 ubuntu-128
fluentd-es-v2.6.0-l86vd 1/1 Running 0 68m 10.244.1.148 ubuntu-130
kube-apiserver-ubuntu-128 1/1 Running 17 4d12h 192.168.13.128 ubuntu-128
kube-controller-manager-ubuntu-128 1/1 Running 110 12h 192.168.13.128 ubuntu-128
kube-flannel-ds-amd64-6fnlw 1/1 Running 5 11h 192.168.13.128 ubuntu-128
kube-flannel-ds-amd64-cxwsg 1/1 Running 16 4d12h 192.168.13.130 ubuntu-130
kube-proxy-6hb9w 1/1 Running 12 4d12h 192.168.13.128 ubuntu-128
kube-proxy-xqgrw 1/1 Running 11 4d12h 192.168.13.130 ubuntu-130
kube-scheduler-ubuntu-128 1/1 Running 101 151m 192.168.13.128 ubuntu-128
nginx-deployment-76bf4969df-7h5mw 1/1 Running 0 33m 10.244.1.149 ubuntu-130
tiller-deploy-7b9c5bf9c4-zvxq5 1/1 Running 9 2d13h 10.244.1.136 ubuntu-130
```

So I created the nginx Deployment in kube-system instead (nginx-deployment-76bf4969df-7h5mw above), and its application logs then showed up in ES.
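To check which namespaces actually reach ES, a terms aggregation over the namespace field is quicker than paging through `_search` hits, because it counts documents per namespace directly. The sketch below assumes ES dynamic mapping created a `kubernetes.namespace_name.keyword` sub-field. That is the ES 6.x default for strings when no index template is installed. It also assumes the `logstash-*` index pattern that logstash_format true produces.

```python
import json
from urllib.request import Request, urlopen


def namespace_counts_query(size=20):
    """_search body: skip hits, bucket documents by Kubernetes namespace."""
    return {
        "size": 0,
        "aggs": {
            "by_namespace": {
                # Assumption: dynamic mapping added a .keyword sub-field for this string.
                "terms": {"field": "kubernetes.namespace_name.keyword", "size": size}
            }
        },
    }


def counts_from_response(result):
    """Turn the aggregation buckets of a _search response into {namespace: doc_count}."""
    buckets = result["aggregations"]["by_namespace"]["buckets"]
    return {b["key"]: b["doc_count"] for b in buckets}


def namespace_counts(base_url="http://192.168.13.1:9200", index="logstash-*"):
    """POST the aggregation to ES and return per-namespace document counts."""
    body = json.dumps(namespace_counts_query()).encode("utf-8")
    req = Request(f"{base_url}/{index}/_search", data=body,
                  headers={"Content-Type": "application/json"}, method="POST")
    with urlopen(req, timeout=10) as resp:
        return counts_from_response(json.load(resp))
```

If `default` appears in `namespace_counts()`, logs from that namespace are being collected after all. It may also be worth checking which node a missing pod was scheduled on, since in both listings above fluentd runs only on ubuntu-130.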

Trigger log entries manually with curl. nginx answers with its default welcome page:

```
Welcome to nginx!

If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.

For online documentation and support please refer to
nginx.org.
Commercial support is available at
nginx.com.

Thank you for using nginx.
```