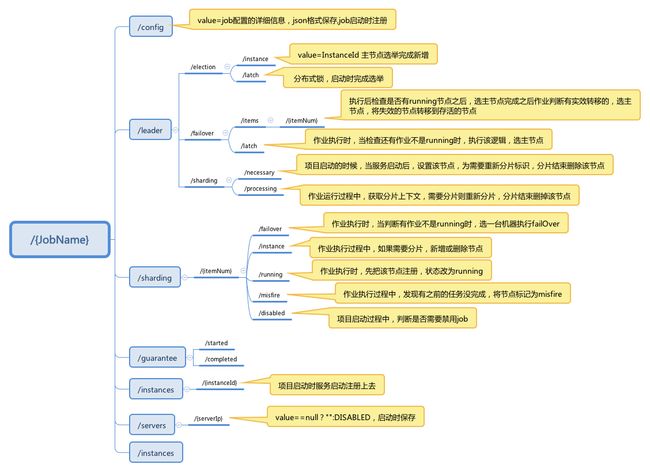

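All of an elastic-job job's runtime data lives in ZooKeeper (ZK): sharding parameters, failover state, running instances and so on. So what does the ZK node tree actually look like, and when is each node registered?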

During job startup (`JobScheduler.init()`), the startup information is registered with the registry center. Here is where each node comes from:

```java
public void init() {
    // /{jobName}/config is written here
    LiteJobConfiguration liteJobConfigFromRegCenter = schedulerFacade.updateJobConfiguration(liteJobConfig);
    JobRegistry.getInstance().setCurrentShardingTotalCount(liteJobConfigFromRegCenter.getJobName(), liteJobConfigFromRegCenter.getTypeConfig().getCoreConfig().getShardingTotalCount());
    JobScheduleController jobScheduleController = new JobScheduleController(
            createScheduler(), createJobDetail(liteJobConfigFromRegCenter.getTypeConfig().getJobClass()), liteJobConfigFromRegCenter.getJobName());
    JobRegistry.getInstance().registerJob(liteJobConfigFromRegCenter.getJobName(), jobScheduleController, regCenter);
    /*
     * The following nodes are registered here:
     * /{jobName}/leader/election/latch
     * /{jobName}/leader/election/instance
     * /{jobName}/servers/{serverIp}
     * /{jobName}/instances/{instanceId}
     * /{jobName}/leader/sharding/necessary
     */
    schedulerFacade.registerStartUpInfo(!liteJobConfigFromRegCenter.isDisabled());
    jobScheduleController.scheduleJob(liteJobConfigFromRegCenter.getTypeConfig().getCoreConfig().getCron());
}
```
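The small sketch below spells out the full paths touched during `init()`. `JobZkPaths` and its methods are hypothetical names of my own. elastic-job has its own `JobNodePath` class, whose API is not reproduced here. The paths are relative to the registry center's namespace.

One caveat: in the 2.x source I have read, `ShardingNode.NECESSARY` is defined under the `leader` node, so the resharding flag resolves to `/{jobName}/leader/sharding/necessary`. Verify this against the version you run.

```java
import java.util.List;

/** Hypothetical helper (not the elastic-job API) that builds the ZK paths registered at startup. */
final class JobZkPaths {

    private final String jobName;

    JobZkPaths(final String jobName) {
        this.jobName = jobName;
    }

    /** Prefixes a node relative to the job root, e.g. "config" -> "/myJob/config". */
    String full(final String node) {
        return "/" + jobName + "/" + node;
    }

    String config() { return full("config"); }
    String leaderLatch() { return full("leader/election/latch"); }
    String leaderInstance() { return full("leader/election/instance"); }
    String server(final String ip) { return full("servers/" + ip); }
    String instance(final String instanceId) { return full("instances/" + instanceId); }
    // Assumption: ShardingNode.NECESSARY lives under "leader/sharding" (2.x source)
    String shardingNecessary() { return full("leader/sharding/necessary"); }

    /** Nodes touched while JobScheduler.init() runs, in registration order. */
    List<String> startupNodes(final String ip, final String instanceId) {
        return List.of(config(), leaderLatch(), leaderInstance(),
                server(ip), instance(instanceId), shardingNecessary());
    }

    public static void main(final String[] args) {
        JobZkPaths paths = new JobZkPaths("myJob");
        // Instance IDs in 2.x look like "{ip}@-@{pid}"
        paths.startupNodes("192.168.1.10", "192.168.1.10@-@1234").forEach(System.out::println);
    }
}
```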

Persisting the job configuration: the first step is to write the job's configuration to a ZK node. The code:

```java
LiteJobConfiguration liteJobConfigFromRegCenter = schedulerFacade.updateJobConfiguration(liteJobConfig);

public LiteJobConfiguration updateJobConfiguration(final LiteJobConfiguration liteJobConfig) {
    // Write the configuration to ZK, then read it back directly from ZK (load(false) skips the local cache)
    configService.persist(liteJobConfig);
    return configService.load(false);
}

public void persist(final LiteJobConfiguration liteJobConfig) {
    checkConflictJob(liteJobConfig);
    // ConfigurationNode.ROOT = /{jobName}/config
    // Only written if the node does not exist yet, or if overwrite is enabled
    if (!jobNodeStorage.isJobNodeExisted(ConfigurationNode.ROOT) || liteJobConfig.isOverwrite()) {
        jobNodeStorage.replaceJobNode(ConfigurationNode.ROOT, LiteJobConfigurationGsonFactory.toJson(liteJobConfig));
    }
}

public void replaceJobNode(final String node, final Object value) {
    // /{jobName}/config is registered here (as a persistent node)
    regCenter.persist(jobNodePath.getFullPath(node), value.toString());
}
```
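The persist-then-reload pattern has a practical consequence. Once `/{jobName}/config` exists, a restarted job keeps using the configuration stored in ZK unless `overwrite` is set.

The sketch below models that with a `HashMap` standing in for ZooKeeper. `ConfigPersistSketch` and its methods are hypothetical and only mirror the branching in `persist()` and `updateJobConfiguration()`.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical in-memory stand-in for the registry center; not the elastic-job API. */
final class ConfigPersistSketch {

    private final Map<String, String> zk = new HashMap<>();

    /** Mirrors persist(): write only if the node is missing or overwrite is set. */
    void persist(final String jobName, final String configJson, final boolean overwrite) {
        String path = "/" + jobName + "/config";
        if (!zk.containsKey(path) || overwrite) {
            zk.put(path, configJson);
        }
    }

    /** Mirrors updateJobConfiguration(): persist, then return what "ZK" actually holds. */
    String updateJobConfiguration(final String jobName, final String configJson, final boolean overwrite) {
        persist(jobName, configJson, overwrite);
        return zk.get("/" + jobName + "/config");
    }

    public static void main(final String[] args) {
        ConfigPersistSketch s = new ConfigPersistSketch();
        System.out.println(s.updateJobConfiguration("myJob", "{\"cron\":\"0/5 * * * * ?\"}", false));
        // Second start with a different local config but overwrite=false: the stored copy wins
        System.out.println(s.updateJobConfiguration("myJob", "{\"cron\":\"0/10 * * * * ?\"}", false));
    }
}
```

In other words, with `overwrite=false` the ZK copy is the source of truth after the first start. This is presumably what lets the configuration be edited centrally without being clobbered by each restart.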

When the job registers its startup information, quite a few nodes are created:

```java
// Called from JobScheduler.init()
schedulerFacade.registerStartUpInfo(!liteJobConfigFromRegCenter.isDisabled());

public void registerStartUpInfo(final boolean enabled) {
    listenerManager.startAllListeners();
    // Creates /{jobName}/leader/election/latch and /{jobName}/leader/election/instance
    leaderService.electLeader();
    // Creates /{jobName}/servers/{serverIp}
    serverService.persistOnline(enabled);
    // Creates /{jobName}/instances/{instanceId}
    instanceService.persistOnline();
    // Creates the resharding flag /{jobName}/leader/sharding/necessary
    shardingService.setReshardingFlag();
    monitorService.listen();
    if (!reconcileService.isRunning()) {
        reconcileService.startAsync();
    }
}
```
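The nodes created here do not all have the same lifetime. Based on my reading of the 2.x source:

- `leader/election/instance` and `instances/{instanceId}` are ephemeral.
- `config`, `servers/{serverIp}` and the resharding flag are persistent.

The sketch below is a hypothetical in-memory model, not a ZooKeeper or Curator API. It shows what is left once a job process's session expires. The Curator latch nodes are left out because Curator manages them itself.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

/**
 * Hypothetical in-memory model of ZK node lifetimes (not a ZK or Curator API):
 * ephemeral nodes are owned by a session and vanish when that session closes.
 */
final class NodeLifetimeSketch {

    /** path -> owning session id, or null for a persistent node */
    private final Map<String, String> nodes = new TreeMap<>();

    void persistent(final String path) { nodes.put(path, null); }

    void ephemeral(final String path, final String sessionId) { nodes.put(path, sessionId); }

    /** Simulates a session expiring, e.g. because the job process crashed. */
    void closeSession(final String sessionId) {
        nodes.values().removeIf(sessionId::equals);
    }

    Set<String> paths() { return nodes.keySet(); }

    /** Registers the nodes discussed above, with the lifetimes assumed in the lead-in. */
    static NodeLifetimeSketch startUp(final String session) {
        NodeLifetimeSketch zk = new NodeLifetimeSketch();
        zk.persistent("/myJob/config");
        zk.ephemeral("/myJob/leader/election/instance", session);
        zk.persistent("/myJob/servers/192.168.1.10");
        zk.ephemeral("/myJob/instances/192.168.1.10@-@1234", session);
        zk.persistent("/myJob/leader/sharding/necessary");
        return zk;
    }

    public static void main(final String[] args) {
        NodeLifetimeSketch zk = startUp("session-1");
        zk.closeSession("session-1");
        zk.paths().forEach(System.out::println); // only the persistent nodes remain
    }
}
```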

```java
public void electLeader() {
    log.debug("Elect a new leader now.");
    // Elect the leader; the latch node is LeaderNode.LATCH = /{jobName}/leader/election/latch
    jobNodeStorage.executeInLeader(LeaderNode.LATCH, new LeaderElectionExecutionCallback());
    log.debug("Leader election completed.");
}

public void executeInLeader(final String latchNode, final LeaderExecutionCallback callback) {
    // Curator LeaderLatch: await() blocks until this instance holds the latch
    try (LeaderLatch latch = new LeaderLatch(getClient(), jobNodePath.getFullPath(latchNode))) {
        latch.start();
        latch.await();
        // Callback: register the leader node
        callback.execute();
    //CHECKSTYLE:OFF
    } catch (final Exception ex) {
    //CHECKSTYLE:ON
        handleException(ex);
    }
}

// Executed once this instance has acquired the latch
@RequiredArgsConstructor
class LeaderElectionExecutionCallback implements LeaderExecutionCallback {

    @Override
    public void execute() {
        if (!hasLeader()) {
            // /{jobName}/leader/election/instance is created here (ephemeral), holding this instance's ID
            jobNodeStorage.fillEphemeralJobNode(LeaderNode.INSTANCE, JobRegistry.getInstance().getJobInstance(jobName).getJobInstanceId());
        }
    }
}
```
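The election pairs a latch with a check-and-create. Only the latch holder runs the callback, and it writes the leader node only if none exists yet, so instances that acquire the latch later do nothing.

The sketch below models that pattern inside a single JVM. A `ReentrantLock` stands in for Curator's `LeaderLatch`, and a field stands in for the ephemeral instance node. `LeaderElectionSketch` is hypothetical and is not how Curator coordinates leadership across processes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;

/** Hypothetical single-JVM model of the "latch, then check-and-create" pattern in electLeader(). */
final class LeaderElectionSketch {

    private final ReentrantLock latch = new ReentrantLock();
    private volatile String leaderInstance; // null means "no leader node yet"

    void electLeader(final String instanceId) {
        latch.lock(); // stands in for latch.start(); latch.await();
        try {
            if (leaderInstance == null) { // stands in for !hasLeader()
                leaderInstance = instanceId; // stands in for fillEphemeralJobNode(LeaderNode.INSTANCE, ...)
            }
        } finally {
            latch.unlock(); // stands in for closing the LeaderLatch (try-with-resources)
        }
    }

    String leader() { return leaderInstance; }

    public static void main(final String[] args) throws InterruptedException {
        LeaderElectionSketch election = new LeaderElectionSketch();
        List<Thread> threads = new ArrayList<>();
        for (int i = 0; i < 3; i++) {
            String id = "10.0.0." + i + "@-@100" + i;
            Thread t = new Thread(() -> election.electLeader(id));
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            t.join();
        }
        // Exactly one of the three IDs, whichever thread got the lock first
        System.out.println("leader = " + election.leader());
    }
}
```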

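Now the execution path. Its most important step is obtaining the sharding contexts. Before building them, the executor checks whether resharding is needed and, if so, recomputes the sharding assignment. All of the sharding logic lives here.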

```java
// AbstractElasticJobExecutor: obtain the sharding contexts
ShardingContexts shardingContexts = jobFacade.getShardingContexts();

public ShardingContexts getShardingContexts() {
    boolean isFailover = configService.load(true).isFailover();
    if (isFailover) {
        // Failover items assigned to this instance take priority
        List<Integer> failoverShardingItems = failoverService.getLocalFailoverItems();
        if (!failoverShardingItems.isEmpty()) {
            return executionContextService.getJobShardingContext(failoverShardingItems);
        }
    }
    // Reshard first if resharding is needed
    shardingService.shardingIfNecessary();
    List<Integer> shardingItems = shardingService.getLocalShardingItems();
    if (isFailover) {
        // Drop local items that are being failed over
        shardingItems.removeAll(failoverService.getLocalTakeOffItems());
    }
    // Drop disabled items
    shardingItems.removeAll(executionService.getDisabledItems(shardingItems));
    return executionContextService.getJobShardingContext(shardingItems);
}
```
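The item selection in `getShardingContexts()` can be restated as a pure function, which makes the precedence easier to see:

1. Local failover items win outright.
2. Otherwise the instance takes its own sharding items.
3. It then drops any items being failed over and any disabled items.

`ItemSelectionSketch.selectItems` is a hypothetical name. The lists passed in stand for the values the real services read from ZK.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical pure-function restatement of the item selection in getShardingContexts(). */
final class ItemSelectionSketch {

    static List<Integer> selectItems(final boolean failoverEnabled,
                                     final List<Integer> localFailoverItems,
                                     final List<Integer> localShardingItems,
                                     final List<Integer> localTakeOffItems,
                                     final List<Integer> disabledItems) {
        if (failoverEnabled && !localFailoverItems.isEmpty()) {
            // Failover items assigned to this instance are run on their own
            return new ArrayList<>(localFailoverItems);
        }
        List<Integer> items = new ArrayList<>(localShardingItems);
        if (failoverEnabled) {
            items.removeAll(localTakeOffItems); // items currently being failed over
        }
        items.removeAll(disabledItems);
        return items;
    }

    public static void main(final String[] args) {
        // Items 0..2 assigned, item 1 being failed over, item 2 disabled
        System.out.println(selectItems(true, List.of(), List.of(0, 1, 2), List.of(1), List.of(2)));
    }
}
```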

```java
// Sharding logic
public void shardingIfNecessary() {
    List<JobInstance> availableJobInstances = instanceService.getAvailableJobInstances();
    if (!isNeedSharding() || availableJobInstances.isEmpty()) {
        return;
    }
    // Non-leaders do not shard; they block until the leader has finished
    if (!leaderService.isLeaderUntilBlock()) {
        blockUntilShardingCompleted();
        return;
    }
    // Wait for items that are still running to finish
    waitingOtherJobCompleted();
    LiteJobConfiguration liteJobConfig = configService.load(false);
    int shardingTotalCount = liteJobConfig.getTypeConfig().getCoreConfig().getShardingTotalCount();
    log.debug("Job '{}' sharding begin.", jobName);
    // Before sharding, create the "processing" node to mark sharding as in progress;
    // other instances wait until it is removed.
    // /{jobName}/leader/sharding/processing (ephemeral)
    jobNodeStorage.fillEphemeralJobNode(ShardingNode.PROCESSING, "");
    // Reset the sharding item nodes
    resetShardingInfo(shardingTotalCount);
    // Look up the sharding strategy
    JobShardingStrategy jobShardingStrategy = JobShardingStrategyFactory.getStrategy(liteJobConfig.getJobShardingStrategyClass());
    // Compute the assignment and persist it in a single ZK transaction
    jobNodeStorage.executeInTransaction(new PersistShardingInfoTransactionExecutionCallback(jobShardingStrategy.sharding(availableJobInstances, jobName, shardingTotalCount)));
    log.debug("Job '{}' sharding complete.", jobName);
}
```
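`jobShardingStrategy.sharding(...)` returns a map from instance to item list. As far as I know, when no strategy class is configured, elastic-job falls back to `AverageAllocationJobShardingStrategy`. That strategy hands each instance an even, contiguous block of items and appends any leftover items to the first instances.

The sketch below reproduces that behaviour with plain strings in place of `JobInstance`. `AverageAllocationSketch` is my own name, not the library class.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of average allocation; instances are plain strings, not JobInstance. */
final class AverageAllocationSketch {

    static Map<String, List<Integer>> sharding(final List<String> instances, final int shardingTotalCount) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        if (instances.isEmpty()) {
            return result;
        }
        int n = instances.size();
        int perInstance = shardingTotalCount / n;
        // Each instance gets a contiguous block of perInstance items
        for (int i = 0; i < n; i++) {
            List<Integer> items = new ArrayList<>();
            for (int j = i * perInstance; j < (i + 1) * perInstance; j++) {
                items.add(j);
            }
            result.put(instances.get(i), items);
        }
        // Leftover items (total % n) are appended one each to the first instances
        int remainder = shardingTotalCount % n;
        for (int i = 0; i < remainder; i++) {
            result.get(instances.get(i)).add(perInstance * n + i);
        }
        return result;
    }

    public static void main(final String[] args) {
        System.out.println(sharding(List.of("a", "b", "c"), 8));
    }
}
```

With three instances and 8 items this yields `[0, 1, 6]`, `[2, 3, 7]` and `[4, 5]`.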

```java
/**
 * Reset the sharding info.
 */
private void resetShardingInfo(final int shardingTotalCount) {
    for (int i = 0; i < shardingTotalCount; i++) {
        // Delete the old assignment: /{jobName}/sharding/{item}/instance
        jobNodeStorage.removeJobNodeIfExisted(ShardingNode.getInstanceNode(i));
        // Make sure the item node exists: /{jobName}/sharding/{item}
        jobNodeStorage.createJobNodeIfNeeded(ShardingNode.ROOT + "/" + i);
    }
    int actualShardingTotalCount = jobNodeStorage.getJobNodeChildrenKeys(ShardingNode.ROOT).size();
    if (actualShardingTotalCount > shardingTotalCount) {
        for (int i = shardingTotalCount; i < actualShardingTotalCount; i++) {
            // Remove surplus item nodes left over from a larger shardingTotalCount
            jobNodeStorage.removeJobNodeIfExisted(ShardingNode.ROOT + "/" + i);
        }
    }
}
```
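`resetShardingInfo()` is easier to follow as a list of operations. This hypothetical sketch (`ResetShardingSketch`) returns those operations as strings instead of executing them.

It assumes the existing item nodes are numbered contiguously from 0. The surplus-deletion loop in the original, which works from the child count, relies on that as well.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch listing the ZK operations resetShardingInfo() performs. */
final class ResetShardingSketch {

    static List<String> plan(final int shardingTotalCount, final int existingItemNodes) {
        List<String> ops = new ArrayList<>();
        for (int i = 0; i < shardingTotalCount; i++) {
            ops.add("delete-if-exists sharding/" + i + "/instance");
            ops.add("create-if-needed sharding/" + i);
        }
        // After the loop, sharding/ has max(total, existing) children (assuming contiguous numbering)
        int actual = Math.max(shardingTotalCount, existingItemNodes);
        for (int i = shardingTotalCount; i < actual; i++) {
            ops.add("delete-if-exists sharding/" + i);
        }
        return ops;
    }

    public static void main(final String[] args) {
        // Shrinking from 4 items to 2
        plan(2, 4).forEach(System.out::println);
    }
}
```

For example, shrinking from 4 items to 2 deletes `sharding/2` and `sharding/3` after re-creating items 0 and 1.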

```java
/** Persist the sharding result. */
@RequiredArgsConstructor
class PersistShardingInfoTransactionExecutionCallback implements TransactionExecutionCallback {

    private final Map<JobInstance, List<Integer>> shardingResults;

    @Override
    public void execute(final CuratorTransactionFinal curatorTransactionFinal) throws Exception {
        for (Map.Entry<JobInstance, List<Integer>> entry : shardingResults.entrySet()) {
            for (int shardingItem : entry.getValue()) {
                // For each assigned item, record the owning instance:
                // /{jobName}/sharding/{item}/instance = {instanceId}
                curatorTransactionFinal.create().forPath(jobNodePath.getFullPath(ShardingNode.getInstanceNode(shardingItem)), entry.getKey().getJobInstanceId().getBytes()).and();
            }
        }
        // Delete the sharding flags:
        // /{jobName}/leader/sharding/necessary
        // /{jobName}/leader/sharding/processing
        curatorTransactionFinal.delete().forPath(jobNodePath.getFullPath(ShardingNode.NECESSARY)).and();
        curatorTransactionFinal.delete().forPath(jobNodePath.getFullPath(ShardingNode.PROCESSING)).and();
    }
}
```
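The transaction writes one `sharding/{item}/instance` node per assigned item and then removes the two sharding flags. All of this runs as a single ZooKeeper multi-operation, so other instances never observe a half-written assignment.

The hypothetical sketch below (`ShardingTransactionSketch`) only builds the list of operations for a given result map. The flag paths follow `ShardingNode.NECESSARY` and `ShardingNode.PROCESSING`, which in the 2.x source I have read live under `leader/sharding/`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical sketch of the operations inside the sharding transaction (strings, not Curator calls). */
final class ShardingTransactionSketch {

    static List<String> transactionOps(final Map<String, List<Integer>> shardingResults) {
        List<String> ops = new ArrayList<>();
        for (Map.Entry<String, List<Integer>> entry : shardingResults.entrySet()) {
            for (int item : entry.getValue()) {
                // The node value is the owning instance ID
                ops.add("create sharding/" + item + "/instance = " + entry.getKey());
            }
        }
        // Assumed flag locations (ShardingNode.NECESSARY / PROCESSING in 2.x)
        ops.add("delete leader/sharding/necessary");
        ops.add("delete leader/sharding/processing");
        return ops;
    }

    public static void main(final String[] args) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        result.put("10.0.0.1@-@100", List.of(0, 1));
        result.put("10.0.0.2@-@200", List.of(2));
        transactionOps(result).forEach(System.out::println);
    }
}
```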

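Once the sharding contexts are available, the executor checks whether any of the assigned sharding items is still running. If one is, all of the items are marked as misfired.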

```java
jobFacade.misfireIfRunning(shardingContexts.getShardingItemParameters().keySet());

public boolean misfireIfRunning(final Collection<Integer> shardingItems) {
    return executionService.misfireIfHasRunningItems(shardingItems);
}

/**
 * Set the misfire flag if any of the given sharding items is still running.
 *
 * @param items sharding items to flag as misfired
 * @return whether this execution was missed
 */
public boolean misfireIfHasRunningItems(final Collection<Integer> items) {
    if (!hasRunningItems(items)) {
        return false;
    }
    setMisfire(items);
    return true;
}

/**
 * Set the misfire flag.
 *
 * @param items sharding items to flag as misfired
 */
public void setMisfire(final Collection<Integer> items) {
    for (int each : items) {
        // /{jobName}/sharding/{item}/misfire
        jobNodeStorage.createJobNodeIfNeeded(ShardingNode.getMisfireNode(each));
    }
}
```
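`misfireIfHasRunningItems` flags every requested item as soon as any one of them is running. Running nodes are only written when `monitorExecution` is enabled (see `registerJobBegin` below), so misfire detection depends on that setting too.

Here is a hypothetical in-memory sketch of the check (`MisfireSketch`), with a `Set` of relative paths standing in for ZK.

```java
import java.util.Collection;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical in-memory sketch of misfireIfHasRunningItems(); a Set of paths stands in for ZK. */
final class MisfireSketch {

    final Set<String> nodes = new HashSet<>();

    boolean misfireIfHasRunningItems(final Collection<Integer> items) {
        boolean anyRunning = items.stream().anyMatch(i -> nodes.contains("sharding/" + i + "/running"));
        if (!anyRunning) {
            return false;
        }
        // If ANY item is still running, every requested item gets a misfire flag
        for (int each : items) {
            nodes.add("sharding/" + each + "/misfire");
        }
        return true;
    }

    public static void main(final String[] args) {
        MisfireSketch zk = new MisfireSketch();
        zk.nodes.add("sharding/1/running");
        System.out.println(zk.misfireIfHasRunningItems(List.of(0, 1)) + " " + zk.nodes);
    }
}
```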

After the misfire check, the job itself runs. When execution starts, each sharding item is marked as running; when execution finishes, the running nodes are deleted.

```java
private void execute(final ShardingContexts shardingContexts, final JobExecutionEvent.ExecutionSource executionSource) {
    if (shardingContexts.getShardingItemParameters().isEmpty()) {
        if (shardingContexts.isAllowSendJobEvent()) {
            jobFacade.postJobStatusTraceEvent(shardingContexts.getTaskId(), State.TASK_FINISHED, String.format("Sharding item for job '%s' is empty.", jobName));
        }
        return;
    }
    // Mark the items as running: /{jobName}/sharding/{item}/running
    jobFacade.registerJobBegin(shardingContexts);
    String taskId = shardingContexts.getTaskId();
    if (shardingContexts.isAllowSendJobEvent()) {
        jobFacade.postJobStatusTraceEvent(taskId, State.TASK_RUNNING, "");
    }
    try {
        // Run the job logic for the assigned sharding items
        process(shardingContexts, executionSource);
    } finally {
        // TODO: consider adding a job-failed state and how to handle the overall failure loop
        // Delete the running nodes: /{jobName}/sharding/{item}/running
        jobFacade.registerJobCompleted(shardingContexts);
        if (itemErrorMessages.isEmpty()) {
            if (shardingContexts.isAllowSendJobEvent()) {
                jobFacade.postJobStatusTraceEvent(taskId, State.TASK_FINISHED, "");
            }
        } else {
            if (shardingContexts.isAllowSendJobEvent()) {
                jobFacade.postJobStatusTraceEvent(taskId, State.TASK_ERROR, itemErrorMessages.toString());
            }
        }
    }
}
```

```java
/**
 * Register job start information.
 *
 * @param shardingContexts sharding contexts
 */
public void registerJobBegin(final ShardingContexts shardingContexts) {
    JobRegistry.getInstance().setJobRunning(jobName, true);
    // Running nodes are only written when monitorExecution is enabled
    if (!configService.load(true).isMonitorExecution()) {
        return;
    }
    for (int each : shardingContexts.getShardingItemParameters().keySet()) {
        // Mark the item as running: /{jobName}/sharding/{item}/running (ephemeral)
        jobNodeStorage.fillEphemeralJobNode(ShardingNode.getRunningNode(each), "");
    }
}

/**
 * Register job completion information.
 *
 * @param shardingContexts sharding contexts
 */
public void registerJobCompleted(final ShardingContexts shardingContexts) {
    JobRegistry.getInstance().setJobRunning(jobName, false);
    if (!configService.load(true).isMonitorExecution()) {
        return;
    }
    for (int each : shardingContexts.getShardingItemParameters().keySet()) {
        // Delete the running node: /{jobName}/sharding/{item}/running
        jobNodeStorage.removeJobNodeIfExisted(ShardingNode.getRunningNode(each));
    }
}
```
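Putting `registerJobBegin` and `registerJobCompleted` together: the running nodes bracket the job logic. Because the deletion sits in a `finally` block, they are removed even if the job throws.

The sketch below is a hypothetical in-memory model of that lifecycle (`RunningNodeSketch` is my own name). It omits event posting and takes `monitorExecution` as a constructor argument rather than loading it from `/{jobName}/config`.

```java
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical in-memory model of the running-node lifecycle around execute(). */
final class RunningNodeSketch {

    final Set<String> nodes = new HashSet<>();
    private final boolean monitorExecution;

    RunningNodeSketch(final boolean monitorExecution) {
        this.monitorExecution = monitorExecution;
    }

    void execute(final List<Integer> items, final Runnable jobLogic) {
        if (items.isEmpty()) {
            return;
        }
        if (monitorExecution) {
            items.forEach(i -> nodes.add("sharding/" + i + "/running")); // registerJobBegin
        }
        try {
            jobLogic.run();
        } finally {
            // Runs even if jobLogic throws, so running nodes are not left behind
            if (monitorExecution) {
                items.forEach(i -> nodes.remove("sharding/" + i + "/running")); // registerJobCompleted
            }
        }
    }

    public static void main(final String[] args) {
        RunningNodeSketch zk = new RunningNodeSketch(true);
        zk.execute(List.of(0, 1), () -> System.out.println("during: " + zk.nodes));
        System.out.println("after: " + zk.nodes);
    }
}
```

In the real code the running nodes are also ephemeral, so a crashed process does not leave them behind either.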