Focal loss: Keras implementation; tf.equal, tf.ones_like, tf.zeros_like, tf.where

Focal loss

Principle

Derivation (by another author)

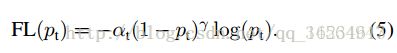

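The basic idea of focal loss: examples that are easy to classify (high predicted probability for the true class) get small gradient updates, because 1 - pt is close to 0, while examples that are hard to classify (low probability) get large gradient updates.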
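A small plain-Python illustration of the factor (1 - pt)^gamma with gamma = 2 (not from the original post; the helper names `modulating_factor` and `focal_term` are hypothetical). It compares the focal term with ordinary cross-entropy for an easy example (pt = 0.9), a borderline one (pt = 0.5) and a hard one (pt = 0.1):

```python
import math

def modulating_factor(pt, gamma=2.0):
    """Weight (1 - pt)^gamma that focal loss multiplies into the cross-entropy term."""
    return (1.0 - pt) ** gamma

def focal_term(pt, gamma=2.0):
    """Per-example focal loss without alpha: -(1 - pt)^gamma * log(pt)."""
    return -modulating_factor(pt, gamma) * math.log(pt)

for pt in (0.9, 0.5, 0.1):
    ce = -math.log(pt)  # plain cross-entropy for the true class
    fl = focal_term(pt)
    print(f"pt={pt}: CE={ce:.4f}  FL={fl:.4f}  ratio={fl / ce:.2f}")
# pt=0.9: CE=0.1054  FL=0.0011  ratio=0.01
# pt=0.5: CE=0.6931  FL=0.1733  ratio=0.25
# pt=0.1: CE=2.3026  FL=1.8651  ratio=0.81
```

The easy example keeps only 1% of its cross-entropy loss while the hard one keeps 81%, which is the down-weighting of easy examples described above.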

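Here pt is the probability the model assigns to the true class. (Binary classification can also be viewed as a multi-class problem; the difference is that a binary model only predicts the probability of class 1, while a multi-class model predicts a probability for every class. In the binary case, pt is therefore the probability of belonging to class 1 or class 0, whichever is the true label.) alpha addresses class imbalance, and gamma addresses hard-to-classify examples. The code is written for TensorFlow and has not been fully tested; please test it yourself before using it.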
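The derivation image is not reproduced as text. For reference, the standard focal loss in the notation above, with $p$ the predicted probability of class 1 and $y$ the label, is:

$$
p_t=\begin{cases}p, & y=1\\ 1-p, & y=0\end{cases}
\qquad
\alpha_t=\begin{cases}\alpha, & y=1\\ 1-\alpha, & y=0\end{cases}
$$

$$
\mathrm{FL}(p_t)=-\alpha_t\,(1-p_t)^{\gamma}\,\log(p_t)
$$

With $\gamma=0$ and $\alpha_t=1$ this reduces to ordinary cross-entropy. For $y=0$ the term becomes $-(1-\alpha)\,p^{\gamma}\log(1-p)$, which is the `pt_0` term in the binary code with alpha.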

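Usage: `model.compile(loss=[focal_loss(alpha=0.25, gamma=2)])` (this call is for the variant that takes alpha; the variants without alpha accept only gamma).

Binary classification, without alpha: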

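```python
import tensorflow as tf
from keras import backend as K

def focal_loss(gamma=2.):
    def focal_loss_fixed(y_true, y_pred):
        # pt = p where the label is 1, and 1 - p where the label is 0
        # (y_pred is a symbolic tensor, so 1 - p is computed in TensorFlow, not NumPy)
        pt_1 = tf.where(tf.equal(y_true, 1), y_pred, 1. - y_pred)
        return -K.sum(K.pow(1. - pt_1, gamma) * K.log(pt_1))
    return focal_loss_fixed
```

Binary classification, with alpha: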

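```python
import tensorflow as tf
from keras import backend as K

def focal_loss(gamma=2., alpha=0.25):
    def focal_loss_fixed(y_true, y_pred):
        # Positives keep their predicted p; others become 1 so their log term is 0
        pt_1 = tf.where(tf.equal(y_true, 1), y_pred, tf.ones_like(y_pred))
        # Negatives keep their predicted p; others become 0 so their term is 0
        pt_0 = tf.where(tf.equal(y_true, 0), y_pred, tf.zeros_like(y_pred))
        return (-K.sum(alpha * K.pow(1. - pt_1, gamma) * K.log(pt_1))
                - K.sum((1 - alpha) * K.pow(pt_0, gamma) * K.log(1. - pt_0)))
    return focal_loss_fixed
```

Multi-class classification, without alpha: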

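```python
import tensorflow as tf
from keras import backend as K

def focal_loss(gamma=2.):
    def focal_loss_fixed(y_true, y_pred):
        # y_true is one-hot: keep the predicted probability of the true class
        # and set the other entries to 1, so that log(1) = 0 removes them
        pt_1 = tf.where(tf.equal(y_true, 1), y_pred, tf.ones_like(y_pred))
        return -K.sum(K.pow(1. - pt_1, gamma) * K.log(pt_1))
    return focal_loss_fixed
```

TensorFlow API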

tf.equal:

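tf.equal(A, B) compares two matrices or vectors element by element, returning True where the elements are equal and False otherwise; for inputs of the same shape, the result has the same shape as A.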

```python
import tensorflow as tf

A = [[1, 3, 4, 5, 6]]
B = [[1, 3, 4, 3, 2]]

with tf.Session() as sess:  # TensorFlow 1.x API
    print(sess.run(tf.equal(A, B)))
    # [[ True  True  True False False]]
```

tf.ones_like | tf.zeros_like:

Creates a new tensor with the same shape as the given tensor, with every element set to 1 (tf.ones_like) or 0 (tf.zeros_like).

```python
import tensorflow as tf

tensor = [[1, 2, 3], [4, 5, 6]]
x = tf.ones_like(tensor)

with tf.Session() as sess:  # TensorFlow 1.x API
    print(sess.run(x))
# [[1 1 1]
#  [1 1 1]]
```

tf.where:

```python
tf.where(
    condition,
    x=None,
    y=None,
    name=None
)
```

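For each position, tf.where returns the element of x if the corresponding element of condition is True, and the element of y if it is False. For example, `tf.where(tf.equal(y_true, 1), y_pred, 1. - y_pred)` yields p at positions labelled 1 and 1 - p everywhere else.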
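The same element-wise selection can be checked without a TensorFlow session by swapping in NumPy's `np.where`, which has the same three-argument semantics as `tf.where(condition, x, y)`. This is an illustrative sketch, not part of the original code, using the 1-D binary test data:

```python
import numpy as np

# Binary labels and predicted probabilities of class 1
y_true = np.array([1, 0, 1])
y_pred = np.array([0.6, 0.3, 0.7])

# np.where(condition, x, y) takes x[i] where condition[i] is True, else y[i]
pt_1 = np.where(y_true == 1, y_pred, np.ones_like(y_pred))   # positives keep p, others -> 1
pt_0 = np.where(y_true == 0, y_pred, np.zeros_like(y_pred))  # negatives keep p, others -> 0
pt = np.where(y_true == 1, y_pred, 1.0 - y_pred)             # p for label 1, 1 - p for label 0

print(pt_1, pt_0, pt)
```

`pt_1` and `pt_0` are exactly the intermediate tensors built in the binary loss with alpha, and `pt` is the one built in the variant without alpha.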

Test data:

```python
import tensorflow as tf
from keras import backend as K

gamma = 2.
alpha = 0.25

y_true = [1, 0, 1]
y_pred = [0.6, 0.3, 0.7]

pt_1 = tf.where(tf.equal(y_true, 1), y_pred, tf.ones_like(y_pred))
pt_0 = tf.where(tf.equal(y_true, 0), y_pred, tf.zeros_like(y_pred))

with tf.Session() as sess:  # TensorFlow 1.x API
    print(sess.run(-K.sum(alpha * K.pow(1. - pt_1, gamma) * K.log(pt_1))
                   - K.sum((1 - alpha) * K.pow(pt_0, gamma) * K.log(1. - pt_0))))
# 0.052533768
```

```python
import tensorflow as tf
from keras import backend as K

gamma = 2.
alpha = 0.25

y_true = [[1, 0, 1], [1, 0, 1]]
y_pred = [[0.6, 0.3, 0.7], [0.6, 0.3, 0.7]]

pt_1 = tf.where(tf.equal(y_true, 1), y_pred, tf.ones_like(y_pred))
pt_0 = tf.where(tf.equal(y_true, 0), y_pred, tf.zeros_like(y_pred))

with tf.Session() as sess:  # TensorFlow 1.x API
    print(sess.run(-K.sum(alpha * K.pow(1. - pt_1, gamma) * K.log(pt_1))
                   - K.sum((1 - alpha) * K.pow(pt_0, gamma) * K.log(1. - pt_0))))
# 0.105067536
```

```python
import tensorflow as tf
from keras import backend as K

gamma = 2.

y_true = [[1, 0, 0], [0, 0, 1]]
y_pred = [[0.6, 0.3, 0.7], [0.6, 0.3, 0.7]]

pt_1 = tf.where(tf.equal(y_true, 1), y_pred, tf.ones_like(y_pred))
# pt_0 is not needed for the multi-class loss without alpha:
# pt_0 = tf.where(tf.equal(y_true, 0), y_pred, tf.zeros_like(y_pred))

with tf.Session() as sess:  # TensorFlow 1.x API
    print(sess.run(-K.sum(K.pow(1. - pt_1, gamma) * K.log(pt_1))))
# 0.11383283
```
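As a cross-check that needs neither a TensorFlow session nor Keras, the losses can be mirrored in NumPy. This is a sketch with hypothetical function names; it computes in float64, so it agrees with the float32 TensorFlow outputs above only to about 7 significant digits:

```python
import numpy as np

def binary_focal_loss_no_alpha(y_true, y_pred, gamma=2.0):
    """Mirror of the binary loss without alpha: pt = p for label 1, 1 - p for label 0."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    pt = np.where(y_true == 1, y_pred, 1.0 - y_pred)
    return -np.sum((1.0 - pt) ** gamma * np.log(pt))

def binary_focal_loss(y_true, y_pred, gamma=2.0, alpha=0.25):
    """Mirror of the binary loss with alpha, summed over all elements like K.sum."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    pt_1 = np.where(y_true == 1, y_pred, 1.0)  # non-positives -> 1, and log(1) = 0
    pt_0 = np.where(y_true == 0, y_pred, 0.0)  # non-negatives -> 0, and 0 ** gamma = 0
    return (-np.sum(alpha * (1.0 - pt_1) ** gamma * np.log(pt_1))
            - np.sum((1 - alpha) * pt_0 ** gamma * np.log(1.0 - pt_0)))

def multiclass_focal_loss(y_true, y_pred, gamma=2.0):
    """Mirror of the multi-class loss without alpha; y_true is one-hot."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred, dtype=np.float64)
    pt_1 = np.where(y_true == 1, y_pred, 1.0)  # keep only the true-class probability
    return -np.sum((1.0 - pt_1) ** gamma * np.log(pt_1))

pred = [[0.6, 0.3, 0.7], [0.6, 0.3, 0.7]]
print(binary_focal_loss([1, 0, 1], [0.6, 0.3, 0.7]))        # about 0.0525338
print(binary_focal_loss([[1, 0, 1], [1, 0, 1]], pred))      # about 0.1050675
print(multiclass_focal_loss([[1, 0, 0], [0, 0, 1]], pred))  # about 0.1138328
```

With alpha = 0.5 both terms get the same weight, so `binary_focal_loss(..., alpha=0.5)` is exactly half of `binary_focal_loss_no_alpha(...)`, which ties the two binary variants together.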