TensorFlow 2.0: Saving Model Structure and Parameters

Notes taken from the course 《Google老师亲授 TensorFlow2.0 入门到进阶》

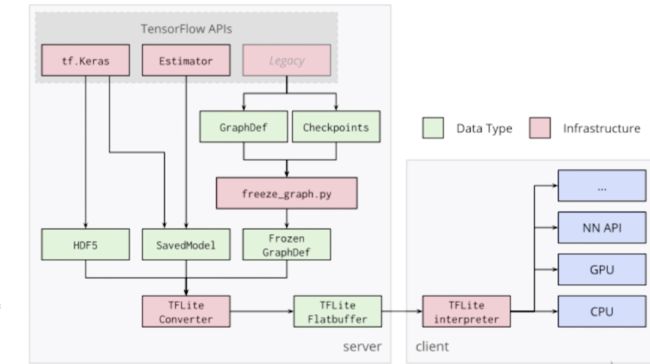

1. Model saving and deployment workflow

2. Saving the model

(1) Saving as HDF5

Adjust the argument list of the callbacks directly, as follows:

import os
import tensorflow as tf
from tensorflow import keras

# x_train_scaled, y_train, x_valid_scaled, y_valid, x_test_scaled and y_test
# are assumed to have been prepared beforehand.

# tf.keras.models.Sequential()
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(300, activation='relu'),
    keras.layers.Dense(100, activation='relu'),
    keras.layers.Dense(10, activation='softmax')
])
model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])

logdir = './graph_def_and_weights'
if not os.path.exists(logdir):
    os.mkdir(logdir)
output_model_file = os.path.join(logdir, "fashion_mnist_model.h5")

# Adjust the callback arguments to choose what gets saved
callbacks = [
    keras.callbacks.TensorBoard(logdir),
    # save_weights_only=False stores the structure together with the weights;
    # save_best_only=True keeps only the best checkpoint
    keras.callbacks.ModelCheckpoint(output_model_file,
                                    save_best_only=True,
                                    save_weights_only=False),
    keras.callbacks.EarlyStopping(patience=5, min_delta=1e-3),
]

# Pass the callbacks argument to fit()
history = model.fit(x_train_scaled, y_train, epochs=10,
                    validation_data=(x_valid_scaled, y_valid),
                    callbacks=callbacks)
model.evaluate(x_test_scaled, y_test, verbose=0)

# Alternatively, save only the weights and biases
model.save_weights(os.path.join(logdir, "fashion_mnist_weights.h5"))
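The EarlyStopping arguments used above (patience=5, min_delta=1e-3) can be hard to picture. The following is a simplified, pure-Python model of the stopping rule, written only for illustration: the helper name early_stop_epoch and the loss values are hypothetical, and the real Keras callback differs in its details between versions.

```python
def early_stop_epoch(val_losses, patience=5, min_delta=1e-3):
    """Return the epoch index at which training would stop, or None.

    Simplified rule: an epoch counts as an improvement only if the loss
    drops by more than min_delta below the best loss seen so far.
    """
    best = float("inf")
    wait = 0  # number of consecutive epochs without improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None


# Hypothetical validation losses: real progress for three epochs,
# then only changes smaller than min_delta.
val_losses = [0.50, 0.40, 0.39, 0.3895, 0.3896, 0.3894, 0.3897, 0.3895, 0.30]
stopped_at = early_stop_epoch(val_losses, patience=5, min_delta=1e-3)
print(stopped_at)  # 7
```

In this sketch the last value (0.30) is never reached, because five epochs in a row failed to improve on 0.39 by more than 0.001.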

To load saved weights into a model, first build a model identical to the one used for training:

# tf.keras.models.Sequential()
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(300, activation='relu'),
    keras.layers.Dense(100, activation='relu'),
    keras.layers.Dense(10, activation='softmax')
])
model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])

logdir = './graph_def_and_weights'
if not os.path.exists(logdir):
    os.mkdir(logdir)
output_model_file = os.path.join(logdir, "fashion_mnist_model.h5")

# load_weights() reads the weights stored in the checkpoint file;
# the weights-only file fashion_mnist_weights.h5 can be loaded the same way
model.load_weights(output_model_file)
model.evaluate(x_test_scaled, y_test, verbose=0)
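One way to check that a weights round trip works is to compare predictions before and after loading. The sketch below is self-contained and makes several assumptions that are not in the notes: the helper build_model, the random input data, the temporary directory, and the file name demo.weights.h5 (the ".weights.h5" suffix is chosen because newer Keras releases require it for save_weights; older TensorFlow 2.x releases accept it too).

```python
import os
import tempfile

import numpy as np
from tensorflow import keras


def build_model():
    # Same architecture as in the notes; weights are randomly initialized.
    return keras.models.Sequential([
        keras.layers.Flatten(input_shape=[28, 28]),
        keras.layers.Dense(300, activation='relu'),
        keras.layers.Dense(100, activation='relu'),
        keras.layers.Dense(10, activation='softmax'),
    ])


# Random stand-in data (assumption: the real inputs are 28x28 images).
x = np.random.rand(4, 28, 28).astype("float32")

source_model = build_model()
weights_file = os.path.join(tempfile.mkdtemp(), "demo.weights.h5")
source_model.save_weights(weights_file)

# A second model with identical structure but different initial weights.
restored_model = build_model()
restored_model.load_weights(weights_file)

same_predictions = np.allclose(source_model.predict(x, verbose=0),
                               restored_model.predict(x, verbose=0))
print(same_predictions)
```

If the structures did not match (for example, a different number of units in a layer), load_weights would be expected to fail instead of silently loading.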

(2) Saving as SavedModel

Use tf.saved_model.save() to save the entire model directly.

Save path: "./keras_saved_graph"

# tf.keras.models.Sequential()
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(300, activation='relu'),
    keras.layers.Dense(100, activation='relu'),
    keras.layers.Dense(10, activation='softmax')
])
model.compile(loss="sparse_categorical_crossentropy",
              optimizer="sgd",
              metrics=["accuracy"])
history = model.fit(x_train_scaled, y_train, epochs=10,
                    validation_data=(x_valid_scaled, y_valid))

# Save the model
tf.saved_model.save(model, "./keras_saved_graph")

Inspect the saved contents:

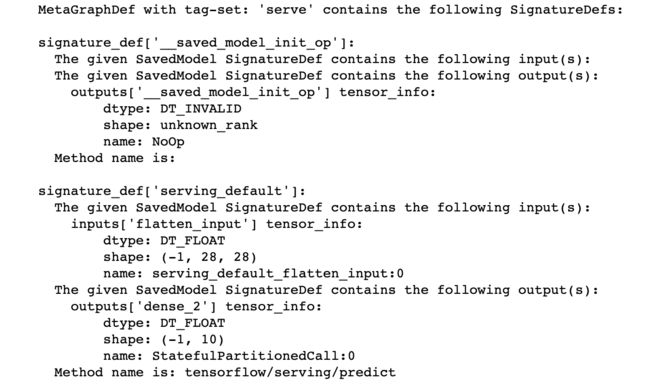

!saved_model_cli show --dir ./keras_saved_graph --all

The output shows the signature information for the model's inputs and outputs.

Load the model with tf.saved_model.load() to verify that it was saved correctly:

loaded_saved_model = tf.saved_model.load('./keras_saved_graph')
print(list(loaded_saved_model.signatures.keys()))
# ['serving_default']

inference = loaded_saved_model.signatures['serving_default']
print(inference.structured_outputs)
# {'dense_2': TensorSpec(shape=(None, 10), dtype=tf.float32, name='dense_2')}

results = inference(tf.constant(x_test_scaled[0:1]))
print(results['dense_2'])
# tf.Tensor([[3.3287105e-07 6.5204258e-05 7.5596186e-06 9.7430329e-06
#             6.4412257e-06 8.6376350e-03 2.2177779e-05 5.3723875e-02
#             4.3917933e-04 9.3708795e-01]], shape=(1, 10), dtype=float32)

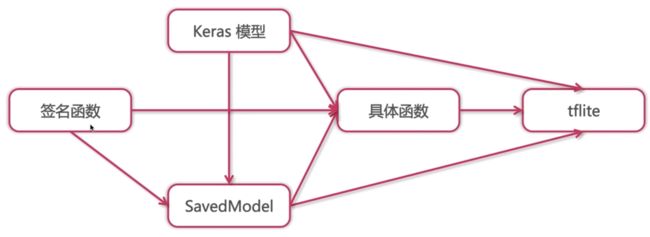

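3. Converting to TFLite

Converting a signature function to a SavedModel and converting an h5 model to a concrete_function are not covered here. This section only discusses how to convert directly from h5 and from SavedModel to a TFLite model.

(1) Converting h5 to a TFLite model

Use tf.lite.TFLiteConverter to convert the h5 model directly to a TFLite model.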

# Load the model
loaded_keras_model = keras.models.load_model(
    './graph_def_and_weights/fashion_mnist_model.h5')

# Convert
keras_to_tflite_converter = tf.lite.TFLiteConverter.from_keras_model(
    loaded_keras_model)
keras_tflite = keras_to_tflite_converter.convert()

# Save
if not os.path.exists('./tflite_models'):
    os.mkdir('./tflite_models')
with open('./tflite_models/keras_tflite', 'wb') as f:
    f.write(keras_tflite)

(2) Converting a SavedModel to a TFLite model

Simply pass in the path of the SavedModel.

The path here is the save directory used by the second saving method above.

saved_model_to_tflite_converter = tf.lite.TFLiteConverter.from_saved_model(
    './keras_saved_graph/')
saved_model_tflite = saved_model_to_tflite_converter.convert()

with open('./tflite_models/saved_model_tflite', 'wb') as f:
    f.write(saved_model_tflite)
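A converted model can be sanity-checked by running it through tf.lite.Interpreter and comparing the result with the original Keras model. The sketch below is a minimal, self-contained illustration under assumptions not in the notes: it uses a small untrained stand-in model and random input instead of the trained Fashion MNIST model, and keeps the converted bytes in memory rather than reading them from ./tflite_models.

```python
import numpy as np
import tensorflow as tf
from tensorflow import keras

# Small untrained stand-in model (assumption: same 28x28 input, 10 outputs).
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(10, activation='softmax'),
])

# Convert in memory, as in the conversion code above.
converter = tf.lite.TFLiteConverter.from_keras_model(model)
tflite_bytes = converter.convert()

# Load the flatbuffer into the TFLite interpreter.
interpreter = tf.lite.Interpreter(model_content=tflite_bytes)
interpreter.allocate_tensors()
input_detail = interpreter.get_input_details()[0]
output_detail = interpreter.get_output_details()[0]

# Run one random sample through both the TFLite and the Keras model.
x = np.random.rand(1, 28, 28).astype(np.float32)
interpreter.set_tensor(input_detail["index"], x)
interpreter.invoke()
tflite_out = interpreter.get_tensor(output_detail["index"])
keras_out = model.predict(x, verbose=0)

print(tflite_out.shape)
print(np.allclose(tflite_out, keras_out, atol=1e-4))
```

To check a file written to disk instead, the interpreter can be created with model_path pointing at that file in place of model_content.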

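4. Quantizing the TFLite model

Quantization means reducing the model's 32-bit precision to 8 bits, which makes the model smaller.

Simply set an optimization on the converter.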
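To make the 32-bit to 8-bit idea concrete, here is a small NumPy sketch of linear (affine) quantization. It only illustrates the general principle: the function names and the sample weights are hypothetical, and the scheme TFLite actually applies differs in its details.

```python
import numpy as np


def quantize(weights):
    """Map float32 values onto the 256 levels of an unsigned 8-bit integer."""
    w_min, w_max = float(weights.min()), float(weights.max())
    # Guard against a constant array, where the range would be zero.
    scale = (w_max - w_min) / 255.0 if w_max > w_min else 1.0
    q = np.round((weights - w_min) / scale).astype(np.uint8)
    return q, scale, w_min


def dequantize(q, scale, w_min):
    """Recover approximate float32 values from the 8-bit codes."""
    return q.astype(np.float32) * scale + w_min


# Hypothetical weights spread evenly over [-1, 1].
weights = np.linspace(-1.0, 1.0, 1000, dtype=np.float32)
q, scale, w_min = quantize(weights)
restored = dequantize(q, scale, w_min)

max_error = float(np.abs(weights - restored).max())
print(weights.nbytes, q.nbytes)  # 4000 1000
print(max_error <= scale / 2 + 1e-5)
```

Storage drops to a quarter, at the cost of a rounding error of at most about half a quantization step per value.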

(1) Quantizing the h5 model

loaded_keras_model = keras.models.load_model(
    './graph_def_and_weights/fashion_mnist_model.h5')
keras_to_tflite_converter = tf.lite.TFLiteConverter.from_keras_model(
    loaded_keras_model)

# Quantize. Later TensorFlow releases deprecate OPTIMIZE_FOR_SIZE
# in favor of tf.lite.Optimize.DEFAULT.
keras_to_tflite_converter.optimizations = [
    tf.lite.Optimize.OPTIMIZE_FOR_SIZE]
keras_tflite = keras_to_tflite_converter.convert()

if not os.path.exists('./tflite_models'):
    os.mkdir('./tflite_models')
with open('./tflite_models/quantized_keras_tflite', 'wb') as f:
    f.write(keras_tflite)

(2) Quantizing the SavedModel

saved_model_to_tflite_converter = tf.lite.TFLiteConverter.from_saved_model(
    './keras_saved_graph/')
saved_model_to_tflite_converter.optimizations = [
    tf.lite.Optimize.OPTIMIZE_FOR_SIZE]
saved_model_tflite = saved_model_to_tflite_converter.convert()

with open('./tflite_models/quantized_saved_model_tflite', 'wb') as f:
    f.write(saved_model_tflite)
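The effect of quantization is easiest to see by comparing the size of the converted models. The sketch below is an illustration under stated assumptions: it uses an untrained model with the same architecture as in the notes, a hypothetical helper named convert, and tf.lite.Optimize.DEFAULT in place of OPTIMIZE_FOR_SIZE, since newer TensorFlow releases deprecate the latter.

```python
import tensorflow as tf
from tensorflow import keras

# Untrained model with the same architecture as in the notes;
# size depends on the number of weights, not on their values.
model = keras.models.Sequential([
    keras.layers.Flatten(input_shape=[28, 28]),
    keras.layers.Dense(300, activation='relu'),
    keras.layers.Dense(100, activation='relu'),
    keras.layers.Dense(10, activation='softmax'),
])


def convert(keras_model, quantize):
    """Convert a Keras model to TFLite bytes, optionally quantized."""
    converter = tf.lite.TFLiteConverter.from_keras_model(keras_model)
    if quantize:
        converter.optimizations = [tf.lite.Optimize.DEFAULT]
    return converter.convert()


plain_size = len(convert(model, quantize=False))
quantized_size = len(convert(model, quantize=True))
print(plain_size, quantized_size)
```

The quantized model should come out noticeably smaller, because the large Dense weight matrices are stored with 8 bits per value instead of 32.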

5. Deploying the model

TensorFlow Lite example apps