python_crawler_Baidu_Map_Migration_inbound_origins_outbound_destinations

The Baidu Maps Migration (百度地图迁徙) site is at: https://qianxi.baidu.com/

Crawl the data as early as you can, because the service may be shut down in the future.
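The crawler does not parse the qianxi.baidu.com page itself. Instead it requests JSONP endpoints under `http://huiyan.baidu.com/migration/` directly: `cityrank.jsonp` or `provincerank.jsonp`, with the query parameters `dt`, `id`, `type` (`move_in` / `move_out`), `date` and `callback`. The minimal sketch below builds one such URL with `urllib.parse.urlencode` rather than string concatenation. The function name `build_rank_url` and the example ID `110000` are illustrative assumptions, and the endpoints may change or disappear at any time.

```python
from urllib.parse import urlencode

# Endpoint pattern taken from the crawler code; rank is "cityrank" or "provincerank"
BASE = "http://huiyan.baidu.com/migration/{rank}.jsonp"


def build_rank_url(rank: str, city_id: str, move_type: str, date: str) -> str:
    """Build one ranking URL (hypothetical helper).

    rank:      "cityrank" (per-city list) or "provincerank" (per-province list)
    move_type: "move_in" (where travellers came from) or "move_out" (where they went)
    date:      "YYYYMMDD"
    """
    if rank not in ("cityrank", "provincerank"):
        raise ValueError(f"unknown rank: {rank}")
    if move_type not in ("move_in", "move_out"):
        raise ValueError(f"unknown move_type: {move_type}")
    params = {
        "dt": "country",
        "id": city_id,
        "type": move_type,
        "date": date,
        "callback": "jsonp",
    }
    # urlencode keeps the dict's insertion order, so the query matches the crawler's
    return BASE.format(rank=rank) + "?" + urlencode(params)


# Example with an illustrative city ID
print(build_rank_url("cityrank", "110000", "move_in", "20200103"))
```

Validating `rank` and `move_type` up front turns a typo into an immediate error instead of a request that silently returns nothing useful.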

The Python crawler code:

import os

import random

import time

from urllib import request

import re

import requests

import xlwt

from utils.read_write import readTXT, writeOneJSON

os.chdir(r'D:\data\人口数据\百度迁徙大数据\全国城市省份市内流入流出\json')

def set_style(name, height, bold=False):

style = xlwt.XFStyle() # 初始化样式

font = xlwt.Font() # 为样式创建字体

font.name = name # 'Times New Roman'

font.bold = bold

font.color_index = 4

font.height = height

style.font = font

return style

f = xlwt.Workbook()

sheet2 = f.add_sheet(u'sheet2', cell_overwrite_ok=True) # 创建sheet2

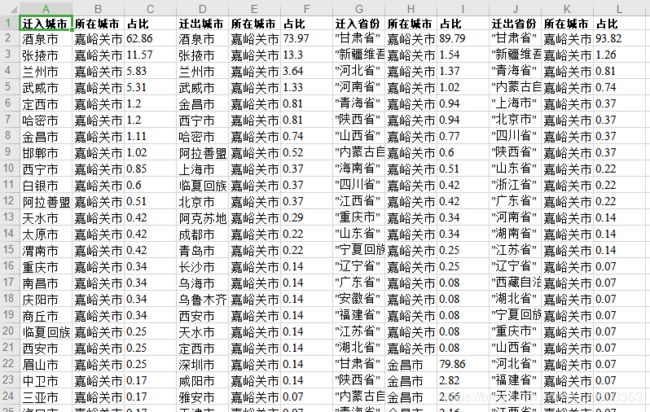

row0 = [u'迁入城市',u'所在城市',u'占比',u'迁出城市',u'所在城市',u'占比',u'迁入省份',u'所在城市',u'占比',u'迁出省份',u'所在城市',u'占比']

# 生成第一行

for i in range(0, len(row0)):

sheet2.write(0, i, row0[i], set_style('Times New Roman', 200, True))

headers = {"User-agent":"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "

"(KHTML, like Gecko) Chrome/49.0.2623.221 Safari/537.36 SE 2.X MetaSr 1.0"}

opener = request.build_opener()

opener.add_headers = [headers]

request.install_opener(opener)

default = set_style('Times New Roman', 220)

date_list = []

lines = readTXT('D:\project\jianguiyuan\data\BaiduMap_cityCode_1102.txt')

for riqi in range(20200103, 20200132):

date_list.append(str(riqi))

for riqi in range(20200201, 20200226):

date_list.append(str(riqi))

for riqi in date_list:

print(riqi)

for i in range(1, 389):

print(i)

obj = lines[i].split(',')

print(obj[0])

print(obj[1])

firsturl = "http://huiyan.baidu.com/migration/cityrank.jsonp?dt=country&id=" + obj[0] + "&type=move_in&date=" + riqi + "&callback=jsonp"

randint_data = random.randint(3, 6)

time.sleep(randint_data)

data = request.urlopen(firsturl).read().decode("utf-8")

data = data.encode("utf-8").decode("unicode_escape")

writeOneJSON(data, "城市迁入_" +obj[1] + "_" +riqi + ".json")

# 对Unicode编码进行改造

pat = '{"city_name":"(.*?)","province_name":".*?","value":.*?}'

pat1 = '{"city_name":".*?","province_name":".*?","value":(.*?)}'

result = re.compile(pat).findall(str(data))

result1 = re.compile(pat1).findall(str(data))

column0 = result

column1 = result1

column2 = obj[1]

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 0, column0[i1], default)

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 1, column2, default)

for i1 in range(0, len(column1)):

sheet2.write(i1 + len(column0) * i + 1, 2, column1[i1], default)

firsturl = "http://huiyan.baidu.com/migration/cityrank.jsonp?dt=country&" \

"id="+obj[0]+"&type=move_out&date="+ riqi+"&callback=jsonp"

randint_data = random.randint(3, 7)

time.sleep(randint_data)

data2 = request.urlopen(firsturl).read().decode("utf-8")

data2 = data2.encode("utf-8").decode("unicode_escape") #

writeOneJSON(data2, "城市迁出_" + obj[1] + "_" + riqi + ".json")

#对Unicode编码进行改造

pat = '{"city_name":"(.*?)","province_name":".*?","value":.*?}'

pat1 = '{"city_name":".*?","province_name":".*?","value":(.*?)}'

result2 = re.compile(pat).findall(str(data2))

result12 = re.compile(pat1).findall(str(data2))

column0 = result2

column1 = result12

column2 = obj[1]

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 3, column0[i1], default)

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 4, column2, default)

for i1 in range(0, len(column1)):

sheet2.write(i1 + len(column0) * i + 1, 5, column1[i1], default)

firsturl = "http://huiyan.baidu.com/migration/provincerank.jsonp?dt=country&id=" +obj[0] + "&type=move_in&date=" + riqi + "&callback=jsonp"

randint_data = random.randint(3, 8)

time.sleep(randint_data)

data = request.urlopen(firsturl).read().decode("utf-8")

data = data.encode("utf-8").decode("unicode_escape")

writeOneJSON(data, "省份迁入_" + obj[1] + "_" + riqi + ".json")

# 对Unicode编码进行改造

pat = '{"province_name":(.*?),"value":.*?}'

pat1 = '{"province_name":".*?","value":(.*?)}'

result = re.compile(pat).findall(str(data))

result1 = re.compile(pat1).findall(str(data))

column0 = result

column1 = result1

column2 = obj[1]

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 6, column0[i1], default)

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 7, column2, default)

for i1 in range(0, len(column1)):

sheet2.write(i1 + len(column0) * i + 1, 8, column1[i1], default)

firsturl = "http://huiyan.baidu.com/migration/provincerank.jsonp?dt=country&" \

"id="+obj[0]+"&type=move_out&date="+ riqi+"&callback=jsonp"

randint_data = random.randint(3, 6)

time.sleep(randint_data)

data2 = request.urlopen(firsturl).read().decode("utf-8")

data2 = data2.encode("utf-8").decode("unicode_escape") #

writeOneJSON(data2, "省份迁出_" + obj[1] + "_" + riqi + ".json")

#对Unicode编码进行改造

pat = '{"province_name":(.*?),"value":.*?}'

pat1 = '{"province_name":".*?","value":(.*?)}'

result2 = re.compile(pat).findall(str(data2))

result12 = re.compile(pat1).findall(str(data2))

column0 = result2

column1 = result12

column2 = obj[1]

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 9, column0[i1], default)

for i1 in range(0, len(column0)):

sheet2.write(i1 + len(column0) * i + 1, 10, column2, default)

for i1 in range(0, len(column1)):

sheet2.write(i1 + len(column0) * i + 1, 11, column1[i1], default)

print("大吉大利,今晚吃鸡啊!")

filename = 'D:\data\人口数据\百度迁徙大数据\全国城市省份市内流入流出\\'+riqi+'.xls'

f.save(filename)
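The crawler above pulls fields out of each response with regular expressions after a `unicode_escape` round trip, which depends on the exact key order and spacing in the text. A possible alternative, sketched below, is to strip the JSONP callback wrapper and let the `json` module decode the payload, since `json.loads` already turns `\uXXXX` escapes into characters. The exact nesting of Baidu's response is an assumption here, so `collect_rows` simply walks the parsed object and collects every dict that has a `"value"` key. The sample string is made up; only the key names `city_name`, `province_name` and `value` are taken from the crawler's regular expressions.

```python
import json
import re
from typing import Any, Dict, List

# Matches a JSONP body such as  jsonp({...})  and captures the JSON text inside
_JSONP_RE = re.compile(r"^\s*[\w$.]+\s*\((.*)\)\s*;?\s*$", re.S)


def parse_jsonp(text: str) -> Any:
    """Strip the callback wrapper and parse the JSON payload."""
    match = _JSONP_RE.match(text)
    if match is None:
        raise ValueError("response does not look like JSONP")
    # json.loads decodes \uXXXX escapes itself, so no unicode_escape step is needed
    return json.loads(match.group(1))


def collect_rows(obj: Any) -> List[Dict[str, Any]]:
    """Recursively collect every dict that carries a "value" key."""
    rows: List[Dict[str, Any]] = []
    if isinstance(obj, dict):
        if "value" in obj:
            rows.append(obj)
        for child in obj.values():
            rows.extend(collect_rows(child))
    elif isinstance(obj, list):
        for item in obj:
            rows.extend(collect_rows(item))
    return rows


# Made-up response in an assumed shape; only the key names come from the crawler
sample = ('jsonp({"errno":0,"data":{"list":['
          '{"city_name":"\\u5317\\u4eac\\u5e02","province_name":"\\u5317\\u4eac\\u5e02","value":12.5}'
          ']}})')
rows = collect_rows(parse_jsonp(sample))
for row in rows:
    # City lists have city_name; province lists only have province_name
    print(row.get("city_name", row.get("province_name")), row["value"])  # 北京市 12.5
```

Because the values come back as numbers rather than captured text, they can also be written to Excel as numeric cells instead of strings.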

The files used in the code can be downloaded here:

My resources

Link to read_write.py:

Python file reading and writing
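The crawler imports `readTXT` and `writeOneJSON` from the author's `utils/read_write.py`, which is only linked here, not shown. If that link is unavailable, the stand-ins below may help. They are hypothetical and not the author's code: their behaviour is guessed purely from how the crawler calls them, namely `readTXT(path)` returning a list of lines and `writeOneJSON(data, filename)` saving one response string to a file. Saved as `utils/read_write.py` next to the script, they should satisfy the import.

```python
import os
import tempfile
from typing import List


def readTXT(path: str, encoding: str = "utf-8") -> List[str]:
    """Hypothetical stand-in: return the file's lines without trailing newlines."""
    with open(path, encoding=encoding) as fh:
        return [line.rstrip("\r\n") for line in fh]


def writeOneJSON(data: str, filename: str, encoding: str = "utf-8") -> None:
    """Hypothetical stand-in: write one already-decoded response string to filename."""
    with open(filename, "w", encoding=encoding) as fh:
        fh.write(data)


# Usage: round-trip a small illustrative file through a temporary directory
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.txt")
    writeOneJSON("code,name\n110000,北京市\n", path)
    demo_lines = readTXT(path)
print(demo_lines)  # ['code,name', '110000,北京市']
```

Writing with an explicit `utf-8` encoding matters on Windows, where the default encoding is often not UTF-8 and the Chinese city names would otherwise fail to save.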

Baidu city code file:

City codes
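The crawler splits each line of the city code file on commas, using column 0 as the Baidu city ID and column 1 as the city name, and starts at line 1, which suggests the first line is a header. That layout is inferred from the code rather than documented. Under that assumption, the hypothetical helper below yields `(code, name)` pairs for whatever lines the file actually contains, so a loop over it needs no hard-coded city count and ignores blank lines. The example rows are illustrative only.

```python
from typing import Iterator, List, Tuple


def iter_city_codes(lines: List[str], skip_header: bool = True) -> Iterator[Tuple[str, str]]:
    """Yield (city_code, city_name) pairs from "code,name,..." lines (assumed layout)."""
    start = 1 if skip_header else 0
    for line in lines[start:]:
        line = line.strip()
        if not line:
            continue  # ignore blank lines, e.g. a trailing newline at the end of the file
        fields = line.split(",")
        yield fields[0].strip(), fields[1].strip()


# Illustrative input with a header row and a blank line
pairs = list(iter_city_codes(["code,name", "110000,北京市", "", "310000,上海市"]))
print(pairs)  # [('110000', '北京市'), ('310000', '上海市')]
```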

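If you need intra-city travel intensity, inbound origin and outbound destination data for every city in China,

send me a private message for contact details.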