视频动作识别--Two-Stream Convolutional Networks for Action Recognition in Videos

Two-Stream Convolutional Networks for Action Recognition in Videos NIPS2014

http://www.robots.ox.ac.uk/~vgg/software/two_stream_action/

本文针对视频中的动作分类问题,这里使用 两个独立的CNN来分开处理 视频中的空间信息和时间信息 spatial 和 tempal,然后我们再后融合 late fusion。 spatial stream 从视频中的每一帧图像做动作识别,tempal stream 通过输入稠密光流的运动信息来识别动作。两个 stream 都通过 CNN网络来完成。将时间和空间信息分开来处理,就可以利用现成的数据库来训练这两个网络。

2 Two-stream architecture for video recognition

视频可以很自然的被分为 空间部分和时间部分,空间部分主要对应单张图像中的 appearance,传递视频中描述的场景和物体的相关信息。时间部分对应连续帧的运动,包含物体和观察者(相机)的运动信息。

Each stream is implemented using a deep ConvNet, softmax scores of which are combined by late fusion. We consider two fusion methods: averaging and training a multi-class linear SVM [6] on stacked L 2 -normalised softmax scores as features.

Spatial stream ConvNet: 这就是对单张图像进行分类,我们可以使用最新的网络结构,在图像分类数据库上预训练

3 Optical flow ConvNets

the input to our model is formed by stacking optical flow displacement fields between several consecutive frames. Such input explicitly describes the motion between video frames, which makes the recognition easier

对于 Optical flow ConvNets 我们将若干连续帧图像对应的光流场输入到 CNN中,这种显示的运动信息可以帮助动作分类。

这里我们考虑基于光流输入的变体:

3.1 ConvNet input configurations

Optical flow stacking. 这里我们将光流的水平分量和垂直分量 分别打包当做特征图输入 CNN, The horizontal and vertical components of the vector field can be seen as image channels

Trajectory stacking,作为另一种运动表达方式,我们可以将运动轨迹信息输入 CNN

Bi-directional optical flow

双向光流的计算

Mean flow subtraction: 这算是一种输入的归一化了,将均值归一化到 0

It is generally beneficial to perform zero-centering of the network input, as it allows the model to better exploit the rectification non-linearities

In our case, we consider a simpler approach: from each displacement field d we subtract its mean vector.

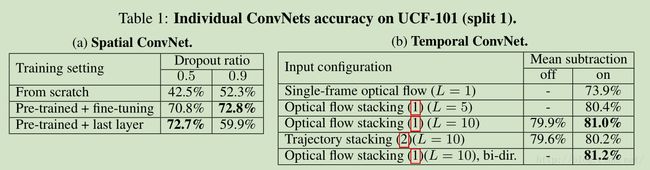

Individual ConvNets accuracy on UCF-101

Temporal ConvNet accuracy on HMDB-51

Two-stream ConvNet accuracy on UCF-101

Mean accuracy (over three splits) on UCF-101 and HMDB-51

--------------------- 本文来自 O天涯海阁O 的CSDN 博客 ,全文地址请点击:https://blog.csdn.net/zhangjunhit/article/details/77991038?utm_source=copy