Deploying tensorflow-serving with Docker on CentOS and testing it with a Python client

This article deploys TensorFlow Serving using Docker and provides a Python client code example.

I. Installing Docker: https://docs.docker.com/install/linux/docker-ce/centos/#install-docker-ce-1

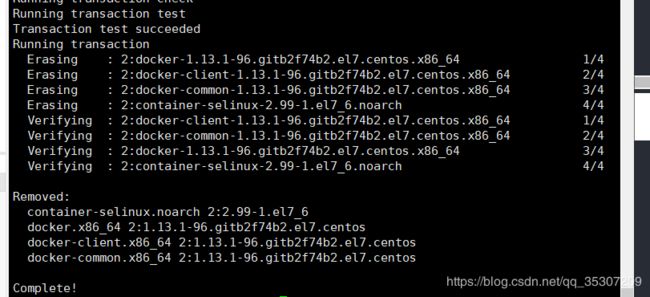

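1. Remove old versions (be careful: first check whether Docker is already installed on this machine. I accidentally wiped out someone else's environment this way, which was embarrassing.)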

sudo yum remove docker docker-client docker-client-latest docker-common docker-latest docker-latest-logrotate docker-logrotate docker-selinux docker-engine-selinux docker-engine

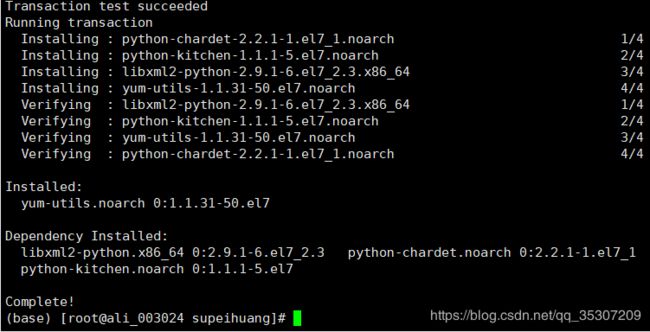

2. Install the dependencies:

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

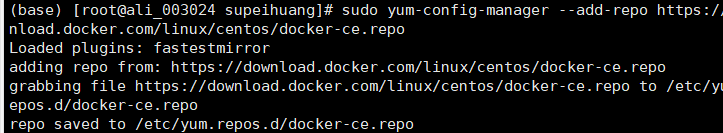

3. Add the Docker repository:

sudo yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

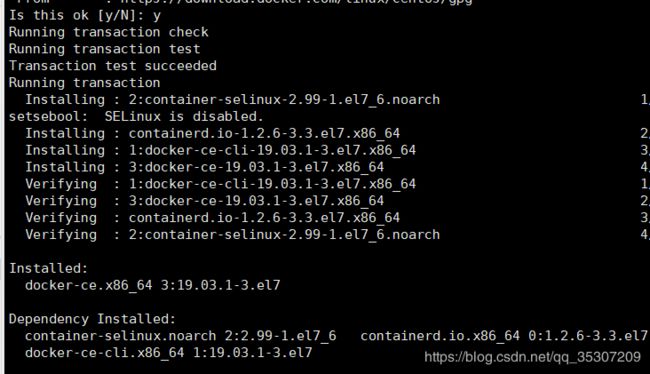

4. Install Docker CE:

sudo yum install docker-ce

5. Start the Docker daemon:

sudo systemctl start docker

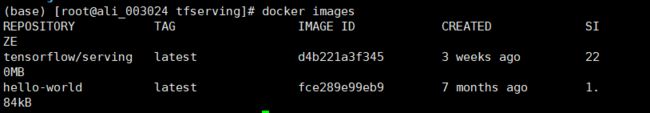

6. Check that the installation works by listing the local images: sudo docker images

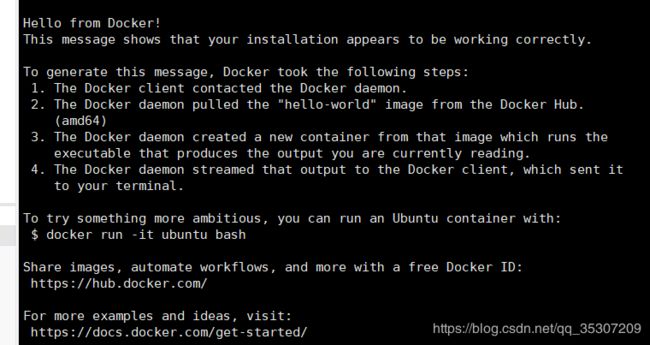

7. Test by running hello-world:

sudo docker run hello-world

II. Installing TensorFlow Serving: https://www.tensorflow.org/serving/docker#running_a_gpu_serving_image

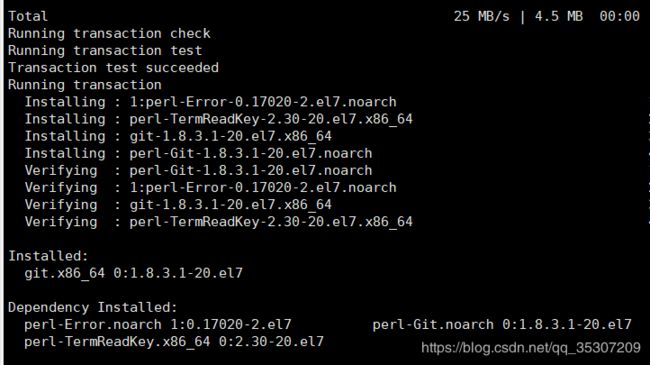

1. Install git: sudo yum install git

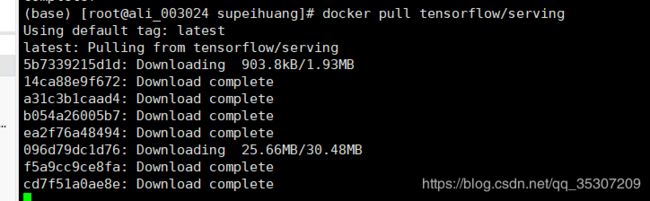

2. Pull the serving image

docker pull tensorflow/serving

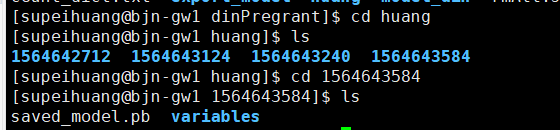

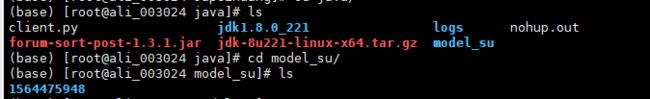

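3. Export the trained model and save it on the machine that will host the service

The training machine and the serving machine are different in my case, so I copy the exported files into the model_su directory on the serving machine.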
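Before mounting the copied directory, it can help to confirm that it has the layout TensorFlow Serving expects: one subdirectory per model version, named with an integer, each containing a saved_model.pb (or saved_model.pbtxt) file. The following is a minimal sketch of such a check; the function name find_servable_versions is hypothetical and not part of any library.

```python
import os


def find_servable_versions(model_dir):
    """Return the sorted integer version subdirectories that contain a SavedModel."""
    versions = []
    for entry in os.listdir(model_dir):
        path = os.path.join(model_dir, entry)
        # Only subdirectories whose name is an integer count as model versions.
        if not (entry.isdecimal() and os.path.isdir(path)):
            continue
        has_graph = any(
            os.path.isfile(os.path.join(path, name))
            for name in ("saved_model.pb", "saved_model.pbtxt")
        )
        if has_graph:
            versions.append(int(entry))
    return sorted(versions)
```

Calling find_servable_versions on the model_su directory should return a non-empty list; an empty list suggests that the export was copied without its version subdirectory.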

4. Run the container

docker run -p 8501:8501 -p 8500:8500 --mount type=bind,source=/home/supeihuang/pregrant/java/model_su/,target=/models/din_pregrant_posts -e MODEL_NAME=din_pregrant_posts -t tensorflow/serving

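Note: for testing I recommend the gRPC port, 8500. The source= value must be an absolute path (this is important); in my case it is the model_su directory given in the command above.

- -p 8500:8500 -p 8501:8501 publish the gRPC and REST ports

- In each pair the first number is the host port exposed to the outside: the first 8501 is the external REST port and the first 8500 is the external gRPC port (this REST port is the one to set in gpu-server-online-list)

- --mount type=bind,source=<local model directory>,target=/models/din_pregrant_posts: bind-mounts your exported local model directory to /models/din_pregrant_posts inside the container. TensorFlow Serving looks for the model under /models/<MODEL_NAME> inside the container, so the last component of target has to equal MODEL_NAME

- -e MODEL_NAME=din_pregrant_posts: the name the model is served under; clients must send the same name in request.model_spec.name

- -t allocates a pseudo-terminal; the image to run is the final argument, tensorflow/serving. You can substitute another tag, for example tensorflow/serving:latest-gpu, but you need to pull that image first with

docker pull tensorflow/serving:latest-gpu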
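Once the container is running, the REST port can be used to confirm that the model actually loaded before any client is written. The sketch below assumes the REST port is published on 8501 as in the docker run command and uses the model status endpoint of the TensorFlow Serving REST API; the helper names are hypothetical.

```python
import json
import urllib.request


def model_status_url(host, model_name, rest_port=8501):
    """Build the REST URL that reports the status of a served model."""
    return "http://{}:{}/v1/models/{}".format(host, rest_port, model_name)


def fetch_model_status(host, model_name, rest_port=8501, timeout=5.0):
    """GET the status endpoint and return the parsed JSON response."""
    url = model_status_url(host, model_name, rest_port)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def is_available(status):
    """Return True if any model version in the response is in state AVAILABLE."""
    versions = status.get("model_version_status", [])
    return any(v.get("state") == "AVAILABLE" for v in versions)
```

For example, is_available(fetch_model_status("localhost", "din_pregrant_posts")) should be True on the serving machine once loading has finished. An HTTP error here usually means the model name or the mount target is wrong.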

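III. Writing the client

The point of writing a client is to be able to test offline whether the served model returns correct results.

Install the dependency: sudo pip3 install tensorflow-serving-api

I ran into a small error during installation:

ERROR: Cannot uninstall 'wrapt'. It is a distutils installed project and thus we cannot accurately determine which files belong to it which would lead to only a partial uninstall.

Fix: run pip install -U --ignore-installed wrapt enum34 simplejson netaddr (with the same pip you install with, pip3 here), then run sudo pip3 install tensorflow-serving-api again.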

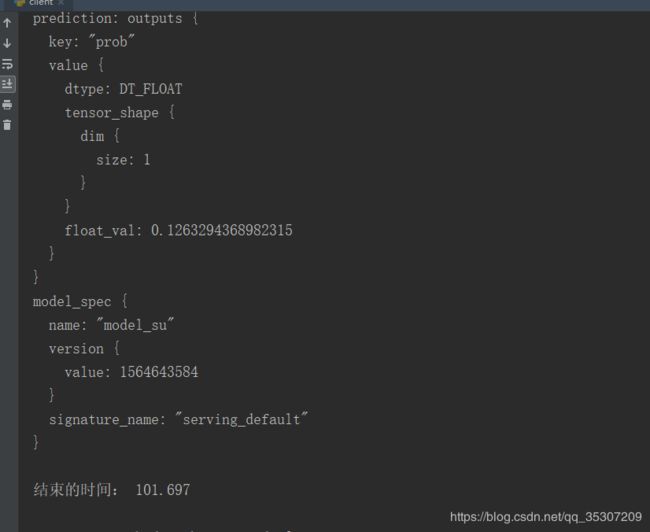

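Client code and its output: (I kept hitting errors at first because the input fields in my test request did not match the input fields of the exported model.)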

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import datetime

import grpc
import tensorflow as tf
from tensorflow_serving.apis import predict_pb2
from tensorflow_serving.apis import prediction_service_pb2_grpc

# Written against the TF 1.x flags API; on TF 2.x use tf.compat.v1.app instead of tf.app.
# ##39.96.74.14:39000
# tf.app.flags.DEFINE_string('server', '47.94.136.53:8500',
#                            'PredictionService host:port')
tf.app.flags.DEFINE_string('server', '123.56.6.143:8500',
                           'PredictionService host:port')
# The model name must match the MODEL_NAME the serving container was started with.
tf.app.flags.DEFINE_string('model', 'model_su',
                           'Model name.')
FLAGS = tf.app.flags.FLAGS


def _int_feature(value):
    return tf.train.Feature(int64_list=tf.train.Int64List(value=[value]))


def main(_):
    starttime = datetime.datetime.now()
    # Raw feature row kept from the original script; the request below does not use it.
    values = [36663882,2,2,206781852,1,1,4,1,2,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,1,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0]
    length = len(values)
    feature_dict = {}

    # Set up the gRPC channel and the request metadata
    channel = grpc.insecure_channel(FLAGS.server)
    stub = prediction_service_pb2_grpc.PredictionServiceStub(channel)
    request = predict_pb2.PredictRequest()
    print("FLAGS.model:", FLAGS.model)
    request.model_spec.name = FLAGS.model
    request.model_spec.signature_name = 'serving_default'

    # Convert each input to a TensorProto and add it to the inputs of serving_default.
    # num is the batch size; every input name must match the exported signature.
    num = 1
    request.inputs['user_feature'].CopyFrom(
        tf.make_tensor_proto(values=[[16,26,33,38,112,162,165,198,419,427,438,461,465,543,678,1076,1499,1860,2017,2538,2672,2715]]*num,shape=[num,22], dtype=tf.int32))
    request.inputs['item_feature'].CopyFrom(
        tf.make_tensor_proto(
            values=[[10,304,519,602,628,651,665,832,1382,1774,2154,2358,2420,2800,3086,3254,3671,3993,4395,4817,5209,5350,5788,6391,6785,7196,7523,7626,7628]]*num,shape=[num,29], dtype=tf.int32))
    request.inputs['keyword'].CopyFrom(
        tf.make_tensor_proto(values=[[12527]]*num,shape=[num], dtype=tf.int32))
    request.inputs['keyword2'].CopyFrom(
        tf.make_tensor_proto(values=[[13681]]*num,shape=[num], dtype=tf.int32))
    request.inputs['tag1'].CopyFrom(
        tf.make_tensor_proto(values=[[40]]*num,shape=[num], dtype=tf.int32))
    request.inputs['tag2'].CopyFrom(
        tf.make_tensor_proto(values=[[92]]*num,shape=[num], dtype=tf.int32))
    request.inputs['tag3'].CopyFrom(
        tf.make_tensor_proto(values=[[101]]*num,shape=[num], dtype=tf.int32))
    request.inputs['ks1'].CopyFrom(
        tf.make_tensor_proto(values=[[1630]]*num,shape=[num], dtype=tf.int32))
    request.inputs['ks2'].CopyFrom(
        tf.make_tensor_proto(values=[[1345]]*num,shape=[num], dtype=tf.int32))
    # request.inputs['keep_prob'].CopyFrom(
    #     tf.make_tensor_proto(values=[[1]], shape=[num,1], dtype=tf.float32))
    # request.inputs['hist_item'].CopyFrom(
    #     tf.make_tensor_proto(values=[[243820,253043,252275,220059,256707,249273,241808,253571,247356,248823,257097,253140,238571,264822,264700,245646,259332,210795,207961,244395,263926,218150,215970,225990,262743,266872,243152,258987,263308,221466,264114,214806,249069,250917,272159,246373,227394,254630,275445,238121,224431,243222,245107,266419,257686,275345,276998,286860,282072,284389,287081,285287,284987]] * num, shape=[num, 53],
    #                          dtype=tf.int32))
    # request.inputs['hist_subT'].CopyFrom(
    #     tf.make_tensor_proto(values=[[1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,1,5,5,1,1,1,1,1,1,5,1,1,1,1,1,1,1,5,1,1,1,1,1,3,1,1,1,1,1,1,1,1,1]]*num,shape=[num,53], dtype=tf.int32))
    request.inputs['hist_keyword'].CopyFrom(
        tf.make_tensor_proto(values=[[0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,4081,0,0,6494,0,3948,5156,4157,2688,3957,6494,4120,5779,10556,4120,549,6536,6557,7759,4081,9544,1644,14500,11567,1644,7311,10473,4157,7006,12527,0]]*num,shape=[num,48], dtype=tf.int32))
    print(request.inputs['hist_keyword'])
    request.inputs['hist_keyword2'].CopyFrom(
        tf.make_tensor_proto(values=[[0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,4390,0,0,3972,0,5610,5610,5617,3970,9950,3970,6325,12735,10321,10287,8174,13677,12433,4513,3970,0,4513,0,12492,4390,7903,4513,7066,13681,13681,0]]*num,shape=[num,48], dtype=tf.int32))
    request.inputs['hist_tag1'].CopyFrom(
        tf.make_tensor_proto(values=[[40,40,40,40,20,20,40,40,40,40,19,40,20,40,40,40,40,40,40,40,40,38,40,40,19,40,40,40,40,40,19,40,20,40,40,37,40,37,37,40,40,40,36,40,19,40,40,0]]*num,shape=[num,48], dtype=tf.int32))
    request.inputs['hist_tag2'].CopyFrom(
        tf.make_tensor_proto(values=[[92,92,92,92,18,0,93,92,92,92,78,92,0,91,92,92,92,92,92,92,92,25,92,92,86,92,92,92,92,92,86,92,0,92,92,75,92,75,75,93,123,92,114,92,86,92,92,0]]*num,shape=[num,48], dtype=tf.int32))
    request.inputs['hist_tag3'].CopyFrom(
        tf.make_tensor_proto(values=[[101,101,194,101,160,0,24,194,101,101,5,194,0,117,194,101,101,194,194,101,101,168,194,101,93,101,222,101,101,222,93,101,0,194,101,181,101,181,181,150,0,194,0,194,93,101,101,0]]*num,shape=[num,48], dtype=tf.int32))
    request.inputs['hist_ks1'].CopyFrom(
        tf.make_tensor_proto(values=[[2750,0,0,1623,2150,0,3001,2379,1623,1623,976,0,0,0,0,1547,0,1623,4026,0,1623,0,1623,2140,0,1360,5068,1623,3694,2593,3727,1654,0,4330,1664,0,1623,0,0,0,0,1832,0,1832,0,2593,0,0]]*num,shape=[num,48], dtype=tf.int32))
    request.inputs['hist_ks2'].CopyFrom(
        tf.make_tensor_proto(values=[[0,0,0,2282,0,0,0,0,2447,1552,0,0,0,0,0,0,0,0,0,0,2282,0,1497,2282,0,0,1503,2473,2282,0,0,3559,0,2473,0,0,1573,0,0,0,0,0,0,1819,0,1586,0,0]]*num,shape=[num,48], dtype=tf.int32))
    request.inputs['sl'].CopyFrom(
        tf.make_tensor_proto(values=[48] * num, shape=[num], dtype=tf.int32))
    print("request:", request)

    # Send the request asynchronously with a 100-second timeout
    result_future = stub.Predict.future(request, 100.0)
    print("result_future:", result_future)
    prediction = result_future.result()
    print("prediction:", prediction)
    # long running
    # do something other
    endtime = datetime.datetime.now()
    # total_seconds() covers the whole interval; .microseconds is only the sub-second part
    print("elapsed time (ms):", (endtime - starttime).total_seconds() * 1000.0)


if __name__ == '__main__':
    tf.app.run()
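The field mismatch mentioned before the client code can be caught before sending a prediction by comparing the input names in the request with those in the model's signature. The sketch below assumes the REST port 8501 is reachable and that the metadata endpoint of the TensorFlow Serving REST API returns the signature under metadata / signature_def / signature_def; the helper names are hypothetical.

```python
import json
import urllib.request


def fetch_metadata(host, model_name, rest_port=8501, timeout=5.0):
    """GET the model metadata (including its signatures) as parsed JSON."""
    url = "http://{}:{}/v1/models/{}/metadata".format(host, rest_port, model_name)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def signature_inputs(metadata, signature_name="serving_default"):
    """Extract the set of input names of one signature from a metadata response."""
    signatures = metadata["metadata"]["signature_def"]["signature_def"]
    return set(signatures[signature_name]["inputs"].keys())


def compare_inputs(expected, provided):
    """Return (missing, unexpected) input names, each as a sorted list."""
    expected, provided = set(expected), set(provided)
    return sorted(expected - provided), sorted(provided - expected)
```

In the client, the provided names would be list(request.inputs.keys()). If either returned list is non-empty, the request and the exported signature disagree, and the request should be fixed before calling Predict.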