Building a Kubernetes v1.15.3 Cluster with kubeadm

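- I. Environment preparation (on every node; the master node is used as the example)

- 1.1 Set the hostname

- 1.2 Add hosts entries

- 1.3 Disable the firewall

- 1.4 Disable SELinux

- 1.5 Create /etc/sysctl.d/k8s.conf with the following content

- 1.6 Install ipvs

- 1.7 Turn off swap

- 1.8 Install Docker

- 1.9 Install kubeadm

- II. Initialize the cluster

- 2.1 Export the default configuration

- 2.2 Edit the configuration

- 2.3 Initialize with the configuration file above

- 2.4 Copy the kubeconfig file

- III. Add nodes (node1 is used as the example; node2 follows the same steps)

- 3.1 Join a node

- 3.2 Install a network plugin

- 1. Flannel (use this if your hosts are on `192.168.0.x`)

- 2. Calico (use this if your hosts are on `10.0.0.x`)

- 3.3 Errors

- Fix (run on every node)

- IV. Install the Dashboard

- 4.1 Download and edit the YAML file

- 4.2 Deploy

- 4.3 Access

- 4.4 Create a user with permissions

- V. Reset a node

- 5.1 Reset a node

- 5.2 Remove the Flannel network

- 5.3 If a Pod is stuck in `Terminating`

- VI. Schedule Pods on the master node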

I. Environment preparation (on every node; the master node is used as the example)

1.1 Set the hostname

# master node

hostnamectl set-hostname master

# node1

hostnamectl set-hostname node1

# node2

hostnamectl set-hostname node2

1.2 Add hosts entries

[root@master ~]# vi /etc/hosts

# Add the following lines

192.168.0.27 master

192.168.0.28 node1

192.168.0.29 node2

1.3 Disable the firewall

[root@master ~]# systemctl stop firewalld

[root@master ~]# systemctl disable firewalld

1.4 Disable SELinux

# Check the current mode

[root@master ~]# getenforce

Permissive

[root@master ~]# vi /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled. The change takes effect only after a reboot.

SELINUX=disabled

# vi substitute command

:%s#SELINUX=enforcing#SELINUX=disabled#g

# After rebooting

[root@master ~]# getenforce

Disabled
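The same edit can be made without opening vi. The following is a minimal sketch, not part of the original steps: the function name `set_selinux_disabled` and its optional path argument are illustrative, and it assumes GNU sed as shipped with CentOS.

```shell
# Illustrative helper (name and argument are assumptions, not from the original guide).
# Rewrites SELINUX=enforcing to SELINUX=disabled in the given config file.
set_selinux_disabled() {
  config_file="${1:-/etc/selinux/config}"
  # Anchor on the start of the line so SELINUXTYPE= and comment lines are untouched.
  sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' "$config_file"
}
```

Run as root with no argument, it edits /etc/selinux/config; the reboot described above is still needed.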

1.5 Create /etc/sysctl.d/k8s.conf with the following content

[root@master ~]# cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

Run the following commands to apply the changes:

[root@master ~]# modprobe br_netfilter

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

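1.6 Install ipvs

$ cat > /etc/sysconfig/modules/ipvs.modules <<EOF

...

EOF

The script above creates the file /etc/sysconfig/modules/ipvs.modules so that the required modules are loaded automatically after a node reboots. Use lsmod | grep -e ip_vs -e nf_conntrack_ipv4 to check that the required kernel modules have been loaded.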
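The body of the module script is not preserved in this copy of the article. As a hedged sketch of what such a script commonly contains: ip_vs and nf_conntrack_ipv4 come from the lsmod check above, while the scheduler modules (ip_vs_rr, ip_vs_wrr, ip_vs_sh) and the function name `write_ipvs_modules` are assumptions. On kernels 4.19 and later the conntrack module is named nf_conntrack instead.

```shell
# Illustrative helper; the function name and the exact module list are assumptions.
# Writes a script that loads the kernel modules used by kube-proxy in ipvs mode.
write_ipvs_modules() {
  target="${1:-/etc/sysconfig/modules/ipvs.modules}"
  cat > "$target" <<'EOF'
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF
  # The file must be executable to be picked up at boot.
  chmod 755 "$target"
}
```

As root, `write_ipvs_modules && bash /etc/sysconfig/modules/ipvs.modules` writes the file and loads the modules once; the lsmod check above then confirms the result.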

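Next, make sure the ipset package is installed on every node:

yum install -y ipset

To make the ipvs proxy rules easier to inspect, it is also worth installing the management tool ipvsadm:

yum install -y ipvsadm

Synchronize the server time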

[root@master ~]# yum install chrony -y

[root@master ~]# systemctl enable chronyd

[root@master ~]# systemctl start chronyd

[root@master ~]# chronyc sources

210 Number of sources = 4

MS Name/IP address Stratum Poll Reach LastRx Last sample

===============================================================================

^- ntp1.ams1.nl.leaseweb.net 2 6 33 21 -5010us[-5010us] +/- 196ms

^- stratum2-1.ntp.led01.ru.> 2 6 17 24 +33ms[ +33ms] +/- 99ms

^* 203.107.6.88 2 6 17 25 -75us[ +13ms] +/- 18ms

^- ntp8.flashdance.cx 2 6 17 23 -21ms[ -21ms] +/- 168ms

[root@master ~]# date

2019年 09月 23日 星期一 18:32:07 CST

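1.7 Turn off swap

Turn it off temporarily (this does not survive a reboot):

[root@master ~]# swapoff -a

Edit /etc/fstab and comment out the swap mount entry, then use free -m to confirm that swap is off.

# Turn it off permanently by commenting out the swap line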

sed -ri 's/.*swap.*/#&/' /etc/fstab

[root@master ~]# free -m

total used free shared buff/cache available

Mem: 3771 691 2123 45 956 2759

Swap: 0 0 0
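The sed command above prefixes every line that mentions swap, including lines that are already commented, so running it twice stacks up `#` characters. A minimal sketch of an idempotent variant follows; the function name `comment_out_swap` and its optional path argument are illustrative, and GNU sed is assumed.

```shell
# Illustrative helper (name and argument are assumptions).
# Comments out every fstab line that mentions swap, skipping lines that are
# already comments so that repeated runs leave the file unchanged.
comment_out_swap() {
  fstab_file="${1:-/etc/fstab}"
  sed -ri '/^[[:space:]]*#/!s/.*swap.*/#&/' "$fstab_file"
}
```

Called as root with no argument, it edits /etc/fstab in place.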

Adjust the swappiness parameter by adding the following line to /etc/sysctl.d/k8s.conf:

[root@master ~]# vi /etc/sysctl.d/k8s.conf

# Add this line

vm.swappiness=0

Apply the change:

[root@master ~]# sysctl -p /etc/sysctl.d/k8s.conf

1.8 Install Docker

$ yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2

$ yum-config-manager \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo

[root@master ~]# yum list docker-ce --showduplicates | sort -r

已加载插件:fastestmirror, langpacks

可安装的软件包

* updates: mirrors.aliyun.com

Loading mirror speeds from cached hostfile

* extras: mirrors.aliyun.com

docker-ce.x86_64 3:19.03.2-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.1-3.el7 docker-ce-stable

docker-ce.x86_64 3:19.03.0-3.el7 docker-ce-stable

docker-ce.x86_64 3:18.09.9-3.el7 docker-ce-stable

...

Pick a version to install. 18.09 is recommended, and this guide installs 18.09.9-3.el7. The latest version also works, but kubeadm prints a warning about it:

yum install docker-ce-18.09.9-3.el7 -y

If the install fails with an error like this:

Last metadata expiration check: 0:00:10 ago on Thu 21 Nov 2019 04:17:26 PM UTC.

Error:

Problem: package docker-ce-3:18.09.9-3.el7.x86_64 requires containerd.io >= 1.2.2-3, but none of the providers can be installed

- conflicting requests

- package containerd.io-1.2.10-3.2.el7.x86_64 is excluded

- package containerd.io-1.2.2-3.3.el7.x86_64 is excluded

- package containerd.io-1.2.2-3.el7.x86_64 is excluded

- package containerd.io-1.2.4-3.1.el7.x86_64 is excluded

- package containerd.io-1.2.5-3.1.el7.x86_64 is excluded

- package containerd.io-1.2.6-3.3.el7.x86_64 is excluded

(try to add '--skip-broken' to skip uninstallable packages or '--nobest' to use not only best candidate packages)

Fix:

[root@wanfei ~]# wget https://download.docker.com/linux/centos/7/x86_64/edge/Packages/containerd.io-1.2.6-3.3.el7.x86_64.rpm

[root@wanfei ~]# yum -y install containerd.io-1.2.6-3.3.el7.x86_64.rpm

Then run the docker-ce install again.

Start Docker

$ systemctl start docker

$ systemctl enable docker

Configure a Docker registry mirror

$ cat <<EOF > /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"],

"registry-mirrors": ["https://x83mabsk.mirror.aliyuncs.com"]

}

EOF

# Apply the change

systemctl daemon-reload

systemctl restart docker

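1.9 Install kubeadm

Do not use this guide to install version 1.16.0: after installing it, opening the Dashboard returns a 404 error.

With Docker installed and the environment configured as above, we can now install kubeadm. Here we install it from a dedicated yum repository:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo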

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

EOF

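The repository above is not reachable from every network (for example, from mainland China without a proxy). If you cannot reach it, use the Alibaba Cloud mirror instead:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo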

[kubernetes]

name=Kubernetes

baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Then install kubeadm, kubelet and kubectl:

[root@master ~]# yum install -y kubelet-1.15.3-0 kubectl-1.15.3-0 kubeadm-1.15.3-0 --disableexcludes=kubernetes

[root@master ~]# kubeadm version

kubeadm version: &version.Info{Major:"1", Minor:"15", GitVersion:"v1.15.3", GitCommit:"2d3c76f9091b6bec110a5e63777c332469e0cba2", GitTreeState:"clean", BuildDate:"2019-08-19T11:11:18Z", GoVersion:"go1.12.9", Compiler:"gc", Platform:"linux/amd64"}

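If you installed kubeadm on the master and then cloned that machine to create the node machines, kubeadm version may produce no output after a reboot. In that case, uninstall the three packages above and install them again.

As the output shows, v1.15.3 is installed. Next, enable kubelet at boot: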

systemctl enable kubelet.service

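Everything up to this point must be done on every node.

II. Initialize the cluster

2.1 Export the default configuration

Next, prepare the kubeadm init file on the master node. The default init configuration can be exported with: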

[root@master ~]# mkdir -p i/master && cd i/master

[root@master master]# kubeadm config print init-defaults > kubeadm.yaml

[root@master master]# ls

kubeadm.yaml

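2.2 Edit the configuration

Then edit the configuration to suit your needs, for example the value of imageRepository and the kube-proxy mode (ipvs). Also note that because we plan to install the Flannel network plugin, networking.podSubnet must be set to 10.244.0.0/16: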

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.0.27 # internal IP of the apiserver node
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: master
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS # DNS type
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: gcr.azk8s.cn/google_containers # changed to a mirror the images can be pulled from
kind: ClusterConfiguration
kubernetesVersion: v1.15.3 # Kubernetes version; must match the installed kubeadm
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs # kube-proxy mode

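Remove the trailing comments on the right before using the file.

- If your hosts are on 192.168.*.*, set networking.podSubnet to 10.244.0.0/16 (the value used in the file above, which matches the default Network in kube-flannel.yml) and use the Flannel network.

- If your hosts are on 10.0.*.*, set it to 192.168.0.0/16 and use the Calico network. If this is set incorrectly, CoreDNS will not start after the Calico plugin is deployed and reports coredns Failed to list *v1.Endpoints; for a fix, see https://blog.csdn.net/u011663005/article/details/87937800.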
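The rule behind the two cases is that the pod subnet must not overlap the network the hosts sit on. A minimal sketch of that decision follows. The function name `suggest_pod_network`, its output format and the prefixes it matches are assumptions made for illustration; it uses 10.244.0.0/16 for Flannel, the value in the configuration file above and the default Network in kube-flannel.yml.

```shell
# Illustrative helper (name, output format and matched prefixes are assumptions).
# Prints "<plugin> <podSubnet>" for a host IP so that the pod subnet does not
# overlap the host network.
suggest_pod_network() {
  case "$1" in
    192.168.*) echo "flannel 10.244.0.0/16" ;;
    10.0.*)    echo "calico 192.168.0.0/16" ;;
    *)         echo "no suggestion for $1" >&2; return 1 ;;
  esac
}
```

For example, `suggest_pod_network 192.168.0.27` prints `flannel 10.244.0.0/16`.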

2.3 Initialize with the configuration file above

[root@master master]# kubeadm init --config kubeadm.yaml

[init] Using Kubernetes version: v1.16.0

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.2. Latest validated version: 18.09

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Activating the kubelet service

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [master kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.0.27]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [master localhost] and IPs [192.168.0.27 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [master localhost] and IPs [192.168.0.27 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[kubelet-check] Initial timeout of 40s passed.

[apiclient] All control plane components are healthy after 40.507095 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.16" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: abcdef.0123456789abcdef

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.27:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:288f1974a67c2d0d302e6bb8ac1ee06ad400d034f7f28e3306bee117ae89635d

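The output shows that the latest validated Docker version is 18.09. It is only a warning, but installing Docker 18.09 is still the safer choice. This sample output was captured from a run with Kubernetes v1.16.0 and Docker 19.03.2, so its version strings differ from the v1.15.3 and 18.09.9 packages installed above.

2.4 Copy the kubeconfig file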

[root@master master]# mkdir -p $HOME/.kube

[root@master master]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master master]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

# Copy the config to the other nodes

[root@master master]# scp -r $HOME/.kube/ root@node1:$HOME/

[root@master master]# scp -r $HOME/.kube/ root@node2:$HOME/

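III. Add nodes (node1 is used as the example; node2 follows the same steps)

3.1 Join a node

Make sure the preparation steps from section I are already done on the node: kubeadm, kubelet and kubectl are installed, and the $HOME/.kube/config file from the master has been copied to the same path on the node. Then run the join command printed at the end of the initialization:

[root@node1 .kube]# kubeadm join 192.168.0.27:6443 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:288f1974a67c2d0d302e6bb8ac1ee06ad400d034f7f28e3306bee117ae89635d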

[preflight] Running pre-flight checks

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 19.03.2. Latest validated version: 18.09

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.16" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

If you have lost the join command above, run kubeadm token create --print-join-command to generate a new one.

After the join succeeds, run get nodes:

[root@node1 node]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master NotReady master 15m v1.16.0

node1 NotReady <none> 104s v1.16.0

node2 NotReady <none> 89s v1.16.0

3.2 Install a network plugin

1. Flannel (use this if your hosts are on 192.168.0.x)

The nodes are NotReady because no network plugin has been installed yet. You can choose a network plugin from the documentation at https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/; here we install Flannel:

$ wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

# Change the image references to the Alibaba Cloud mirror

registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-amd64

registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-arm64

registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-arm

registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-ppc64le

# vi substitute commands

:%s#quay.io/coreos/flannel:v0.11.0-amd64#registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-amd64#g

:%s#quay.io/coreos/flannel:v0.11.0-arm64#registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-arm64#g

:%s#quay.io/coreos/flannel:v0.11.0-arm#registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-arm#g

:%s#quay.io/coreos/flannel:v0.11.0-ppc64le#registry.cn-shanghai.aliyuncs.com/wanfei/flannel:v0.11.0-ppc64le#g

$ kubectl apply -f kube-flannel.yml
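The four vi substitutions above can also be applied in one pass before running kubectl apply. This is a minimal sketch under stated assumptions: the function name `use_flannel_mirror` is illustrative, GNU sed is assumed, and only the four image tags listed above are rewritten.

```shell
# Illustrative helper (name and argument are assumptions).
# Points the four flannel image tags listed above at the mirror registry.
use_flannel_mirror() {
  manifest="${1:-kube-flannel.yml}"
  mirror="registry.cn-shanghai.aliyuncs.com/wanfei/flannel"
  # arm64 is handled before arm so the shorter tag cannot match the longer one.
  for arch in amd64 arm64 arm ppc64le; do
    sed -i "s#quay.io/coreos/flannel:v0.11.0-${arch}#${mirror}:v0.11.0-${arch}#g" "$manifest"
  done
}
```

Image references for other architectures are left pointing at quay.io.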

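Note that if your nodes have more than one network interface, you need to pass the --iface argument in kube-flannel.yml to name the interface on the cluster's internal network; otherwise DNS resolution may fail. Add --iface=<interface name> to the flanneld arguments: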

args:

- --ip-masq

- --kube-subnet-mgr

- --iface=eth0
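To find the interface name to pass to --iface, one option is to look up which interface holds the node's internal IP. The sketch below is illustrative: the function name `iface_for_ip` is an assumption, and it expects the one-line-per-address output of `ip -o -4 addr show` on stdin.

```shell
# Illustrative helper (name is an assumption). Reads `ip -o -4 addr show`
# output on stdin and prints the interface that holds the given IPv4 address.
iface_for_ip() {
  # Field 2 is the interface name, field 4 is the address in CIDR form.
  awk -v ip="$1" '{ split($4, addr, "/"); if (addr[1] == ip) print $2 }'
}
```

On the master in this guide it would be used as `ip -o -4 addr show | iface_for_ip 192.168.0.27`.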

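2. Calico (use this if your hosts are on 10.0.0.x)

The nodes are NotReady because no network plugin has been installed yet. You can choose a network plugin from the documentation at https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/create-cluster-kubeadm/; here we install Calico:

[root@node1 node]# wget https://docs.projectcalico.org/v3.8/manifests/calico.yaml

# Some nodes have more than one network interface, so the internal interface must be named in the manifest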

$ vi calico.yaml

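......

spec:
  containers:
  - env:
    - name: DATASTORE_TYPE
      value: kubernetes
    - name: IP_AUTODETECTION_METHOD # add this environment variable to the DaemonSet
      value: interface=ens32 # name of the internal network interface
    - name: WAIT_FOR_DATASTORE
      value: "true"

......

$ kubectl apply -f calico.yaml # install the Calico network plugin

Remove the trailing comments on the right before using the file.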

After a short while, check the Pod status:

[root@node1 node]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

calico-kube-controllers-564b6667d7-6wjrg 1/1 Running 0 26s

calico-node-fwgvt 1/1 Running 0 27s

calico-node-phb45 1/1 Running 0 27s

calico-node-w8sc2 1/1 Running 0 27s

coredns-667f964f9b-5kgq6 1/1 Running 0 16m

coredns-667f964f9b-kk2ff 1/1 Running 0 16m

etcd-master 1/1 Running 0 31m

kube-apiserver-master 1/1 Running 0 31m

kube-controller-manager-master 1/1 Running 0 30m

kube-proxy-fh2wl 1/1 Running 0 6m9s

kube-proxy-ltnsz 1/1 Running 0 5m23s

kube-proxy-sslhq 1/1 Running 0 31m

kube-scheduler-master 1/1 Running 0 30m

The network plugin is running and the nodes are now Ready:

[root@node1 node]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master Ready master 32m v1.16.0

node1 Ready <none> 6m1s v1.16.0

node2 Ready <none> 5m15s v1.16.0

3.3 Errors

[root@master ~]# journalctl -f

9月 27 11:28:43 master kubelet[8637]: E0927 11:28:43.085075 8637 summary_sys_containers.go:47] Failed to get system container stats for "/system.slice/docker.service": failed to get cgroup stats for "/system.slice/docker.service": failed to get container info for "/system.slice/docker.service": unknown container "/system.slice/docker.service"

9月 27 11:28:44 master systemd[1]: Created slice libcontainer_88920_systemd_test_default.slice.

9月 27 11:28:44 master systemd[1]: Removed slice libcontainer_88920_systemd_test_default.slice.

Fix (run on every node)

Update the Docker configuration

vi /etc/docker/daemon.json

# Replace systemd with cgroupfs

:%s/systemd/cgroupfs/g

# Apply the change

systemctl daemon-reload

systemctl restart docker

Update the kubelet configuration

vi /var/lib/kubelet/kubeadm-flags.env

# Replace systemd with cgroupfs and append the following flags after it

--runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice

# The final result

KUBELET_KUBEADM_ARGS="--cgroup-driver=cgroupfs --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice --network-plugin=cni --pod-infra-container-image=gcr.azk8s.cn/google_containers/pause:3.1"

# Apply the change

systemctl daemon-reload

systemctl restart kubelet
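Because this fix has to be repeated on every node, the kubelet edit can be scripted. The following is a minimal sketch, assuming GNU sed and a flags file that still contains --cgroup-driver=systemd; the function name `patch_kubelet_flags` and its optional path argument are illustrative.

```shell
# Illustrative helper (name and argument are assumptions).
# Switches the kubelet cgroup driver flag to cgroupfs and appends the two
# cgroup flags shown above, leaving the other arguments in place.
patch_kubelet_flags() {
  flags_file="${1:-/var/lib/kubelet/kubeadm-flags.env}"
  sed -i 's#--cgroup-driver=systemd#--cgroup-driver=cgroupfs --runtime-cgroups=/systemd/system.slice --kubelet-cgroups=/systemd/system.slice#' "$flags_file"
}
```

A second run finds no --cgroup-driver=systemd left to replace, so the file is not changed again. The daemon-reload and kubelet restart above are still required afterwards.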

Check the journal again; the error is gone.

IV. Install the Dashboard

4.1 Download and edit the YAML file

$ wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

$ vi kubernetes-dashboard.yaml

# Change the image name

......

containers:
- name: kubernetes-dashboard
  image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
  ports:
  - containerPort: 8443
    protocol: TCP

......

Change it to:

......

containers:
- name: kubernetes-dashboard
  image: gcr.azk8s.cn/google_containers/kubernetes-dashboard-amd64:v1.10.1
  # do not pull the image if it is already present locally
  imagePullPolicy: IfNotPresent
  ports:
  - containerPort: 8443
    protocol: TCP

......

# Change the Service type to NodePort

......

  selector:
    k8s-app: kubernetes-dashboard
  type: NodePort

......

4.2 Deploy

Create the resources:

[root@master dashboard]# kubectl apply -f kubernetes-dashboard.yaml

[root@master dashboard]# kubectl get pods -n kube-system -l k8s-app=kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

kubernetes-dashboard-7c9964c47-dpq55 1/1 Running 1 37s

[root@master dashboard]# kubectl get svc -n kube-system -l k8s-app=kubernetes-dashboard

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes-dashboard NodePort 10.108.229.70 <none> 443:31394/TCP 76s

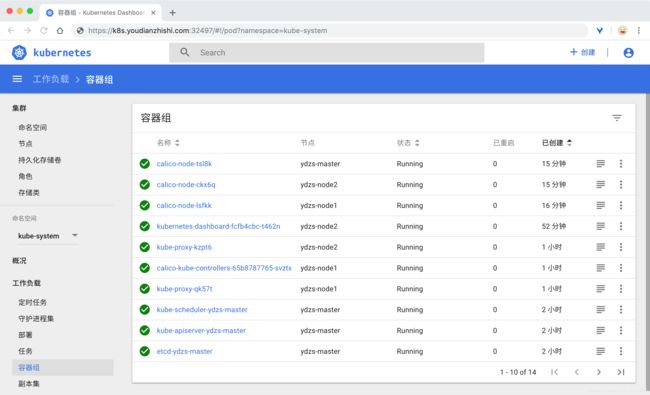

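4.3 Access

You can then reach the Dashboard on port 31394 shown above. Remember to use https. If Chrome refuses to load the page, try Firefox.

4.4 Create a user with permissions

Next, create a user with full cluster-wide permissions for logging in to the Dashboard (admin.yaml):

[root@master dashboard]# vi admin.yaml

# Paste the following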

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

Create it:

$ kubectl apply -f admin.yaml

# Look up the token; the script further down is quicker

$ kubectl get secret -n kube-system|grep admin-token

admin-token-d5jsg kubernetes.io/service-account-token 3 1d

$ kubectl get secret admin-token-d5jsg -o jsonpath={.data.token} -n kube-system | base64 -d

# Prints a long token string, decoded from its base64 form

Token lookup script

[root@master dashboard]# ls

dashboard-token.sh

[root@master dashboard]# cat dashboard-token.sh

#!/bin/sh

TOKENS=$(kubectl describe serviceaccount admin -n kube-system | grep "Tokens:" | awk '{ print $2}')

kubectl describe secret $TOKENS -n kube-system | grep "token:" | awk '{ print $2}'

[root@master dashboard]# sh dashboard-token.sh

eyJhbGciOiJSUzI1NiIsImtpZCI6IjRUNFRTLUN2OEN2YWFCT3lVNlE5dHozZnlySWZCUkJGRkRJLWtHUExES28ifQnQvc2VyOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydhyqnEgpjKPQwrH3Q-R97Nz_9YJabpqctPNERt5RhHSN_6DZkvprFbCYBi0JWX80V7s1NSTPgvOe58bw0mHeAMsfXMaSl0y8FC5XGPOeFX_4_8Wr6THi5rxYksXUxeXC8Y3TUQGUEtriOs1xgZc5x6YdsmitTl10A6EQ8cewhomxz84w

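Then log in to the Dashboard using the decoded string above as the token.

V. Reset a node

5.1 Reset a node

If a node has problems, you can reset it.

Drain the node and remove it from the cluster:

kubectl drain <node name> --delete-local-data --force --ignore-daemonsets

kubectl delete node <node name>

Then, on the node being removed, reset all state installed by kubeadm:

kubeadm reset

To tear down the whole cluster, run the following on every node: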

kubeadm reset
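The drain and delete steps above always go together, so they can be wrapped in a small helper that takes the node name as its argument. This is a hedged sketch: the function name `remove_node` and the `KUBECTL` override variable are assumptions added for illustration, not part of kubectl.

```shell
# Illustrative helper (name and the KUBECTL override are assumptions).
# Set KUBECTL=echo to print the commands instead of running them.
KUBECTL="${KUBECTL:-kubectl}"

remove_node() {
  node="$1"
  if [ -z "$node" ]; then
    echo "usage: remove_node <node name>" >&2
    return 2
  fi
  # Evict the workloads first, then delete the Node object.
  "$KUBECTL" drain "$node" --delete-local-data --force --ignore-daemonsets &&
    "$KUBECTL" delete node "$node"
}
```

Run `remove_node node2` on a machine with a working kubeconfig, then run kubeadm reset on node2 itself.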

5.2 Remove the Flannel network

Resetting the node as above does not remove the Flannel network interfaces:

[root@node2 .kube]# ifconfig

flannel.1: flags=4163 mtu 1450

inet 192.168.2.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::9c7f:85ff:fe0f:3b37 prefixlen 64 scopeid 0x20

ether 9e:7f:85:0f:3b:37 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

# Remove the Flannel network

ifconfig cni0 down

ip link delete cni0

ifconfig flannel.1 down

ip link delete flannel.1

rm -rf /var/lib/cni/

rm -f /etc/cni/net.d/*

# Restart kubelet

systemctl restart kubelet.service

# Confirm that the interfaces are gone

[root@node2 .kube]# ifconfig

docker0: flags=4099 mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:ae:56:e9:96 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163 mtu 1500

inet 10.2.0.27 netmask 255.255.255.0 broadcast 10.2.0.255

inet6 fe80::5054:ff:fef4:c85 prefixlen 64 scopeid 0x20

ether 52:54:00:f4:0c:85 txqueuelen 1000 (Ethernet)

RX packets 190734 bytes 231387045 (220.6 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 67686 bytes 7373517 (7.0 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10

loop txqueuelen 1000 (Local Loopback)

RX packets 22 bytes 1472 (1.4 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 22 bytes 1472 (1.4 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

5.3 If a Pod is stuck in Terminating

[root@master redis-ha]# kubectl get pods -n kube-ops

NAME READY STATUS RESTARTS AGE

harbor-harbor-registry-7d456446ff-j2l22 0/2 Terminating 3 20h

# Force delete

kubectl delete pod harbor-harbor-registry-7d456446ff-j2l22 --grace-period=0 --force -n kube-ops

VI. Schedule Pods on the master node

For safety, Kubernetes does not schedule Pods on the master node by default. If you also want to use the master as a worker node, run:

kubectl taint node master node-role.kubernetes.io/master-

Here master is the hostname of the master node. To restore the master-only state, run:

kubectl taint node master node-role.kubernetes.io/master="":NoSchedule