Spark实时项目第九天-ADS层实现热门品牌统计

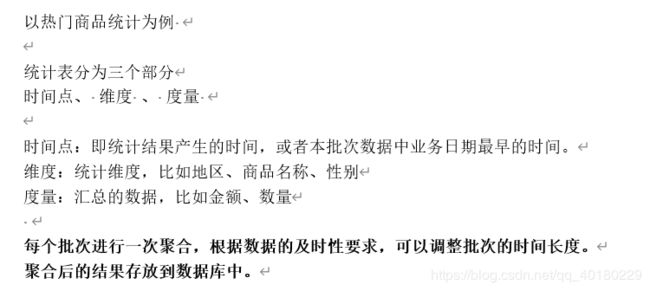

分析

数据库的选型

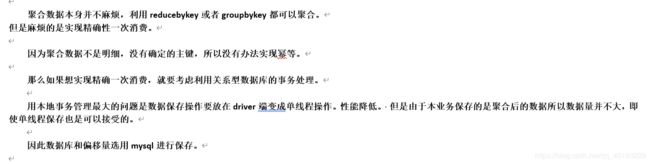

创建数据库

create database spark_gmall_report

CREATE TABLE `offset` (

`group_id` varchar(200) NOT NULL,

`topic` varchar(200) NOT NULL,

`partition_id` int(11) NOT NULL,

`topic_offset` bigint(20) DEFAULT NULL,

PRIMARY KEY (`group_id`,`topic`,`partition_id`)

) ENGINE=InnoDB DEFAULT CHARSET=utf8

CREATE TABLE `spu_order_final_detail_amount_stat` ( stat_time datetime ,spu_id varchar(20) ,spu_name varchar(200),amount decimal(16,2) ,

PRIMARY KEY (`stat_time`,`spu_id`,`spu_name`)

)ENGINE=InnoDB DEFAULT CHARSET=utf8

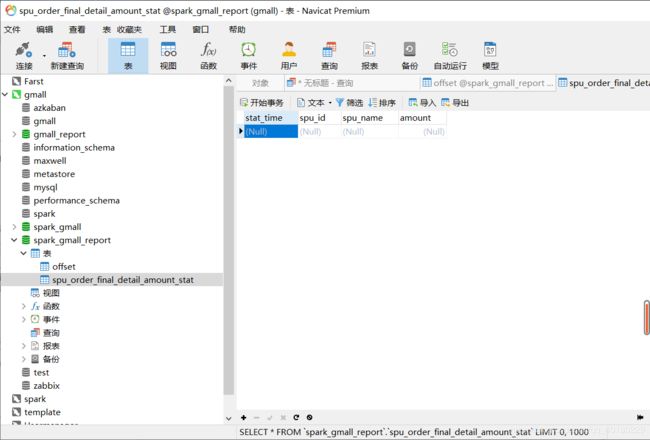

增加配置application.conf

在spark-gmall-dw-realtime\src\main\resources\application.conf

db.default.driver="com.mysql.jdbc.Driver"

db.default.url="jdbc:mysql://hadoop2/spark_gmall_report?characterEncoding=utf-8&useSSL=false"

db.default.user="root"

db.default.password="000000"

POM

此处引用了一个 scala的MySQL工具:scalikeJdbc

配置文件: 默认使用 application.conf

<properties>

<spark.version>2.4.0</spark.version>

<scala.version>2.11.8</scala.version>

<kafka.version>1.0.0</kafka.version>

<project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>

<project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>

<java.version>1.8</java.version>

</properties>

<dependencies>

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.2.56</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.apache.kafka</groupId>

<artifactId>kafka-clients</artifactId>

<version>${kafka.version}</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-streaming-kafka-0-10_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>redis.clients</groupId>

<artifactId>jedis</artifactId>

<version>2.9.0</version>

</dependency>

<dependency>

<groupId>org.apache.phoenix</groupId>

<artifactId>phoenix-spark</artifactId>

<version>4.14.2-HBase-1.3</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>io.searchbox</groupId>

<artifactId>jest</artifactId>

<version>5.3.3</version>

</dependency>

<dependency>

<groupId>net.java.dev.jna</groupId>

<artifactId>jna</artifactId>

<version>4.5.2</version>

</dependency>

<dependency>

<groupId>org.codehaus.janino</groupId>

<artifactId>commons-compiler</artifactId>

<version>2.7.8</version>

</dependency>

<dependency>

<groupId>org.elasticsearch</groupId>

<artifactId>elasticsearch</artifactId>

<version>2.4.6</version>

</dependency>

<dependency>

<groupId>org.apache.phoenix</groupId>

<artifactId>phoenix-spark</artifactId>

<version>4.14.2-HBase-1.3</version>

</dependency>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>${spark.version}</version>

</dependency>

<dependency>

<groupId>org.scalikejdbc</groupId>

<artifactId>scalikejdbc_2.11</artifactId>

<version>2.5.0</version>

</dependency>

<!-- scalikejdbc-config_2.11 -->

<dependency>

<groupId>org.scalikejdbc</groupId>

<artifactId>scalikejdbc-config_2.11</artifactId>

<version>2.5.0</version>

</dependency>

<dependency>

<groupId>mysql</groupId>

<artifactId>mysql-connector-java</artifactId>

<version>5.1.47</version>

</dependency>

</dependencies>

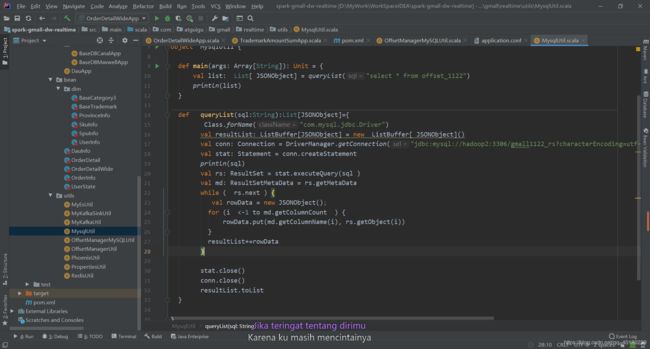

MySQLUtil

用于查询MySQL数据库 scala\com\atguigu\gmall\realtime\utils\MysqlUtil.scala

import java.sql.{Connection, DriverManager, ResultSet, ResultSetMetaData, Statement}

import com.alibaba.fastjson.JSONObject

import scala.collection.mutable.ListBuffer

object MysqlUtil {

def main(args: Array[String]): Unit = {

val list: List[ JSONObject] = queryList("select * from offset_1122")

println(list)

}

def queryList(sql:String):List[JSONObject]={

Class.forName("com.mysql.jdbc.Driver")

val resultList: ListBuffer[JSONObject] = new ListBuffer[ JSONObject]()

val conn: Connection = DriverManager.getConnection("jdbc:mysql://hadoop2:3306/gmall1122_rs?characterEncoding=utf-8&useSSL=false","root","123123")

val stat: Statement = conn.createStatement

println(sql)

val rs: ResultSet = stat.executeQuery(sql )

val md: ResultSetMetaData = rs.getMetaData

while ( rs.next ) {

val rowData = new JSONObject();

for (i <-1 to md.getColumnCount ) {

rowData.put(md.getColumnName(i), rs.getObject(i))

}

resultList+=rowData

}

stat.close()

conn.close()

resultList.toList

}

}

增加OffsetManagerMySQLUtil

在scala\com\atguigu\gmall\realtime\utils\OffsetManagerMySQLUtil.scala

import com.alibaba.fastjson.JSONObject

import org.apache.kafka.common.TopicPartition

object OffsetManagerMySQLUtil {

/**

* 从Mysql中读取偏移量

* @param groupId

* @param topic

* @return

*/

def getOffset(groupId:String,topic:String):Map[TopicPartition,Long]={

var offsetMap=Map[TopicPartition,Long]()

val offsetJsonObjList: List[JSONObject] = MysqlUtil.queryList("SELECT group_id ,topic,partition_id , topic_offset FROM offset_1122 where group_id='"+groupId+"' and topic='"+topic+"'")

if(offsetJsonObjList!=null&&offsetJsonObjList.size==0){

null

}else {

val kafkaOffsetList: List[(TopicPartition, Long)] = offsetJsonObjList.map { offsetJsonObj =>

(new TopicPartition(offsetJsonObj.getString("topic"),offsetJsonObj.getIntValue("partition_id")), offsetJsonObj.getLongValue("topic_offset"))

}

kafkaOffsetList.toMap

}

}

}

增加TrademarkAmountSumApp

在scala\com\atguigu\gmall\realtime\app\ads\TrademarkAmountSumApp.scala

import java.lang.Math

import com.alibaba.fastjson.JSON

import org.apache.kafka.clients.consumer.ConsumerRecord

import org.apache.kafka.common.TopicPartition

import org.apache.spark.SparkConf

import org.apache.spark.streaming.{Seconds, StreamingContext}

import org.apache.spark.streaming.dstream.{DStream, InputDStream}

import org.apache.spark.streaming.kafka010.{HasOffsetRanges, OffsetRange}

import java.lang.Math

import java.text.SimpleDateFormat

import java.util.Date

import com.atguigu.gmall.realtime.bean.OrderDetailWide

import com.atguigu.gmall.realtime.utils.{MyKafkaUtil, OffsetManagerMySQLUtil}

import org.apache.spark.rdd.RDD

import scalikejdbc.{DB, SQL}

import scalikejdbc.config.DBs

object TrademarkAmountSumApp {

def main(args: Array[String]): Unit = {

val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("ads_trademark_sum_app")

val ssc = new StreamingContext(sparkConf, Seconds(5))

val topic = "DWS_ORDER_DETAIL_WIDE";

val groupId = "ads_trademark_sum_group"

///////////////////// 偏移量处理///////////////////////////

//// 改成 //mysql

val offset: Map[TopicPartition, Long] = OffsetManagerMySQLUtil.getOffset(groupId, topic)

var inputDstream: InputDStream[ConsumerRecord[String, String]] = null

// 判断如果从redis中读取当前最新偏移量 则用该偏移量加载kafka中的数据 否则直接用kafka读出默认最新的数据

if (offset != null && offset.size > 0) {

inputDstream = MyKafkaUtil.getKafkaStream(topic, ssc, offset, groupId)

} else {

inputDstream = MyKafkaUtil.getKafkaStream(topic, ssc, groupId)

}

//取得偏移量步长

var offsetRanges: Array[OffsetRange] = null

val inputGetOffsetDstream: DStream[ConsumerRecord[String, String]] = inputDstream.transform { rdd =>

offsetRanges = rdd.asInstanceOf[HasOffsetRanges].offsetRanges

rdd

}

//转换结构

val orderDetailWideDstream: DStream[OrderDetailWide] = inputGetOffsetDstream.map { record =>

val jsonStr: String = record.value()

val orderDetailWide: OrderDetailWide = JSON.parseObject(jsonStr, classOf[OrderDetailWide])

orderDetailWide

}

val orderWideWithKeyDstream: DStream[(String, Double)] = orderDetailWideDstream.map { orderDetailWide =>

(orderDetailWide.tm_id + ":" + orderDetailWide.tm_name, orderDetailWide.final_detail_amount)

}

val orderWideSumDstream: DStream[(String, Double)] = orderWideWithKeyDstream.reduceByKey ((amount1,amount2)=>

java.lang.Math.round((amount1+amount2)/100)*100

)

//保存数据字段:时间(业务时间也行),维度, 度量 stat_time ,tm_id,tm_name,amount,(sku_num)(order_count)

//保存偏移量

orderWideSumDstream.foreachRDD{rdd:RDD[(String, Double)]=>

//把各个executor中各个分区的数据收集到driver中的一个数组

val orderWideArr: Array[(String, Double)] = rdd.collect()

// scalikejdbc

if(orderWideArr!=null&&orderWideArr.size>0){

// 加载配置

DBs.setup()

DB.localTx{implicit session=> //事务启动

// 偏移量保存完毕

for (offset <- offsetRanges ) {

println(offset.partition+"::"+offset.untilOffset)

SQL("REPLACE INTO `offset`(group_id,topic, partition_id, topic_offset) VALUES( ?,?,?,?) ").bind(groupId,topic,offset.partition,offset.untilOffset).update().apply()

}

// throw new RuntimeException("强行异常测试!!!!")

//整个集合保存完毕

for ((tm,amount) <- orderWideArr ) {

val statTime: String = new SimpleDateFormat("yyyy-MM-dd HH:mm:dd").format(new Date)

val tmArr: Array[String] = tm.split(":")

val tm_id=tmArr(0)

val tm_name=tmArr(1)

SQL("insert into trademark_amount_sum_stat values(?,?,?,?)").bind(statTime,tm_id,tm_name,amount).update().apply()

}

}//事务结束

}

}

orderWideSumDstream.print(1000)

ssc.start()

ssc.awaitTermination()

}

}