DL之SegNet:SegNet图像分割算法的简介(论文介绍)、架构详解、案例应用等配图集合之详细攻略

DL之SegNet:SegNet图像分割算法的简介(论文介绍)、架构详解、案例应用等配图集合之详细攻略

导读

基于CNN的神经网络SegNet算法可进行高精度地识别行驶环境。

目录

SegNet图像分割算法的简介(论文介绍)

0、实验结果

1、SegNet算法的关键思路

SegNet图像分割算法的架构详解

SegNet图像分割算法的案例应用

相关文章

DL之SegNet:SegNet图像分割算法的简介(论文介绍)、架构详解、案例应用等配图集合之详细攻略

DL之SegNet:SegNet图像分割算法的架构详解

SegNet图像分割算法的简介(论文介绍)

更新……

Abstract

We present a novel and practical deep fully convolutional neural network architecture for semantic pixel-wise segmentation termed SegNet. This core trainable segmentation engine consists of an encoder network, a corresponding decoder network followed by a pixel-wise classification layer. The architecture of the encoder network is topologically identical to the 13 convolutional layers in the VGG16 network [1]. The role of the decoder network is to map the low resolution encoder feature maps to full input resolution feature maps for pixel-wise classification. The novelty of SegNet lies is in the manner in which the decoder upsamples its lower resolution input feature map(s). Specifically, the decoder uses pooling indices computed in the max-pooling step of the corresponding encoder to perform non-linear upsampling. This eliminates the need for learning to upsample. The upsampled maps are sparse and are then convolved with trainable filters to produce dense feature maps. We compare our proposed architecture with the widely adopted FCN [2] and also with the well known DeepLab-LargeFOV [3], DeconvNet [4] architectures. This comparison reveals the memory versus accuracy trade-off involved in achieving good segmentation performance. SegNet was primarily motivated by scene understanding applications. Hence, it is designed to be efficient both in terms of memory and computational time during inference. It is also significantly smaller in the number of trainable parameters than other competing architectures and can be trained end-to-end using stochastic gradient descent. We also performed a controlled benchmark of SegNet and other architectures on both road scenes and SUN RGB-D indoor scene segmentation tasks. These quantitative assessments show that SegNet provides good performance with competitive inference time and most efficient inference memory-wise as compared to other architectures. We also provide a Caffe implementation of SegNet and a web demo at

http://mi.eng.cam.ac.uk/projects/segnet/.

本文提出了一种新颖实用的深度全卷积神经网络结构——SegNet。该核心的可训练分割引擎由编码器网络、相应的解码器网络和像素级分类层组成。编码器网络的结构在拓扑上与VGG16网络[1]中的13个卷积层相同。解码器网络的作用是将编码器的低分辨率特征映射为全输入分辨率特征映射,进行像素级分类。SegNet lies的新颖之处在于解码器向上采样其低分辨率输入特征图的方式。具体地说,解码器使用在相应编码器的最大池化步骤中计算的池化索引来执行非线性上采样。这消除了学习向上采样的需要。上采样后的图像是稀疏的,然后与可训练滤波器进行卷积,生成密集的特征图。我们将我们提出的体系结构与广泛采用的FCN[2]以及著名的DeepLab-LargeFOV[3]、DeconvNet[4]体系结构进行了比较。这个比较揭示了在获得良好的分割性能时所涉及的内存和精度之间的权衡。SegNet主要是由场景理解应用程序驱动的。因此,它的设计在内存和推理过程中的计算时间方面都是高效的。它在可训练参数的数量上也明显小于其他竞争架构,并且可以使用随机梯度下降进行端到端训练。我们还在道路场景和SUN RGB-D室内场景分割任务上对SegNet等架构进行了受控基准测试。这些定量评估表明,与其他体系结构相比,SegNet具有良好的性能,推理时间有竞争力,并且在内存方面推理效率最高。我们还提供了一个Caffe实现SegNet和一个web demo at

http://mi.eng.cam.ac.uk/projects/segnet/。

CONCLUSION

We presented SegNet, a deep convolutional network architecture for semantic segmentation. The main motivation behind SegNet was the need to design an efficient architecture for road and indoor scene understanding which is efficient both in terms of memory and computational time. We analysed SegNet and compared it with other important variants to reveal the practical trade-offs involved in designing architectures for segmentation, particularly training time, memory versus accuracy. Those architectures which store the encoder network feature maps in full perform best but consume more memory during inference time. SegNet on the other hand is more efficient since it only stores the max-pooling indices of the feature maps and uses them in its decoder network to achieve good performance. On large and well known datasets SegNet performs competitively, achieving high scores for road scene understanding. End-to-end learning of deep segmentation architectures is a harder challenge and we hope to see more attention paid to this important problem.

本文提出了一种用于语义分割的深度卷积网络结构SegNet。SegNet背后的主要动机是需要为道路和室内场景理解设计一个高效的架构,它在内存和计算时间方面都是高效的。我们分析了SegNet,并将其与其他重要的变体进行了比较,以揭示在设计用于分割的架构时所涉及的实际权衡,尤其是训练时间、内存和准确性。那些完全存储编码器网络特征映射的架构执行得最好,但在推理期间消耗更多内存。另一方面,SegNet更高效,因为它只存储特征映射的最大池索引,并将其用于解码器网络中,以获得良好的性能。在大型和知名的数据集上,SegNet表现得很有竞争力,在道路场景理解方面获得了高分。深度分割体系结构的端到端学习是一个比较困难的挑战,我们希望看到更多的人关注这个重要的问题。

论文

Vijay Badrinarayanan, Alex Kendall, Roberto Cipolla.

SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation,

IEEE Transactions on Pattern Analysis and Machine Intelligence ( Volume: 39 , Issue: 12 , Dec. 1 2017 )

https://arxiv.org/abs/1511.00561

《SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation》

arXiv地址:https://arxiv.org/abs/1511.00561?context=cs

PDF地址:https://arxiv.org/pdf/1511.00561.pdf

Vijay Badrinarayanan, Kendall, and Roberto Cipolla(2015): SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. arXiv preprint arXiv:1511.00561 (2015).

0、实验结果

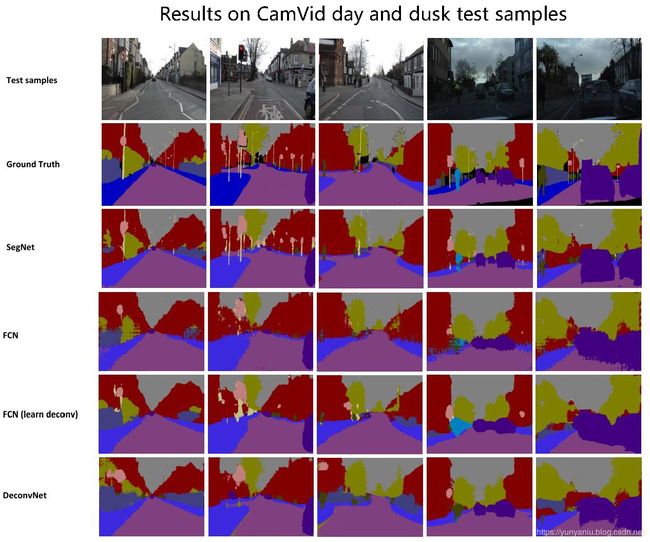

1、定性比较——在CamVidday和dusk测试样品上的实验结果

Results on CamVidday and dusk test samples,几个测试样的图像,包括白天和傍晚。对比的算法包括SegNet、FCN、FCN(learn deconv)、DeconvNet算法,只有SegNet算法给出了比较好的分割效果。

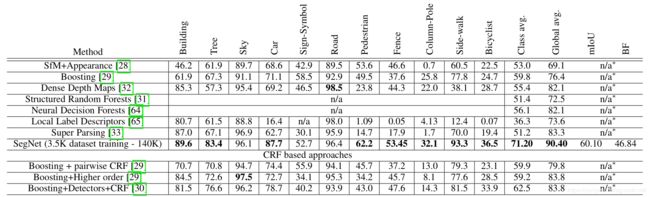

2、定量比较——在CamVid11道路类分割问题上,将SegNet与传统方法进行定量比较

Quantitative comparisons of SegNet with traditional methods on the CamVid11 road class segmentation problem

SegNet outperforms all the other methods, including those using depth, video and/or CRF’s on the majority of classes.

SegNet的单独IU得分都比较高,最后的mean IU可达到60.1%。都优于所有其他方法,包括那些在大多数类上使用深度、视频和/或CRF的方法。

1、SegNet算法的关键思路

1、An illustration of the SegNet architecture. There are no fully connected layers and hence it is only convolutional. A decoder upsamples its input using the transferred pool indices from its encoder to produce a sparse feature map(s). It then performs convolution with a trainable filter bank to densify the feature map. The final decoder output feature maps are fed to a soft-max classifier for pixel-wise classification.

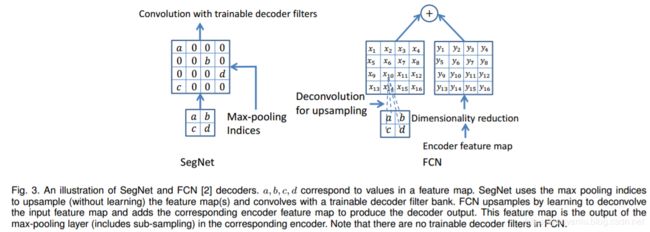

2、An illustration of SegNet and FCN [2] decoders. a, b, c, d correspond to values in a feature map. SegNet uses the max pooling indices to upsample (without learning) the feature map(s) and convolves with a trainable decoder filter bank. FCN upsamples by learning to deconvolve the input feature map and adds the corresponding encoder feature map to produce the decoder output. This feature map is the output of the max-pooling layer (includes sub-sampling) in the corresponding encoder. Note that there are no trainable decoder filters in FCN.

SegNet图像分割算法的架构详解

更新……

SegNet图像分割算法的案例应用

更新……