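Python OpenCV + TensorFlow 2.0: Getting Started with Face Recognition

I won't go over how to install and configure TensorFlow and OpenCV for Python; there is plenty of material on that online.

I'll focus on the code, in the hope that it gives people who want to get started in this area something to refer to.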

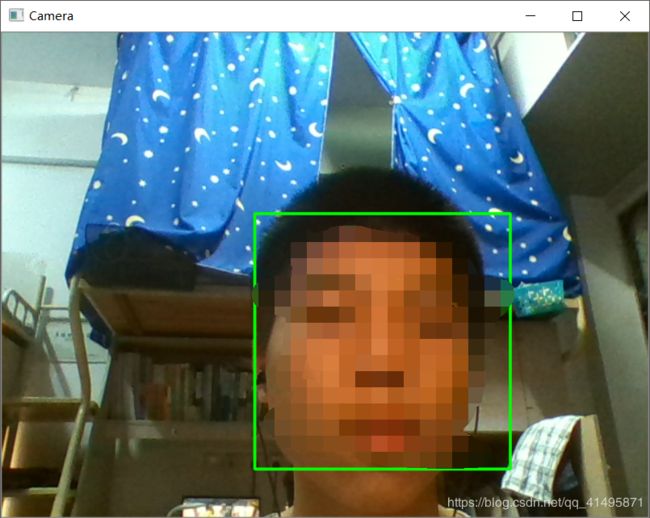

First, a test script that checks that the camera and the face detector work.

face_test.py

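import cv2

def CatchVideo(window_name, camera_idx):
    cv2.namedWindow(window_name)
    # Video source: a camera index or the path to a video file
    cap = cv2.VideoCapture(camera_idx)
    # Load the Haar cascade face detector (put the path to your own OpenCV cascade file here)
    classifier = cv2.CascadeClassifier("C:/Users/Administrator/AppData/Local/Programs/Python/Python36/Lib/site-packages/cv2/data/haarcascade_frontalface_alt2.xml")
    # Color of the box drawn around each detected face; OpenCV uses BGR order
    color = (0, 255, 0)
    while cap.isOpened():
        ok, frame = cap.read()  # read one frame
        if not ok:
            break
        # Convert the current frame to grayscale
        grey = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        # Detect faces. scaleFactor=1.2 is the step by which the image is rescaled between passes,
        # minNeighbors=3 is how many overlapping candidate detections are needed to keep a face,
        # and minSize=(32, 32) is the smallest face, in pixels, that will be reported
        faceRects = classifier.detectMultiScale(grey, scaleFactor=1.2, minNeighbors=3, minSize=(32, 32))
        if len(faceRects) > 0:  # more than 0 means at least one face was detected
            for faceRect in faceRects:  # draw a box around every face
                x, y, w, h = faceRect
                cv2.rectangle(frame, (x - 10, y - 10), (x + w + 10, y + h + 10), color, 2)
        # Show the frame
        cv2.imshow(window_name, frame)
        c = cv2.waitKey(10)
        if c & 0xFF == ord('q'):  # press q to quit
            break
    # Release the camera and destroy all windows
    cap.release()
    cv2.destroyAllWindows()

if __name__ == '__main__':
    CatchVideo("Camera", 0)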
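To get a feel for what `scaleFactor` and `minSize` control, the sketch below lists the window sizes a multi-scale detector would cover if the search window grew by a fixed factor at each step. This is a simplified model for illustration only, not OpenCV's actual implementation (which rescales the image rather than the window); the function name `detection_window_sizes` and the 480-pixel frame height are assumptions made for the example.

```python
def detection_window_sizes(min_size, max_size, scale_factor=1.2):
    """List the square window sizes tried between min_size and max_size.

    Simplified model of multi-scale detection: each step enlarges the
    window by scale_factor. Real detectors differ in the details.
    """
    if scale_factor <= 1.0:
        raise ValueError("scale_factor must be greater than 1")
    sizes = []
    size = float(min_size)
    while size <= max_size:
        sizes.append(int(round(size)))
        size *= scale_factor
    return sizes


if __name__ == "__main__":
    # Hypothetical 640x480 frame: the window cannot exceed the frame height.
    sizes = detection_window_sizes(min_size=32, max_size=480, scale_factor=1.2)
    print(len(sizes), "scales, from", sizes[0], "to", sizes[-1])
```

A smaller factor such as 1.1 produces more scales, so detection is more thorough but slower; a larger factor is faster but may skip over faces whose size falls between two steps.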

With the detector working, we can now collect face data.

face_data_catch.py

#!/usr/bin/python

# -*- coding:utf-8 -*-

import cv2

import sys

from PIL import Image

def CatchPICFromVideo(window_name, camera_idx, catch_pic_num, path_name):

cv2.namedWindow(window_name)

#视频来源,可以选择摄像头或者视频

cap = cv2.VideoCapture(camera_idx)

#告诉OpenCV使用人脸识别分类器(这里填你自己的OpenCV级联分类器地址)

classfier = cv2.CascadeClassifier("C:/Users/Administrator/AppData/Local/Programs/Python/Python36/Lib/site-packages/cv2/data/haarcascade_frontalface_alt2.xml")

#识别出人脸后要画的边框的颜色,RGB格式

color = (0, 255, 0)

num = 0

while cap.isOpened():

ok, frame = cap.read() #读取一帧数据

if not ok:

break

grey = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY) #将当前桢图像转换成灰度图像

#人脸检测,1.2和2分别为图片缩放比例和需要检测的有效点数

faceRects = classfier.detectMultiScale(grey, scaleFactor = 1.2, minNeighbors = 3, minSize = (32, 32))

if len(faceRects) > 0: #大于0则检测到人脸

for faceRect in faceRects: #单独框出每一张人脸

x, y, w, h = faceRect

#将当前帧保存为图片

img_name = '%s/%d.jpg'%(path_name, num)

image = frame[y - 10: y + h + 10, x - 10: x + w + 10]

cv2.imwrite(img_name, image)

num += 1

if num > (catch_pic_num): #如果超过指定最大保存数量退出循环

break

#画出矩形框

cv2.rectangle(frame, (x - 10, y - 10), (x + w + 10, y + h + 10), color, 2)

#左上角显示当前捕捉到了多少人脸图片了

font = cv2.FONT_HERSHEY_SIMPLEX

cv2.putText(frame,'num:%d' % (num),(30, 30), font, 1, (255,0,0),1)

#超过指定最大保存数量结束程序

if num > (catch_pic_num): break

#显示图像

cv2.imshow(window_name, frame)

c = cv2.waitKey(10)

if c & 0xFF == ord('q'):

break

#释放摄像头并销毁所有窗口

cap.release()

cv2.destroyAllWindows()

if __name__ == '__main__':

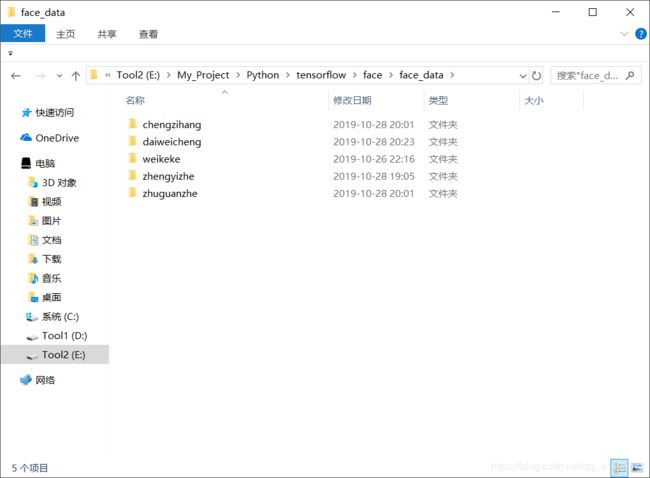

CatchPICFromVideo("catch_face_data", 0, 200-1, 'E:/My_Project/Python/tensorflow/face/face_data/chengzihang') #采集200张,保存在chengzihang这个文件夹下面

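After a successful run, the folder will contain 200 photos of you.

Then capture images of 4 other people in the same way, saving each person's images in a separate directory.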
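One detail worth checking when cropping faces: padding a detection box by 10 pixels can push its coordinates outside the frame when the face is near an edge. A negative start index in a NumPy slice counts from the other end of the array, which usually yields an empty or wrong crop. The helper below is a minimal sketch of clamping the padded box to the frame; the name `padded_box` and its parameters are hypothetical and not part of OpenCV.

```python
def padded_box(x, y, w, h, frame_w, frame_h, pad=10):
    """Grow a detection box by pad pixels and clamp it to the frame.

    Returns (left, top, right, bottom), suitable for
    frame[top:bottom, left:right].
    """
    left = max(x - pad, 0)
    top = max(y - pad, 0)
    right = min(x + w + pad, frame_w)
    bottom = min(y + h + pad, frame_h)
    return left, top, right, bottom


if __name__ == "__main__":
    # A face detected 4 pixels from the top-left corner of a 640x480 frame.
    print(padded_box(4, 4, 100, 100, frame_w=640, frame_h=480))
```

With a real frame, `frame.shape[1]` and `frame.shape[0]` would supply `frame_w` and `frame_h`.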

Next we write a separate script to preprocess the images (resizing, assigning labels and so on all happen here).

face_data_predeal.py

import os
import numpy as np
import cv2

IMAGE_SIZE = 64  # resize every image to 64x64

# Resize an image to the given size
def resize_image(image, height=IMAGE_SIZE, width=IMAGE_SIZE):
    top, bottom, left, right = (0, 0, 0, 0)
    # Get the image size
    h, w, _ = image.shape
    # For images that are not square, find the longer side
    longest_edge = max(h, w)
    # Work out how many pixels to add to the shorter side so it matches the longer one
    if h < longest_edge:
        dh = longest_edge - h
        top = dh // 2
        bottom = dh - top
    elif w < longest_edge:
        dw = longest_edge - w
        left = dw // 2
        right = dw - left
    # Border color (black)
    BLACK = [0, 0, 0]
    # Pad the image so that it is square; cv2.BORDER_CONSTANT fills the border with the color given by value
    constant = cv2.copyMakeBorder(image, top, bottom, left, right, cv2.BORDER_CONSTANT, value=BLACK)
    # Convert the image to grayscale
    constant = cv2.cvtColor(constant, cv2.COLOR_BGR2GRAY)
    # Resize and return; cv2.resize takes the target size as (width, height)
    return cv2.resize(constant, (width, height))

# Training data, filled in by read_path
images = []
labels = []

def read_path(path_name):
    for dir_item in os.listdir(path_name):
        # Join the entry onto the starting path to get a usable full path
        full_path = os.path.abspath(os.path.join(path_name, dir_item))
        if os.path.isdir(full_path):  # a folder: recurse into it
            read_path(full_path)
        else:  # a file
            if dir_item.endswith('.jpg'):
                image = cv2.imread(full_path)
                if image is None:  # skip files that OpenCV cannot read
                    continue
                image = resize_image(image, IMAGE_SIZE, IMAGE_SIZE)
                images.append(image)
                labels.append(path_name)
    return images, labels

# Read the training data from the given path
def load_dataset(path_name):
    images, labels = read_path(path_name)
    # Stack all images into one array of shape (number of images, IMAGE_SIZE, IMAGE_SIZE).
    # With 5 people and 200 images each, that is 1000 x 64 x 64. There is no channel axis
    # because resize_image has already converted every image to grayscale.
    images = np.array(images)
    print(images.shape)
    # Label the data with integer class ids (not one-hot vectors). Everything in the 'chengzihang'
    # folder is my own face and gets label 0; the other folders are my roommates, each with its
    # own label. Note that the labels must start from 0.
    for i, label in enumerate(labels):
        if label.endswith('chengzihang'):
            labels[i] = 0
        elif label.endswith('daiweicheng'):
            labels[i] = 1
        elif label.endswith('weikeke'):
            labels[i] = 2
        elif label.endswith('zhengyizhe'):
            labels[i] = 3
        elif label.endswith('zhuguanzhe'):
            labels[i] = 4
    return images, np.array(labels)

if __name__ == '__main__':
    images, labels = load_dataset("E:/My_Project/Python/tensorflow/face/face_data")
    print(labels)
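The chain of name checks in `load_dataset` has to be edited every time a person is added. As a possible alternative, the sketch below derives the integer ids from the folder names themselves. The helper names `build_label_map` and `label_for_path` are hypothetical, and sorting the names is only one way to make the mapping reproducible.

```python
import os


def build_label_map(person_dirs):
    """Map each person's folder name to an integer id, starting from 0."""
    return {name: idx for idx, name in enumerate(sorted(person_dirs))}


def label_for_path(image_dir, label_map):
    """Look up the id for the folder an image was read from."""
    name = os.path.basename(os.path.normpath(image_dir))
    return label_map[name]


if __name__ == "__main__":
    names = ["chengzihang", "daiweicheng", "weikeke", "zhengyizhe", "zhuguanzhe"]
    label_map = build_label_map(names)
    print(label_map)
    print(label_for_path("face_data/weikeke", label_map))
```

For these five folder names, alphabetical order happens to give the same ids as the hand-written version. Whatever mapping is used, the application script must list the names in the same order when it displays the probabilities.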

Now we finally reach the most important part: training the model.

face_data_train.py

import random
from sklearn.model_selection import train_test_split
import tensorflow as tf
from face_data_predeal import load_dataset, resize_image, IMAGE_SIZE

class Dataset:
    def __init__(self, path_name):
        # Training set
        self.train_images = None
        self.train_labels = None
        # Test set
        self.test_images = None
        self.test_labels = None
        # Path the dataset is loaded from
        self.path_name = path_name
        # Input shape used by the model: (rows, cols, channels)
        self.input_shape = None
        self.nb_classes = None

    # Load the dataset, split it into a training set and a held-out test set, and preprocess it
    def load(self, img_rows=IMAGE_SIZE, img_cols=IMAGE_SIZE,
             img_channels=1, nb_classes=5):  # grayscale images, so 1 channel; 5 people, so 5 classes
        # Load the dataset into memory
        images, labels = load_dataset(self.path_name)
        # Randomly hold out 30% of the data as the test set; the remaining 70% is the training set
        train_images, test_images, train_labels, test_labels = train_test_split(images, labels, test_size=0.3, random_state=random.randint(0, 100))
        # TensorFlow needs an explicit channel axis. The images were converted to grayscale in the
        # previous step, so it is 1 here; for color images it would be 3
        train_images = train_images.reshape(train_images.shape[0], img_rows, img_cols, img_channels)
        test_images = test_images.reshape(test_images.shape[0], img_rows, img_cols, img_channels)
        self.input_shape = (img_rows, img_cols, img_channels)
        # Print the number of training and test samples
        print(train_images.shape[0], 'train samples')
        print(test_images.shape[0], 'test samples')
        # Convert the pixel data to float so it can be normalized
        train_images = train_images.astype('float32')
        test_images = test_images.astype('float32')
        # Normalize every pixel value to the range 0 to 1
        train_images /= 255
        test_images /= 255
        self.train_images = train_images
        self.test_images = test_images
        self.train_labels = train_labels
        self.test_labels = test_labels
        self.nb_classes = nb_classes

# Build the CNN model
class CNN(tf.keras.Model):
    # Model initialization
    def __init__(self):
        super().__init__()
        self.conv1 = tf.keras.layers.Conv2D(
            filters=32,             # number of convolution kernels (filters)
            kernel_size=[3, 3],     # kernel (receptive field) size
            padding='same',         # padding strategy ('valid' or 'same')
            activation=tf.nn.relu,  # activation function
        )
        self.conv3 = tf.keras.layers.Conv2D(filters=32, kernel_size=[3, 3], activation=tf.nn.relu)
        self.pool3 = tf.keras.layers.MaxPool2D(pool_size=[2, 2])
        self.conv4 = tf.keras.layers.Conv2D(filters=64, kernel_size=[3, 3], padding='same', activation=tf.nn.relu)
        self.conv5 = tf.keras.layers.Conv2D(filters=64, kernel_size=[3, 3], activation=tf.nn.relu)
        self.pool4 = tf.keras.layers.MaxPool2D(pool_size=[2, 2])
        self.flaten1 = tf.keras.layers.Flatten()
        self.dense3 = tf.keras.layers.Dense(units=512, activation=tf.nn.relu)
        self.dense4 = tf.keras.layers.Dense(units=5)  # final classification layer: 5 units, one per person

    # Model output
    def call(self, inputs):
        x = self.conv1(inputs)
        x = self.conv3(x)
        x = self.pool3(x)
        x = self.conv4(x)
        x = self.conv5(x)
        x = self.pool4(x)
        x = self.flaten1(x)
        x = self.dense3(x)
        x = self.dense4(x)
        output = tf.nn.softmax(x)
        return output

    # Recognize a face
    def face_predict(self, image):
        image = resize_image(image)
        image = image.reshape((1, IMAGE_SIZE, IMAGE_SIZE, 1))
        # Convert to float and normalize
        image = image.astype('float32')
        image /= 255
        # Probability of the input belonging to each class
        result = self.predict(image)
        # print('result:', result[0])
        # Return the class probabilities
        return result[0]

if __name__ == '__main__':
    learning_rate = 0.001  # learning rate
    batch = 32  # batch size
    EPOCHS = 120  # number of training epochs
    dataset = Dataset('./face_data/')  # all the data is stored under this folder
    dataset.load()
    model = CNN()  # initialize the model
    optimizer = tf.keras.optimizers.Adam(learning_rate=learning_rate)  # optimizer
    # Loss function: the labels are integer class ids and the model outputs softmax probabilities
    loss_object = tf.keras.losses.SparseCategoricalCrossentropy()
    train_loss = tf.keras.metrics.Mean(name='train_loss')  # tracks the training loss
    train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')  # tracks the training accuracy
    test_loss = tf.keras.metrics.Mean(name='test_loss')  # tracks the test loss
    test_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')  # tracks the test accuracy

    @tf.function
    def train_step(images, labels):
        with tf.GradientTape() as tape:
            predictions = model(images)
            loss = loss_object(labels, predictions)
        gradients = tape.gradient(loss, model.trainable_variables)
        optimizer.apply_gradients(zip(gradients, model.trainable_variables))  # the optimizer updates the weights
        train_loss(loss)  # update the loss metric
        train_accuracy(labels, predictions)  # update the accuracy metric

    @tf.function
    def test_step(images, labels):
        predictions = model(images)
        t_loss = loss_object(labels, predictions)
        test_loss(t_loss)
        test_accuracy(labels, predictions)

    for epoch in range(EPOCHS):
        # Reset the metrics so each epoch reports its own values instead of a running average
        # over all epochs (reset_states is the TF 2.0 name; newer releases call it reset_state)
        for metric in (train_loss, train_accuracy, test_loss, test_accuracy):
            metric.reset_states()
        train_ds = tf.data.Dataset.from_tensor_slices((dataset.train_images, dataset.train_labels)).shuffle(300).batch(batch)
        test_ds = tf.data.Dataset.from_tensor_slices((dataset.test_images, dataset.test_labels)).shuffle(300).batch(batch)
        for images, labels in train_ds:
            train_step(images, labels)
        for test_images, test_labels in test_ds:
            test_step(test_images, test_labels)
        template = 'Epoch {} \nTrain Loss:{:.2f},Train Accuracy:{:.2%}\nTest Loss :{:.2f},Test Accuracy :{:.2%}'
        print(template.format(epoch + 1, train_loss.result(), train_accuracy.result(), test_loss.result(), test_accuracy.result()))
    model.save_weights('./model/face1')  # save the model weights under the name face1

When the run finishes, the face1 model weight files will have been written to the ./model directory.
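To get a sense of what those weights contain, it helps to trace how the 64×64 input shrinks as it passes through the layers defined in `CNN`. The sketch below does the arithmetic in plain Python. It assumes stride-1 convolutions and 2×2 pooling with a stride of 2, which are the Keras defaults for the layers as written; the helper names are made up for this example.

```python
def conv_out(size, kernel=3, padding="valid"):
    """Output width/height of a stride-1 convolution."""
    return size if padding == "same" else size - kernel + 1


def pool_out(size, pool=2):
    """Output width/height of a max-pool whose stride equals its pool size."""
    return size // pool


def flattened_features(image_size=64):
    """Return (side length, flattened feature count) after pool4."""
    size = conv_out(image_size, padding="same")  # conv1
    size = conv_out(size, padding="valid")       # conv3
    size = pool_out(size)                        # pool3
    size = conv_out(size, padding="same")        # conv4
    size = conv_out(size, padding="valid")       # conv5
    size = pool_out(size)                        # pool4
    return size, size * size * 64                # conv5 has 64 filters


if __name__ == "__main__":
    side, features = flattened_features(64)
    print(side, features)
```

For a 64×64 input this gives a 14×14 map with 64 channels, or 12544 values after flattening, so the 512-unit dense layer that follows holds roughly 6.4 million weights and accounts for most of the model's size.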

The last step is to use the model for face recognition.

face_data_apply.py

# -*- coding: utf-8 -*-
import numpy as np
import cv2
from PIL import Image, ImageDraw, ImageFont
from face_data_train import CNN

if __name__ == '__main__':
    # Load the model
    model = CNN()
    model.load_weights('./model/face1')  # read the saved model weights
    # Color of the box drawn around each face (BGR order)
    color = (0, 255, 0)
    # Capture live video from the given camera
    cap = cv2.VideoCapture(0)
    # Local path of the Haar cascade face detector
    cascade_path = "C:/Users/Administrator/AppData/Local/Programs/Python/Python36/Lib/site-packages/cv2/data/haarcascade_frontalface_alt2.xml"
    # Load the detector and the font once, rather than on every frame
    cascade = cv2.CascadeClassifier(cascade_path)
    font = ImageFont.truetype("simkai.ttf", 20, encoding="utf-8")  # argument 1: font file path, argument 2: font size
    # Detect and recognize faces in a loop
    while True:
        ret, frame = cap.read()  # read one video frame
        if not ret:
            continue
        # Convert to grayscale to reduce the amount of computation
        frame_gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)
        # Use the detector to find which regions are faces
        faceRects = cascade.detectMultiScale(frame_gray, scaleFactor=1.2, minNeighbors=3, minSize=(32, 32))
        if len(faceRects) > 0:
            for faceRect in faceRects:
                x, y, w, h = faceRect
                # Crop the face region and pass it to the model to identify the person.
                # The start indices are clamped at 0 so a face near the edge does not produce a negative slice index.
                image = frame[max(y - 10, 0): y + h + 10, max(x - 10, 0): x + w + 10]
                face_probe = model.face_predict(image)  # predicted probabilities
                cv2.rectangle(frame, (x - 10, y - 10), (x + w + 10, y + h + 10), color, thickness=2)
                frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)  # OpenCV stores channels as BGR, PIL as RGB
                pilimg = Image.fromarray(frame)
                draw = ImageDraw.Draw(pilimg)  # print every person's predicted probability on the frame
                draw.text((x + 25, y - 95), '小程:{:.2%}'.format(face_probe[0]), (255, 0, 0), font=font)
                draw.text((x + 25, y - 70), '小戴:{:.2%}'.format(face_probe[1]), (255, 0, 0), font=font)
                draw.text((x + 25, y - 45), '小魏:{:.2%}'.format(face_probe[2]), (255, 0, 0), font=font)
                draw.text((x + 25, y - 20), '小郑:{:.2%}'.format(face_probe[3]), (255, 0, 0), font=font)
                draw.text((x + 25, y - 120), '小朱:{:.2%}'.format(face_probe[4]), (255, 0, 0), font=font)
                frame = cv2.cvtColor(np.array(pilimg), cv2.COLOR_RGB2BGR)
        cv2.imshow("ShowTime", frame)
        # Wait 10 milliseconds for a key press
        k = cv2.waitKey(10)
        # Leave the loop if q was pressed
        if k & 0xFF == ord('q'):
            break
    # Release the camera and destroy all windows
    cap.release()
    cv2.destroyAllWindows()

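The results were as follows.

The program recognized me successfully. When my roommates tried it, the recognition rate was also high, and I did not see any misidentifications in these informal tests.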
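One caveat: a softmax output always sums to 1 over the five known people, so a face the model has never seen will still be assigned to one of them. A common heuristic is to report "unknown" when the highest probability is below some threshold. The sketch below shows the idea in plain Python; the function `top_prediction` and the 0.8 threshold are assumptions for illustration, and a suitable threshold would have to be found by experiment.

```python
def top_prediction(probabilities, names, threshold=0.8):
    """Return (name, probability) for the most likely class.

    If the best probability is below threshold, the name is "unknown".
    """
    if len(probabilities) != len(names):
        raise ValueError("probabilities and names must have the same length")
    best = max(range(len(names)), key=lambda i: probabilities[i])
    prob = float(probabilities[best])
    name = names[best] if prob >= threshold else "unknown"
    return name, prob


if __name__ == "__main__":
    names = ["chengzihang", "daiweicheng", "weikeke", "zhengyizhe", "zhuguanzhe"]
    print(top_prediction([0.91, 0.03, 0.02, 0.02, 0.02], names))
    print(top_prediction([0.30, 0.25, 0.20, 0.15, 0.10], names))
```

In the application script, the array returned by `model.face_predict(image)` could be passed in as `probabilities`.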

That completes the face recognition walkthrough. I hope it offers some reference and help to anyone interested in this area.