Syncing MySQL data to Elasticsearch (ES): single-table and multi-table sync

Multi-table sync:

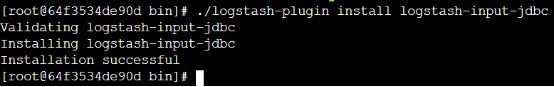

1. Install the logstash-input-jdbc plugin

Reference: https://blog.csdn.net/chenguihua5/article/details/90316484

It is worth reading the reference first.

After switching the gem source as described there, run the following:

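From the Logstash installation directory:

```shell
cd bin
./logstash-plugin install logstash-input-jdbc
```

If the command finishes by reporting a successful installation, the plugin is installed.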
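To check the installation from a script, here is a minimal Python sketch. It assumes Logstash is installed under `/usr/local/logstash-6.3.0` and that `bin/logstash-plugin list` prints one plugin name per line. The helper names `parse_plugin_list` and `jdbc_plugin_installed` are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import Set


def parse_plugin_list(output: str) -> Set[str]:
    """Collect plugin names from `logstash-plugin list` output (one name per line assumed)."""
    return {line.strip() for line in output.splitlines() if line.strip()}


def jdbc_plugin_installed(logstash_home: str = "/usr/local/logstash-6.3.0") -> bool:
    """Run `bin/logstash-plugin list` and report whether logstash-input-jdbc is present."""
    cli = Path(logstash_home) / "bin" / "logstash-plugin"
    result = subprocess.run([str(cli), "list"], capture_output=True, text=True, check=True)
    return "logstash-input-jdbc" in parse_plugin_list(result.stdout)
```

Calling `jdbc_plugin_installed()` on the Logstash machine (or inside its container) returns `True` once the install step has succeeded.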

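2. In logstash-6.3.0, go to the bin directory under the installation directory (that is usually where these files go). Create jdbc.conf and jdbc.sql with `touch <filename>`. For additional tables, also create jdbc1.sql, jdbc2.sql, …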

3. Configure jdbc.conf (single table)

```
input {
  stdin {
  }
  jdbc {
    jdbc_connection_string => "jdbc:mysql://ip:3306/mi?characterEncoding=UTF-8&useSSL=false&autoReconnect=true"
    jdbc_user => "root"
    jdbc_password => "123456"
    jdbc_driver_library => "/usr/local/logstash-6.3.0/lib/jars/mysql-connector-java-5.1.43.jar"
    jdbc_driver_class => "com.mysql.jdbc.Driver"
    jdbc_paging_enabled => true
    jdbc_page_size => 50000
    codec => plain { charset => "UTF-8" }
    # Without use_column_value => true, tracking_column is ignored and
    # :sql_last_value holds the time of the previous run.
    tracking_column => update_time
    record_last_run => true
    last_run_metadata_path => "/usr/local/logstash-6.3.0/lastrun/.logstash_jdbc_last_run"
    jdbc_default_timezone => "Asia/Shanghai"
    statement_filepath => "/usr/local/logstash-6.3.0/bin/jdbc.sql"
    clean_run => false
    # Cron syntax: run the query once every minute
    schedule => "* * * * *"
    type => "std"
  }
}

filter {
  json {
    source => "message"
    remove_field => ["message"]
  }
}

output {
  elasticsearch {
    hosts => "ip:9200"
    index => "mi"
    document_type => "mi_product"
    # Use the MySQL id as the ES document ID, so a changed row overwrites its document
    document_id => "%{id}"
  }
  stdout {
    codec => json_lines
  }
}
```
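With `jdbc_paging_enabled`, the plugin does not send the statement from `jdbc.sql` unchanged. Roughly speaking, it wraps the statement in a subquery and fetches `jdbc_page_size` rows at a time with `LIMIT`/`OFFSET`. The Python sketch below imitates that behavior against an in-memory SQLite database standing in for MySQL. The exact SQL the plugin generates may differ, and `paged_rows` is a hypothetical name.

```python
import sqlite3
from typing import Iterator, Tuple


def paged_rows(conn: sqlite3.Connection, statement: str, page_size: int) -> Iterator[Tuple]:
    """Yield the rows of `statement` page by page, like jdbc_paging_enabled + jdbc_page_size."""
    offset = 0
    while True:
        # The statement becomes a subquery, so it must not end with a ';'.
        page = conn.execute(
            f"SELECT * FROM ({statement}) AS t1 LIMIT ? OFFSET ?",
            (page_size, offset),
        ).fetchall()
        if not page:
            break
        yield from page
        offset += page_size
```

`LIMIT`/`OFFSET` without `ORDER BY` does not guarantee row order. When a table spans several pages, adding an `ORDER BY` on a unique column keeps pages from overlapping or skipping rows.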

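When you paste this into the file, the beginning of the content can get lost. Check the first lines and retype anything that is missing.

Configure jdbc.conf (multi-table)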

```
input {
  stdin {
  }
  jdbc {
    type => "mi_product"
    jdbc_connection_string => "jdbc:mysql://ip:3306/mi?characterEncoding=UTF-8&useSSL=false&autoReconnect=true"
    jdbc_user => "root"
    jdbc_password => "123456"
    jdbc_driver_library => "/usr/local/logstash-6.3.0/lib/jars/mysql-connector-java-5.1.43.jar"
    jdbc_driver_class => "com.mysql.jdbc.Driver"
    jdbc_paging_enabled => true
    jdbc_page_size => 50000
    codec => plain { charset => "UTF-8" }
    tracking_column => update_time
    record_last_run => true
    # Each jdbc input needs its own last-run file; if all three share one,
    # they overwrite each other's stored :sql_last_value.
    last_run_metadata_path => "/usr/local/logstash-6.3.0/lastrun/.logstash_jdbc_last_run_mi_product"
    jdbc_default_timezone => "Asia/Shanghai"
    statement_filepath => "/usr/local/logstash-6.3.0/bin/jdbc.sql"
    clean_run => false
    schedule => "* * * * *"
  }
  jdbc {
    type => "mi_product_classification"
    jdbc_connection_string => "jdbc:mysql://ip:3306/mi?characterEncoding=UTF-8&useSSL=false&autoReconnect=true"
    jdbc_user => "root"
    jdbc_password => "123456"
    jdbc_driver_library => "/usr/local/logstash-6.3.0/lib/jars/mysql-connector-java-5.1.43.jar"
    jdbc_driver_class => "com.mysql.jdbc.Driver"
    jdbc_paging_enabled => true
    jdbc_page_size => 50000
    codec => plain { charset => "UTF-8" }
    tracking_column => update_time
    record_last_run => true
    last_run_metadata_path => "/usr/local/logstash-6.3.0/lastrun/.logstash_jdbc_last_run_mi_product_classification"
    jdbc_default_timezone => "Asia/Shanghai"
    statement_filepath => "/usr/local/logstash-6.3.0/bin/jdbc1.sql"
    clean_run => false
    schedule => "* * * * *"
  }
  jdbc {
    type => "mi_product_search_criteria"
    jdbc_connection_string => "jdbc:mysql://ip:3306/mi?characterEncoding=UTF-8&useSSL=false&autoReconnect=true"
    jdbc_user => "root"
    jdbc_password => "123456"
    jdbc_driver_library => "/usr/local/logstash-6.3.0/lib/jars/mysql-connector-java-5.1.43.jar"
    jdbc_driver_class => "com.mysql.jdbc.Driver"
    jdbc_paging_enabled => true
    jdbc_page_size => 50000
    codec => plain { charset => "UTF-8" }
    tracking_column => update_time
    record_last_run => true
    last_run_metadata_path => "/usr/local/logstash-6.3.0/lastrun/.logstash_jdbc_last_run_mi_product_search_criteria"
    jdbc_default_timezone => "Asia/Shanghai"
    statement_filepath => "/usr/local/logstash-6.3.0/bin/jdbc2.sql"
    clean_run => false
    schedule => "* * * * *"
  }
}

filter {
  json {
    source => "message"
    remove_field => ["message"]
  }
}

output {
  # Route each event to an index according to the type set by its jdbc input
  if [type] == "mi_product" {
    elasticsearch {
      hosts => "ip:9200"
      index => "mi"
      document_id => "%{id}"
    }
  }
  if [type] == "mi_product_classification" {
    elasticsearch {
      hosts => "ip:9200"
      index => "miclassification"
      document_id => "%{id}"
    }
  }
  if [type] == "mi_product_search_criteria" {
    elasticsearch {
      hosts => "ip:9200"
      index => "misearchcriteria"
      document_id => "%{id}"
    }
  }
  stdout {
    codec => json_lines
  }
}
```
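The conditionals in the `output` block work as a lookup. Each event's `type` (set by the `jdbc` input that produced it) selects an index, and `%{id}` becomes the document ID. The Python sketch below restates that routing as a plain function, for illustration only. `INDEX_BY_TYPE` and `route` are hypothetical names, not part of Logstash.

```python
from typing import Dict, Optional, Tuple

# Mirrors the `if [type] == ...` branches of the output block.
INDEX_BY_TYPE: Dict[str, str] = {
    "mi_product": "mi",
    "mi_product_classification": "miclassification",
    "mi_product_search_criteria": "misearchcriteria",
}


def route(event: dict) -> Optional[Tuple[str, str]]:
    """Return (index, document_id) for an event, or None if no branch matches."""
    index = INDEX_BY_TYPE.get(event.get("type", ""))
    if index is None:
        return None  # no elasticsearch branch applies; only stdout receives the event
    return index, str(event["id"])  # document_id => "%{id}"
```

Because `stdout` sits outside the conditionals, the console shows the rows of all three tables, even though each table lands in a different index.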

In the output section at the bottom of the file, change the ES address (`hosts`) to your own virtual machine's address.

4. Configure jdbc.sql. Use the SQL provided by the instructor, and change every time comparison to `> :sql_last_value`.

```sql
# Sync data based on the update time
select mp.id as id, mp.product_name, mp.sub_title, mp.description, mp.sale, sku.price
from mi_product mp
LEFT JOIN mi_product_sku sku ON mp.id = sku.product_id
WHERE mp.updated_time > :sql_last_value
```
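The Python sketch below simulates one scheduled run against SQLite, standing in for MySQL, to show how `:sql_last_value` and `document_id => "%{id}"` interact. It assumes the default `use_column_value => false`, so the value passed in is the time of the previous run. It models the ES index as a dict keyed by `id`. The tables are trimmed to the columns the query needs, and `sync_once` is a hypothetical helper.

```python
import sqlite3
from datetime import datetime
from typing import Dict

QUERY = """
SELECT mp.id, mp.product_name, sku.price
FROM mi_product mp
LEFT JOIN mi_product_sku sku ON mp.id = sku.product_id
WHERE mp.updated_time > ?
"""


def sync_once(conn: sqlite3.Connection, index: Dict[int, dict], sql_last_value: str) -> str:
    """Run the query once, upsert each row by id, and return the next :sql_last_value."""
    run_started = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    for product_id, name, price in conn.execute(QUERY, (sql_last_value,)):
        # Same id -> same document: a later row overwrites an earlier one.
        index[product_id] = {"product_name": name, "price": price}
    return run_started
```

In this simulation, as with the SQL above, two consequences follow. First, a product with several SKUs yields several rows with the same `id`, so only one SKU's `price` survives in the document. Second, the query compares only `mp.updated_time`, so a change made only in `mi_product_sku` is not picked up until the product row itself is updated.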

5. In logstash-6.3.0, create a lastrun folder: `mkdir lastrun`

6. Inside lastrun, create the file .logstash_jdbc_last_run: `touch .logstash_jdbc_last_run`
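Steps 5 and 6 can also be done from a script. This minimal Python sketch assumes the install path `/usr/local/logstash-6.3.0` used throughout. `prepare_last_run_file` is a hypothetical name.

```python
from pathlib import Path


def prepare_last_run_file(logstash_home: str = "/usr/local/logstash-6.3.0",
                          name: str = ".logstash_jdbc_last_run") -> Path:
    """Create <logstash_home>/lastrun/<name>; an existing file keeps its contents."""
    last_run = Path(logstash_home) / "lastrun" / name
    last_run.parent.mkdir(parents=True, exist_ok=True)  # step 5: mkdir lastrun
    last_run.touch(exist_ok=True)                       # step 6: touch the last-run file
    return last_run
```

If each `jdbc` input points at its own last-run file, call the function once per input with a distinct `name`.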

7. Copy mysql-connector-java-5.1.43.jar from the virtual machine into the Logstash container at /usr/local/logstash-6.3.0/lib/jars/. This is an absolute path; create it if it does not exist.

```shell
docker cp /usr/local/src/mysql-connector-java-5.1.43.jar 64f3534de90d:/usr/local/logstash-6.3.0/lib/jars/
```
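`docker cp` fails when the destination path ends with `/` and that directory does not exist in the container. A scripted version can therefore create the directory first with `docker exec <container> mkdir -p`. The Python sketch below assumes the container is running. The container ID and paths are taken from the command above and should be replaced with your own. `copy_commands` and `copy_driver` are hypothetical names.

```python
import subprocess
from typing import List

CONTAINER = "64f3534de90d"  # container ID from the example; use your own
JAR = "/usr/local/src/mysql-connector-java-5.1.43.jar"
JARS_DIR = "/usr/local/logstash-6.3.0/lib/jars/"


def copy_commands(container: str, jar: str, jars_dir: str) -> List[List[str]]:
    """Build the commands: create the target directory, then copy the driver jar."""
    return [
        ["docker", "exec", container, "mkdir", "-p", jars_dir],
        ["docker", "cp", jar, f"{container}:{jars_dir}"],
    ]


def copy_driver(container: str = CONTAINER, jar: str = JAR, jars_dir: str = JARS_DIR) -> None:
    """Run the commands in order; check=True stops at the first failing command."""
    for cmd in copy_commands(container, jar, jars_dir):
        subprocess.run(cmd, check=True)
```

Keeping the command construction separate from execution makes it easy to print the commands and inspect them before running anything.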

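8. From the bin directory, run:

```shell
./logstash -f jdbc.conf
```

Troubleshooting:

Error 1: `Logstash could not be started because there is already another instance using the config`

Fix: go to the data directory under logstash-6.3.0. List it with `ls -lah` (the lock file is hidden), then delete `.lock`:

```shell
cd /usr/local/logstash-6.3.0/data
ls -lah
rm -rf .lock
```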
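The same cleanup as a Python sketch, assuming the data directory is `/usr/local/logstash-6.3.0/data`. `remove_stale_lock` is a hypothetical name. The lock exists to stop two instances from sharing one data directory, so removing it only makes sense when no other Logstash process is actually running.

```python
from pathlib import Path


def remove_stale_lock(data_dir: str = "/usr/local/logstash-6.3.0/data") -> bool:
    """Delete <data_dir>/.lock if it exists; return True when a file was removed."""
    lock = Path(data_dir) / ".lock"
    if lock.is_file():
        lock.unlink()  # same effect as `rm -rf .lock` for a regular file
        return True
    return False
```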