Implementing audio/video synchronization with FFmpeg

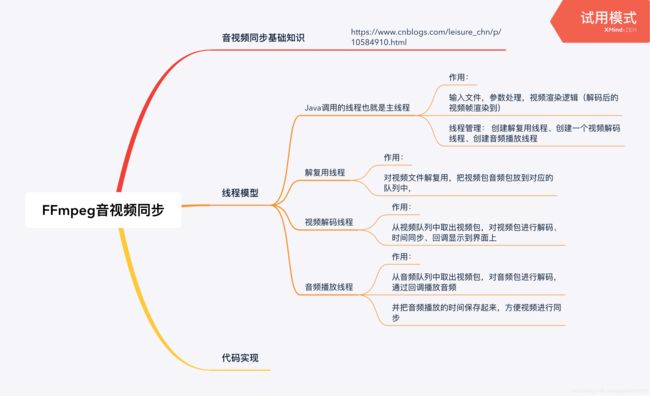

To kick things off, here is the overview diagram shown above.

Source of the diagram: https://www.cnblogs.com/leisure_chn/p/10584910.html

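Before playback there is an initialization step, `initPlayer`. Its main job is to convert the Surface passed in from Java into an `ANativeWindow`.

Note: call this method only after the view is displayed (that is, once its Surface has been created); otherwise the surface will be NULL.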

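```cpp
#include <jni.h>
#include <android/native_window.h>
#include <android/native_window_jni.h>

// Globals shared with the rest of the player (defined elsewhere in the project):
// ANativeWindow *window; FFmpegVideo *ffmpegVideo;

extern "C"
JNIEXPORT void JNICALL
Java_com_houde_ffmpeg_player_VideoView_initPlayer(JNIEnv *env, jobject instance, jobject surface) {
    // Release the window from a previous call, if any
    if (window) {
        ANativeWindow_release(window);
        window = nullptr;
    }
    // Get the native window backing the Java Surface
    window = ANativeWindow_fromSurface(env, surface);
    if (window == nullptr) {
        LOGE("%s", "window is NULL !!!");
        return;
    }
    // If the video decoder is already open, size the window buffers to the video
    if (ffmpegVideo && ffmpegVideo->codec) {
        ANativeWindow_setBuffersGeometry(window, ffmpegVideo->codec->width,
                                         ffmpegVideo->codec->height,
                                         WINDOW_FORMAT_RGBA_8888);
    }
}
```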

Once the window has been initialized, `play` can be called. It starts by initializing the FFmpeg components:

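```cpp
extern "C" {
#include <libavformat/avformat.h>
#include <libavcodec/avcodec.h>
#include <libavutil/time.h>
}
#include <pthread.h>

// Opens the input and reads the stream info. Returns false on failure.
bool init() {
    LOGE("Initializing FFmpeg");
    // 1. Register components: required before FFmpeg 4.0, deprecated in 4.0, removed in 5.0
#if LIBAVFORMAT_VERSION_INT < AV_VERSION_INT(58, 9, 100)
    av_register_all();
#endif
    avformat_network_init();
    // Demuxer (container format) context
    pFormatCtx = avformat_alloc_context();
    // 2. Open the input video file (on failure FFmpeg frees pFormatCtx and sets it to NULL)
    if (avformat_open_input(&pFormatCtx, inputPath, NULL, NULL) != 0) {
        LOGE("%s", "Failed to open the input file");
        return false;
    }
    // 3. Read the stream information
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0) {
        LOGE("%s", "Failed to read stream info");
        avformat_close_input(&pFormatCtx);
        return false;
    }
    // Total playback duration
    if (pFormatCtx->duration != AV_NOPTS_VALUE) {
        duration = pFormatCtx->duration;  // AV_TIME_BASE units (microseconds)
    }
    return true;
}

extern "C"
JNIEXPORT void JNICALL
Java_com_houde_ffmpeg_player_VideoView_play(JNIEnv *env, jobject instance, jstring inputPath_) {
    inputPath = env->GetStringUTFChars(inputPath_, 0);
    bool opened = init();
    // init() is done with inputPath, so the string can be released right away
    env->ReleaseStringUTFChars(inputPath_, inputPath);
    if (!opened) {
        return;
    }
    ffmpegVideo = new FFmpegVideo;
    ffmpegMusic = new FFmpegMusic;
    ffmpegVideo->setPlayCall(call_video_play);
    // Start the begin (demuxing) thread
    pthread_create(&p_tid, NULL, begin, NULL);
}
```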
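The `duration` read in `init()` is stored in `AV_TIME_BASE` units, i.e. microseconds. As a small, hedged illustration (the helper `formatDuration` and the local constant are assumptions, not part of the project), this is how such a value could be turned into an `mm:ss` string for a progress display:

```cpp
#include <cassert>
#include <cstdint>
#include <cstdio>
#include <string>

// Local stand-in for FFmpeg's AV_TIME_BASE: duration values count microseconds.
constexpr int64_t kTimeBaseUs = 1000000;

// Hypothetical helper: format an AVFormatContext::duration value as "mm:ss",
// dropping fractional seconds.
std::string formatDuration(int64_t durationUs) {
    assert(durationUs >= 0);
    const int64_t totalSeconds = durationUs / kTimeBaseUs;
    const long long minutes = static_cast<long long>(totalSeconds / 60);
    const long long seconds = static_cast<long long>(totalSeconds % 60);
    char buf[32];
    std::snprintf(buf, sizeof(buf), "%02lld:%02lld", minutes, seconds);
    return std::string(buf);
}
```

For example, a `duration` of 125500000 formats as `02:05`. Inputs without a known duration report `AV_NOPTS_VALUE`, which is why `init()` checks for it before copying the value.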

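Demuxing thread:

`begin` is the demuxing thread. For each stream it looks up a decoder and opens an `AVCodecContext`, remembering the indices of the video and audio streams. It then starts the audio and video playback threads and enters the demux loop, which uses each packet's stream index to push it into the matching audio or video packet queue.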

```cpp
void *begin(void *args) {
    // Find the video and audio streams and open a decoder for each
    for (unsigned int i = 0; i < pFormatCtx->nb_streams; ++i) {
        AVCodecParameters *params = pFormatCtx->streams[i]->codecpar;
        // Only video and audio are played; skip subtitle and data streams
        if (params->codec_type != AVMEDIA_TYPE_VIDEO &&
            params->codec_type != AVMEDIA_TYPE_AUDIO) {
            continue;
        }
        // Look up a decoder (returns const AVCodec * since FFmpeg 5.0)
        const AVCodec *avCodec = avcodec_find_decoder(params->codec_id);
        if (!avCodec) {
            LOGE("No decoder for stream %u", i);
            continue;
        }
        // Allocate a decoder context and copy the stream parameters into it
        AVCodecContext *codecContext = avcodec_alloc_context3(avCodec);
        avcodec_parameters_to_context(codecContext, params);
        if (avcodec_open2(codecContext, avCodec, NULL) < 0) {
            LOGE("Failed to open the decoder");
            avcodec_free_context(&codecContext);
            continue;
        }
        if (params->codec_type == AVMEDIA_TYPE_VIDEO) {
            // Video stream
            ffmpegVideo->index = i;
            ffmpegVideo->setAvCodecContext(codecContext);
            ffmpegVideo->time_base = pFormatCtx->streams[i]->time_base;
            if (window) {
                ANativeWindow_setBuffersGeometry(window, ffmpegVideo->codec->width,
                                                 ffmpegVideo->codec->height,
                                                 WINDOW_FORMAT_RGBA_8888);
            }
        } else {
            // Audio stream
            ffmpegMusic->index = i;
            ffmpegMusic->setAvCodecContext(codecContext);
            ffmpegMusic->time_base = pFormatCtx->streams[i]->time_base;
        }
    }
    // Start the audio and video playback threads
    ffmpegVideo->setFFmepegMusic(ffmpegMusic);
    ffmpegMusic->play();
    ffmpegVideo->play();
    isPlay = 1;
    // Packet reused for every av_read_frame call
    packet = av_packet_alloc();
    int ret;
    // The demuxing loop
    while (isPlay) {
        ret = av_read_frame(pFormatCtx, packet);
        if (ret == 0) {
            // put() is assumed to keep its own reference to the packet data
            if (ffmpegVideo && ffmpegVideo->isPlay &&
                packet->stream_index == ffmpegVideo->index) {
                ffmpegVideo->put(packet);
            } else if (ffmpegMusic && ffmpegMusic->isPlay &&
                       packet->stream_index == ffmpegMusic->index) {
                ffmpegMusic->put(packet);
            }
            av_packet_unref(packet);
        } else if (ret == AVERROR_EOF) {
            // Everything has been read, but not necessarily played:
            // wait for both queues to drain
            while (isPlay) {
                if (ffmpegVideo->queue.empty() && ffmpegMusic->queue.empty()) {
                    break;
                }
                av_usleep(10000);
            }
            break;  // leave the loop instead of calling av_read_frame again after EOF
        } else {
            LOGE("av_read_frame failed: %d", ret);
            break;
        }
    }
    // Demuxing is done: stop the playback threads
    isPlay = 0;
    if (ffmpegMusic && ffmpegMusic->isPlay) {
        ffmpegMusic->stop();
    }
    if (ffmpegVideo && ffmpegVideo->isPlay) {
        ffmpegVideo->stop();
    }
    // Release resources
    av_packet_free(&packet);
    avformat_close_input(&pFormatCtx);  // pairs with avformat_open_input in init()
    pthread_exit(0);
}
```
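The demux loop only calls `put`, and the article does not show how the packet queues are implemented (the cleanup code suggests a plain `std::vector`). A minimal sketch of a thread-safe alternative, assuming a mutex plus a condition variable; `FakePacket`, `PacketQueue` and `routePacket` are hypothetical names, and a real version would hold `AVPacket *` values filled with `av_packet_ref`:

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <mutex>

// Hypothetical stand-in for AVPacket: only the fields the router needs.
struct FakePacket {
    int stream_index;
    int64_t pts;
};

// Blocking FIFO: put() is called by the demux thread, get() by a decoder thread.
class PacketQueue {
public:
    void put(const FakePacket &pkt) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            queue_.push_back(pkt);  // stores a copy, like av_packet_ref into a new packet
        }
        cond_.notify_one();
    }

    // Blocks until a packet is available or stop() was called.
    // Returns false only when the queue is stopped and empty.
    bool get(FakePacket &out) {
        std::unique_lock<std::mutex> lock(mutex_);
        cond_.wait(lock, [this] { return stopped_ || !queue_.empty(); });
        if (queue_.empty()) return false;
        out = queue_.front();
        queue_.pop_front();
        return true;
    }

    // Wake every blocked reader so decoder threads can exit.
    void stop() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopped_ = true;
        }
        cond_.notify_all();
    }

    std::size_t size() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

private:
    std::deque<FakePacket> queue_;
    std::mutex mutex_;
    std::condition_variable cond_;
    bool stopped_ = false;
};

// Route one demuxed packet to the queue that owns its stream; others are dropped.
void routePacket(const FakePacket &pkt, int videoIndex, int audioIndex,
                 PacketQueue &videoQueue, PacketQueue &audioQueue) {
    if (pkt.stream_index == videoIndex) {
        videoQueue.put(pkt);
    } else if (pkt.stream_index == audioIndex) {
        audioQueue.put(pkt);
    }
}
```

The `stop()` method lets a decoder thread that is blocked in `get()` wake up and exit when playback ends, instead of waiting forever for a packet that will never arrive.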

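Audio playback thread:

Audio is played through OpenSL ES. After the OpenSL ES player has been created, its buffer-queue callback fetches the next chunk of decoded PCM data every time it fires and enqueues it, so the audio device keeps receiving data to play.

1. Initialize OpenSL ES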

```cpp
#include <SLES/OpenSLES.h>
#include <SLES/OpenSLES_Android.h>

int FFmpegMusic::CreatePlayer() {
    LOGE("Creating the OpenSL ES player");
    SLresult result;
    // Create the engine object
    result = slCreateEngine(&engineObject, 0, NULL, 0, NULL, NULL);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Realize the engine object (synchronously)
    result = (*engineObject)->Realize(engineObject, SL_BOOLEAN_FALSE);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Get the engine interface
    result = (*engineObject)->GetInterface(engineObject, SL_IID_ENGINE, &engineEngine);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Create the output mix
    result = (*engineEngine)->CreateOutputMix(engineEngine, &outputMixObject, 0, 0, 0);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Realize the output mix
    result = (*outputMixObject)->Realize(outputMixObject, SL_BOOLEAN_FALSE);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Optional environmental reverb. The interface was not requested when the
    // output mix was created, so this may fail; that is not treated as an error.
    result = (*outputMixObject)->GetInterface(outputMixObject, SL_IID_ENVIRONMENTALREVERB,
                                              &outputMixEnvironmentalReverb);
    if (SL_RESULT_SUCCESS == result) {
        const SLEnvironmentalReverbSettings settings = SL_I3DL2_ENVIRONMENT_PRESET_DEFAULT;
        (*outputMixEnvironmentalReverb)->SetEnvironmentalReverbProperties(
                outputMixEnvironmentalReverb, &settings);
    }
    // Data source: an Android simple buffer queue with 2 buffers carrying
    // 44.1 kHz, stereo, 16-bit little-endian PCM (must match the resampler output)
    SLDataLocator_AndroidSimpleBufferQueue android_queue = {
            SL_DATALOCATOR_ANDROIDSIMPLEBUFFERQUEUE, 2};
    SLDataFormat_PCM pcm = {SL_DATAFORMAT_PCM, 2, SL_SAMPLINGRATE_44_1,
                            SL_PCMSAMPLEFORMAT_FIXED_16, SL_PCMSAMPLEFORMAT_FIXED_16,
                            SL_SPEAKER_FRONT_LEFT | SL_SPEAKER_FRONT_RIGHT,
                            SL_BYTEORDER_LITTLEENDIAN};
    SLDataSource slDataSource = {&android_queue, &pcm};
    // Data sink: the output mix
    SLDataLocator_OutputMix outputMix = {SL_DATALOCATOR_OUTPUTMIX, outputMixObject};
    SLDataSink audioSnk = {&outputMix, NULL};
    // Interfaces to request on the player
    const SLInterfaceID ids[3] = {SL_IID_BUFFERQUEUE, SL_IID_EFFECTSEND, SL_IID_VOLUME};
    const SLboolean req[3] = {SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE, SL_BOOLEAN_TRUE};
    // Create the audio player; pass 3 so that SL_IID_VOLUME is requested as well
    result = (*engineEngine)->CreateAudioPlayer(engineEngine, &bqPlayerObject, &slDataSource,
                                                &audioSnk, 3, ids, req);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Realize the player
    result = (*bqPlayerObject)->Realize(bqPlayerObject, SL_BOOLEAN_FALSE);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Get the play interface
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_PLAY, &bqPlayerPlay);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    // Get the buffer queue interface and register the callback on it
    result = (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_BUFFERQUEUE,
                                             &bqPlayerBufferQueue);
    if (SL_RESULT_SUCCESS != result) {
        return 0;
    }
    (*bqPlayerBufferQueue)->RegisterCallback(bqPlayerBufferQueue, bqPlayerCallback, this);
    // Get the volume interface
    (*bqPlayerObject)->GetInterface(bqPlayerObject, SL_IID_VOLUME, &bqPlayerVolume);
    // Set the play state to playing
    (*bqPlayerPlay)->SetPlayState(bqPlayerPlay, SL_PLAYSTATE_PLAYING);
    // Call the callback once by hand to enqueue the first buffer; after that,
    // OpenSL ES calls it each time a buffer finishes playing
    bqPlayerCallback(bqPlayerBufferQueue, this);
    return 1;
}
```
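`CreatePlayer` ends by calling `bqPlayerCallback` by hand because OpenSL ES only invokes the buffer-queue callback when an enqueued buffer finishes playing; with an empty queue, the first callback never comes. The hypothetical simulation below (no OpenSL ES calls; `FakeBufferQueue` is invented for illustration) shows the chain stalling without that manual first call:

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <utility>
#include <vector>

// Hypothetical simulation of an Android simple buffer queue: the "device"
// plays enqueued buffers one at a time and, after finishing each, invokes the
// registered callback so the app can enqueue the next one.
class FakeBufferQueue {
public:
    using Callback = std::function<void(FakeBufferQueue &)>;

    void registerCallback(Callback cb) { callback_ = std::move(cb); }
    void enqueue(int chunkId) { pending_.push(chunkId); }

    // Simulate the device playing until it runs dry; returns the chunks played.
    std::vector<int> run() {
        std::vector<int> played;
        while (!pending_.empty()) {
            played.push_back(pending_.front());
            pending_.pop();
            if (callback_) callback_(*this);  // "buffer finished" notification
        }
        return played;
    }

private:
    std::queue<int> pending_;
    Callback callback_;
};
```

A producer that enqueues chunk `0`, `1`, `2` and then stops plays nothing when it is only registered, but plays all three when it is also called once by hand before `run()`. That manual call plays the role of `bqPlayerCallback(bqPlayerBufferQueue, this)`: the first buffer goes in, and every completed buffer pulls in the next one until the source runs dry.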

2. The OpenSL ES callback

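```cpp
void bqPlayerCallback(SLAndroidSimpleBufferQueueItf bq, void *context) {
    // Fetch the next chunk of PCM data
    LOGE("PCM callback");
    FFmpegMusic *musicplay = (FFmpegMusic *) context;
    int datasize = getPcm(musicplay);
    if (datasize > 0) {
        // Duration of this chunk = bytes / (sample rate * channels * bytes per sample).
        // The divisor must be a double, otherwise integer division truncates to 0.
        double time = datasize / (44100.0 * 2 * 2);
        // Advance the audio clock past the chunk being enqueued
        musicplay->clock = time + musicplay->clock;
        LOGE("Chunk duration %f, audio clock %f", time, musicplay->clock);
        (*bq)->Enqueue(bq, musicplay->out_buffer, datasize);
        LOGE("Packets left in queue: %zu", musicplay->queue.size());
    }
    // If nothing is enqueued here, OpenSL ES will not call back again
}
```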
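A quick check of the chunk-duration formula in `bqPlayerCallback`, assuming the 44.1 kHz, stereo, 16-bit format configured in `CreatePlayer`: one second of audio is 44100 × 2 × 2 = 176400 bytes, so a 4096-byte chunk lasts about 23.2 ms. The sketch (hypothetical helper names) also shows why the divisor must be floating point: with all-integer operands, any chunk shorter than one second yields 0.

```cpp
#include <cassert>
#include <cmath>

// Seconds of audio held in a chunk of interleaved PCM:
// bytes / (sample_rate * channels * bytes_per_sample).
double pcmChunkSeconds(int bytes, int sampleRate, int channels, int bytesPerSample) {
    assert(sampleRate > 0 && channels > 0 && bytesPerSample > 0);
    // Build the divisor as a double so the division is not truncated.
    return bytes / (static_cast<double>(sampleRate) * channels * bytesPerSample);
}

// All-integer variant for comparison: truncates toward zero.
int pcmChunkSecondsTruncated(int bytes) {
    return bytes / (44100 * 2 * 2);
}
```

With the truncating version the added duration is always 0 for normal chunk sizes, so `clock` would stay at the packet's start time instead of advancing past the chunk just enqueued.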

3. The audio decoding function:

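```cpp
extern "C" {
#include <libswresample/swresample.h>
}

int getPcm(FFmpegMusic *args) {
    AVPacket *avPacket = av_packet_alloc();
    AVFrame *avFrame = av_frame_alloc();
    int size = 0;  // returned as 0 if playback stops before a frame is decoded
    int result;
    LOGE("Preparing to decode");
    // Loop until one packet has been decoded into a frame (or playback stops)
    while (args->isPlay) {
        size = 0;
        args->get(avPacket);
        // Correct the audio clock from the packet timestamp
        if (avPacket->pts != AV_NOPTS_VALUE) {
            args->clock = av_q2d(args->time_base) * avPacket->pts;
        }
        // Decode the compressed packet (e.g. MP3) into a PCM frame
        result = avcodec_send_packet(args->codec, avPacket);
        av_packet_unref(avPacket);  // the decoder keeps its own reference
        if (result != 0) {
            continue;
        }
        result = avcodec_receive_frame(args->codec, avFrame);
        if (result != 0) {
            continue;
        }
        // Resample to the player's format; 44100 * 2 is the output capacity in samples per channel
        int outSamples = swr_convert(args->swrContext, &args->out_buffer, 44100 * 2,
                                     (const uint8_t **) avFrame->data, avFrame->nb_samples);
        if (outSamples <= 0) {
            continue;
        }
        // Buffer size based on the number of samples swr_convert actually produced
        size = av_samples_get_buffer_size(NULL, args->out_channer_nb, outSamples,
                                          AV_SAMPLE_FMT_S16, 1);
        break;
    }
    av_packet_free(&avPacket);
    av_frame_free(&avFrame);
    return size;
}
```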
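The byte count handed to `Enqueue` must describe what `swr_convert` actually wrote, and that sample count can differ from `avFrame->nb_samples` whenever the source is not already 44.1 kHz. For example, a 1024-sample AAC frame at 48 kHz becomes about 941 samples at 44.1 kHz. A hedged arithmetic sketch with hypothetical helpers (plain integers, no FFmpeg calls; samples buffered inside the resampler are ignored):

```cpp
#include <cassert>
#include <cstdint>

// Estimate of the output samples (per channel) for inSamples input samples:
// ceil(inSamples * outRate / inRate). Ignores samples still buffered in the resampler.
int64_t expectedOutSamples(int64_t inSamples, int inRate, int outRate) {
    assert(inSamples >= 0 && inRate > 0 && outRate > 0);
    return (inSamples * outRate + inRate - 1) / inRate;
}

// Bytes needed for packed (interleaved) signed 16-bit PCM; this matches
// av_samples_get_buffer_size(NULL, channels, samples, AV_SAMPLE_FMT_S16, 1).
int packedS16Bytes(int channels, int64_t samples) {
    assert(channels > 0 && samples >= 0);
    return static_cast<int>(channels * samples * 2);
}
```

In real code the return value of `swr_convert` gives the exact count; FFmpeg's resampling example sizes the output with `av_rescale_rnd(swr_get_delay(...) + in_samples, out_rate, in_rate, AV_ROUND_UP)`, which also accounts for buffered samples.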

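Video decoding thread

This thread takes packets from the video packet queue and decodes them. For each frame it converts the pts into a display time using `time_base`, compares that time with the audio clock maintained above to decide when the frame should be shown, and then passes the frame to the display callback registered with `setPlayCall` (`call_video_play`), which runs on this same decoding thread.

1. Decoding video packets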

```cpp
extern "C" {
#include <libavutil/imgutils.h>
#include <libavutil/time.h>
#include <libswscale/swscale.h>
}
#include <cmath>

void *videoPlay(void *args) {
    FFmpegVideo *ffmpegVideo = (FFmpegVideo *) args;
    AVCodecContext *codec = ffmpegVideo->codec;
    AVFrame *frame = av_frame_alloc();      // decoded (raw) frame
    AVFrame *rgb_frame = av_frame_alloc();  // frame converted to RGBA
    AVPacket *packet = av_packet_alloc();
    AVPixelFormat dstFormat = AV_PIX_FMT_RGBA;
    uint8_t *out_buffer = (uint8_t *) av_malloc(
            (size_t) av_image_get_buffer_size(dstFormat, codec->width, codec->height, 1));
    // Point rgb_frame's data/linesize at out_buffer
    av_image_fill_arrays(rgb_frame->data, rgb_frame->linesize, out_buffer, dstFormat,
                         codec->width, codec->height, 1);
    // av_image_fill_arrays does not set these; the display callback logs them
    rgb_frame->width = codec->width;
    rgb_frame->height = codec->height;
    LOGE("Converting to RGBA");
    ffmpegVideo->swsContext = sws_getContext(codec->width, codec->height, codec->pix_fmt,
                                             codec->width, codec->height, dstFormat,
                                             SWS_BICUBIC, NULL, NULL, NULL);
    double last_play = 0;   // presentation time of the previous frame
    double last_delay = 0;  // gap between the two previous frames
    double play;            // presentation time of the current frame
    double delay;           // gap between the previous and the current frame
    double audio_clock;     // current audio clock
    double diff;            // video clock minus audio clock
    double sync_threshold;
    double pts;
    double actual_delay;    // how long to actually sleep
    // Absolute wall-clock schedule, starting at the first frame
    double start_time = av_gettime() / 1000000.0;
    int result;
    while (ffmpegVideo->isPlay) {
        ffmpegVideo->get(packet);
        result = avcodec_send_packet(codec, packet);
        av_packet_unref(packet);  // the decoder keeps its own reference
        if (result != 0) {        // decoding failed
            continue;
        }
        result = avcodec_receive_frame(codec, frame);
        if (result != 0) {        // no frame yet (EAGAIN) or decoding failed
            continue;
        }
        // Convert to RGBA
        sws_scale(ffmpegVideo->swsContext, (const uint8_t *const *) frame->data,
                  frame->linesize, 0, frame->height, rgb_frame->data, rgb_frame->linesize);
        // Timestamp in stream time_base units (0 if unknown), then in seconds
        int64_t ts = frame->best_effort_timestamp;
        pts = (ts == AV_NOPTS_VALUE) ? 0 : ts;
        play = pts * av_q2d(ffmpegVideo->time_base);
        // Correct the time (missing pts, repeat_pict)
        play = ffmpegVideo->synchronize(frame, play);
        delay = play - last_play;
        if (delay <= 0 || delay > 1) {
            delay = last_delay;  // implausible gap: reuse the previous one
        }
        audio_clock = ffmpegVideo->ffmpegMusic->clock;
        last_delay = delay;
        last_play = play;
        // Difference between the video and audio clocks
        diff = ffmpegVideo->clock - audio_clock;
        // Only speed up or slow down when the difference is outside the threshold
        sync_threshold = (delay > 0.01 ? 0.01 : delay);
        if (fabs(diff) < 10) {
            if (diff <= -sync_threshold) {
                delay = 0;          // video behind audio: show immediately
            } else if (diff >= sync_threshold) {
                delay = 2 * delay;  // video ahead of audio: wait longer
            }
        }
        start_time += delay;
        actual_delay = start_time - av_gettime() / 1000000.0;
        if (actual_delay < 0.01) {
            actual_delay = 0.01;
        }
        // The extra 6000 us is an empirical padding kept from the original code
        av_usleep((unsigned) (actual_delay * 1000000.0 + 6000));
        // Hand the RGBA frame to the display callback
        video_call(rgb_frame);
    }
    LOGE("Freeing resources");
    av_packet_free(&packet);
    av_frame_free(&frame);
    av_frame_free(&rgb_frame);
    av_free(out_buffer);
    sws_freeContext(ffmpegVideo->swsContext);
    ffmpegVideo->swsContext = NULL;
    // Free packets still waiting in the queue (assumes put() heap-allocates each one)
    size_t size = ffmpegVideo->queue.size();
    for (size_t i = 0; i < size; ++i) {
        AVPacket *pkt = ffmpegVideo->queue.front();
        av_packet_free(&pkt);
        ffmpegVideo->queue.erase(ffmpegVideo->queue.begin());
    }
    LOGE("VIDEO EXIT");
    pthread_exit(0);
}
```
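The synchronization decision in the middle of `videoPlay` can be pulled out into pure functions, which makes it testable without a decoder. This sketch mirrors the thresholds used above (10 s no-sync limit, sync threshold capped at 10 ms, minimum sleep of 10 ms; the extra 6 ms padding is omitted); `adjustDelay` and `sleepSeconds` are hypothetical names:

```cpp
#include <cassert>
#include <cmath>

// delay: nominal gap to the next frame (s); diff: video clock - audio clock (s).
double adjustDelay(double delay, double diff) {
    assert(delay >= 0);
    const double kNoSyncThreshold = 10.0;  // larger gaps are treated as bad timestamps
    const double syncThreshold = delay > 0.01 ? 0.01 : delay;
    if (std::fabs(diff) < kNoSyncThreshold) {
        if (diff <= -syncThreshold) {
            delay = 0;          // video is behind audio: show the frame now
        } else if (diff >= syncThreshold) {
            delay = 2 * delay;  // video is ahead of audio: wait twice as long
        }
    }
    return delay;
}

// Advance the absolute schedule and turn it into a sleep time,
// clamped to at least 10 ms as in the original loop.
double sleepSeconds(double &startTime, double delay, double now) {
    startTime += delay;
    const double actual = startTime - now;
    return actual < 0.01 ? 0.01 : actual;
}
```

Keeping `start_time` as an absolute schedule, rather than sleeping `delay` after every frame, means the time spent decoding and converting a frame is absorbed by a shorter sleep, so timing errors do not accumulate.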

2. Time synchronization

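```cpp
double FFmpegVideo::synchronize(AVFrame *frame, double play) {
    // clock is the predicted presentation time of the next frame
    if (play != 0)
        clock = play;
    else  // pts is 0 (unknown): fall back to the time predicted from the previous frame
        play = clock;
    // Nominal frame duration, taken from the AVCodecContext rather than the stream.
    // Prefer codec->framerate: using time_base for decoding is deprecated in newer
    // FFmpeg versions, and it may be left at its default of 0/1.
    double frame_delay = 0;
    if (codec->framerate.num > 0 && codec->framerate.den > 0) {
        frame_delay = 1.0 / av_q2d(codec->framerate);  // e.g. 25 fps -> 0.04 s
    } else if (codec->time_base.num > 0 && codec->time_base.den > 0) {
        frame_delay = av_q2d(codec->time_base);        // e.g. 1/25 -> 0.04 s
    }
    // frame->repeat_pict: how much this picture must be delayed when decoding;
    // extra_delay = repeat_pict / (2 * fps) = repeat_pict * frame_delay / 2
    double extra_delay = frame->repeat_pict * frame_delay / 2;
    // Advance the clock to the expected time of the next frame
    clock += frame_delay + extra_delay;
    return play;
}
```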
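To make the clock arithmetic concrete: at 25 fps the frame delay is 1/25 = 0.04 s, and a frame with `repeat_pict = 1` is held for an extra 0.04 / 2 = 0.02 s, so the clock advances by 0.06 s. The hypothetical `VideoClock` below reproduces the logic of `synchronize`, with the frame duration passed in instead of read from an `AVCodecContext`:

```cpp
#include <cassert>
#include <cmath>

struct VideoClock {
    double clock = 0;  // predicted presentation time of the next frame (s)

    // play: this frame's pts in seconds (0 when unknown).
    // frameDelay: nominal frame duration, 1 / fps (s).
    // repeatPict: value of AVFrame::repeat_pict for this frame.
    double synchronize(double play, double frameDelay, int repeatPict) {
        assert(frameDelay > 0);
        if (play != 0) {
            clock = play;  // trust a real timestamp
        } else {
            play = clock;  // no timestamp: use the prediction
        }
        // repeat_pict / (2 * fps) == repeat_pict * frameDelay / 2
        const double extraDelay = repeatPict * frameDelay / 2;
        clock += frameDelay + extraDelay;
        return play;
    }
};
```

A frame with pts 1.0 s at 25 fps moves the clock to 1.04 s; a following frame without a pts is then given 1.04 s, and with `repeat_pict = 1` it pushes the clock to 1.10 s.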

3. The display callback

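```cpp
#include <cstring>
#include <android/native_window.h>

void call_video_play(AVFrame *frame) {
    if (!window) {
        return;
    }
    ANativeWindow_Buffer window_buffer;
    // Lock the window's next drawing buffer (a non-zero return means failure)
    if (ANativeWindow_lock(window, &window_buffer, NULL)) {
        return;
    }
    LOGE("Frame size %dx%d", frame->width, frame->height);
    LOGE("Window size %dx%d, frame row bytes %d", window_buffer.width, window_buffer.height,
         frame->linesize[0]);
    uint8_t *dst = (uint8_t *) window_buffer.bits;
    // window_buffer.stride is in pixels; RGBA_8888 uses 4 bytes per pixel
    int dstStride = window_buffer.stride * 4;
    uint8_t *src = frame->data[0];
    int srcStride = frame->linesize[0];
    // Copy at most the shorter row length so neither buffer is overrun
    int rowBytes = srcStride < dstStride ? srcStride : dstStride;
    for (int i = 0; i < window_buffer.height; ++i) {
        memcpy(dst + i * dstStride, src + i * srcStride, rowBytes);
    }
    ANativeWindow_unlockAndPost(window);
}
```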
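The row-by-row copy is needed because the window buffer's stride (`window_buffer.stride`, in pixels) and the frame's `linesize[0]` (in bytes) need not match, so each row starts at a different offset in each buffer. A standalone sketch with a hypothetical `copyRows` helper, exercised on made-up 2×2 RGBA buffers whose destination rows are padded to 4 pixels:

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Copy `rows` rows of `rowBytes` bytes between buffers with different strides
// (bytes per row including padding). Padding bytes in dst are left untouched.
void copyRows(uint8_t *dst, int dstStride, const uint8_t *src, int srcStride,
              int rows, int rowBytes) {
    assert(rowBytes <= dstStride && rowBytes <= srcStride);
    for (int y = 0; y < rows; ++y) {
        std::memcpy(dst + y * dstStride, src + y * srcStride, rowBytes);
    }
}
```

With a source stride of 8 bytes (2 RGBA pixels) and a destination stride of 16 bytes (4 pixels), the second source row lands at byte 16 of the destination, and bytes 8 to 15 keep whatever the window buffer already held.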

That completes the audio/video synchronization.

Source code: https://github.com/qq245425070/ffmpeg_player