Real-time analysis of nginx request logs with Spark for automatic IP blocking, part 2: real-time log analysis

The previous part covered the web feature design; this part covers the design of the real-time log analysis.

"Real-time analysis of nginx request logs with Spark for automatic IP blocking, part 1: web feature design"

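Overall approach: read the request log stream from the Kafka cluster, analyze it in real time with Spark Structured Streaming, and write the records that trigger a threshold to MySQL.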

1. Data source

nginx sends its logs to Logstash over the syslog protocol, and Logstash writes them to both Elasticsearch and Kafka:

nginx -> logstash -> elasticsearch and kafka

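Logstash configuration:

```
output {
  elasticsearch {
    ……
  }

  ## Drop useless logs as close to the source as possible to lighten Spark's load
  if [log_source] in ["ip1","ip2"] and                  ## only analyze logs from the internet-facing nginx servers
     [real_ip] !~ /^10\.\d{1,3}\.\d{1,3}\.\d{1,3}$/ and  ## exclude internal IPs
     [domain] != "" and                                  ## exclude entries with no domain (not analyzed)
     [status] != "403" {                                 ## blocked IPs still produce request logs, only with status 403, so exclude 403s
    kafka {
      bootstrap_servers => 'ip1:9092,ip2:9092,ip3:9092'  ## Kafka cluster
      topic_id => 'topic_nginxlog'
      codec => plain {
        format => "%{time_local},%{real_ip},%{domain},%{url_short}"
      }
    }
  }
}
```

The fields needed are: request time, client IP, domain, and URL (without the query string after "?").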
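The internal-IP condition only does what its comment says if the regex is anchored and its dots are escaped. As a quick illustration (a sketch using Python's `re`, which handles this simple pattern the same way as Logstash's regex for single-line values), an unanchored `10.\d{1,3}.\d{1,3}.\d{1,3}` also matches inside public addresses such as `110.1.2.3`, while the anchored form does not:

```python
import re

# Unanchored, unescaped: "." matches any character and the match may start mid-string
LOOSE = re.compile(r"10.\d{1,3}.\d{1,3}.\d{1,3}")
# Anchored with escaped dots: the whole value must look like 10.x.x.x
STRICT = re.compile(r"^10\.\d{1,3}\.\d{1,3}\.\d{1,3}$")


def looks_internal(ip, pattern):
    """Mimic a regex-match test: does the pattern match anywhere in the value?"""
    return pattern.search(ip) is not None


samples = ["10.0.0.5", "110.1.2.3", "210.10.1.2", "117.157.183.228"]
loose_hits = [ip for ip in samples if looks_internal(ip, LOOSE)]
strict_hits = [ip for ip in samples if looks_internal(ip, STRICT)]
print(loose_hits)   # public 110.x and 210.x addresses are wrongly treated as internal
print(strict_hits)
```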

2. Spark Structured Streaming and sliding windows

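Structured Streaming is a scalable, fault-tolerant stream processing engine built on Spark SQL; it lets you process streaming data the same way you would process static data.

Internally, Structured Streaming uses a micro-batch processing engine that splits the data stream into a series of small batch jobs, achieving latencies as low as 100 milliseconds with exactly-once fault-tolerance guarantees.

The whole pipeline revolves around one key feature: the sliding event-time window (a sliding window based on the timestamp inside the log, not the arrival time).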

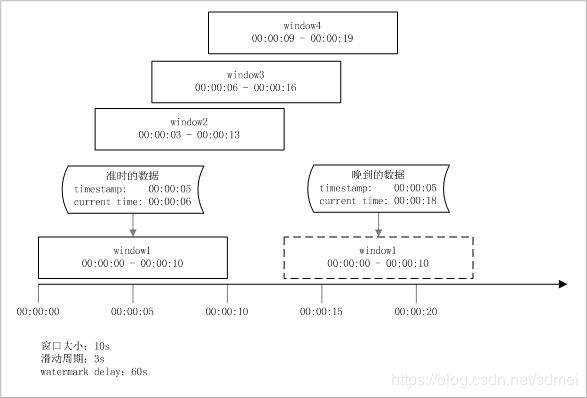

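The figure above illustrates the sliding-window concept.

The "window size" corresponds to win_duration (time range) in the rule table, and the "slide interval" corresponds to slide_duration (monitoring frequency).

These two parameters determine how much Spark compute a rule consumes: the larger the window, the more data each batch has to process; the smaller the slide interval, the more batches have to be processed.

In this case the window size is configured by the user, and the slide interval is derived from it to save resources: slide_duration = math.ceil(win_duration/5).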
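To see why the slide interval drives the cost, here is a minimal pure-Python sketch (not Spark code) of how one event time is assigned to sliding windows. It assumes times in whole seconds and windows aligned to multiples of the slide, as Spark's `window()` does with its default start offset of 0. With slide_duration = ceil(win_duration/5), each record falls into at most 5 overlapping windows:

```python
import math


def slide_for(win_duration):
    """Slide interval derived from the window size, as described in the text."""
    return math.ceil(win_duration / 5)


def windows_for(ts, win, slide):
    """Return every [start, end) window, aligned to multiples of `slide`, that contains ts."""
    start = ts - ts % slide          # latest window start at or before ts
    result = []
    while start > ts - win:          # this window still covers ts
        result.append((start, start + win))
        start -= slide
    return sorted(result)


win = 30
slide = slide_for(win)
wins = windows_for(100, win, slide)
print(slide, wins)
```

Halving the slide would double the number of windows (and state entries) each record contributes to, which is why the slide is tied to the window size here.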

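The watermark delay allows data to arrive late by up to a given amount of time. For example, with the delay set to 1 minute, the record with timestamp 00:00:05 that arrives at 00:00:18 in the figure above is still included, and the windows it belongs to are recomputed.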

3. Data processing logic

3.1. Read data from Kafka and convert the binary value to a string

```python
selectExpr("CAST(value AS STRING)")
```

3.2. Split the data

Split on "," into 4 fields: req_time, real_ip, domain and url. req_time is converted to the timestamp type and serves as the event time.

```python
# requires: from pyspark.sql.functions import regexp_replace, split
.select(regexp_replace(regexp_replace(split("value", ",")[0], r"\+08:00", ""), "T", " ").alias("req_time"),
        split("value", ",")[1].alias("real_ip"),
        split("value", ",")[2].alias("domain"),
        split("value", ",")[3].alias("url")) \
.selectExpr("CAST(req_time AS timestamp)", "real_ip", "domain", "url")
```
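To test the field layout outside Spark, the same split-and-normalize steps can be written in plain Python. The sample line format (an ISO 8601 time with a +08:00 offset) is an assumption inferred from the replacements in the code above, and the hostname is a placeholder:

```python
from datetime import datetime


def parse_line(line):
    """Split 'time,real_ip,domain,url' like step 3.2 and turn the time into a naive datetime."""
    parts = line.split(",")
    req_time = parts[0].replace("+08:00", "").replace("T", " ")
    return {
        "req_time": datetime.strptime(req_time, "%Y-%m-%d %H:%M:%S"),
        "real_ip": parts[1],
        "domain": parts[2],
        "url": parts[3],
    }


rec = parse_line("2020-05-14T13:44:27+08:00,117.157.183.228,www.example.com,/login")
print(rec)
```

Like Spark's `split`, this keeps only the text before the next comma, so a URL that itself contains a comma would be truncated.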

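3.3. Allow data to be up to 60 seconds late; data arriving later than that is dropped

Strictly, Spark only guarantees that data within the 60-second threshold is aggregated; data beyond it is not guaranteed to be dropped and may still be counted.

```python
withWatermark("req_time", "60 seconds")
```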
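The effect of the 60-second watermark can be approximated in plain Python. This is a simplified sketch: Spark advances the watermark (maximum event time seen minus the delay) once per micro-batch rather than after every record, so the exact cut-off here is illustrative only:

```python
from datetime import datetime, timedelta


def apply_watermark(event_times, delay):
    """Simplified model: split event times (in arrival order) into kept and dropped."""
    max_seen = None
    kept, dropped = [], []
    for ts in event_times:
        if max_seen is not None and ts < max_seen - delay:
            dropped.append(ts)       # older than the current watermark
        else:
            kept.append(ts)
        if max_seen is None or ts > max_seen:
            max_seen = ts
    return kept, dropped


t0 = datetime(2020, 5, 14, 13, 44, 0)
arrivals = [t0, t0 + timedelta(seconds=120), t0 + timedelta(seconds=90), t0 + timedelta(seconds=30)]
kept, dropped = apply_watermark(arrivals, timedelta(seconds=60))
print(len(kept), dropped)
```

The record at +90 s is 30 s behind the newest event and is kept; the one at +30 s is 90 s behind and falls outside the 60-second delay.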

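3.4. Aggregation

Logically: group by (real_ip, window) having count(*) >= request threshold

```python
# requires: from pyspark.sql import functions as F; from pyspark.sql.functions import window
.groupBy(
    "real_ip",
    window("req_time", str(i_rule.win_duration) + " seconds", str(i_rule.slide_duration) + " seconds")
).agg(F.count("*").alias("cnt")).filter("cnt >= " + str(i_rule.req_threshold))
```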
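As a plain-Python model of the groupBy above (a sketch for reasoning about rule parameters, not how Spark executes it; times are whole seconds and windows are aligned to multiples of the slide), each request increments the count of every window it falls into, and only (IP, window) pairs at or above the threshold are kept:

```python
from collections import Counter


def over_threshold(events, win, slide, threshold):
    """events: (ip, ts_seconds) pairs. Returns {(ip, start, end): count} for windows with count >= threshold."""
    counts = Counter()
    for ip, ts in events:
        start = ts - ts % slide              # latest aligned window containing ts
        while start > ts - win:              # walk back through every overlapping window
            counts[(ip, start, start + win)] += 1
            start -= slide
    return {key: c for key, c in counts.items() if c >= threshold}


# 10 requests from one IP within 10 seconds, plus a single request from another IP
events = [("1.2.3.4", t) for t in range(10)] + [("5.6.7.8", 1)]
hits = over_threshold(events, win=10, slide=5, threshold=10)
print(hits)
```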

3.5. Add the rule_id column required for inserting into the MySQL table

```python
windows.withColumn("id", F.lit(i_rule.id))
```

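3.6. Set the outputMode

There are three output modes:

- append mode: only rows appended to the result table since the last trigger are output; with aggregations it requires a watermark and emits a window only once it is finalized, i.e. after the watermark passes the window's end
- complete mode: the entire result table since the job started is output on every trigger
- update mode: only rows that were updated since the last trigger are output

Update mode is used here, so an IP is reported as soon as one of its windows crosses the threshold instead of after the watermark delay:

```python
outputMode("update")
```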
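The difference between update and complete mode can be modeled with a dictionary standing in for the aggregation state. This is a simplified sketch; append mode is left out because its output also depends on the watermark:

```python
def run_trigger(state, updates, mode):
    """Apply one trigger's count increments to `state` and return the rows output in `mode`."""
    changed = {}
    for key, inc in updates:
        state[key] = state.get(key, 0) + inc
        changed[key] = state[key]
    if mode == "complete":
        return dict(state)      # the whole result table
    if mode == "update":
        return changed          # only rows touched by this trigger
    raise ValueError("unsupported mode: " + mode)


batches = [[("a", 1), ("b", 2)], [("a", 3)]]
upd_state, cmp_state = {}, {}
update_out = [run_trigger(upd_state, b, "update") for b in batches]
complete_out = [run_trigger(cmp_state, b, "complete") for b in batches]
print(update_out)
print(complete_out)
```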

3.7. Remove duplicates within a batch

Within a batch, the same IP often appears in several windows that all exceed the threshold, so one IP can show up multiple times in a single batch, for example:

```
Batch: 21
+---------------+------------------------------------------+---+---+
|real_ip        |window                                    |cnt|id |
+---------------+------------------------------------------+---+---+
|117.157.183.228|[2020-05-14 13:44:27, 2020-05-14 13:44:57]|11 |3  |
|117.157.183.228|[2020-05-14 13:44:28, 2020-05-14 13:44:58]|11 |3  |
|117.157.183.228|[2020-05-14 13:44:30, 2020-05-14 13:45:00]|10 |3  |
+---------------+------------------------------------------+---+---+
```

Each IP only needs to be stored once, so the batch must be deduplicated.
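The dedup rule (keep each IP's earliest window, together with its id and cnt) can be checked on the sample batch above with a small pure-Python sketch. Window bounds are kept as "YYYY-MM-DD HH:MM:SS" strings, which sort in time order:

```python
def keep_earliest_window(rows):
    """Keep one row per real_ip: the one with the smallest (start, end) window."""
    best = {}
    for row in rows:
        ip = row["real_ip"]
        if ip not in best or row["window"] < best[ip]["window"]:
            best[ip] = row
    return list(best.values())


batch = [
    {"real_ip": "117.157.183.228", "window": ("2020-05-14 13:44:27", "2020-05-14 13:44:57"), "cnt": 11, "id": 3},
    {"real_ip": "117.157.183.228", "window": ("2020-05-14 13:44:28", "2020-05-14 13:44:58"), "cnt": 11, "id": 3},
    {"real_ip": "117.157.183.228", "window": ("2020-05-14 13:44:30", "2020-05-14 13:45:00"), "cnt": 10, "id": 3},
]
deduped = keep_earliest_window(batch)
print(deduped)
```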

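To keep id (and cnt), Spark does not allow selecting id without grouping by it the way MySQL can, so the dedup is done with a join: take each IP's earliest window, then join it back to the batch.

The join reuses the batch that the groupBy has already consumed, so the batch is cached with persist to avoid recomputing it.

Code:

```python
from pyspark.sql import functions as F

# process_batch is assumed to be registered via writeStream.foreachBatch(process_batch)
def process_batch(batchDf, epoch_id):
    batchDf.persist()
    # earliest window per IP
    batchDfGrp = batchDf.groupBy("real_ip").agg(F.min("window").alias("window"))
    pdf = batchDfGrp.join(
        batchDf,
        ["real_ip", "window"],
        'inner'
    ).select("id", batchDfGrp.real_ip, batchDfGrp.window, "cnt").toPandas()
    insert_match_record(pdf)
    batchDf.unpersist()
```

3.8. Set the job trigger interval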

The slide interval is used as the job's trigger interval:

```python
trigger(processingTime=str(i_rule.slide_duration) + ' seconds')
```

3.9. Write to the database

The previous step has already converted the result into a pandas DataFrame; its records are inserted into the MySQL table in a loop (remember to use a connection pool):

```python
import pymysql
from DBUtils.PooledDB import PooledDB  # DBUtils 1.x; with DBUtils >= 2.0: from dbutils.pooled_db import PooledDB

pool = PooledDB(pymysql,
                maxconnections=10,
                mincached=5,
                maxcached=5,
                host='myip',
                port=3306,
                db='waf',
                user='user',
                passwd='password',
                setsession=['set autocommit = 1'])

def insert_match_record(record_pandas):
    sql = "insert into match_record(rule_id,ip_addr,win_begin,win_end,request_cnt) values(%s,%s,%s,%s,%s)"
    connect = pool.connection()
    cursor = connect.cursor()
    try:
        for row in record_pandas.itertuples():
            win = row.window  # window struct: (start, end)
            cursor.execute(sql, (row.id, row.real_ip,
                                 win[0].strftime('%Y-%m-%d %H:%M:%S'),
                                 win[1].strftime('%Y-%m-%d %H:%M:%S'),
                                 row.cnt))
        connect.commit()
    finally:
        cursor.close()
        connect.close()  # returns the connection to the pool
```

4. Scheduling

4.1. Adding rules

Each rule job is started in its own thread, named after the rule_id.

Every 3 seconds, check whether any rule has no thread in the current thread list (i.e. it is a new rule); if so, create the job thread for that rule.

```python
import threading
import time

if __name__ == '__main__':
    while True:
        time.sleep(3)
        curThreadName = [t.name for t in threading.enumerate()]
        curRuleList = get_rules()
        for r in curRuleList:
            if "rule" + str(r.id) not in curThreadName:
                t = threading.Thread(target=sub_job, args=(r,), name="rule" + str(r.id))
                t.start()
```
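The thread-per-rule pattern can be tried out without Spark. In this sketch `Rule` and `demo_job` are hypothetical stand-ins for a rule-table row and `sub_job`; the job waits on a `threading.Event` only so the demo can end cleanly:

```python
import threading
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical stand-in for a rule-table row."""
    id: int


def start_missing_jobs(rules, job, stop_event):
    """Start a thread named 'rule<id>' for every rule that has no such thread yet."""
    running = {t.name for t in threading.enumerate()}
    started = []
    for r in rules:
        name = "rule" + str(r.id)
        if name not in running:
            threading.Thread(target=job, args=(r, stop_event), name=name, daemon=True).start()
            started.append(name)
    return started


def demo_job(rule, stop_event):
    stop_event.wait()   # stands in for a long-running streaming query


stop = threading.Event()
rules = [Rule(1), Rule(2)]
first = start_missing_jobs(rules, demo_job, stop)    # starts rule1 and rule2
second = start_missing_jobs(rules, demo_job, stop)   # both already running: nothing new
stop.set()                                           # let the demo threads finish
print(first, second)
```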

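4.2. Modifying rules

While each rule job runs, it checks every 3 seconds whether the rule parameters it is using still match those in the database. If they do not, the job stops its query and its thread exits; the new-rule check in 4.1 then no longer finds the thread and recreates the job from the rule currently stored in the database.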
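The comparison amounts to checking whether any database rule has the same parameter tuple as the running job. A minimal sketch, with a hypothetical `Rule` dataclass standing in for a rule-table row (field types are assumptions):

```python
from dataclasses import dataclass

# Fields compared when deciding whether a running rule is still valid (id is not compared)
COMPARED_FIELDS = ("domain", "url", "match_type", "win_duration", "slide_duration", "req_threshold")


@dataclass
class Rule:
    """Hypothetical stand-in for one row of the rule table."""
    id: int
    domain: str
    url: str
    match_type: int
    win_duration: int
    slide_duration: int
    req_threshold: int


def rule_params(rule):
    return tuple(getattr(rule, name) for name in COMPARED_FIELDS)


def rule_still_valid(running_rule, db_rules):
    """True if some rule in the database has exactly the parameters the running job uses."""
    target = rule_params(running_rule)
    return any(rule_params(r) == target for r in db_rules)


running = Rule(3, "www.example.com", "/login", 1, 30, 6, 10)
edited = Rule(3, "www.example.com", "/login", 1, 30, 6, 20)   # threshold changed in the web UI
print(rule_still_valid(running, [running]), rule_still_valid(running, [edited]))
```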

```python
# runs inside the rule job (sub_job); query is assumed to be the StreamingQuery returned by start()
while True:
    time.sleep(3)
    ruleValidFlag = 1  # assume this rule is no longer in the rule list
    curRuleList = get_rules()
    for r in curRuleList:
        if i_rule.domain == r.domain \
                and i_rule.url == r.url \
                and i_rule.match_type == r.match_type \
                and i_rule.win_duration == r.win_duration \
                and i_rule.slide_duration == r.slide_duration \
                and i_rule.req_threshold == r.req_threshold:
            ruleValidFlag = 0  # this rule is still in the rule list, unchanged
    if ruleValidFlag == 1:
        query.stop()
        break
```

5. Submitting the Spark job on YARN

Versions I used: Python 3.6, hadoop-2.6.5, spark_2.11-2.4.4

Installing and configuring Hadoop and YARN is omitted here; a few points worth noting:

5.1. Log level

The default level is INFO, which uses a lot of disk space, so change it to WARN.

Modify the following two files:

```
# vi etc/hadoop/log4j.properties
hadoop.root.logger=WARN,console

# vi hadoop-daemon.sh
export HADOOP_ROOT_LOGGER=${HADOOP_ROOT_LOGGER:-"WARN,RFA"}
export HADOOP_SECURITY_LOGGER=${HADOOP_SECURITY_LOGGER:-"WARN,RFAS"}
export HDFS_AUDIT_LOGGER=${HDFS_AUDIT_LOGGER:-"WARN,NullAppender"}
```

5.2. YARN configuration

Use the Capacity Scheduler and create a dedicated queue so this job's resources are isolated from other jobs:

```
yarn.resourcemanager.scheduler.class: org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler
yarn.scheduler.capacity.root.queues: default,waf
yarn.scheduler.capacity.root.waf.capacity: 90
yarn.scheduler.capacity.root.default.capacity: 10
yarn.scheduler.capacity.root.waf.maximum-capacity: 100
yarn.scheduler.capacity.root.default.maximum-capacity: 100
```

Minimum and maximum memory allocated to each container:

```
yarn.scheduler.minimum-allocation-mb: 100
yarn.scheduler.maximum-allocation-mb: 5120
```

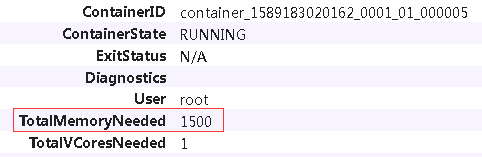

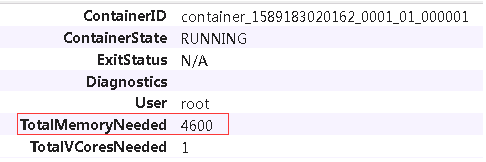

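With driver-memory=4G, the driver (which in cluster mode runs inside the ApplicationMaster container) actually uses 4G + max(4G*0.1, 384M) = 4096M + 409M = 4505M, rounded up to a multiple of minimum-allocation-mb, i.e. 4600M. Therefore maximum-allocation-mb is set to 5120M.

With spark.executor.memory=1G, each executor container actually uses 1G + max(1G*0.1, 384M) = 1024M + 384M = 1408M, rounded up to a multiple of minimum-allocation-mb, i.e. 1500M.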
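The container sizes above can be reproduced with a small helper. It assumes Spark 2.4's default memory overhead (10% of the heap, at least 384 MB, truncated to whole MB) and that YARN rounds each request up to a multiple of yarn.scheduler.minimum-allocation-mb:

```python
import math


def yarn_container_mb(heap_mb, min_alloc_mb=100, overhead_factor=0.10, overhead_min_mb=384):
    """Memory YARN actually allocates for a Spark driver/executor with `heap_mb` of heap."""
    overhead = max(int(heap_mb * overhead_factor), overhead_min_mb)
    requested = heap_mb + overhead
    # YARN normalizes the request up to the next multiple of minimum-allocation-mb
    return math.ceil(requested / min_alloc_mb) * min_alloc_mb


print(yarn_container_mb(4096))  # driver with driver-memory=4G
print(yarn_container_mb(1024))  # executor with spark.executor.memory=1G
```

Any driver or executor whose result exceeds yarn.scheduler.maximum-allocation-mb would be rejected, which is why that limit is set just above the driver's 4600M.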

5.3. Environment setup: Spark jars and configuration

Deploy a spark-2.4.4 environment on the client (the server that submits the jobs; I used the master), in /root/spark244/.

Upload the jars to HDFS:

```
# hadoop fs -mkdir -p hdfs://hdfs_master_ip:9000/system/spark/jars/
# hadoop fs -put $SPARK_HOME/jars/* hdfs://hdfs_master_ip:9000/system/spark/jars/

# cat /root/spark244/conf/spark-defaults.conf
spark.yarn.jars hdfs://hdfs_master_ip:9000/system/spark/jars/*.jar

# cat /root/spark244/spark-env.sh
export SPARK_DIST_CLASSPATH=$(/root/hadoop/hadoop-2.6.5/bin/hadoop classpath)
export HADOOP_CONF_DIR=/root/hadoop/hadoop-2.6.5/etc/hadoop
export YARN_CONF_DIR=/root/hadoop/hadoop-2.6.5/etc/hadoop
export SPARK_CONF_DIR=/root/spark244/conf
```

5.4. Environment setup: Python libraries

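To avoid installing Python libraries on every node, the Python virtual environment is packaged and uploaded to HDFS.

First create the virtual environment and install the required libraries in it: pip install DBUtils PyMySQL backports.lzma pandas

Work around the lzma compatibility problem (otherwise the application fails after the job is submitted):

Add a try/except block:

```python
# vi lib/python3.6/site-packages/backports/lzma/__init__.py
try:
    from ._lzma import *
    from ._lzma import _encode_filter_properties, _decode_filter_properties
except ImportError:
    from backports.lzma import *
    from backports.lzma import _encode_filter_properties, _decode_filter_properties
```

Add the backports prefix (only the modified lines, which sit inside a function, are shown):

```python
# vi lib/python3.6/site-packages/pandas/compat/__init__.py
try:
    import backports.lzma
    return backports.lzma
```

Compress and upload: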

```
# zip -r pyspark-env.zip pyspark-env/
# hadoop fs -mkdir -p /env/pyspark
# hadoop fs -put pyspark-env.zip /env/pyspark
```

5.5. Runtime parameters

```python
# cat waf.py
from pyspark import SparkConf
from pyspark.sql import SparkSession

ssConf = SparkConf()
ssConf.setMaster("yarn")
ssConf.setAppName("nginx-cc-waf")
ssConf.set("spark.executor.cores", "1")
ssConf.set("spark.executor.memory", "1024M")
ssConf.set("spark.dynamicAllocation.enabled", "false")
## With moderate data volumes, keep the number of executors low; too many executors noticeably hurts performance
ssConf.set("spark.executor.instances", "4")
ssConf.set("spark.scheduler.mode", "FIFO")
ssConf.set("spark.default.parallelism", "4")
ssConf.set("spark.sql.shuffle.partitions", "4")
ssConf.set("spark.debug.maxToStringFields", "1000")
ssConf.set("spark.sql.codegen.wholeStage", "false")
ssConf.set("spark.jars.packages", "org.apache.spark:spark-sql-kafka-0-10_2.11:2.4.4")
spark = SparkSession.builder.config(conf=ssConf).getOrCreate()
```

Submit:

```bash
# cat job_waf_start.sh
#!/bin/bash
## In cluster mode, setting the queue in code has no effect; it must be passed to spark-submit with --queue
spark-submit \
  --name waf \
  --master yarn \
  --deploy-mode cluster \
  --queue waf \
  --driver-memory 4G \
  --py-files job_waf/db_mysql.py \
  --conf spark.yarn.dist.archives=hdfs://hdfs_master_ip:9000/env/pyspark/pyspark-env.zip#pyenv \
  --conf spark.yarn.appMasterEnv.PYSPARK_PYTHON=pyenv/pyspark-env/bin/python \
  job_waf/waf.py
```