Hyperledger Fabric, Part 5: Consensus, Ordering, and Reading the Source Code

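I. Consensus mechanism

Reaching consensus takes three phases: transaction endorsement, transaction ordering, and transaction validation.

- Transaction endorsement: the transaction is only simulated (executed without being committed)

- Transaction ordering: fixes the order of transactions, then packs the ordered transactions into blocks and distributes them

- Transaction validation: the transactions in a block are validated before the block is stored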
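The three phases can be illustrated with a toy model. The sketch below is not Fabric code: the `Tx` type, the helper names, and the single-key version check are assumptions chosen only to show why a transaction that was endorsed successfully can still be marked invalid after ordering.

```go
package main

import "fmt"

// Tx is a hypothetical, simplified transaction that reads one key at a
// given version and then writes to it.
type Tx struct {
	ID      string
	Key     string
	ReadVer int // version of Key observed while simulating the transaction
}

// endorse models phase 1: the transaction is simulated against the current
// state and nothing is committed.
func endorse(id, key string, state map[string]int) Tx {
	return Tx{ID: id, Key: key, ReadVer: state[key]}
}

// order models phase 2: the transactions get a fixed order and are packed
// into a block. Here the order is simply the arrival order.
func order(txs ...Tx) []Tx {
	return txs
}

// validate models phase 3: before the block is stored, each transaction is
// checked in block order. A transaction whose read version is stale is
// marked invalid; a valid one bumps the key's version.
func validate(block []Tx, state map[string]int) []bool {
	valid := make([]bool, len(block))
	for i, tx := range block {
		if state[tx.Key] == tx.ReadVer {
			valid[i] = true
			state[tx.Key]++
		}
	}
	return valid
}

func main() {
	state := map[string]int{"a": 0}
	t1 := endorse("tx1", "a", state)
	t2 := endorse("tx2", "a", state) // simulated against the same state as tx1
	fmt.Println(validate(order(t1, t2), state)) // [true false]
}
```

Both transactions are simulated against version 0 of the key, so once `tx1` is committed, `tx2` no longer matches the state and is flagged as invalid.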

二.orderer节点的作用

- 交易排序

- 目的:保证系统的最终一致性(有限状态机)

- solo:单节点排序

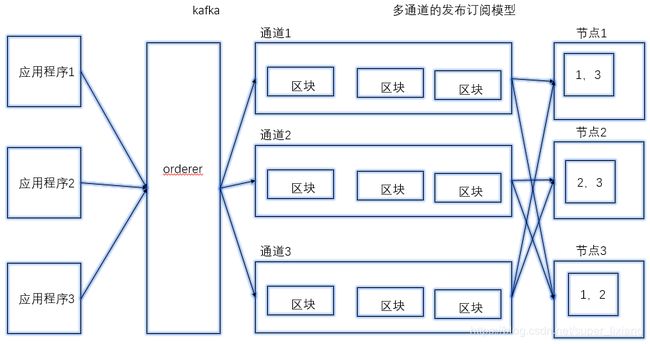

- kafka:外置的分布式消息队列

- 区块分发

- orderer中的区块并不是最终持久化的区块

- 是一个中间状态的区块

- 包含了所有交易,不管是有效还是无效,都会打包传给组织的锚节点

- 多通道的数据隔离

- 客户端可以使用某个通道,发送交易
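As a rough illustration of the last two points (blocks that carry every transaction, and per-channel isolation), here is a minimal sketch. The `orderer` type and its methods are hypothetical and far simpler than the real ordering service.

```go
package main

import "fmt"

// orderer is a hypothetical, much-simplified ordering service that keeps
// one pending queue per channel.
type orderer struct {
	pending map[string][]string // channel ID -> transaction IDs in arrival order
}

func newOrderer() *orderer {
	return &orderer{pending: make(map[string][]string)}
}

// submit appends a transaction to its channel's queue. Nothing is validated
// here: valid and invalid transactions are ordered alike.
func (o *orderer) submit(channel, txID string) {
	o.pending[channel] = append(o.pending[channel], txID)
}

// cutBlock returns the channel's pending transactions as one block and
// clears that channel's queue. Other channels are untouched.
func (o *orderer) cutBlock(channel string) []string {
	block := o.pending[channel]
	delete(o.pending, channel)
	return block
}

func main() {
	o := newOrderer()
	o.submit("channel-a", "tx1")
	o.submit("channel-b", "tx2")
	o.submit("channel-a", "tx3-invalid")
	fmt.Println(o.cutBlock("channel-a")) // [tx1 tx3-invalid]
	fmt.Println(o.cutBlock("channel-b")) // [tx2]
}
```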

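III. Source tree

- Read the code in GoLand

- Source directories

  - bccsp: cryptography (encryption, digital signatures, certificates); the cryptographic functions are abstracted into interfaces so they are easy to call and extend

  - bddtests: behavior-driven development tests, which go straight from requirements to development

  - common: shared libraries, error handling, log handling, ledger storage, and related tools

  - core: Fabric's core library; each subdirectory is one module, for example comm, which handles network communication

  - devenv: the official development environment, based on Vagrant

  - docs: documentation

  - events: the event listening mechanism

  - examples: sample programs

  - gossip: the gossip protocol, used for communication inside an organization and for block synchronization

  - gotools: tools used for building

  - images: Docker image related files

  - msp: membership service management (Membership Service Provider); reads certificates and produces signatures

  - orderer: the ordering node

  - peer: the peer node

  - proposals: proposals for extensions and new features

  - protos: data structure definitions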

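IV. Consensus source code

Source code of the orderer node

- Start with main.go in the orderer directory; it calls NewServer, which leads into server.go

In main.go, func main() mainly dispatches on the command it receives: for the start command it loads the configuration and initializes each component, and for the version command it prints the version number. The helper functions it calls are defined further down in the same file.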

func main() {
	kingpin.Version("0.0.1")
	// Dispatch on the parsed command-line arguments
	switch kingpin.MustParse(app.Parse(os.Args[1:])) {
	// "start" command
	case start.FullCommand():
		logger.Infof("Starting %s", metadata.GetVersionInfo())
		// Load the configuration
		conf := config.Load()
		// Initialize the logging level
		// (raise the level in production)
		initializeLoggingLevel(conf)
		// Initialize profiling, Go's built-in tool for observing a running program;
		// it can be reached over HTTP
		initializeProfilingService(conf)
		// Initialize the gRPC server
		grpcServer := initializeGrpcServer(conf)
		// Load the local MSP signing certificates
		initializeLocalMsp(conf)
		// Create a signer backed by the local MSP certificates
		signer := localmsp.NewSigner()
		// Initialize the manager of all chains (in effect the master node)
		manager := initializeMultiChainManager(conf, signer)
		// Instantiate the service
		server := NewServer(manager, signer)
		// Register the service with the gRPC server
		ab.RegisterAtomicBroadcastServer(grpcServer.Server(), server)
		logger.Info("Beginning to serve requests")
		// Start serving
		grpcServer.Start()
	// "version" command
	case version.FullCommand():
		// Print the version
		fmt.Println(metadata.GetVersionInfo())
	}
}

Next, the code that initializes the manager:

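func initializeMultiChainManager(conf *config.TopLevel, signer crypto.LocalSigner) multichain.Manager {
	// Create the ledger factory, which produces the orderer's interim blocks
	lf, _ := createLedgerFactory(conf)
	// Check whether any chain already exists
	if len(lf.ChainIDs()) == 0 {
		// No chain exists yet: bootstrap the initial chain
		initializeBootstrapChannel(conf, lf)
	} else {
		logger.Info("Not bootstrapping because of existing chains")
	}

	// Instantiate the consensus mechanisms;
	// two modes are available, solo and kafka
	consenters := make(map[string]multichain.Consenter)
	consenters["solo"] = solo.New()
	consenters["kafka"] = kafka.New(conf.Kafka.TLS, conf.Kafka.Retry, conf.Kafka.Version)

	return multichain.NewManagerImpl(lf, consenters, signer)
}

- The orderer is configured in orderer.yaml: the listen address is 127.0.0.1 and the listen port is 7050; BCCSP is the cryptography section; the ledger is ultimately stored on disk.

- Next, look at server.go, where the service is instantiated. It defines transaction collection and block broadcasting.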

type server struct {
	// Transaction collection
	bh broadcast.Handler
	// Block broadcasting
	dh deliver.Handler
}

Transaction collection in detail, in broadcast.go:

func (bh *handlerImpl) Handle(srv ab.AtomicBroadcast_BroadcastServer) error {
	logger.Debugf("Starting new broadcast loop")
	for {
		// Receive a transaction
		msg, err := srv.Recv()
		if err == io.EOF {
			logger.Debugf("Received EOF, hangup")
			return nil
		}
		if err != nil {
			logger.Warningf("Error reading from stream: %s", err)
			return err
		}

		payload, err := utils.UnmarshalPayload(msg.Payload)
		if err != nil {
			logger.Warningf("Received malformed message, dropping connection: %s", err)
			return srv.Send(&ab.BroadcastResponse{Status: cb.Status_BAD_REQUEST})
		}

		// Validate the message contents; on error reply with Status_BAD_REQUEST
		if payload.Header == nil {
			logger.Warningf("Received malformed message, with missing header, dropping connection")
			return srv.Send(&ab.BroadcastResponse{Status: cb.Status_BAD_REQUEST})
		}

		chdr, err := utils.UnmarshalChannelHeader(payload.Header.ChannelHeader)
		if err != nil {
			logger.Warningf("Received malformed message (bad channel header), dropping connection: %s", err)
			return srv.Send(&ab.BroadcastResponse{Status: cb.Status_BAD_REQUEST})
		}

		if chdr.Type == int32(cb.HeaderType_CONFIG_UPDATE) {
			logger.Debugf("Preprocessing CONFIG_UPDATE")
			msg, err = bh.sm.Process(msg)
			if err != nil {
				logger.Warningf("Rejecting CONFIG_UPDATE because: %s", err)
				return srv.Send(&ab.BroadcastResponse{Status: cb.Status_BAD_REQUEST})
			}

			err = proto.Unmarshal(msg.Payload, payload)
			if err != nil || payload.Header == nil {
				logger.Criticalf("Generated bad transaction after CONFIG_UPDATE processing")
				return srv.Send(&ab.BroadcastResponse{Status: cb.Status_INTERNAL_SERVER_ERROR})
			}

			chdr, err = utils.UnmarshalChannelHeader(payload.Header.ChannelHeader)
			if err != nil {
				logger.Criticalf("Generated bad transaction after CONFIG_UPDATE processing (bad channel header): %s", err)
				return srv.Send(&ab.BroadcastResponse{Status: cb.Status_INTERNAL_SERVER_ERROR})
			}

			if chdr.ChannelId == "" {
				logger.Criticalf("Generated bad transaction after CONFIG_UPDATE processing (empty channel ID)")
				return srv.Send(&ab.BroadcastResponse{Status: cb.Status_INTERNAL_SERVER_ERROR})
			}
		}

		// Look up the support object for the channel
		support, ok := bh.sm.GetChain(chdr.ChannelId)
		if !ok {
			logger.Warningf("Rejecting broadcast because channel %s was not found", chdr.ChannelId)
			return srv.Send(&ab.BroadcastResponse{Status: cb.Status_NOT_FOUND})
		}

		logger.Debugf("[channel: %s] Broadcast is filtering message of type %s", chdr.ChannelId, cb.HeaderType_name[chdr.Type])

		// Pass the message through the support's filters.
		// This is the first filtering pass; the second happens when blocks are cut
		_, filterErr := support.Filters().Apply(msg)
		if filterErr != nil {
			logger.Warningf("[channel: %s] Rejecting broadcast message because of filter error: %s", chdr.ChannelId, filterErr)
			return srv.Send(&ab.BroadcastResponse{Status: cb.Status_BAD_REQUEST})
		}

		// Enqueue the message; it is then processed by solo or kafka
		if !support.Enqueue(msg) {
			return srv.Send(&ab.BroadcastResponse{Status: cb.Status_SERVICE_UNAVAILABLE})
		}

		if logger.IsEnabledFor(logging.DEBUG) {
			logger.Debugf("[channel: %s] Broadcast has successfully enqueued message of type %s", chdr.ChannelId, cb.HeaderType_name[chdr.Type])
		}

		// Reply with the success status (200)
		err = srv.Send(&ab.BroadcastResponse{Status: cb.Status_SUCCESS})
		if err != nil {
			logger.Warningf("[channel: %s] Error sending to stream: %s", chdr.ChannelId, err)
			return err
		}
	}
}

Block broadcasting in detail, in deliver.go:

func (ds *deliverServer) Handle(srv ab.AtomicBroadcast_DeliverServer) error {

logger.Debugf("Starting new deliver loop")

for {

logger.Debugf("Attempting to read seek info message")

//接收请求

envelope, err := srv.Recv()

if err == io.EOF {

logger.Debugf("Received EOF, hangup")

return nil

}

if err != nil {

logger.Warningf("Error reading from stream: %s", err)

return err

}

//做校验

payload, err := utils.UnmarshalPayload(envelope.Payload)

if err != nil {

logger.Warningf("Received an envelope with no payload: %s", err)

return sendStatusReply(srv, cb.Status_BAD_REQUEST)

}

if payload.Header == nil {

logger.Warningf("Malformed envelope received with bad header")

return sendStatusReply(srv, cb.Status_BAD_REQUEST)

}

chdr, err := utils.UnmarshalChannelHeader(payload.Header.ChannelHeader)

if err != nil {

logger.Warningf("Failed to unmarshal channel header: %s", err)

return sendStatusReply(srv, cb.Status_BAD_REQUEST)

}

//获取chain对象

chain, ok := ds.sm.GetChain(chdr.ChannelId)

if !ok {

// Note, we log this at DEBUG because SDKs will poll waiting for channels to be created

// So we would expect our log to be somewhat flooded with these

logger.Debugf("Rejecting deliver because channel %s not found", chdr.ChannelId)

return sendStatusReply(srv, cb.Status_NOT_FOUND)

}

//监听是否有错误发生

//有错误,返回503

erroredChan := chain.Errored()

select {

case <-erroredChan:

logger.Warningf("[channel: %s] Rejecting deliver request because of consenter error", chdr.ChannelId)

return sendStatusReply(srv, cb.Status_SERVICE_UNAVAILABLE)

default:

}

lastConfigSequence := chain.Sequence()

//对链配置信息校验

sf := sigfilter.New(policies.ChannelReaders, chain.PolicyManager())

result, _ := sf.Apply(envelope)

if result != filter.Forward {

logger.Warningf("[channel: %s] Received unauthorized deliver request", chdr.ChannelId)

return sendStatusReply(srv, cb.Status_FORBIDDEN)

}

//解析请求消息内容

seekInfo := &ab.SeekInfo{}

if err = proto.Unmarshal(payload.Data, seekInfo); err != nil {

logger.Warningf("[channel: %s] Received a signed deliver request with malformed seekInfo payload: %s", chdr.ChannelId, err)

return sendStatusReply(srv, cb.Status_BAD_REQUEST)

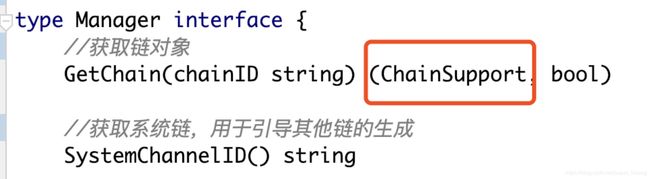

}- 在server.go中会遇到chain调用Manager Manage.go

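type Manager interface {
	// Get the chain object for a chain ID
	GetChain(chainID string) (ChainSupport, bool)

	// Get the ID of the system chain, which is used to bootstrap the creation of other chains
	SystemChannelID() string

	// Create or update the configuration of a chain
	NewChannelConfig(envConfigUpdate *cb.Envelope) (configtxapi.Manager, error)
}

// Configuration resources
type configResources struct {
	configtxapi.Manager
}

// Get the orderer-related configuration;
// jump into Orderer to see the individual settings
func (cr *configResources) SharedConfig() config.Orderer {
	oc, ok := cr.OrdererConfig()
	if !ok {
		logger.Panicf("[channel %s] has no orderer configuration", cr.ChainID())
	}
	return oc
}

// Ledger resources
type ledgerResources struct {
	// Configuration resources
	*configResources
	// Read/write object for the ledger,
	// the entry point for all ledger operations
	ledger ledger.ReadWriter
}

// Implementation of Manager
type multiLedger struct {
	// Chains, keyed by chain ID
	chains map[string]*chainSupport
	// Consensus mechanisms, keyed by type
	consenters map[string]Consenter
	// Factory for ledger read/write objects
	ledgerFactory ledger.Factory
	// Signer
	signer crypto.LocalSigner
	// ID of the system chain
	systemChannelID string
	// The system chain itself
	systemChannel *chainSupport
}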

// Get the latest configuration transaction of a chain
func getConfigTx(reader ledger.Reader) *cb.Envelope {
	// Get the newest block on the chain
	lastBlock := ledger.GetBlock(reader, reader.Height()-1)
	// The newest block records which block holds the latest configuration transaction
	index, err := utils.GetLastConfigIndexFromBlock(lastBlock)
	if err != nil {
		logger.Panicf("Chain did not have appropriately encoded last config in its latest block: %s", err)
	}
	// Read that configuration block
	configBlock := ledger.GetBlock(reader, index)
	if configBlock == nil {
		logger.Panicf("Config block does not exist")
	}

	// Extract the latest configuration transaction
	return utils.ExtractEnvelopeOrPanic(configBlock, 0)
}
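The lookup in getConfigTx relies on every block recording where the most recent configuration block is. A minimal sketch of that idea follows; the `block` type and `latestConfig` helper are hypothetical and stand in for the real ledger and metadata encoding.

```go
package main

import (
	"errors"
	"fmt"
)

// block is a hypothetical, simplified block. lastConfig is the number of
// the most recent config block at or before this block.
type block struct {
	number     uint64
	lastConfig uint64
	data       string
}

// latestConfig follows the pointer stored in the newest block to find the
// current configuration, without scanning the whole chain.
func latestConfig(chain []block) (string, error) {
	if len(chain) == 0 {
		return "", errors.New("empty chain")
	}
	tip := chain[len(chain)-1]
	if tip.lastConfig >= uint64(len(chain)) {
		return "", errors.New("last-config index out of range")
	}
	return chain[tip.lastConfig].data, nil
}

func main() {
	chain := []block{
		{number: 0, lastConfig: 0, data: "config v1"},
		{number: 1, lastConfig: 0, data: "txs"},
		{number: 2, lastConfig: 2, data: "config v2"},
		{number: 3, lastConfig: 2, data: "txs"},
	}
	cfg, err := latestConfig(chain)
	fmt.Println(cfg, err) // config v2 <nil>
}
```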

// Instantiate the manager
func NewManagerImpl(ledgerFactory ledger.Factory, consenters map[string]Consenter, signer crypto.LocalSigner) Manager {
	// Store the arguments in the multiLedger struct defined above
	ml := &multiLedger{
		chains:        make(map[string]*chainSupport),
		ledgerFactory: ledgerFactory,
		consenters:    consenters,
		signer:        signer,
	}

	// Read the IDs of the chains stored locally
	existingChains := ledgerFactory.ChainIDs()
	// Loop over them
	for _, chainID := range existingChains {
		// Get this chain's ledger from the ledger factory
		// (rl: read ledger)
		rl, err := ledgerFactory.GetOrCreate(chainID)
		if err != nil {
			logger.Panicf("Ledger factory reported chainID %s but could not retrieve it: %s", chainID, err)
		}
		// Get the latest configuration transaction
		configTx := getConfigTx(rl)
		if configTx == nil {
			logger.Panic("Programming error, configTx should never be nil here")
		}
		// Bind the configuration transaction to a ledger object
		ledgerResources := ml.newLedgerResources(configTx)
		chainID := ledgerResources.ChainID()

		// Check whether the chain has a consortiums configuration.
		// A consortiums configuration grants the right to create other chains;
		// normally only the system chain has one
		if _, ok := ledgerResources.ConsortiumsConfig(); ok {
			// The chain has a consortiums configuration
			if ml.systemChannelID != "" {
				// A system chain already exists: panic
				logger.Panicf("There appear to be two system chains %s and %s", ml.systemChannelID, chainID)
			}
			// Instantiate the ChainSupport, passing each field in turn
			chain := newChainSupport(createSystemChainFilters(ml, ledgerResources),
				ledgerResources,
				consenters,
				signer)
			logger.Infof("Starting with system channel %s and orderer type %s", chainID, chain.SharedConfig().ConsensusType())
			ml.chains[chainID] = chain
			ml.systemChannelID = chainID
			ml.systemChannel = chain
			// We delay starting this chain, as it might try to copy and replace the chains map via newChain before the map is fully built
			// Deferred start: the system chain starts after the other chains are set up
			defer chain.start()
		} else {
			logger.Debugf("Starting chain: %s", chainID)
			chain := newChainSupport(createStandardFilters(ledgerResources),
				ledgerResources,
				consenters,
				signer)
			// Register the standard chain
			ml.chains[chainID] = chain
			// Start it
			chain.start()
		}
	}

	// If no system chain exists, panic
	if ml.systemChannelID == "" {
		logger.Panicf("No system chain found. If bootstrapping, does your system channel contain a consortiums group definition?")
	}

	// Return ml
	return ml
}

// Return the ID of the system chain
func (ml *multiLedger) SystemChannelID() string {
	return ml.systemChannelID
}

// GetChain retrieves the chain support for a chain (and whether it exists)
func (ml *multiLedger) GetChain(chainID string) (ChainSupport, bool) {
	cs, ok := ml.chains[chainID]
	return cs, ok
}

// Instantiate a ledger resources object
func (ml *multiLedger) newLedgerResources(configTx *cb.Envelope) *ledgerResources {
	// Create the initializer for the configuration transaction
	initializer := configtx.NewInitializer()
	// Build the configuration manager
	configManager, err := configtx.NewManagerImpl(configTx, initializer, nil)
	if err != nil {
		logger.Panicf("Error creating configtx manager and handlers: %s", err)
	}

	// Get the chain ID
	chainID := configManager.ChainID()

	// Get or create the ledger object for that chain ID
	ledger, err := ml.ledgerFactory.GetOrCreate(chainID)
	if err != nil {
		logger.Panicf("Error getting ledger for %s", chainID)
	}

	// Return the populated ledger resources object
	return &ledgerResources{
		configResources: &configResources{Manager: configManager},
		ledger:          ledger,
	}
}

// Create a new chain
func (ml *multiLedger) newChain(configtx *cb.Envelope) {
	// Create the ledger resources object
	ledgerResources := ml.newLedgerResources(configtx)
	// Assemble a block and add it to the chain with Append
	ledgerResources.ledger.Append(ledger.CreateNextBlock(ledgerResources.ledger, []*cb.Envelope{configtx}))

	// Copy the map to allow concurrent reads from broadcast/deliver while the new chainSupport is
	// Build a new map of chains (taking a lock would be an alternative)
	newChains := make(map[string]*chainSupport)
	for key, value := range ml.chains {
		newChains[key] = value
	}

	cs := newChainSupport(createStandardFilters(ledgerResources), ledgerResources, ml.consenters, ml.signer)
	chainID := ledgerResources.ChainID()

	logger.Infof("Created and starting new chain %s", chainID)

	newChains[string(chainID)] = cs
	cs.start()

	ml.chains = newChains
}
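newChain never mutates the existing chains map; it copies it, adds the new entry, and then replaces the field. A minimal sketch of that copy-then-swap pattern is shown below. The `registry` type is hypothetical, and the sketch leaves out synchronization of the field swap itself, which a concurrent program would still need to consider.

```go
package main

import "fmt"

// registry is a hypothetical stand-in for multiLedger: readers take the
// current map and are never affected by later additions.
type registry struct {
	chains map[string]string
}

// add builds a new map containing the old entries plus the new one, then
// replaces the field. The old map is never modified.
func (r *registry) add(id, kind string) {
	next := make(map[string]string, len(r.chains)+1)
	for k, v := range r.chains {
		next[k] = v
	}
	next[id] = kind
	r.chains = next
}

func main() {
	r := &registry{chains: map[string]string{"sys": "system"}}
	snapshot := r.chains // what a reader obtained before the update
	r.add("ch1", "standard")
	fmt.Println(len(snapshot), len(r.chains)) // 1 2
}
```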

func (ml *multiLedger) channelsCount() int {
	return len(ml.chains)
}

// Create the configuration for a new chain
func (ml *multiLedger) NewChannelConfig(envConfigUpdate *cb.Envelope) (configtxapi.Manager, error) {
	// Before the new chain is created, a series of checks is performed
	configUpdatePayload, err := utils.UnmarshalPayload(envConfigUpdate.Payload)
	if err != nil {
		return nil, fmt.Errorf("Failing initial channel config creation because of payload unmarshaling error: %s", err)
	}
	// ...
}

- Next, look at chainsupport.go

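// Interface offered to the consensus mechanism
type ConsenterSupport interface {
	// Local signer
	crypto.LocalSigner
	// Block cutter
	BlockCutter() blockcutter.Receiver
	// Orderer configuration
	SharedConfig() config.Orderer
	// Pack a batch of cut transactions into a block
	CreateNextBlock(messages []*cb.Envelope) *cb.Block
	// Write the block to the ledger
	WriteBlock(block *cb.Block, committers []filter.Committer, encodedMetadataValue []byte) *cb.Block
	// Get the ID of the chain
	ChainID() string
	// Get the current block height of the chain
	Height() uint64 // Returns the number of blocks on the chain this specific consenter instance is associated with
}

type ChainSupport interface {
	// Manager for the channel's policies (for example ChannelReaders and ChannelWriters)
	PolicyManager() policies.Manager

	// Interface for reading the ledger
	Reader() ledger.Reader

	// Channel that signals an error in the consenter backing this chain
	Errored() <-chan struct{}

	// Interface for handling incoming transactions
	broadcast.Support

	// Interface offered to the consensus mechanism
	ConsenterSupport

	// Configuration sequence number;
	// it is incremented by 1 every time the chain's configuration is modified
	Sequence() uint64

	// Turn a config update transaction into a configuration transaction
	// (an Envelope can be thought of as a transaction)
	ProposeConfigUpdate(env *cb.Envelope) (*cb.ConfigEnvelope, error)
}

type chainSupport struct {
	// Resources of the chain: its configuration and its ledger read/write object
	*ledgerResources
	// The chain
	chain Chain
	// Block cutter
	cutter blockcutter.Receiver
	// Filters;
	// the orderer uses them to reject, for example, empty transactions
	filters *filter.RuleSet
	// Signer
	signer crypto.LocalSigner
	// Height of the block that holds the latest configuration
	lastConfig uint64
	// Sequence number of the latest configuration
	lastConfigSeq uint64
}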

func newChainSupport(
	filters *filter.RuleSet,
	ledgerResources *ledgerResources,
	consenters map[string]Consenter,
	signer crypto.LocalSigner,
) *chainSupport {
	// Create the block cutter
	cutter := blockcutter.NewReceiverImpl(ledgerResources.SharedConfig(), filters)
	// Look up in the configuration which consensus mechanism the orderer uses
	consenterType := ledgerResources.SharedConfig().ConsensusType()
	// Get the matching consenter
	consenter, ok := consenters[consenterType]
	if !ok {
		logger.Fatalf("Error retrieving consenter of type: %s", consenterType)
	}

	// Populate the struct
	cs := &chainSupport{
		ledgerResources: ledgerResources,
		cutter:          cutter,
		filters:         filters,
		signer:          signer,
	}

	// Configuration sequence number
	cs.lastConfigSeq = cs.Sequence()

	var err error

	// Newest block
	lastBlock := ledger.GetBlock(cs.Reader(), cs.Reader().Height()-1)
	if lastBlock.Header.Number != 0 {
		// Get the height of the block that holds the latest configuration
		cs.lastConfig, err = utils.GetLastConfigIndexFromBlock(lastBlock)
		if err != nil {
			logger.Fatalf("[channel: %s] Error extracting last config block from block metadata: %s", cs.ChainID(), err)
		}
	}

	// Get the orderer metadata of the block
	metadata, err := utils.GetMetadataFromBlock(lastBlock, cb.BlockMetadataIndex_ORDERER)
	if err != nil {
		logger.Fatalf("[channel: %s] Error extracting orderer metadata: %s", cs.ChainID(), err)
	}
	logger.Debugf("[channel: %s] Retrieved metadata for tip of chain (blockNumber=%d, lastConfig=%d, lastConfigSeq=%d): %+v", cs.ChainID(), lastBlock.Header.Number, cs.lastConfig, cs.lastConfigSeq, metadata)

	// Hand the chain to the consenter, which returns the Chain that will order it
	cs.chain, err = consenter.HandleChain(cs, metadata)
	if err != nil {
		logger.Fatalf("[channel: %s] Error creating consenter: %s", cs.ChainID(), err)
	}

	return cs
}
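The consenter lookup in newChainSupport is a plain registry pattern: implementations are registered under a name at startup, and each chain picks one by the type named in its configuration. A minimal sketch, with a hypothetical `consenter` interface that is much smaller than the real one:

```go
package main

import (
	"errors"
	"fmt"
)

// consenter is a hypothetical stand-in for the Consenter interface.
type consenter interface {
	name() string
}

type soloConsenter struct{}

func (soloConsenter) name() string { return "solo" }

type kafkaConsenter struct{}

func (kafkaConsenter) name() string { return "kafka" }

// pickConsenter returns the consenter registered for the configured type,
// or an error when the type is not registered.
func pickConsenter(registry map[string]consenter, configured string) (consenter, error) {
	c, ok := registry[configured]
	if !ok {
		return nil, errors.New("no consenter of type: " + configured)
	}
	return c, nil
}

func main() {
	registry := map[string]consenter{
		"solo":  soloConsenter{},
		"kafka": kafkaConsenter{},
	}
	c, err := pickConsenter(registry, "solo")
	fmt.Println(c.name(), err) // solo <nil>
	_, err = pickConsenter(registry, "unknown")
	fmt.Println(err) // no consenter of type: unknown
}
```

Unlike this sketch, the original code treats a missing consenter as fatal rather than returning an error.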

// Build the standard filter rule set
func createStandardFilters(ledgerResources *ledgerResources) *filter.RuleSet {
	return filter.NewRuleSet([]filter.Rule{
		filter.EmptyRejectRule,
		sizefilter.MaxBytesRule(ledgerResources.SharedConfig()),
		sigfilter.New(policies.ChannelWriters, ledgerResources.PolicyManager()),
		configtxfilter.NewFilter(ledgerResources),
		filter.AcceptRule,
	})
}

func (cs *chainSupport) WriteBlock(block *cb.Block, committers []filter.Committer, encodedMetadataValue []byte) *cb.Block {
	// Run the commit action of every committer
	for _, committer := range committers {
		committer.Commit()
	}
	// Set the orderer-related metadata field
	if encodedMetadataValue != nil {
		block.Metadata.Metadata[cb.BlockMetadataIndex_ORDERER] = utils.MarshalOrPanic(&cb.Metadata{Value: encodedMetadataValue})
	}
	// Sign the block
	cs.addBlockSignature(block)
	// Sign the last-config information
	cs.addLastConfigSignature(block)

	// Write the block to the ledger
	err := cs.ledger.Append(block)
	if err != nil {
		logger.Panicf("[channel: %s] Could not append block: %s", cs.ChainID(), err)
	}
	logger.Debugf("[channel: %s] Wrote block %d", cs.ChainID(), block.GetHeader().Number)

	return block
}

- Jump into Receiver to see how blocks are cut, in blockcutter.go:

func (r *receiver) Ordered(msg *cb.Envelope) (messageBatches [][]*cb.Envelope, committerBatches [][]filter.Committer, validTx bool, pending bool) {
	// Filter the transaction a second time;
	// the first pass happened when the orderer received the transaction
	committer, err := r.filters.Apply(msg)
	if err != nil {
		logger.Debugf("Rejecting message: %s", err)
		return // We don't bother to determine `pending` here as it's not processed in error case
	}

	// message is valid
	validTx = true

	// Compute the size of the transaction
	messageSizeBytes := messageSizeBytes(msg)

	// Check whether the transaction must be isolated; configuration transactions are isolated.
	// A transaction larger than the preferred maximum batch size is also cut into a block of its own
	if committer.Isolated() || messageSizeBytes > r.sharedConfigManager.BatchSize().PreferredMaxBytes {

		if committer.Isolated() {
			logger.Debugf("Found message which requested to be isolated, cutting into its own batch")
		} else {
			logger.Debugf("The current message, with %v bytes, is larger than the preferred batch size of %v bytes and will be isolated.", messageSizeBytes, r.sharedConfigManager.BatchSize().PreferredMaxBytes)
		}

		// If there are pending transactions that have not been cut yet,
		// cut them into a batch first
		if len(r.pendingBatch) > 0 {
			messageBatch, committerBatch := r.Cut()
			messageBatches = append(messageBatches, messageBatch)
			committerBatches = append(committerBatches, committerBatch)
		}

		// Cut the current transaction into its own batch
		messageBatches = append(messageBatches, []*cb.Envelope{msg})
		committerBatches = append(committerBatches, []filter.Committer{committer})

		return
	}

	// Transactions that are not isolated are handled here.
	// Check whether adding the current transaction would exceed the preferred batch size
	messageWillOverflowBatchSizeBytes := r.pendingBatchSizeBytes+messageSizeBytes > r.sharedConfigManager.BatchSize().PreferredMaxBytes

	// If it would, cut the pending batch first
	if messageWillOverflowBatchSizeBytes {
		logger.Debugf("The current message, with %v bytes, will overflow the pending batch of %v bytes.", messageSizeBytes, r.pendingBatchSizeBytes)
		logger.Debugf("Pending batch would overflow if current message is added, cutting batch now.")
		// Cut
		messageBatch, committerBatch := r.Cut()
		messageBatches = append(messageBatches, messageBatch)
		committerBatches = append(committerBatches, committerBatch)
	}

	logger.Debugf("Enqueuing message into batch")
	r.pendingBatch = append(r.pendingBatch, msg)
	r.pendingBatchSizeBytes += messageSizeBytes
	r.pendingCommitters = append(r.pendingCommitters, committer)
	pending = true

	// If the number of pending messages reaches the maximum message count, cut
	if uint32(len(r.pendingBatch)) >= r.sharedConfigManager.BatchSize().MaxMessageCount {
		logger.Debugf("Batch size met, cutting batch")
		messageBatch, committerBatch := r.Cut()
		messageBatches = append(messageBatches, messageBatch)
		committerBatches = append(committerBatches, committerBatch)
		pending = false
	}

	return
}

// Perform the cut itself
func (r *receiver) Cut() ([]*cb.Envelope, []filter.Committer) {
	batch := r.pendingBatch
	r.pendingBatch = nil
	committers := r.pendingCommitters
	r.pendingCommitters = nil
	r.pendingBatchSizeBytes = 0
	return batch, committers
}

func messageSizeBytes(message *cb.Envelope) uint32 {
	// The size is the length of the payload plus the length of the signature
	return uint32(len(message.Payload) + len(message.Signature))
}
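The cutting rules in Ordered are easier to follow when reduced to sizes and counts. The sketch below is a simplified model, not the Fabric implementation: messages are represented only by their size in bytes, filtering and the isolated flag for configuration transactions are left out, and the `cutter` type with its field names is hypothetical.

```go
package main

import "fmt"

// cutter is a hypothetical, simplified block cutter. Each message is
// represented by its size in bytes.
type cutter struct {
	maxCount     int // cut when this many messages are pending
	preferredMax int // preferred maximum size of a batch in bytes
	pending      []int
	pendingBytes int
}

// cut returns the pending batch and resets the cutter.
func (c *cutter) cut() []int {
	batch := c.pending
	c.pending, c.pendingBytes = nil, 0
	return batch
}

// ordered adds one message and returns any batches that are now complete,
// plus whether messages remain pending.
func (c *cutter) ordered(size int) (batches [][]int, pending bool) {
	// An oversized message goes into a batch of its own, after flushing
	// whatever was already pending.
	if size > c.preferredMax {
		if len(c.pending) > 0 {
			batches = append(batches, c.cut())
		}
		return append(batches, []int{size}), false
	}
	// If the message would overflow the pending batch, cut first.
	if c.pendingBytes+size > c.preferredMax {
		batches = append(batches, c.cut())
	}
	c.pending = append(c.pending, size)
	c.pendingBytes += size
	pending = true
	// Cut when the message count limit is reached.
	if len(c.pending) >= c.maxCount {
		batches = append(batches, c.cut())
		pending = false
	}
	return batches, pending
}

func main() {
	c := &cutter{maxCount: 3, preferredMax: 10}
	for _, size := range []int{4, 4, 4, 20} {
		batches, pending := c.ordered(size)
		fmt.Println(batches, pending)
	}
}
```

With these settings the third message overflows the 10-byte preference, so the first two are cut together, and the 20-byte message flushes the remaining one before being cut on its own.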

- The consensus loop (the solo implementation) is in consensus.go:

func (ch *chain) main() {
	// Batch timer; nil while no batch is waiting
	var timer <-chan time.Time

	for {
		select {
		// Keep receiving transactions from the send channel and pass each one
		// to the block cutter, which returns the batches that are ready to be cut
		case msg := <-ch.sendChan:
			// Block cutting
			batches, committers, ok, _ := ch.support.BlockCutter().Ordered(msg)
			// If the transaction is valid, nothing was cut, and no timer is running,
			// start the batch timer
			if ok && len(batches) == 0 && timer == nil {
				timer = time.After(ch.support.SharedConfig().BatchTimeout())
				continue
			}
			// Create a block for each batch;
			// it ends up in the orderer node's interim ledger
			for i, batch := range batches {
				block := ch.support.CreateNextBlock(batch)
				ch.support.WriteBlock(block, committers[i], nil)
			}
			// If any batch was cut, reset the timer
			if len(batches) > 0 {
				timer = nil
			}
		// The timer fired: cut a block immediately
		case <-timer:
			//clear the timer
			timer = nil

			// Block cutting
			batch, committers := ch.support.BlockCutter().Cut()
			if len(batch) == 0 {
				logger.Warningf("Batch timer expired with no pending requests, this might indicate a bug")
				continue
			}
			logger.Debugf("Batch timer expired, creating block")
			// Create the block
			block := ch.support.CreateNextBlock(batch)
			// Write the block
			ch.support.WriteBlock(block, committers, nil)
		case <-ch.exitChan:
			logger.Debugf("Exiting")
			// Exit immediately
			return
		}
	}
}
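The loop above combines two triggers: a batch is cut either when enough messages are pending or when the batch timeout fires. The following self-contained sketch reproduces just that interplay. It is an illustration under assumptions: `runSolo` and `demo` are hypothetical names, messages are plain strings, the only cutting rule is a message count, and closing the input channel stands in for the exit channel.

```go
package main

import (
	"fmt"
	"time"
)

// runSolo batches messages from in and sends a batch on out when either
// maxCount messages are pending or timeout has elapsed since the first
// pending message arrived. It returns when in is closed.
func runSolo(in <-chan string, out chan<- []string, maxCount int, timeout time.Duration) {
	defer close(out)
	var pending []string
	var timer <-chan time.Time // a nil channel never fires in select
	for {
		select {
		case msg, ok := <-in:
			if !ok {
				return // exit immediately; pending messages are dropped
			}
			pending = append(pending, msg)
			if len(pending) >= maxCount {
				out <- pending
				pending, timer = nil, nil // batch cut: reset the timer
			} else if timer == nil {
				timer = time.After(timeout) // first pending message: start the timer
			}
		case <-timer:
			timer = nil
			if len(pending) == 0 {
				continue
			}
			out <- pending
			pending = nil
		}
	}
}

// demo sends four messages with a count limit of three and returns the two
// resulting batches.
func demo() [][]string {
	in := make(chan string)
	out := make(chan []string, 4)
	go runSolo(in, out, 3, 50*time.Millisecond)
	for _, m := range []string{"a", "b", "c", "d"} {
		in <- m
	}
	first := <-out  // cut because three messages were pending
	second := <-out // cut because the timeout fired
	close(in)
	return [][]string{first, second}
}

func main() {
	for _, batch := range demo() {
		fmt.Println(batch)
	}
}
```

Keeping the timer as a nil channel while nothing is pending means the timeout case simply cannot fire, which is the same trick the original loop uses.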