手写数字识别问题

数据概要

数据来源于kaggle

其中,训练集是 785 × 42000 785\times 42000 785×42000 ,预测集是 784 × 28000 784\times 28000 784×28000

实际每个数字是 28 × 28 28\times 28 28×28 ,由0和1组成的图形所表示

导入必要的包

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

from sklearn.neighbors import KNeighborsClassifier

from sklearn.decomposition import PCA

from sklearn.pipeline import Pipeline

读取数据

train=pd.read_csv(r'C:\Users\yep\data\digit-recognizer\train.csv')

test=pd.read_csv(r'C:\Users\yep\data\digit-recognizer\test.csv')

观察样本点

train.head()

| label | pixel0 | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | ... | pixel774 | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 3 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 785 columns

test.head()

| pixel0 | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | ... | pixel774 | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

5 rows × 784 columns

train.shape,test.shape

((42000, 785), (28000, 784))

发现数据中只包含(0,1)这组元素,且为稀疏矩阵

target=train['label']

train=train.drop('label',1)

train.head(3)

| pixel0 | pixel1 | pixel2 | pixel3 | pixel4 | pixel5 | pixel6 | pixel7 | pixel8 | pixel9 | ... | pixel774 | pixel775 | pixel776 | pixel777 | pixel778 | pixel779 | pixel780 | pixel781 | pixel782 | pixel783 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ... | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

3 rows × 784 columns

t1_x,t2_x,t1_y,t2_y=train_test_split(train,target,test_size=0.2,random_state=1)

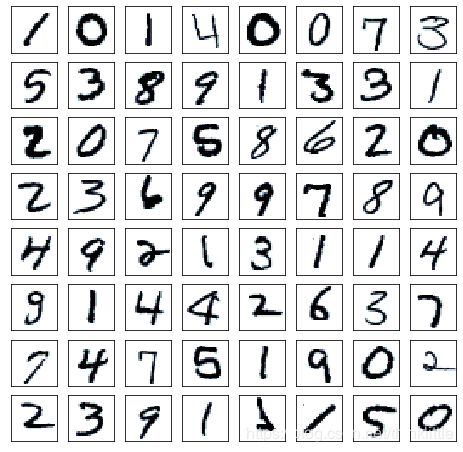

plt.figure(figsize=(8,8))

for digit_num in range(0,64):

plt.subplot(8,8,digit_num+1)

grid_data = train.iloc[digit_num].as_matrix().reshape(28,28) # reshape from 1d to 2d pixel array

plt.imshow(grid_data, interpolation = "none", cmap = "bone_r")

plt.xticks([])

plt.yticks([])

plt.show()

C:\Users\yep\Anaconda3\lib\site-packages\ipykernel_launcher.py:4: FutureWarning: Method .as_matrix will be removed in a future version. Use .values instead.

after removing the cwd from sys.path.

建立模型

PCA+KNN

pcn=Pipeline([('pca',PCA(n_components=0.95)),

('knn',KNeighborsClassifier())])

pcn.fit(t1_x,t1_y)

pcn.score(t2_x,t2_y)

0.9701190476190477

随机森林

利用交叉验证寻找最优参数

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

parameters={'n_estimators':[10,50,100,200,300,400,500,600],'min_samples_split':[2,3,4,5,6,7,8]}

random=RandomForestClassifier(n_jobs=-1)

Gridcv=GridSearchCV(random,parameters,cv=5,n_jobs=-1)

Gridcv.fit(train,target)

Gridcv.best_estimator_

C:\Users\yep\Anaconda3\lib\site-packages\joblib\externals\loky\process_executor.py:706: UserWarning: A worker stopped while some jobs were given to the executor. This can be caused by a too short worker timeout or by a memory leak.

"timeout or by a memory leak.", UserWarning

RandomForestClassifier(bootstrap=True, class_weight=None, criterion='gini',

max_depth=None, max_features='auto', max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, n_estimators=600,

n_jobs=-1, oob_score=False, random_state=None, verbose=0,

warm_start=False)

from sklearn.ensemble import RandomForestClassifier

random=RandomForestClassifier(n_estimators=600,n_jobs=-1,min_samples_split=2,oob_score=True)

random.fit(train,target)

random.oob_score_

0.9676428571428571

梯度提升树

from xgboost import XGBClassifier

xgb=XGBClassifier(n_estimators=500,n_jobs=-1)

xgb.fit(t1_x,t1_y)

xgb.score(t2_x,t2_y)

0.9669047619047619

from lightgbm import LGBMClassifier

lgb1=LGBMClassifier(n_estimators=300)

lgb1.fit(t1_x,t1_y)

lgb1.score(t2_x,t2_y)

0.9777380952380952

LR

from sklearn.linear_model import LogisticRegression

lr=LogisticRegression(C=0.4)

lr.fit(t1_x,t1_y)

lr.score(t2_x,t2_y)

c:\users\yep\miniconda3\lib\site-packages\sklearn\linear_model\logistic.py:432: FutureWarning: Default solver will be changed to 'lbfgs' in 0.22. Specify a solver to silence this warning.

FutureWarning)

c:\users\yep\miniconda3\lib\site-packages\sklearn\linear_model\logistic.py:469: FutureWarning: Default multi_class will be changed to 'auto' in 0.22. Specify the multi_class option to silence this warning.

"this warning.", FutureWarning)

c:\users\yep\miniconda3\lib\site-packages\sklearn\svm\base.py:929: ConvergenceWarning: Liblinear failed to converge, increase the number of iterations.

"the number of iterations.", ConvergenceWarning)

0.9096428571428572

模型融合

from sklearn.ensemble import VotingClassifier

eclf=VotingClassifier([('RF',random),('lgb',lgb1),('knn',pcn),('xgb',xgb),('lr',lr)],n_jobs=-1)

eclf.fit(t1_x,t1_y)

eclf.score(t2_x,t2_y)

0.9736904761904762

result=eclf.predict(test)

np.savetxt('result1_1.csv',

np.c_[range(1,len(test)+1),result],

delimiter=',',

header = 'ImageId,Label',

comments = '',

fmt='%d')

stacking

from mlxtend.classifier import StackingClassifier

clf=StackingClassifier([random,pcn,xgb,lr],lgb1)

clf.fit(train,target)

c:\users\yep\miniconda3\lib\site-packages\sklearn\linear_model\logistic.py:432: FutureWarning: Default solver will be changed to 'lbfgs' in 0.22. Specify a solver to silence this warning.

FutureWarning)

c:\users\yep\miniconda3\lib\site-packages\sklearn\linear_model\logistic.py:469: FutureWarning: Default multi_class will be changed to 'auto' in 0.22. Specify the multi_class option to silence this warning.

"this warning.", FutureWarning)

c:\users\yep\miniconda3\lib\site-packages\sklearn\svm\base.py:929: ConvergenceWarning: Liblinear failed to converge, increase the number of iterations.

"the number of iterations.", ConvergenceWarning)

StackingClassifier(average_probas=False,

classifiers=[RandomForestClassifier(bootstrap=True,

class_weight=None,

criterion='gini',

max_depth=None,

max_features='auto',

max_leaf_nodes=None,

min_impurity_decrease=0.0,

min_impurity_split=None,

min_samples_leaf=1,

min_samples_split=2,

min_weight_fraction_leaf=0.0,

n_estimators=400,

n_jobs=-1,

oob_score=True,

random_st...

max_depth=-1,

min_child_samples=20,

min_child_weight=0.001,

min_split_gain=0.0,

n_estimators=300, n_jobs=-1,

num_leaves=31, objective=None,

random_state=None,

reg_alpha=0.0, reg_lambda=0.0,

silent=True, subsample=1.0,

subsample_for_bin=200000,

subsample_freq=0),

store_train_meta_features=False, use_clones=True,

use_features_in_secondary=False, use_probas=False,

verbose=0)

result=eclf.predict(test)

np.savetxt('result1.csv',

np.c_[range(1,len(test)+1),result],

delimiter=',',

header = 'ImageId,Label',

comments = '',

fmt='%d')

stacking的最终结果为97.3%,voting的最终结果为97%