Netty Case Study: Send-Queue Backlog Causing a Memory Leak (Part 1)

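Contents

- Netty send-queue backlog case

- High-concurrency failure scenario simulation

- Analyzing the memory-leak dump file with MAT

- How to prevent send-queue backlog

- Summary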

Netty send-queue backlog case

Environment configuration

// JVM options

-Xmx1000m -XX:+PrintGC -XX:+PrintGCDetails

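During a performance stress test of the service, N clients accessed the server concurrently, with each client using Netty for network communication. After the test had run for a while, response times kept growing and the failure rate rose. Monitoring the client's memory showed usage climbing steadily while throughput gradually dropped to zero. Eventually an OOM error occurred, with CPU usage staying high because GC was consuming nearly all of the CPU.

High-concurrency failure scenario simulation

The client creates a thread that sends requests to the server in a loop, simulating a high-concurrency client.

The server code is as follows: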

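import io.netty.bootstrap.ServerBootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.handler.logging.LogLevel;
import io.netty.handler.logging.LoggingHandler;

/**
 * Created by lijianzhen1 on 2019/1/24.
 */
public final class LoadRunnerServer {

    static final int PORT = Integer.parseInt(System.getProperty("port", "8080"));

    public static void main(String[] args) throws Exception {
        // One boss thread accepts connections; the worker group handles I/O.
        EventLoopGroup bossGroup = new NioEventLoopGroup(1);
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        try {
            ServerBootstrap b = new ServerBootstrap();
            b.group(bossGroup, workerGroup)
                    .channel(NioServerSocketChannel.class)
                    .option(ChannelOption.SO_BACKLOG, 100)
                    .handler(new LoggingHandler(LogLevel.INFO))
                    .childHandler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        public void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline p = ch.pipeline();
                            p.addLast(new EchoServerHandler());
                        }
                    });
            // Bind and block until the server channel is closed.
            ChannelFuture f = b.bind(PORT).sync();
            f.channel().closeFuture().sync();
        } finally {
            bossGroup.shutdownGracefully();
            workerGroup.shutdownGracefully();
        }
    }
}

class EchoServerHandler extends ChannelInboundHandlerAdapter {

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        // Echo the message back; it is flushed in channelReadComplete.
        ctx.write(msg);
    }

    @Override
    public void channelReadComplete(ChannelHandlerContext ctx) {
        ctx.flush();
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        // Close the connection when an exception occurs.
        cause.printStackTrace();
        ctx.close();
    }
}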

The client code is as follows:

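// LoadRunnerClient.java
import io.netty.bootstrap.Bootstrap;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.ChannelOption;
import io.netty.channel.ChannelPipeline;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;

public class LoadRunnerClient {

    static final String HOST = System.getProperty("host", "127.0.0.1");
    static final int PORT = Integer.parseInt(System.getProperty("port", "8080"));

    @SuppressWarnings({"unchecked", "deprecation"})
    public static void main(String[] args) throws Exception {
        EventLoopGroup group = new NioEventLoopGroup();
        try {
            Bootstrap b = new Bootstrap();
            b.group(group)
                    .channel(NioSocketChannel.class)
                    .option(ChannelOption.TCP_NODELAY, true)
                    // Set the high water mark for outbound requests (10 MB).
                    .option(ChannelOption.WRITE_BUFFER_HIGH_WATER_MARK, 10 * 1024 * 1024)
                    .handler(new ChannelInitializer<SocketChannel>() {
                        @Override
                        public void initChannel(SocketChannel ch) throws Exception {
                            ChannelPipeline p = ch.pipeline();
                            p.addLast(new LoadRunnerClientHandler());
                        }
                    });
            // Connect and block until the channel is closed.
            ChannelFuture f = b.connect(HOST, PORT).sync();
            f.channel().closeFuture().sync();
        } finally {
            group.shutdownGracefully();
        }
    }
}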

// LoadRunnerClientHandler.java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.ReferenceCountUtil;

import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

public class LoadRunnerClientHandler extends ChannelInboundHandlerAdapter {

    private final ByteBuf firstMessage;
    Runnable loadRunner;
    AtomicLong sendSum = new AtomicLong(0);
    Runnable profileMonitor;
    static final int SIZE = Integer.parseInt(System.getProperty("size", "256"));

    /**
     * Creates a client-side handler.
     */
    public LoadRunnerClientHandler() {
        firstMessage = Unpooled.buffer(SIZE);
        for (int i = 0; i < firstMessage.capacity(); i++) {
            firstMessage.writeByte((byte) i);
        }
    }

    @Override
    public void channelActive(final ChannelHandlerContext ctx) {
        loadRunner = new Runnable() {
            @Override
            public void run() {
                try {
                    TimeUnit.SECONDS.sleep(30);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                ByteBuf msg = null;
                final int len = "Netty OOM Example".getBytes().length;
                // Send without any flow control: this loop is what triggers the backlog.
                while (true) {
                    msg = Unpooled.wrappedBuffer("Netty OOM Example".getBytes());
                    ctx.writeAndFlush(msg);
                }
            }
        };
        // The sender runs on a business thread, not on the channel's EventLoop.
        new Thread(loadRunner, "LoadRunner-Thread").start();
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        // Discard the echoed response and release its buffer.
        ReferenceCountUtil.release(msg);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        cause.printStackTrace();
        ctx.close();
    }
}

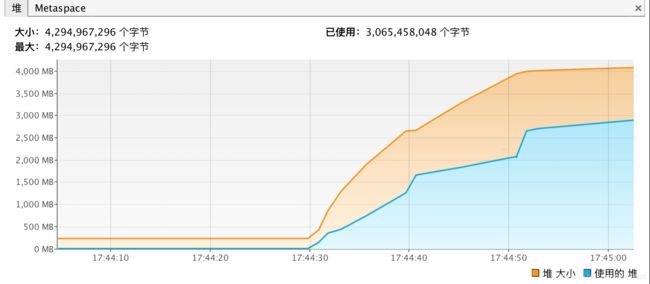

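After the connection is established, the client starts a thread that sends request messages to the server in a loop, simulating a client under stress test. After the system has run for a while, memory usage soars.

The old generation is full, and the system can no longer run.

Full GCs become more frequent, and each GC takes longer.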

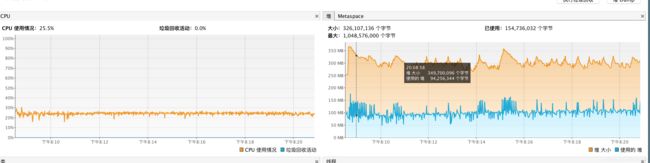

The CPU usage shows that GC is consuming a large share of CPU resources. Eventually an OOM occurs.

Exception: java.lang.OutOfMemoryError thrown from the UncaughtExceptionHandler in thread "LoadRunner-Thread"

[PSYoungGen: 134602K->134602K(227328K)] [ParOldGen: 682881K->682880K(683008K)] 817483K->817483K(910336K), [Metaspace: 12452K->12452K(1060864K)], 1.8986145 secs] [Times: user=11.42 sys=0.06, real=1.90 secs]

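Analyzing the memory-leak dump file with MAT

Analysis of the heap dump exported from the client (heapdump-1548325358543.hprof) shows that Netty's NioEventLoop accounts for 99.75% of the memory, which confirms that the leak is in NioEventLoop.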
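The article does not say how the dump file was produced. One common option on a HotSpot JVM is the `-XX:+HeapDumpOnOutOfMemoryError` flag; another is to trigger a dump from code. The following is a minimal sketch of the second option, assuming a HotSpot-based JDK; the class name `HeapDumpSketch` and the output file name are made up for illustration.

```java
import com.sun.management.HotSpotDiagnosticMXBean;

import java.io.IOException;
import java.io.UncheckedIOException;
import java.lang.management.ManagementFactory;
import java.nio.file.Files;
import java.nio.file.Path;

public class HeapDumpSketch {

    /**
     * Writes a heap dump of the current JVM to the given file and returns the path.
     * The target file must not exist yet; recent JDKs also expect a ".hprof" suffix.
     */
    public static Path dumpHeap(Path target, boolean liveObjectsOnly) {
        HotSpotDiagnosticMXBean bean =
                ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean.class);
        try {
            // liveObjectsOnly = true dumps only objects that are still reachable.
            bean.dumpHeap(target.toString(), liveObjectsOnly);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return target;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical output location: a fresh temporary directory.
        Path dir = Files.createTempDirectory("heapdump");
        Path file = dumpHeap(dir.resolve("heapdump-" + System.currentTimeMillis() + ".hprof"), true);
        System.out.println("Wrote " + file + " (" + Files.size(file) + " bytes)");
    }
}
```

The resulting `.hprof` file can then be opened in MAT in the same way as the dump analyzed here.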

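Analyzing the reference chain shows that the objects actually leaking are WriteAndFlushTask instances, each holding a pending client request message (msg) and a promise object, as shown in the figure below:

The cause is a backlog in Netty's send queue. The source code shows that when write is called from a business thread rather than from the channel's EventLoop thread, the send operation is wrapped in a WriteAndFlushTask and submitted to Netty's NioEventLoop for execution.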

// AbstractChannelHandlerContext.write(Object msg, boolean flush, ChannelPromise promise)
private void write(Object msg, boolean flush, ChannelPromise promise) {
    AbstractChannelHandlerContext next = findContextOutbound();
    final Object m = pipeline.touch(msg, next);
    EventExecutor executor = next.executor();
    if (executor.inEventLoop()) { // Is the current thread the EventLoop thread?
        if (flush) {
            next.invokeWriteAndFlush(m, promise);
        } else {
            next.invokeWrite(m, promise);
        }
    } else {
        // This is the branch that matters here: the caller is a business thread.
        AbstractWriteTask task;
        // flush is true on this path because the client calls writeAndFlush.
        if (flush) {
            // Build a WriteAndFlushTask.
            task = WriteAndFlushTask.newInstance(next, m, promise);
        } else {
            task = WriteTask.newInstance(next, m, promise);
        }
        safeExecute(executor, task, promise, m);
    }
}

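When messages are produced faster than the NioEventLoop I/O thread can process them, the write tasks pile up in the EventLoop's task queue, and this backlog is what causes the memory leak.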
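The effect can be reproduced without Netty. The sketch below is only an analogy, not Netty code: a single-threaded `ThreadPoolExecutor` with an unbounded queue stands in for the NioEventLoop, and the class and method names are invented for illustration. While the single worker is stalled, every submitted task, together with the message bytes it captures, stays in the queue.

```java
import java.nio.charset.StandardCharsets;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

public class TaskQueueBacklogDemo {

    /** Submits `messages` write tasks while the single worker is stalled; returns the queue length. */
    public static int queuedWhileStalled(int messages) {
        // One worker thread plus an unbounded queue: a stand-in for an event loop.
        ThreadPoolExecutor loop = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<Runnable>());
        CountDownLatch stalled = new CountDownLatch(1);
        CountDownLatch release = new CountDownLatch(1);
        try {
            // Occupy the worker, simulating an I/O thread that cannot keep up.
            loop.execute(() -> {
                stalled.countDown();
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            stalled.await();
            // The "business thread" keeps producing; nothing pushes back on it.
            for (int i = 0; i < messages; i++) {
                byte[] msg = "Netty OOM Example".getBytes(StandardCharsets.UTF_8);
                loop.execute(() -> consume(msg));
            }
            return loop.getQueue().size();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            release.countDown();
            loop.shutdown();
        }
    }

    /** Placeholder for the actual socket write. */
    private static void consume(byte[] msg) {
    }

    public static void main(String[] args) {
        System.out.println("queued tasks: " + queuedWhileStalled(100_000));
    }
}
```

Because the queue is unbounded, its size is limited only by the heap, which matches the shape of the leak seen in the dump: a large number of small task objects retained by one executor.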

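How to prevent send-queue backlog

To prevent messages from piling up on the client when the server is slow under high concurrency, flow control on the server is not enough: the client also needs its own concurrency protection so that it does not build up a backlog itself.

Netty's high and low water mark mechanism allows more precise flow control on the client. The client code is adjusted as follows: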

import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.util.ReferenceCountUtil;

import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

public class LoadRunnerClientHandler extends ChannelInboundHandlerAdapter {

    private final ByteBuf firstMessage;
    Runnable loadRunner;
    AtomicLong sendSum = new AtomicLong(0);
    Runnable profileMonitor;
    static final int SIZE = Integer.parseInt(System.getProperty("size", "256"));

    /**
     * Creates a client-side handler.
     */
    public LoadRunnerClientHandler() {
        firstMessage = Unpooled.buffer(SIZE);
        for (int i = 0; i < firstMessage.capacity(); i++) {
            firstMessage.writeByte((byte) i);
        }
    }

    @Override
    public void channelActive(final ChannelHandlerContext ctx) {
        // Limit the high water mark here (10 MB).
        ctx.channel().config().setWriteBufferHighWaterMark(10 * 1024 * 1024);
        loadRunner = new Runnable() {
            @Override
            public void run() {
                try {
                    TimeUnit.SECONDS.sleep(30);
                } catch (InterruptedException e) {
                    e.printStackTrace();
                }
                ByteBuf msg = null;
                final int len = "Netty OOM Example".getBytes().length;
                while (true) {
                    // Check whether the high water mark has been crossed.
                    if (ctx.channel().isWritable()) {
                        msg = Unpooled.wrappedBuffer("Netty OOM Example".getBytes());
                        // This is where the problem would occur without the check above.
                        ctx.writeAndFlush(msg);
                    } else {
                        System.out.println("Write queue is full; NIO buffer count: "
                                + ctx.channel().unsafe().outboundBuffer().nioBufferCount());
                    }
                }
            }
        };
        new Thread(loadRunner, "LoadRunner-Thread").start();
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) {
        // Discard the echoed response and release its buffer.
        ReferenceCountUtil.release(msg);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) {
        cause.printStackTrace();
        ctx.close();
    }
}

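After this change the client runs normally, memory and CPU usage are normal, and the memory leak is resolved.

With the modified code, once the number of bytes waiting in the send queue reaches the high water mark, the corresponding Channel becomes unwritable. The high water mark does not stop a business thread from calling write and adding messages to the pending queue, so the sender must check the Channel's state before each send: when the high water mark is reached the Channel is marked unwritable, and the sender uses the Channel's writable state to decide whether to send.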
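The writable flag behaves like a switch with hysteresis. The following is a simplified, Netty-free model of that behavior, not Netty's implementation; `WatermarkGate` and its method names are invented for illustration. It assumes the rule Netty documents for its water marks: the channel turns unwritable once pending bytes exceed the high water mark, and turns writable again only after they fall below the low water mark.

```java
public class WatermarkGate {

    private final long lowWaterMark;
    private final long highWaterMark;
    private long pendingBytes;
    private boolean writable = true;

    public WatermarkGate(long lowWaterMark, long highWaterMark) {
        if (lowWaterMark < 0 || highWaterMark < lowWaterMark) {
            throw new IllegalArgumentException("expected 0 <= low <= high");
        }
        this.lowWaterMark = lowWaterMark;
        this.highWaterMark = highWaterMark;
    }

    /** Called when a message is added to the pending queue. Never rejects the message. */
    public synchronized void enqueue(long bytes) {
        pendingBytes += bytes;
        if (pendingBytes > highWaterMark) {
            writable = false;
        }
    }

    /** Called when bytes have actually been written to the socket. */
    public synchronized void flushed(long bytes) {
        pendingBytes -= bytes;
        if (pendingBytes < lowWaterMark) {
            writable = true;
        }
    }

    public synchronized boolean isWritable() {
        return writable;
    }

    public static void main(String[] args) {
        // 32 KiB / 64 KiB are Netty's default low and high water marks.
        WatermarkGate gate = new WatermarkGate(32 * 1024, 64 * 1024);
        int sent = 0;
        int skipped = 0;
        for (int i = 0; i < 10_000; i++) {
            // A well-behaved sender checks the flag before every send.
            if (gate.isWritable()) {
                gate.enqueue(17); // "Netty OOM Example" is 17 bytes long
                sent++;
            } else {
                skipped++;
            }
        }
        System.out.println("sent=" + sent + ", skipped=" + skipped);
    }
}
```

Note that `enqueue` never refuses a message, which mirrors the point made above: the water mark only flips a flag, and protection depends entirely on the sender checking that flag.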

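Summary

In a real project, calculate and set the high water mark based on a combination of factors such as the planned business QPS, client processing performance, network bandwidth, number of connections, and average message size. Using the high water mark to throttle the send rate both protects the client itself and eases pressure on the server, preventing the server from being overwhelmed and crashing.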
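As a rough illustration of such a calculation, the sketch below derives a per-connection high water mark from a few of these factors. The formula is an assumption made for this example, not something prescribed by Netty or by the case above: it budgets the bytes produced during a tolerated backlog window and divides them evenly across connections. All names and input values are hypothetical.

```java
public class HighWaterMarkSizing {

    /**
     * Estimates a per-connection high water mark in bytes.
     *
     * @param qps             planned requests per second across all connections
     * @param avgMessageBytes average encoded message size in bytes
     * @param backlogSeconds  how many seconds of traffic may sit in the send queue
     * @param connections     number of connections sharing the load
     */
    public static long perConnectionHighWaterMark(
            long qps, long avgMessageBytes, double backlogSeconds, int connections) {
        if (qps < 0 || avgMessageBytes < 0 || backlogSeconds < 0 || connections <= 0) {
            throw new IllegalArgumentException("invalid sizing input");
        }
        // Total bytes produced during the tolerated backlog window.
        double totalBytes = qps * (double) avgMessageBytes * backlogSeconds;
        // Split evenly across connections, rounding up.
        return (long) Math.ceil(totalBytes / connections);
    }

    public static void main(String[] args) {
        // Hypothetical plan: 20,000 QPS, 256-byte messages, 2 s of backlog, 8 connections.
        long mark = perConnectionHighWaterMark(20_000, 256, 2.0, 8);
        System.out.println("high water mark per connection: " + mark + " bytes");
    }
}
```

With these inputs the result is 1,280,000 bytes per connection. Netty's water marks are `int` values, so the result should be range-checked before it is passed to `setWriteBufferHighWaterMark` or to a `WriteBufferWaterMark`, and the figure should be validated under load rather than trusted as computed.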