Deploying Logstash on CentOS and Getting Started with Simple Tests

Note: Logstash works out of the box. Once the archive is extracted, it is ready to use.

My environment: CentOS 6.9 + ES (Elasticsearch) 6.6.2

The installation steps are as follows:

1. Download from the official site

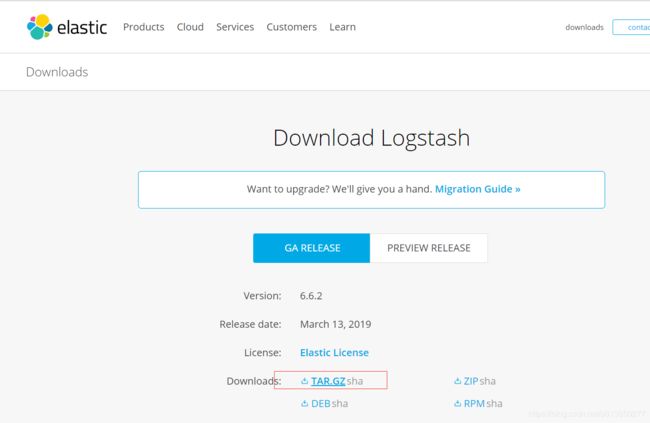

My ES version is 6.6.2, so I downloaded Logstash 6.6.2 to match. I have not investigated whether mismatched versions of the two affect each other.

The download URL for 6.6.2 is:

https://artifacts.elastic.co/downloads/logstash/logstash-6.6.2.tar.gz

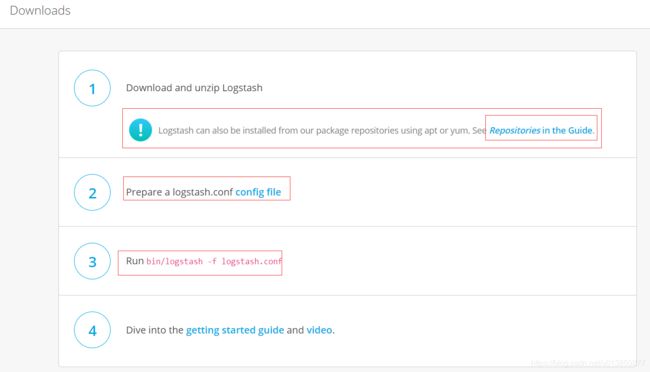

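The official site summarizes it as: download, extract, prepare a Logstash configuration file (details below), and run. Logstash can also be downloaded and installed via yum; see the official site for details (the link in item 1 of the screenshot).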
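For the yum route, Elastic's 6.x installation documentation describes importing the Elastic signing key and adding a repository definition. The sketch below only writes that repository definition to `./logstash.repo`; copying it into `/etc/yum.repos.d/` and installing require root. Please double-check the contents against the official installation page for your version before relying on it.

```shell
#!/bin/sh
# Write an Elastic 6.x yum repository definition to ./logstash.repo.
# Afterwards (as root) copy it to /etc/yum.repos.d/ and install with yum.
cat > logstash.repo <<'EOF'
[logstash-6.x]
name=Elastic repository for 6.x packages
baseurl=https://artifacts.elastic.co/packages/6.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md
EOF
```

Usage, as root: `rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch`, then `cp logstash.repo /etc/yum.repos.d/`, then `yum install logstash`. Note that this installs the latest 6.x package in the repository, which may be newer than 6.6.2.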

2. Extract

```
[root@bigdata ~]# tar -zxvf logstash-6.6.2.tar.gz
[root@bigdata ~]# mv logstash-6.6.2 /usr/
```

3. Testing

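First, a brief explanation.

The official documentation describes Logstash in a way I find very intuitive (see the official site). In short:

- From the official explanation, Logstash is a data processing pipeline (I think it is quite similar to Flume). It can ingest data from multiple sources at the same time, transform it, and then send it wherever you want to store it, whether that is ES or some other destination.
- The pipeline is made up of three components: input, filter, and output.
- Put simply, input receives the data, filter processes the received data (you can think of it as the business logic), and output emits the processed data. All three are written into the Logstash configuration file mentioned above, and Logstash is started with that file. Input and output are required; the filter section is optional.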
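A minimal sketch of a configuration file with all three sections. The file name `pipeline-skeleton.conf` is hypothetical; the input and output mirror Example 1 below (console in, console out), and the empty filter section only marks where processing would go.

```shell
#!/bin/sh
# Write a skeleton pipeline config with the three sections:
# input (where events come from), filter (optional processing),
# output (where events go).
cat > pipeline-skeleton.conf <<'EOF'
input {
  stdin { }      # one event per line typed on the console
}

filter {
  # no filters yet: events pass through unchanged
}

output {
  stdout { }     # print each event to the console
}
EOF
```

From the Logstash directory, `bin/logstash -f pipeline-skeleton.conf --config.test_and_exit` should only check the configuration syntax and exit; dropping `--config.test_and_exit` runs the pipeline.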

Example 1: a quick run of the example from the official site

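- Start Logstash from its installation directory. Instead of writing a configuration file, pass the configuration directly on the command line: the input reads from the console, there is no filter, and the output prints to the console.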

```
bin/logstash -e 'input { stdin { } } output { stdout {} }'
```

After a short wait, Logstash starts successfully with the following log:

```
[root@node logstash-6.6.2]# bin/logstash -e 'input { stdin { } } output { stdout {} }'
Sending Logstash logs to /usr/logstash-6.6.2/logs which is now configured via log4j2.properties
[2019-03-22T17:06:43,339][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
[2019-03-22T17:06:43,358][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.2"}
[2019-03-22T17:06:50,689][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>32, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}
[2019-03-22T17:06:50,823][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}
The stdin plugin is now waiting for input:
[2019-03-22T17:06:50,872][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
[2019-03-22T17:06:51,114][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
```

Type HelloWorld in the console; after Logstash processes it, the following is printed to the console:

```
{
  "@version" => "1",
  "message" => "HelloWorld",
  "host" => "node",
  "@timestamp" => 2019-03-22T09:07:17.026Z
}
```

That is the simplest example provided on the Logstash official site.
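Example 1 leaves the filter stage empty. As a hedged illustration of what a filter does, the sketch below adds the standard `mutate` filter to the same console pipeline: it upper-cases the `message` field and adds one extra field. The file name `stdin-mutate.conf` and the field `source` are hypothetical.

```shell
#!/bin/sh
# Console-in, console-out pipeline with a mutate filter in between.
cat > stdin-mutate.conf <<'EOF'
input {
  stdin { }
}

filter {
  mutate {
    uppercase => ["message"]                 # e.g. HelloWorld -> HELLOWORLD
    add_field => { "source" => "console" }   # hypothetical extra field
  }
}

output {
  stdout { }
}
EOF
```

Running `bin/logstash -f stdin-mutate.conf` and typing HelloWorld should print an event whose `message` is `HELLOWORLD` and which carries the extra `source` field.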

Example 2: putting the configuration in a file

This configuration watches the file given by `path`:

```
[root@bigdata logstash]# vi test.conf
```

```
input {
  file {
    path => "/usr/logstash/testFile.txt"
  }
}

output {
  stdout {}
}
```

Start Logstash:

```
[root@bigdata logstash]# ./bin/logstash -f test.conf
Sending Logstash logs to /usr/logstash/logs which is now configured via log4j2.properties
[2019-03-22T17:28:52,488][WARN ][logstash.config.source.multilocal] Ignoring the 'pipelines.yml' file because modules or command line options are specified
[2019-03-22T17:28:52,510][INFO ][logstash.runner ] Starting Logstash {"logstash.version"=>"6.6.2"}
[2019-03-22T17:28:59,869][INFO ][logstash.pipeline ] Starting pipeline {:pipeline_id=>"main", "pipeline.workers"=>32, "pipeline.batch.size"=>125, "pipeline.batch.delay"=>50}
[2019-03-22T17:29:00,125][INFO ][logstash.inputs.file ] No sincedb_path set, generating one based on the "path" setting {:sincedb_path=>"/usr/logstash/data/plugins/inputs/file/.sincedb_9ae59c1ca8e4513c20f0153fea772b9a", :path=>["/usr/logstash/testFile.txt"]}
[2019-03-22T17:29:00,173][INFO ][logstash.pipeline ] Pipeline started successfully {:pipeline_id=>"main", :thread=>"#"}
[2019-03-22T17:29:00,230][INFO ][filewatch.observingtail ] START, creating Discoverer, Watch with file and sincedb collections
[2019-03-22T17:29:00,234][INFO ][logstash.agent ] Pipelines running {:count=>1, :running_pipelines=>[:main], :non_running_pipelines=>[]}
[2019-03-22T17:29:00,574][INFO ][logstash.agent ] Successfully started Logstash API endpoint {:port=>9600}
```

```
{
  "message" => "",
  "@version" => "1",
  "@timestamp" => 2019-03-22T09:31:12.048Z,
  "path" => "/usr/logstash/testFile.txt",
  "host" => "bigdata"
}
```

After modifying the watched file, the following output appears:

```
[root@bigdata logstash]# vi testFile.txt
Hello word
Hello Java
```

```
{
  "message" => "Hello word",
  "@version" => "1",
  "@timestamp" => 2019-03-22T09:31:12.016Z,
  "path" => "/usr/logstash/testFile.txt",
  "host" => "bigdata"
}
{
  "message" => "Hello Java",
  "@version" => "1",
  "@timestamp" => 2019-03-22T09:31:39.185Z,
  "path" => "/usr/logstash/testFile.txt",
  "host" => "bigdata"
}
```
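By default the file input starts at the end of a file it has not seen before (`start_position` defaults to `"end"`) and records its read position in a sincedb file (the log line about `sincedb_path` shows where), so only lines added after startup are printed. A common way to re-read a test file from the start on every run is to set `start_position => "beginning"` and point `sincedb_path` at `/dev/null`. The sketch below writes such a config and appends test lines with `echo` instead of editing in vi; `WORKDIR`, `test-beginning.conf`, and the default of the current directory are assumptions (the article itself uses `/usr/logstash`).

```shell
#!/bin/sh
# Hypothetical working directory; defaults to the current directory.
# $(pwd) keeps the path absolute, which the file input requires.
WORKDIR="${WORKDIR:-$(pwd)}"

# Read the watched file from the beginning and do not persist the
# read position, so every run re-reads the whole file.
cat > "$WORKDIR/test-beginning.conf" <<EOF
input {
  file {
    path => "$WORKDIR/testFile.txt"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}

output {
  stdout {}
}
EOF

# Append test lines instead of editing the file interactively.
echo "Hello word" >> "$WORKDIR/testFile.txt"
echo "Hello Java" >> "$WORKDIR/testFile.txt"
```

Then start it with `bin/logstash -f "$WORKDIR/test-beginning.conf"`; with these settings the existing lines should be printed on each start, not only lines appended afterwards.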

Example 3: syncing MySQL data to ES

See the linked article: Syncing MySQL data to Elasticsearch with Logstash-input-jdbc
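For orientation only, here is a minimal sketch of such a pipeline, assuming the jdbc input and elasticsearch output plugins are available in your installation. The driver jar path, database URL, credentials, table, and index name are all hypothetical placeholders. `com.mysql.jdbc.Driver` is the MySQL Connector/J 5.x class name; Connector/J 8 uses `com.mysql.cj.jdbc.Driver`.

```shell
#!/bin/sh
# Hypothetical MySQL -> Elasticsearch pipeline; replace every placeholder.
cat > mysql-to-es.conf <<'EOF'
input {
  jdbc {
    jdbc_driver_library => "/path/to/mysql-connector-java.jar"   # placeholder
    jdbc_driver_class => "com.mysql.jdbc.Driver"
    jdbc_connection_string => "jdbc:mysql://localhost:3306/mydb"  # placeholder
    jdbc_user => "root"                                           # placeholder
    jdbc_password => "changeme"                                   # placeholder
    statement => "SELECT * FROM mytable"                          # placeholder
    schedule => "* * * * *"   # cron syntax: run the query every minute
  }
}

output {
  elasticsearch {
    hosts => ["localhost:9200"]
    index => "mytable"
    # Assumes the table has an "id" primary key; reusing it as the
    # document id makes each scheduled run overwrite, not duplicate.
    document_id => "%{id}"
  }
}
EOF
```

This sketch re-reads the whole table on every scheduled run; incremental sync is covered in the linked article.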
