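Integrating Kerberos with CDH6

I. Overview

This document was compiled for the secure deployment of a Cloudera big data cluster. It covers deploying a Kerberos server for the cluster and integrating the cluster with it.

A separate article describes configuring Kerberos for high availability (HA): Kerberos High-Availability (HA) Configuration

II. Environment

1. Hostnames

The first letter of every hostname must be lowercase; otherwise many unexpected problems occur when integrating Kerberos.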
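The hostname rule can be checked on each node before going further. The following is a minimal sketch; the function name `hostname_starts_lowercase` is hypothetical and not part of any tool mentioned in this article.

```shell
# Succeeds only if the first character of the given hostname
# is a lowercase letter.
hostname_starts_lowercase() {
  case "$1" in
    [[:lower:]]*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage on a node:
#   hostname_starts_lowercase "$(hostname)" || echo "hostname must start with a lowercase letter"
```

Running the usage line on every node should print nothing when the hostnames comply.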

2. The hosts file

The /etc/hosts file must not contain an entry that resolves to 127.0.0.1.
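A quick way to spot offending entries is to search the file for active lines that start with 127.0.0.1. This is only a sketch; the helper name `find_loopback_entries` is an assumption made for illustration.

```shell
# Print every non-comment line of a hosts file that maps a name to 127.0.0.1.
# Exit status 0 means at least one such entry was found.
find_loopback_entries() {
  grep -E '^[[:space:]]*127\.0\.0\.1([[:space:]]|$)' "$1"
}

# Usage:
#   find_loopback_entries /etc/hosts && echo "remove or comment out the lines above"
```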

3. AES-256 encryption

Our systems run Red Hat Enterprise Linux Server release 7.5. On CentOS 5.6 and later, AES-256 is the default encryption type. Whichever encryption type is used, ZooKeeper requires the Java Cryptography Extension (JCE) Unlimited Strength Jurisdiction Policy Files to be installed on every node in the cluster. Otherwise the following error is reported:

Failed to authenticate using SASL

javax.security.sasl.SaslException: GSS initiate failed [Caused by GSSException: Failure unspecified at GSS-API level (Mechanism level: Encryption type AES256 CTS mode with HMAC SHA1-96 is not supported/enabled)]...

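The downloaded archive unpacks to two JAR files, local_policy.jar and US_export_policy.jar. The copies bundled with the default JDK are limited-strength and do not meet the requirement, so they must be replaced: download the unlimited-strength versions from the official website and place them in /usr/java/jdk1.8.0_121/jre/lib/security.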
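Whether the replacement took effect can be checked by asking the JVM for its maximum allowed AES key length through `javax.crypto.Cipher.getMaxAllowedKeyLength`. The sketch below only classifies that number; the helper name `jce_policy_status` is hypothetical, and the usage line assumes the JDK's `jrunscript` tool is on the PATH.

```shell
# Classify the value returned by Cipher.getMaxAllowedKeyLength("AES"):
# the limited policy reports 128, the unlimited policy a much larger number.
jce_policy_status() {
  if [ "$1" -ge 256 ]; then
    echo unlimited
  else
    echo limited
  fi
}

# Usage on a node with JDK 8 (assumes jrunscript is available):
#   jce_policy_status "$(jrunscript -e 'print(javax.crypto.Cipher.getMaxAllowedKeyLength("AES"))')"
```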

Note: AES-256 is optional; the rest of this article uses aes128-cts.

III. Installing the Kerberos Server (KDC)

1. Install the Kerberos packages on all nodes:


2. Choose the Cloudera Manager node as the KDC server and install the additional packages:
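On RHEL/CentOS 7, steps 1 and 2 might look like the sketch below. The package lists are the usual MIT Kerberos packages for that platform and are an assumption, not taken from the original article; the function names are hypothetical.

```shell
# Set DRY=echo to print the commands instead of running them.

# Step 1: Kerberos client packages, on every cluster node.
install_kerberos_client() {
  ${DRY:-} yum install -y krb5-workstation krb5-libs
}

# Step 2: additional packages, only on the Cloudera Manager / KDC node
# (assumed package list).
install_kdc_server() {
  ${DRY:-} yum install -y krb5-server openldap-clients
}

# Usage as root:
#   install_kerberos_client      # all nodes
#   install_kdc_server           # KDC node only
```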


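3. The KDC server involves three configuration files: /etc/krb5.conf, /var/kerberos/krb5kdc/kdc.conf, and /var/kerberos/krb5kdc/kadm5.acl.

Note: the settings in these three files have been verified through dozens of test runs. Do not change any parameter arbitrarily!

Edit /etc/krb5.conf. This is the client-side configuration and must be synchronized to every client node. Change the realm and the corresponding kdc/kadmin server addresses.

[root@host1 bin]# cat /etc/krb5.conf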

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

default_realm = SPDB.COM

dns_lookup_realm = false

dns_lookup_kdc = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

[realms]

SPDB.COM = {

kdc = host1

admin_server = host1

}

[domain_realm]

.spdb.com = SPDB.COM

spdb.com = SPDB.COM

[kdc]

profile=/var/kerberos/krb5kdc/kdc.conf

Edit /var/kerberos/krb5kdc/kdc.conf. This is the Kerberos server configuration; change the realm name in it.

[root@host1 bin]# cat /var/kerberos/krb5kdc/kdc.conf

[kdcdefaults]

kdc_ports = 88

kdc_tcp_ports = 88

[realms]

SPDB.COM = {

max_renewable_life = 7d

acl_file = /var/kerberos/krb5kdc/kadm5.acl

dict_file = /usr/share/dict/words

admin_keytab = /var/kerberos/krb5kdc/kadm5.keytab

supported_enctypes = aes256-cts:normal aes128-cts:normal des3-hmac-sha1:normal arcfour-hmac:normal camellia256-cts:normal camellia128-cts:normal des-hmac-sha1:normal des-cbc-md5:normal des-cbc-crc:normal

}

Edit /var/kerberos/krb5kdc/kadm5.acl to define which principals are administrators. Here, any principal whose name ends in /admin is treated as an administrator.
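A typical single-line ACL that matches this description grants all privileges (`*`) to every `*/admin` principal in the realm. The sketch below writes such a line; the helper name `write_kadm5_acl` is hypothetical, and the exact ACL used by the author is not shown in the article.

```shell
# Write a one-line ACL that gives every */admin principal in the realm
# full administrative privileges.
write_kadm5_acl() {
  realm="$1"
  acl_file="$2"
  printf '*/admin@%s\t*\n' "$realm" > "$acl_file"
}

# Usage on the KDC host:
#   write_kadm5_acl SPDB.COM /var/kerberos/krb5kdc/kadm5.acl
```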

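4. After the configuration files are complete, initialize the KDC database from the command line. This creates the principal database under /var/kerberos/krb5kdc/.

5. Then start the KDC processes and enable them at boot.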

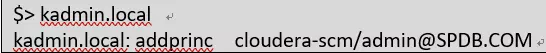
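Steps 4 and 5 could be carried out roughly as follows on a systemd-based system such as RHEL 7. This is a sketch under assumptions: the function names are hypothetical, and the service names `krb5kdc` and `kadmin` are the ones normally installed by the krb5-server package.

```shell
# Set DRY=echo to print the commands instead of running them.

# Step 4: create the principal database for the given realm.
# -s stores the master key in a stash file so the KDC can start unattended.
init_kdc_database() {
  ${DRY:-} kdb5_util create -r "$1" -s
}

# Step 5: start the KDC and admin daemons and enable them at boot.
start_kdc_services() {
  ${DRY:-} systemctl start krb5kdc kadmin
  ${DRY:-} systemctl enable krb5kdc kadmin
}

# Usage as root on the KDC host:
#   init_kdc_database SPDB.COM   # prompts for the database master password
#   start_kdc_services
```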

6. Use kadmin to create a Kerberos account for Cloudera Manager.
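A minimal sketch of this step, run locally on the KDC host with `kadmin.local`. The principal name cloudera-scm/admin is the one entered later in the Cloudera Manager wizard; the function name is hypothetical.

```shell
# Set DRY=echo to print the command instead of running it.

# Create the administrator principal that Cloudera Manager will use.
# kadmin.local prompts for the new principal's password.
create_cm_admin_principal() {
  ${DRY:-} kadmin.local -q "addprinc cloudera-scm/admin"
}

# Usage as root on the KDC host:
#   create_cm_admin_principal
```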

7. Copy the krb5.conf file from the KDC server to all Kerberos clients.

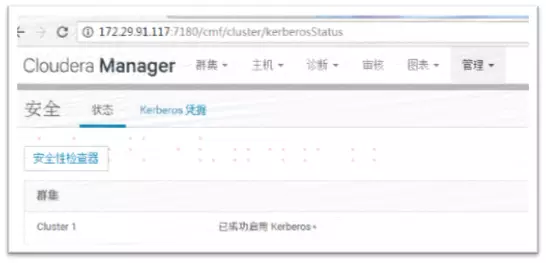
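Copying the file can be scripted with a simple loop over the client hosts. The sketch assumes root SSH access from the KDC host; the hostnames in the usage line and the function name are hypothetical.

```shell
# Set DRY=echo to print the commands instead of running them.

# Copy the KDC host's /etc/krb5.conf to each host given as an argument.
distribute_krb5_conf() {
  for host in "$@"; do
    ${DRY:-} scp /etc/krb5.conf "root@${host}:/etc/krb5.conf"
  done
}

# Usage (hypothetical hostnames):
#   distribute_krb5_conf host2 host3
```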

IV. Enabling Kerberos on the CDH Cluster

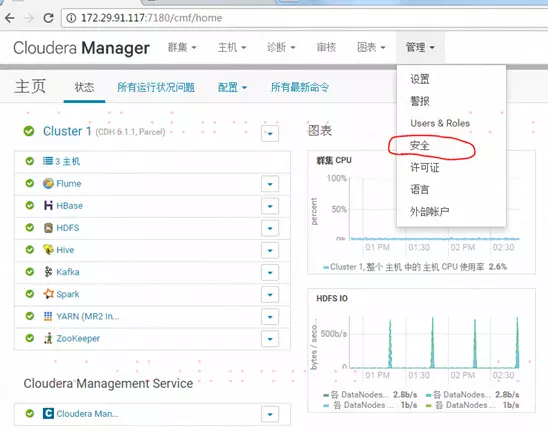

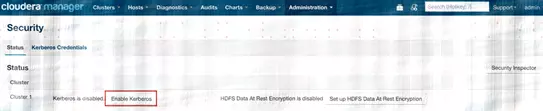

1. In Cloudera Manager, go to "Administration" > "Security" and choose to enable Kerberos.

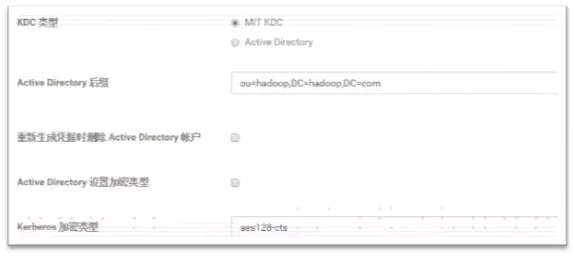

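2. Fill in the basic configuration: select MIT KDC, enter aes128-cts as the encryption type, enter SPDB.COM as the Kerberos security realm, and set both KDC Server and KDC Admin Server Host to the CM hostname.

3. Tick all of the prerequisites and click Continue.

4. Click Continue. Leave the option that lets Cloudera Manager manage krb5.conf unchecked, then click Continue.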

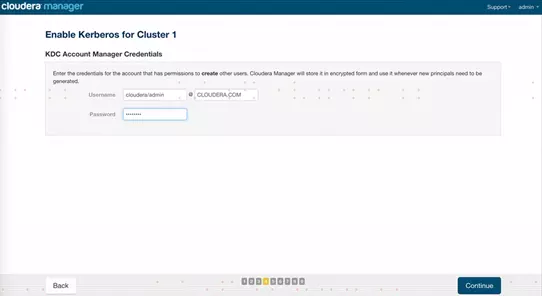

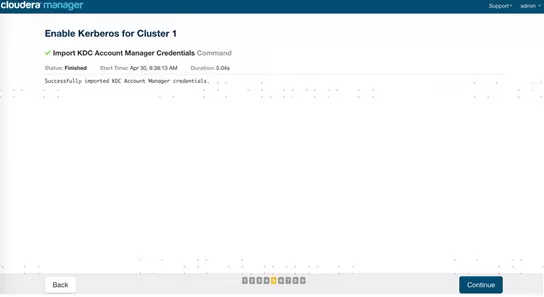

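5. Enter the Kerberos administrator account for Cloudera Manager. It must match the account created earlier: cloudera-scm/admin. Click Continue.

6. Configure the principal names used by each service. The defaults are fine. Click Continue.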

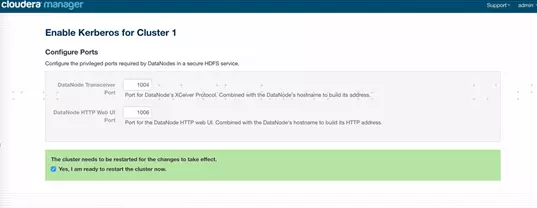

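7. Configure the ports HDFS requires; the defaults are fine. Then tick the option confirming that the cluster can be restarted (the "Yes, I am ready…" part) and click Continue.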

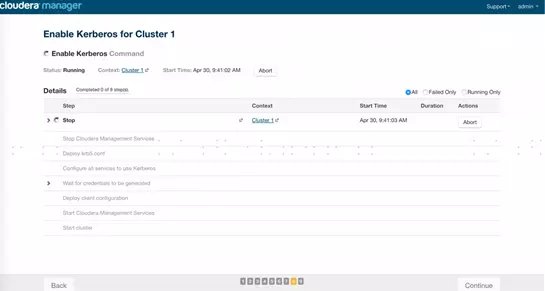

8. Wait for the cluster restart to finish.

9. Kerberos is now enabled.

V. Additional Kafka Configuration and Verification (Kafka with Kerberos)

After Kerberos is enabled on the CDH cluster, Kafka needs additional configuration; otherwise it fails at startup with the following errors:

19/06/19 19:27:22 WARN zookeeper.ClientCnxn: SASL configuration failed:

javax.security.auth.login.LoginException: No JAAS configuration section named 'Client' was found in specified JAAS configuration file: '/tmp/wk/kafka/jaas-keytab.conf'. Will continue connection to Zookeeper server without SASL authentication, if Zookeeper server allows it.

19/06/19 19:27:22 INFO zookeeper.ClientCnxn: Opening socket connection to server localhost/127.0.0.1:2181

19/06/19 19:27:22 ERROR zookeeper.ZooKeeperClient: [ZooKeeperClient] Auth failed.

1. Server side

After Kerberos is enabled on the CDH cluster, CM by default sets ssl.client.auth to none and turns on Enable Kerberos Authentication. Kafka needs one more setting beyond that: in CM, change security.inter.broker.protocol to SASL_PLAINTEXT, then restart the Kafka service.

2. Client side

Three files need to be created: jaas.conf, client.properties, and jaas-keytab.conf.

Create the jaas.conf file with the following content:

[root@host1 kafka]# cat jaas.conf

KafkaClient{

com.sun.security.auth.module.Krb5LoginModule

required

useTicketCache=true;

};

Create the client.properties file with the following content:

[root@host1 kafka]# cat client.properties

security.protocol=SASL_PLAINTEXT

sasl.kerberos.service.name=kafka

Create the jaas-keytab.conf file with the following content:

[root@host1 kafka]# cat jaas-keytab.conf

KafkaClient{

com.sun.security.auth.module.Krb5LoginModule

required

useKeyTab=true

keyTab="/root/hdfs.keytab"

principal="[email protected]";

};

Next, authenticate. There are two ways to do so: with kinit username, or with a keytab file.

Option 1:

export KAFKA_OPTS="-Djava.security.auth.login.config=/tmp/wk/kafka/jaas.conf"

Option 2:

export KAFKA_OPTS="-Djava.security.auth.login.config=/tmp/wk/kafka/jaas-keytab.conf"

Either one works.
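The difference between the two options is where the credentials come from: jaas.conf reads the ticket cache, so a ticket has to be obtained with kinit first, while jaas-keytab.conf logs in from the keytab directly. A small sketch of switching between them; the helper name `use_jaas_file` is hypothetical.

```shell
# Point the Kafka command-line tools at a JAAS file through KAFKA_OPTS.
use_jaas_file() {
  export KAFKA_OPTS="-Djava.security.auth.login.config=$1"
}

# Option 1 (ticket cache): run `kinit <username>` first, then
#   use_jaas_file /tmp/wk/kafka/jaas.conf
# Option 2 (keytab): no kinit needed
#   use_jaas_file /tmp/wk/kafka/jaas-keytab.conf
```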

Testing the Producer

[root@host1 kafka]# kafka-console-producer --broker-list 172.29.X.X:9092 --topic test --producer.config client.properties

19/06/20 09:23:07 INFO utils.Log4jControllerRegistration$: Registered kafka:type=kafka.Log4jControllerMBean

19/06/20 09:23:07 INFO producer.ProducerConfig: ProducerConfig values:

acks= 1

batch.size= 16384

bootstrap.servers= [172.29.X.X:9092]

buffer.memory= 33554432

client.id= console-producer

compression.type= none

connections.max.idle.ms= 540000

enable.idempotence= false

interceptor.classes= []

key.serializer= class org.apache.kafka.common.serialization.ByteArraySerializer

linger.ms= 1000

max.block.ms= 60000

max.in.flight.requests.per.connection= 5

max.request.size= 1048576

metadata.max.age.ms= 300000

metric.reporters= []

metrics.num.samples= 2

metrics.recording.level= INFO

metrics.sample.window.ms= 30000

partitioner.class= class org.apache.kafka.clients.producer.internals.DefaultPartitioner

receive.buffer.bytes= 32768

reconnect.backoff.max.ms= 1000

reconnect.backoff.ms= 50

request.timeout.ms= 1500

retries= 3

retry.backoff.ms= 100

sasl.client.callback.handler.class= null

sasl.jaas.config= null

sasl.kerberos.kinit.cmd= /usr/bin/kinit

sasl.kerberos.min.time.before.relogin= 60000

sasl.kerberos.service.name= kafka

sasl.kerberos.ticket.renew.jitter= 0.05

sasl.kerberos.ticket.renew.window.factor= 0.8

sasl.login.callback.handler.class= null

sasl.login.class= null

sasl.login.refresh.buffer.seconds= 300

sasl.login.refresh.min.period.seconds= 60

sasl.login.refresh.window.factor= 0.8

sasl.login.refresh.window.jitter= 0.05

sasl.mechanism= GSSAPI

security.protocol= SASL_PLAINTEXT

send.buffer.bytes= 102400

ssl.cipher.suites= null

ssl.enabled.protocols= [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm= null

ssl.key.password= null

ssl.keymanager.algorithm= SunX509

ssl.keystore.location= null

ssl.keystore.password= null

ssl.keystore.type= JKS

ssl.protocol= TLS

ssl.provider= null

ssl.secure.random.implementation= null

ssl.trustmanager.algorithm= PKIX

ssl.truststore.location= null

ssl.truststore.password= null

ssl.truststore.type= JKS

transaction.timeout.ms= 60000

transactional.id= null

value.serializer= class org.apache.kafka.common.serialization.ByteArraySerializer

19/06/20 09:23:07 INFO authenticator.AbstractLogin: Successfully logged in.

19/06/20 09:23:07 INFO kerberos.KerberosLogin: [Principal=null]: TGT refresh thread started.

19/06/20 09:23:07 INFO kerberos.KerberosLogin: [Principal=null]: TGT valid starting at: Thu Jun 20 09:20:41 CST 2019

19/06/20 09:23:07 INFO kerberos.KerberosLogin: [Principal=null]: TGT expires: Fri Jun 21 09:20:41 CST 2019

19/06/20 09:23:07 INFO kerberos.KerberosLogin: [Principal=null]: TGT refresh sleeping until: Fri Jun 21 05:43:42 CST 2019

19/06/20 09:23:07 INFO utils.AppInfoParser: Kafka version : 2.0.0-cdh6.1.1

19/06/20 09:23:07 INFO utils.AppInfoParser: Kafka commitId : unknown

>Hello World!

19/06/20 09:23:29 INFO clients.Metadata: Cluster ID: 8JBaORpCR5evbE0EjF8SrA

>Hello Kerberos!

>quit;

If the producer client starts and sends messages without errors, the producer client is configured correctly.

Testing the Consumer

[root@host1 kafka]# kafka-console-consumer --topic test --from-beginning --bootstrap-server 172.29.X.X:9092 --consumer.config client.properties

19/06/20 09:34:02 INFO utils.Log4jControllerRegistration$: Registered kafka:type=kafka.Log4jControllerMBean

19/06/20 09:34:03 INFO consumer.ConsumerConfig: ConsumerConfig values:

auto.commit.interval.ms= 5000

auto.offset.reset= earliest

bootstrap.servers= [172.29.91.117:9092]

check.crcs= true

client.id=

connections.max.idle.ms= 540000

default.api.timeout.ms= 60000

enable.auto.commit= true

exclude.internal.topics= true

fetch.max.bytes= 52428800

fetch.max.wait.ms= 500

fetch.min.bytes= 1

group.id= console-consumer-26237

heartbeat.interval.ms= 3000

interceptor.classes= []

internal.leave.group.on.close= true

isolation.level= read_uncommitted

key.deserializer= class org.apache.kafka.common.serialization.ByteArrayDeserializer

max.partition.fetch.bytes= 1048576

max.poll.interval.ms= 300000

max.poll.records= 500

metadata.max.age.ms= 300000

metric.reporters= []

metrics.num.samples= 2

metrics.recording.level= INFO

metrics.sample.window.ms= 30000

partition.assignment.strategy= [class org.apache.kafka.clients.consumer.RangeAssignor]

receive.buffer.bytes= 65536

reconnect.backoff.max.ms= 1000

reconnect.backoff.ms= 50

request.timeout.ms= 30000

retry.backoff.ms= 100

sasl.client.callback.handler.class= null

sasl.jaas.config= null

sasl.kerberos.kinit.cmd= /usr/bin/kinit

sasl.kerberos.min.time.before.relogin= 60000

sasl.kerberos.service.name= kafka

sasl.kerberos.ticket.renew.jitter= 0.05

sasl.kerberos.ticket.renew.window.factor= 0.8

sasl.login.callback.handler.class= null

sasl.login.class= null

sasl.login.refresh.buffer.seconds= 300

sasl.login.refresh.min.period.seconds= 60

sasl.login.refresh.window.factor= 0.8

sasl.login.refresh.window.jitter= 0.05

sasl.mechanism= GSSAPI

security.protocol= SASL_PLAINTEXT

send.buffer.bytes= 131072

session.timeout.ms= 10000

ssl.cipher.suites= null

ssl.enabled.protocols= [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm= null

ssl.key.password= null

ssl.keymanager.algorithm= SunX509

ssl.keystore.location= null

ssl.keystore.password= null

ssl.keystore.type= JKS

ssl.protocol= TLS

ssl.provider= null

ssl.secure.random.implementation= null

ssl.trustmanager.algorithm= PKIX

ssl.truststore.location= null

ssl.truststore.password= null

ssl.truststore.type= JKS

value.deserializer= class org.apache.kafka.common.serialization.ByteArrayDeserializer

19/06/20 09:34:03 INFO authenticator.AbstractLogin: Successfully logged in.

19/06/20 09:34:03 INFO kerberos.KerberosLogin: [Principal=null]: TGT refresh thread started.

19/06/20 09:34:03 INFO kerberos.KerberosLogin: [Principal=null]: TGT valid starting at: Thu Jun 20 09:20:41 CST 2019

19/06/20 09:34:03 INFO kerberos.KerberosLogin: [Principal=null]: TGT expires: Fri Jun 21 09:20:41 CST 2019

19/06/20 09:34:03 INFO kerberos.KerberosLogin: [Principal=null]: TGT refresh sleeping until: Fri Jun 21 05:01:47 CST 2019

19/06/20 09:34:03 INFO utils.AppInfoParser: Kafka version : 2.0.0-cdh6.1.1

19/06/20 09:34:03 INFO utils.AppInfoParser: Kafka commitId : unknown

19/06/20 09:34:03 INFO clients.Metadata: Cluster ID: 8JBaORpCR5evbE0EjF8SrA

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] Discovered group coordinator wgqcasappun06:9092 (id: 2147483459 rack: null)

19/06/20 09:34:04 INFO internals.ConsumerCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] Revoking previously assigned partitions []

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] Group coordinator wgqcasappun06:9092 (id: 2147483459 rack: null) is unavailable or invalid, will attempt rediscovery

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] Discovered group coordinator wgqcasappun06:9092 (id: 2147483459 rack: null)

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:04 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:05 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:05 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:05 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] (Re-)joining group

19/06/20 09:34:08 INFO internals.AbstractCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] Successfully joined group with generation 1

19/06/20 09:34:08 INFO internals.ConsumerCoordinator: [Consumer clientId=consumer-1, groupId=console-consumer-26237] Setting newly assigned partitions [test-0]

19/06/20 09:34:08 INFO internals.Fetcher: [Consumer clientId=consumer-1, groupId=console-consumer-26237] Resetting offset for partition test-0 to offset 0.

Hello World!

Hello Kerberos!

quit

If the consumer client starts and receives messages without errors, the client is configured correctly.

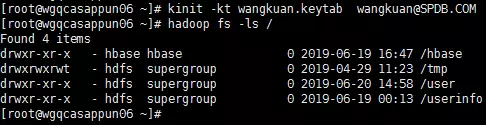

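VI. Using Kerberos

The wangkuan user is used here to access HDFS and to run MapReduce.

1. Accessing HDFS

Without a Kerberos login, the request fails authentication with "Client cannot authenticate via:[TOKEN, KERBEROS]".

Log in to Kerberos as the wangkuan user. If no error is reported, the login succeeded.

Accessing HDFS again now succeeds.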
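The sequence above can be sketched as follows. The exact HDFS command used by the author is not shown in the article, so listing the root directory with `hdfs dfs -ls /` is an assumption, as is the function name.

```shell
# Set DRY=echo to print the commands instead of running them.

# Obtain a ticket for the given user (kinit prompts for the password),
# show the ticket cache, then list the HDFS root directory.
check_hdfs_access() {
  ${DRY:-} kinit "$1" &&
    ${DRY:-} klist &&
    ${DRY:-} hdfs dfs -ls /
}

# Usage:
#   check_hdfs_access wangkuan
```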

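2. Running MapReduce

Without a Kerberos login, the job fails authentication with "Client cannot authenticate via:[TOKEN, KERBEROS]".

Log in to Kerberos as the wangkuan user. If no error is reported, the login succeeded.

Running the job again now succeeds.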
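Any MapReduce job can serve as the test. The sketch below runs the bundled pi example after a successful kinit; the jar path is the usual location in a parcel-based CDH install and is an assumption, as are the function name and the job arguments.

```shell
# Set DRY=echo to print the command instead of running it.

# Run the pi example job. The first argument overrides the assumed
# location of the examples jar in a parcel-based CDH install.
run_pi_example() {
  examples_jar="${1:-/opt/cloudera/parcels/CDH/lib/hadoop-mapreduce/hadoop-mapreduce-examples.jar}"
  ${DRY:-} hadoop jar "$examples_jar" pi 10 100
}

# Usage, after `kinit wangkuan`:
#   run_pi_example
```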

For Kafka configuration and verification, see Section V.