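- Paper notes: NDT-Transformer: Large-Scale 3D Point Cloud Localization using the Normal Distribution Transfor

入门打工人

Notes, SLAM, localization algorithms

Paper notes: NDT-Transformer: Large-Scale 3D Point Cloud Localization using the Normal Distribution Transform Representation. Abstract: In GPS-challenged environments, autonomous driving places high demands on place recognition based on 3D point clouds, which is also an essential component (namely loop-closure detection) of lidar-based SLAM systems. This paper proposes a method named NDT-Transf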

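- [Paper notes] Circle Loss: A Unified Perspective of Pair Similarity Optimization

愤怒的可乐

Text matching, [papers], paper translation/notes, natural language processing, paper reading, artificial intelligence

Introduction: To understand the loss used in CoSENT, today we read Circle Loss: A Unified Perspective of Pair Similarity Optimization. For simplicity, the notes below are written in the voice of a translation, for example replacing "the authors" with "we". The paper takes the perspective of pair-similarity optimization in deep feature learning and aims to maximize the within-class similarity $s_p$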

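- [Paper notes] Multi-Task Learning as a Bargaining Game

xhyu61

Machine learning, study notes, paper notes, paper reading, artificial intelligence, deep learning

Abstract: This paper treats the gradient combination step in multi-task learning as a bargaining game, in which the tasks negotiate an agreed direction for the joint gradient update. Under certain conditions the problem has a unique solution (the Nash Bargaining Solution), which can serve as a principled approach to multi-task learning. The paper proposes Nash-MTL and derives theoretical convergence guarantees for it. 1 Introduction: Most MTL optimization algorithms follow a common scheme. Compute the gradients g of all tasks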

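- [Paper notes] LLaVA

心心喵

Paper notes, paper reading

I. Main work and experimental results of the LLaVA paper. Existing gap: most earlier work focused on modality alignment and image representation learning, and was not optimized for the chatbot setting (multi-turn dialogue, instruction understanding). Contribution: this work came after BLIP-2, so image understanding is not what LLaVA mainly aims to improve; LLaVA aims to improve the multimodal model's instruction-following ab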

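- [Paper notes] LLM pruning

心心喵

Paper notes, paper reading, pruning, algorithms

Attention Is All You Need But You Don't Need All Of It For Inference of Large Language Models. When pruning LLaMA 2, skipping the FFN and skipping full layers give similar results. Compared with skipping the FFN or full layers, skipping attention layers has a smaller impact. Skipping attention layers: when 7B/13B is pruned from 100% of its parameters to 66%, the average metric drops by only 1.7~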

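- [Paper notes] Training language models to follow instructions with human feedback, Part B

Ctrl+Alt+L

Large model paper collection, paper notes, paper reading, language models, artificial intelligence, natural language processing

Training language models to follow instructions with human feedback, Part B. A recap of the first-generation GPT-1: its design follows the paradigm of "unsupervised pre-training on massive unlabeled text + supervised fine-tuning on a small amount of labeled text"; the architecture is a stack of Transformer decoders (masked self-attention, residual connections, LayerNorm); adaptation to specific downstream tasks is done by adding a linear weight $W_y$ after the model's output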

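- [Paper notes] LAYN: a lightweight multi-scale attention YOLOv8 network for small-object detection

hhhhhhkkkyyy

Paper reading, object detection, YOLO

Background: Embedded devices need object detection algorithms, yet most mainstream detection frameworks currently lack specific improvements for small objects, so the paper proposes a lightweight multi-scale attention YOLOv8 algorithm for small-object detection. Why small-object detection accuracy is low: as the network deepens during training, detected objects tend to lose edge information, grayscale information, and so on. Less high-level semantic information is obtained, and noise in the image may mislead the network into learning incorrect features. The size of the receptive field mapped back to the original image: when the receptive field is relatively small, more spatial structure is preserved, but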

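- Laser SLAM (8): LeGO-LOAM paper notes

lonely-stone

slam, laser SLAM, paper reading

Paper title: LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain (a lightweight, ground-optimized LOAM for variable terrain). ABSTRACT: A lightweight, ground-optimized LOAM that estimates six-degree-of-freedom pose in real time on ground vehicles. The emphasis on ground vehicles is there because the method requires the lidar to be mounted horizontally, whereas LOAM and LIO-SA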

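- Paper tasting - AAAI2020 | Towards building a multilingual sememe knowledge base: sememe prediction for BabelNet Synsets...

开放知识图谱

Machine learning, artificial intelligence, knowledge graphs, natural language processing, deep learning

Notes compiled by: 潘锐, master's student at Tianjin University. Source: AAAI 2020. Link: https://arxiv.org/pdf/1912.01795.pdf Abstract: A sememe is defined as the minimum semantic unit of human languages. Sememe knowledge bases (KBs), which contain words annotated with sememes, have been applied successfully to many natural language processing tasks. However, existing sememe KBs are built on only a few languages, which hinders their wide use. To address this, the paper proposes to build, on top of the multilingual encyclopedic dictionary BabelNet, a unified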

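- [Paper notes] LLM datasets: LongData-Corpus

心心喵

Paper notes, server, ubuntu, linux

https://huggingface.co/datasets/yuyijiong/LongData-Corpus 1. For Hugging Face data, set up an SSH key on the development machine, then `cat` it, copy it, and add it in the Hugging Face settings. 2. The Chinese novel data is on a cloud drive. Download from Tsinghua Cloud: #!/bin/bash # Base URL base_url="https://cloud.tsinghua.edu.cn/d/0670fcb14d294c97b5cf/fi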

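- [Paper notes] eval-big-refactor lm_eval: use one GPU for every two tasks and make sure the port is not in use

心心喵

Paper notes, restful, backend

For 1.5B, running two tasks on one GPU during eval works. 7B+ runs out of memory (OOM) when evaluating belebele, so a different script is used for allocation. eval_fast.py: import subprocess import argparse import os import socket # parameter list task_name_list=["flores_mt_en_to_id","flores_mt_en_to_vi","flores_mt_en_to_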

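- [Paper notes] Separating the "Chirp" from the "Chat": Self-supervised Visual Grounding of Sound and Language

xhyu61

Machine learning, study notes, paper notes, paper reading

Abstract: The paper proposes DenseAV, a novel dual-encoder grounding architecture that learns high-resolution, semantically meaningful, audio-visually aligned features solely by watching videos. Without explicit localization supervision, DenseAV can discover the "meaning" of words and the "location" of sounds. It also discovers and distinguishes these two types of association automatically, without supervision. DenseAV's localization ability comes from a new multi-head feature aggregation operator that directly compares dense image and audio representations for contrastive learning. In contrast, many other systems that learn "global" audio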

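- Computer graphics paper notes

Jozky86

Computer graphics, computer graphics notes

Contents: PBD; XPBD; shape matching. PBD: [Nvidia FleX in depth] (1) Position Based Dynamics; The simplest PBD (position based dynamics) algorithm explained: paper principles and Taichi code. XPBD: XPBD-based physics simulation from start to finish: formula derivation + code + written explanation (fully self-made); [Close paper reading] XPBD: position based dynamics; XPBD paper walkthrough (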

- 计算机设计大赛 行人重识别(person reid) - 机器视觉 深度学习 opencv python

iuerfee

python

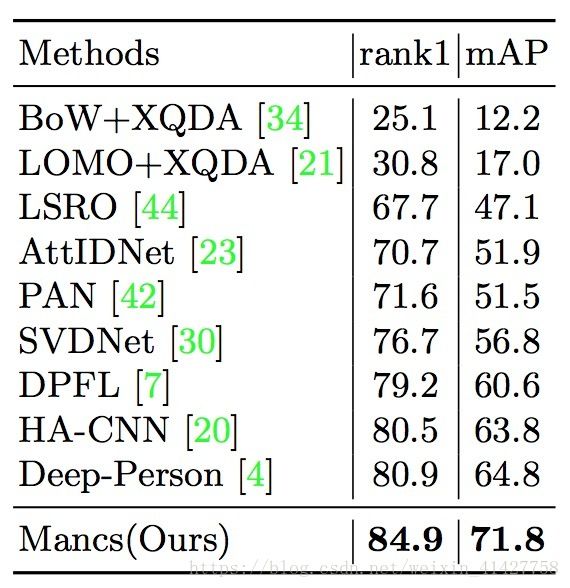

文章目录0前言1技术背景2技术介绍3重识别技术实现3.1数据集3.2PersonREID3.2.1算法原理3.2.2算法流程图4实现效果5部分代码6最后0前言优质竞赛项目系列,今天要分享的是深度学习行人重识别(personreid)系统该项目较为新颖,适合作为竞赛课题方向,学长非常推荐!学长这里给一个题目综合评分(每项满分5分)难度系数:3分工作量:3分创新点:5分更多资料,项目分享:https:

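- [Visual 3D reconstruction] [Paper notes] Deblurring 3D Gaussian Splatting

CS_Zero

Paper reading

Deblurring 3D Gaussian Splatting: judging from the demo, it is finer than 3D Gaussian Splatting and reproduces the details of scene objects more faithfully. [Project page](https://benhenryl.github.io/Deblurring-3D-Gaussian-Splatting/) Background: volumetric rendering-based neural fields: NeRF. Rasterization rendering: 3D-GS. Rasterization vs. vol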

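- [Paper notes] Transformer-XL

心心喵

Paper notes, transformer, deep learning, artificial intelligence

The Transformer-XL proposed in this paper mainly targets the fixed-length context that limits the Transformer on long-range dependency problems; for example, the Transformer used in Bert has a maximum context of 512 (this is due to limits on computing resources rather than the positional encoding, since it uses absolute sinusoidal positional encoding). Transformer-XL can learn dependencies beyond a fixed length without disrupting temporal coherence. It consists of a segment-level recurrence mechanism and a novel positional encoding scheme. The method not only captures longer-term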

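- SimpleShot: Revisiting Nearest-Neighbor Classification for Few-Shot Learning, paper notes

头柱碳只狼

Few-shot learning

Preface: Most current few-shot learners first extract image features with a convolutional network and then combine meta-learning with a nearest-neighbor classifier for image recognition. This paper explores whether few-shot learning can be handled well with a nearest-neighbor classifier alone, without meta-learning. It finds that applying simple feature transformations to the image features before nearest-neighbor classification also yields good few-shot results. For example, a nearest-neighbor classifier on DenseNet features, combined with mean subtraction (mean subtra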

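- Notes on multimodal papers

靖待

Large models, artificial intelligence, paper reading

(CLIP) Learning Transferable Visual Models From Natural Language Supervision. OpenAI, February 2021, 48 pages. PDF, CODE. CLIP (Contrastive Language-Image Pre-Training). Introduction: It performs better than ImageNet models and is more computationally efficient, especially zero-sho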

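- [Paper notes · PFM] Lag-Llama: Towards Foundation Models for Time Series Forecasting

lokol.

Paper notes, paper reading, llama

Lag-Llama: Towards Foundation Models for Time Series Forecasting. Abstract: The paper proposes Lag-Llama, a general-purpose univariate probabilistic time series forecasting model trained on a large collection of time series data. The model achieves good out-of-distribution generalization. The model uses smoothly broken power-laws. Introduction: Current work mostly trains models on data from the same domain. Existing large-scale general-purpose models, on large-scale diverse data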

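- [Paper notes] Unsupervised Learning of Video Representations using LSTMs

奶茶不加糖え

lstm, deep learning, natural language processing

Abstract (translated): We use Long Short Term Memory (LSTM) networks to learn representations of video sequences. Our model uses an LSTM encoder to map an input sequence to a fixed-length representation vector. We then decode this vector with one or more LSTM decoders to perform different tasks, such as reconstructing the input sequence or predicting the future sequence. We experiment with two kinds of input sequences: raw image patches and high-level representation vectors extracted by a pretrained convolutional network. We explore different design choices, such as the decoder LST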

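- Person re-identification

NineDays66

Artificial intelligence

Of the information acquired by the human perceptual system, visual information accounts for roughly 80% to 85%. Person re-identification is a technology that has emerged in recent years in intelligent video analysis. It belongs to image processing and analysis in complex video environments, is a core task in many surveillance and security applications, and has received growing attention in computer vision. Let's take a closer look at person re-identification (ReID). 1. What is person re-identification? Person re-identification (Person Re-identificat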

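- MOSSE algorithm: paper notes and code walkthrough

five days

Computer vision, deep learning, machine learning

Paper: Visual Object Tracking using Adaptive Correlation Filters. Code: GitHub. 1. Paper idea: use a filter in a correlation operation to find the position of maximum response, which is the center of the tracked target, and keep updating the target box and the filter. 2. Tracking strategy: as illustrated, train the filter from the target box marked in the initial frame, with the maximum response at the center of the box; when moving to the next frame, compute the correlation with the filter to obtain the maximum response, and from it obtain the next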

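- Attention Is All You Need, paper notes

xiaoyan_lu

Paper notes, paper reading

What problem does the paper solve? It proposes a new, simple network architecture, the Transformer, based solely on attention mechanisms and dispensing entirely with recurrence and convolution, which greatly speeds up neural network training. We propose a new simple network architecture, the Transformer, based solely on attention mechanisms, dispensing with recurrence and convolution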

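- Paper notes: similarity-aware multimodal fake news detection

图学习的小张

Paper notes, paper reading, python

Reading notes compiled for the paper RecSys2020 Progressive Layered Extraction: A Novel Multi-Task Learning Model for Personalized Recommendations. Background, model, experiments. Paper link: SAFE. Background: Previously, little attention was paid to exploiting the relationship (similarity) between the textual and visual information in news articles. This similarity helps identify fake news; for example, fake news may try to use irrelevant ima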

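- [Paper summary] Notes on papers applying deep learning to agriculture, part 12

落痕的寒假

Paper summary, deep learning, paper reading, artificial intelligence

Contents: 1. 3D-ZeF: A 3D Zebrafish Tracking Benchmark Dataset (CVPR, 2020): abstract, background, related work, proposed dataset, methods and results, personal summary. 2. Automated flower classification over a large number of classes (Computer Vision, Graphics & Image Processing, 2008): abstract, background, segmentation and classification, datasets and exp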

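- Paper notes on LINE: Large-scale Information Network Embedding

小弦弦喵喵喵

Original paper: LINE: Large-scale Information Network Embedding. The paper proposes a new network embedding model, LINE, which can handle large-scale networks of many kinds, for example directed, undirected, weighted, and unweighted graphs. The paper points out that network embedding needs to preserve both local structure and global structure, corresponding respectively to first-order proximi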

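- Beats every NeRF! A simple primer on 3D Gaussian Splatting

Ci_ci 17

3d, python

A beginner's paper notes. Notes on 3D Gaussian Splatting: introduction, related work, preliminaries, Gaussian splatting, how 3D Gaussian splatting works, overview. Notes on 3D Gaussian Splatting: I always come to csdn looking for a lifeline, and this is the first time I am posting here. It really is a decent site for notes, with syncing and saving, haha. Evernote and OneNote cannot compete. I am two months into the first year of my master's, my advisor is hands-off, and the research direction I was given is also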

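- Cross-modality person re-identification: Cross-Modality Person Re-Identification with Generative Adversarial Training, study notes

深度学不会习

Deep learning

Contents: abstract, method, cmGAN, Generator, Discriminator, Training Algorithm, Experiments. Paper link: https://www.ijcai.org/Proceedings/2018/0094.pdf Abstract: (1) Proposes a new cross-modality generative adversarial network (cmGAN). To address the lack of discriminative information, a discriminator based on generative adversarial training is designed to learn discriminative feature representations from the different modalities. (2) To address the large-sc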

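- Cross-modality person re-identification: Discover Cross-Modality Nuances for Visible-Infrared Person Re-Identification, study notes

深度学不会习

Study

Contents: abstract, network architecture, method details, MAM, PAM, modality classification loss, shared-feature ID loss, center cluster loss, total loss, experiments, attention pattern visualization, distribution results. Original paper: Discover Cross-Modality Nuances for Visible-Infrared Person Re-Identification. Abstract: Proposes a joint Modality and Pattern Alignment Network (MPANet) to discover cross-modality nuances in different patterns for visible-infrared person Re-ID; it introduces a modality alleviation module and a pattern alignment module to jointly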

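- Cross-modality person re-identification: Dynamic Dual-Attentive Aggregation Learning for Visible-Infrared Person Re-Identification, study notes

深度学不会习

Study

Contents: abstract, method, intra-modality weighted-part aggregation (IWPA), cross-modality graph structured attention (CGSA), Graph Construction, Graph Attention, dynamic dual aggregation learning, experiments. Paper link: Dynamic Dual-Attentive Aggregation Learning for Visible-Infrared Person Re-Identification. Abstract: By mining both intra-modality part-level and cross-modality graph-level contextual cues for VI-ReID, proposes a new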

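- Java threads: differences and connections between Thread and Runnable

zx_code

java, jvm, thread, multithreading, Runnable

As we all know, Java offers two ways to create a thread: extending Thread, or implementing Runnable.

To simulate selling tickets at ticket windows, the first example extends Thread. The code is as follows: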

package thread;

public class ThreadTest {

public static void main(String[] args) {

Thread1 t1 = new Thread1(

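- [Repost] A comparison of JSON and XML

丁_新

json, xml

1. Definitions

(1). Definition of XML

Extensible Markup Language (XML) is a markup language used to mark up electronic documents so that they have structure. It can be used to mark up data and define data types, and it is a source language that lets users define their own markup languages. XML uses a DTD (document type definition) to organize data; its format is uniform, it works across platforms and languages, and it has long been a recognized industry standard.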

XML是标

- Five basic sorting algorithms implemented in C++

CrazyMizzz

C++, c, algorithms

#include<iostream>

using namespace std;

// Helper function: swap the values of two numbers

template<class T>

void mySwap(T &x, T &y){

T temp = x;

x = y;

y = temp;

}

const int size = 10;

//一、用直接插入排

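- My app

麦田的设计者

My software, music, entertainment, relaxation

This is an app I wrote over three months. It is adapted from the CCTV show 开门大吉: it plays a tune and you guess the song title. 1. The phone owner downloads the app from an Android app market, grants the permissions, and installs it on the phone. 2. When a guest first enters, a guide page prompts the user to register (the app plays background music automatically at the same time). 3. After logging in to the home page, the user sees five modules. a. Tapping 不胫而走 shows some news from the 开门大吉 home page; tapping an item opens the news details. b.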

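- The Linux awk command explained

被触发

linux awk

awk is a line processor: compared with screen-oriented processing, it does not run out of memory or slow down on very large files, and it is commonly used to format text.

How awk processes input: it handles each line in turn and then prints the output.

Command form:

awk [-F|-f|-v] 'BEGIN{} //{command1; command2} END{}' file

[-F|-f|-v] are the main options: -F sets the field separator, -f runs a script file, and -v defines a variable (var=val)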
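The BEGIN / pattern / END structure of the command form above can be mirrored in ordinary code. The following is only a rough Java sketch of that processing model, not of awk itself: the class name `AwkModelSketch`, the method `run`, and the sample command in the comment are made up for illustration, and unlike awk it takes the lines as an in-memory list instead of streaming a file.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical illustration of awk's processing model. It roughly mirrors:
//   awk -F: 'BEGIN{count=0} /bash/{count++; print $1} END{print count}' file
final class AwkModelSketch {

    static List<String> run(List<String> lines, String separator, String regex) {
        List<String> output = new ArrayList<>();
        Pattern pattern = Pattern.compile(regex);

        int count = 0;                                  // BEGIN{}: runs once, before any line

        for (String line : lines) {                     // main block: one line at a time
            if (!pattern.matcher(line).find()) {
                continue;                               // /regex/ filter: skip non-matching lines
            }
            // -F: split the line into fields on the literal separator
            String[] fields = line.split(Pattern.quote(separator), -1);
            output.add(fields[0]);                      // print $1
            count++;                                    // command2
        }

        output.add(String.valueOf(count));              // END{}: runs once, after the last line
        return output;
    }

    public static void main(String[] args) {
        List<String> passwd = List.of(
                "root:x:0:0:root:/root:/bin/bash",
                "daemon:x:1:1::/usr/sbin/nologin");
        run(passwd, ":", "bash").forEach(System.out::println);
    }
}
```

Because only one line is examined at a time, a streaming version of the same loop needs memory for a single line and the running state, which is the property the text attributes to awk on large files.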

- A comparison of programming languages

_wy_

Programming languages

Java Ruby PHP Areas of strength

- Editing CLOB-typed data in Oracle

知了ing

oracle clob

public void updateKpiStatus(String kpiStatus,String taskId){

Connection dbc=null;

Statement stmt=null;

PreparedStatement ps=null;

try {

dbc = new DBConn().getNewConnection();

//stmt = db

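- The Zookeeper distributed service framework: managing data in a distributed environment

矮蛋蛋

zookeeper

Original article:

http://www.ibm.com/developerworks/cn/opensource/os-cn-zookeeper/

Installation and configuration in detail

The Zookeeper described here is based on the stable release 3.2.2; the latest version is available from the official site, http://hadoop.apache.org/zookeeper/. Installing Zookeeper is very simple. The following covers it from two angles, standalone mode and cluster mode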

- Tomcat data sources

alafqq

tomcat

Database

JNDI (Java Naming and Directory Interface) is a set of APIs for accessing naming and directory services from Java applications.

Without JNDI, this is how we connect to the database:

Class.forName("com.mysql.jdbc.Driver");

conn

- Traversal methods

百合不是茶

Traversal

Traversal

在java的泛

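- Linux commands for viewing hardware information

bijian1013

linux

Linux commands for viewing hardware information

1. View CPU information:

cat /proc/cpuinfo

2. View memory:

free

3. View disks:

df

Viewing hardware information on Linux

1. lspci lists all PCI devices;

lspci - list all PCI devices: lists the PCI devices in the machine (sound card, graphics card, modem, network card, USB; devices integrated on the motherboard can also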

- Common ClassNotFoundExceptions in Java

bijian1013

java

1. java.lang.ClassNotFoundException: org.apache.commons.logging.LogFactory: add the commons-logging.jar package. 2. java.lang.ClassNotFoundException: javax.transaction.Synchronization

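- [Gson 5] Serializing and deserializing Date objects

bit1129

Deserialization

When serializing and deserializing date values, consider the following questions:

1. On serialization, what date format does the string produced from a Date object use?

2. On deserialization, turning a date string back into a Date object also depends on the date format.

3. Date A -> str -> Date B: are A and B equal according to equals()?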
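Question 3 can be explored without any JSON library. The sketch below does not use Gson and says nothing about Gson's defaults; it relies only on `java.text.SimpleDateFormat`, and the class name `DateRoundTrip` and the two patterns are assumptions chosen for illustration. It shows that whether A equals B depends on whether the string format keeps the milliseconds.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

// Hypothetical helper: Date A -> String -> Date B with a chosen pattern.
final class DateRoundTrip {

    static Date roundTrip(Date original, String pattern) {
        SimpleDateFormat format = new SimpleDateFormat(pattern, Locale.ROOT);
        format.setTimeZone(TimeZone.getTimeZone("UTC")); // fixed zone keeps the example deterministic
        String text = format.format(original);           // Date A -> str
        try {
            return format.parse(text);                    // str -> Date B
        } catch (ParseException e) {
            throw new IllegalStateException("could not parse " + text, e);
        }
    }

    public static void main(String[] args) {
        Date a = new Date(1_000_000_123L); // deliberately has a 123 ms component

        // Pattern without milliseconds: the 123 ms are dropped on the way out.
        Date secondsOnly = roundTrip(a, "yyyy-MM-dd HH:mm:ss");
        System.out.println("seconds pattern equal: " + a.equals(secondsOnly));

        // Pattern with milliseconds: nothing is lost.
        Date withMillis = roundTrip(a, "yyyy-MM-dd HH:mm:ss.SSS");
        System.out.println("millis pattern equal:  " + a.equals(withMillis));
    }
}
```

`Date.equals` compares the underlying millisecond value, so any format that rounds to seconds can make B differ from A even though both print the same text.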

Default serialization and deserialization

import com

- [Spark 86] Spark Streaming: DStream vs. InputDStream

bit1129

Stream

1. Class documentation for DStream:

/**

* A Discretized Stream (DStream), the basic abstraction in Spark Streaming, is a continuous

* sequence of RDDs (of the same type) representing a continuous st

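- Getting header information with nginx

ronin47

nginx header

1. Capture the entire Cookies content into a variable so that it can be referenced when needed, for example to record it in the log:

if ( $http_cookie ~* "(.*)$") {

set $all_cookie $1;

}

The variable $all_cookie now holds the cookie value and can be used in further processing.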

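- java-65. Given a number n, print in order from 1 to the largest n-digit decimal number. For example, given 3, print 1, 2, 3 and so on up to the largest 3-digit number, 999

bylijinnan

java

Based on http://blog.csdn.net/peasking_dd/article/details/6342984 found online,

I wrote a Java version:

public class Print_1_To_NDigit {

/**

* Q65. Given a number n, print in order from 1 to the largest n-digit decimal number. For example, given 3, print 1, 2, 3 and so on up to the largest 3-digit number, 999

* 1. Using strings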

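- Studying the Netty source: ReplayingDecoder

bylijinnan

java, netty

ReplayingDecoder is a subclass of FrameDecoder; if you are not familiar with FrameDecoder, see first

http://bylijinnan.iteye.com/blog/1982618

The API docs say that ReplayingDecoder simplifies things. For example:

When decoding, FrameDecoder has to check whether the data has been fully received: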

public class IntegerH

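- Filtering special characters in JavaScript

cngolon

js special characters, js special character filtering

1. Use a regular expression in JS to filter special characters and check whether any input field contains special symbols: function stripscript(s) { var pattern = new RegExp("[`~!@#$^&*()=|{}':;',\\[\\].<>/?~!@#¥……&*()——|{}【】‘;:”“'。,、?]"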

- Running SQL queries with Hibernate

ctrain

Hibernate

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import org.hibernate.Hibernate;

import org.hibernate.SQLQuery;

import org.hibernate.Session;

import org.hibernate.Transa

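- Switching users to run commands in a Linux shell script

daizj

linux, shell, commands, switching users

When writing shell scripts you often need to run commands as another user. A quick note on how:

1. Run a single command: su - user -c "command"

For example, the following command creates the test123 directory under /data as the user test: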

[root@slave19 /data]# su - test -c "mkdir /data/test123"

- Good code needs only one return statement

dcj3sjt126com

return

Stop writing code like this: public boolean foo() { if (true) { return true; } else { return false;

- Learning Android animation effects

dcj3sjt126com

android

1. Alpha (transparency) animation

Method 1: in code

public View onCreateView(LayoutInflater inflater, ViewGroup container, Bundle savedInstanceState)

{

View rootView = inflater.inflate(R.layout.fragment_main, container, fals

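- Linux review notes: bash shell (4) pipe commands

eksliang

Summary of linux pipe commands, linux pipe commands, common linux pipe commands

Please credit the source when reposting:

http://eksliang.iteye.com/blog/2105461

After a bash command finishes, it usually produces output. How do we operate on that output? That is what the pipe operator '|' is for.

The paragraph above briefly describes what pipes are for. So what is a pipe command?

Answer: here is a classic sentence worth remembering. What is a pipe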

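- Short-press, double-click, and long-press events for custom keys on Android

gqdy365

android

We ran into the following problem in a project:

Because keys were redefined or added at the lower layers of the system, the app layer has to break key events (keyevent) down itself. Mimicking what the Android system does, we split keyevent into:

1. Single click: an ordinary single click of a key;

2. Double click: the same key clicked twice within 500 ms;

3. Long press: the same key held for more than 1000 ms (the system's own long-press threshold is 500 ms);

4. Key combination: two or more keys held down at the same time;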
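The first three categories above can be told apart from press and release timestamps alone. The following is a minimal plain-Java sketch under stated assumptions, not the original author's code and not an Android API: the class `KeyPressClassifier`, its `Gesture` enum, and `onKeyReleased` are hypothetical names, the 500 ms window is measured from the release of the first click to the press of the second, and key combinations are left out.

```java
// Hypothetical classifier driven by key-down / key-up timestamps in milliseconds.
final class KeyPressClassifier {

    enum Gesture { SINGLE_CLICK, DOUBLE_CLICK, LONG_PRESS }

    static final long DOUBLE_CLICK_WINDOW_MS = 500;   // threshold taken from the text
    static final long LONG_PRESS_THRESHOLD_MS = 1000; // threshold taken from the text

    private boolean hasPreviousClick = false;
    private int previousKeyCode;
    private long previousUpTimeMs;

    // Call once per completed press, when the key is released.
    Gesture onKeyReleased(int keyCode, long downTimeMs, long upTimeMs) {
        // Held longer than the threshold: long press, and it never pairs into a double click.
        if (upTimeMs - downTimeMs > LONG_PRESS_THRESHOLD_MS) {
            hasPreviousClick = false;
            return Gesture.LONG_PRESS;
        }

        // Same key pressed again soon enough after the previous short click.
        boolean completesDoubleClick = hasPreviousClick
                && keyCode == previousKeyCode
                && downTimeMs - previousUpTimeMs <= DOUBLE_CLICK_WINDOW_MS;
        if (completesDoubleClick) {
            hasPreviousClick = false; // a third click starts a new sequence
            return Gesture.DOUBLE_CLICK;
        }

        // Otherwise remember this click as a possible first half of a double click.
        hasPreviousClick = true;
        previousKeyCode = keyCode;
        previousUpTimeMs = upTimeMs;
        return Gesture.SINGLE_CLICK;
    }

    public static void main(String[] args) {
        KeyPressClassifier classifier = new KeyPressClassifier();
        System.out.println(classifier.onKeyReleased(24, 0, 100));
        System.out.println(classifier.onKeyReleased(24, 300, 380));
        System.out.println(classifier.onKeyReleased(24, 2000, 3500));
    }
}
```

One simplification to be aware of: this version reports the first click as a single click immediately and then reports a double click for the second one. A real handler would usually delay the single-click callback until the 500 ms window has expired, so that a double click does not also trigger the single-click action.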

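- Getting the names of subdirectories under the site root in ASP.NET

hvt

.net, C#, asp.net, hovertree, Web Forms

Create an .aspx file (Web Forms) in Visual Studio, for example hovertree.aspx, and add a ListBox to the page as follows:

<asp:ListBox runat="server" ID="lbKeleyiFolder" />

The code that then shows the root directory's subfolders on the page is: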

string[] m_sub

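- Common shortcuts every Eclipse programmer should know

justjavac

java, eclipse, shortcuts, ide

To judge someone's programming level, see whether they use the keyboard or the mouse more. The keyboard is for typing code (and comments, of course) and for fluent use of shortcuts. Someone once commented on Douban about The Productive Programmer: "the lazier you are, the more free time you earn." I previously compiled a programmer's book list, with the same aim of making programmers lazier through reading. Programmers are a special group: some can be so lazy that they hand everything over to machines, while others can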

- C++ programming notes

lx.asymmetric

C++, notes

I changed the formatting so the text looks nicer...

The && operator:

#include<iostream>

using namespace std;

int main(){

int a=-1,b=4,k;

k=(++a<0)&&!(b--

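- A study of the Linux standard I/O buffering mechanism

音频数据

linux

I. What is buffered I/O? Buffered I/O is also called standard I/O, and it is the default for I/O operations in most file systems. In Linux's buffered I/O mechanism, the operating system caches I/O data in the file system's page cache; that is, data is first copied into a kernel buffer and only then copied from the kernel buffer into the application's address space. 1. Buffered I/O has the following advantages: A. Buffered I/O uses the operating system's kernel buffer,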

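- Musings on life

暗黑小菠萝

Life

I actually registered this account a while ago, but only recently decided to start posting things of my own. It has been a year since I graduated. It would be false to say I have made no progress, but probably only I know how much.

There were 12 women in my class at graduation, and only two of us, including me, ended up in software development. (PS: I am not saying testing is bad.) Back then I gave up job hunting entirely to prepare for the postgraduate entrance exam, and then failed it; I think that is just my excuse. Only then did I wonder why I had not studied the technology properly in college and built up practical skills, which made the later job search harder. I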

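- I think POJO is a mistaken concept

windshome

java, POJO, programming, J2EE, design

This post is not the product of much careful thought; it is just a personal impression of the moment. In terms of personal style, I lean toward simple, plain design and development; in terms of methodology, I favor top-down design; in terms of goals, I put quality first and prefer more conservative, reliable ideas and methods.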
