OpenStack Stein Installation Guide

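Table of contents

- 1. Introduction to OpenStack
- 1.1. Cloud computing models
- 2. What projects make up OpenStack?
- 2.1. How OpenStack creates an instance
- 2.2. Overall diagram
- 3. Building the OpenStack deployment
- 4. Environment setup
- 4.1. Configure the SQL database
- 4.2. Configure Memcached
- 4.3. Install RabbitMQ
- 4.4. Configure haproxy + keepalived
- 5. Configure the keystone identity service
- 5.1. Database: 106
- 5.2. Controller: 101
- 6. Configure the glance service
- 7. Configure the placement service
- 7.1. Database
- 7.2. Controller
- 8. Configure nova
- 8.1. Configure the nova controller node
- 8.1.1. Install and configure the nova controller node
- 8.1.2. On the controller
- 8.2. Configure the nova compute node
- 8.2.1. Controller
- 9. Configure the neutron service
- 9.1. Configure the neutron controller node
- 9.2. Configure the neutron compute node
- 10. Create an instance
- 10.1. Controller
- 10.2. Create a flavor
- 11. Configure the Dashboard service
- 11.1. Controller
- 12. OpenStack high availability (optional)
- 12.1. NFS
- 12.2. Mount NFS on the controller
- 12.3. haproxy high availability
- 12.4. Controller high availability
- 12.5. Quickly adding compute nodes
- 13. Configure cinder (block storage service)
- 13.1. Configure the cinder controller node
- 13.2. Storage server
- 13.3. NFS as the OpenStack storage backend
- 13.4. (Optional) Configure the backup service
- 14. Implementing VPC self-service networks
- 14.1. Controller configuration
- 14.2. Compute node configuration
- 14.3. Create a self-service network
- 15. Implementing an internal/external network layout
- 15.1. Adding NICs to the virtual machines
- 15.2. Controller node configuration
- 15.3. Compute node configuration
- 15.4. Create the networks and verify
- 16. Building OpenStack images
- What virtual machine images are there?
- 16.1. Network environment preparation
- 16.2. Building a CentOS 7.2 image
- Floating IP allocation
- Install httpd and test access
- 17. OpenStack enterprise use cases
- 17.1. Quota configuration
- 17.1.1. Changing project quotas in the web UI
- 17.1.2. Changing quotas through configuration files
- 17.2. Changing an instance's IP address
- 17.3. Instance migration (resizing an instance)
- 17.4. Host aggregates (creating a VM with a specified IP)
- 17.5. OpenStack tuning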

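1. Introduction to OpenStack

OpenStack is a collection of open-source tools (projects) that use pooled virtual resources to build and manage private and public clouds. Six of the projects handle the core cloud services: compute, networking, storage, identity, and images. More than a dozen optional projects can be bundled with them to build a custom, deployable cloud architecture.

1.1. Cloud computing models

(1) IaaS, Infrastructure as a Service (the model I am personally most used to): users obtain virtual machines, storage, and networks over the network and then operate those resources as they need.

(2) PaaS, Platform as a Service: a software development platform is offered as a service, for example an Eclipse/Java programming platform, with the provider supplying the programming interfaces and the runtime platform.

(3) SaaS, Software as a Service: software is delivered to users over the network, for example web-based email, HR systems, order management systems, and customer relationship systems. Users do not buy the software; they rent web-based software from the provider to run their business.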

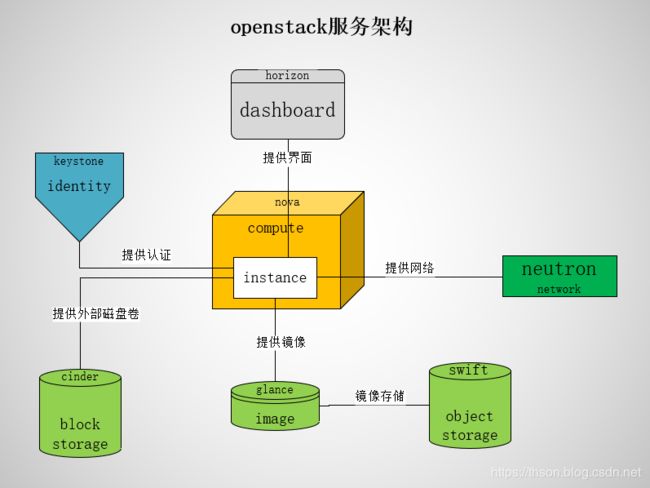

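2. What projects make up OpenStack?

The OpenStack architecture is made up of many open-source projects. Six stable core services handle compute, networking, storage, identity, and images, and more than a dozen optional services of varying maturity are available as well. The six core services form the base infrastructure; the remaining projects cover the dashboard, orchestration, bare-metal provisioning, messaging, containers, and overall management.

- keystone: authenticates and authorizes all OpenStack services. It is also the endpoint catalog for all services.

- glance: stores and retrieves virtual machine disk images from multiple locations.

- nova: a complete tool for managing and accessing OpenStack compute resources; it handles scheduling, creation, and deletion.

- neutron: connects networks across the other OpenStack services.

- dashboard: the web management interface.

- Swift: a highly fault-tolerant object storage service that stores and retrieves unstructured data objects through a RESTful API.

- Cinder: provides persistent block storage through a self-service API.

- Ceilometer: telemetry (metering data, used for billing).

- Heat: orchestration.

The components call and communicate with each other through the message queue and the database. Each project has its own characteristics, and a do-everything architecture does not suit every user. For example, Glance was not really used in the earliest A and B releases, and Nova could run without an image service. A component like Glance only becomes necessary once the cloud is large enough to need many images managed.

OpenStack logical architecture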

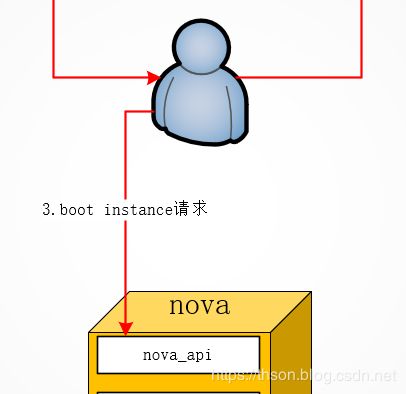

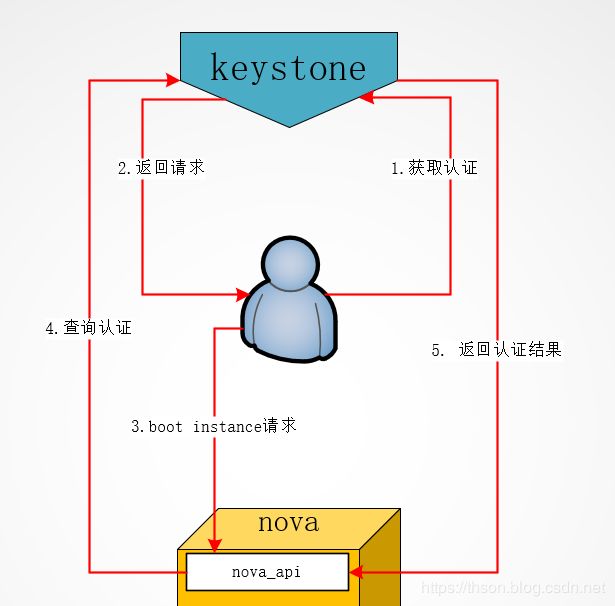

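2.1. How OpenStack creates an instance

- The user logs in through the dashboard or the CLI and requests authentication from keystone through the RESTful API.

- keystone authenticates the request, generates an auth-token, and returns it to the requester.

- The client then sends a boot-instance request, carrying the auth-token, to nova-api through the RESTful API.

- nova-api accepts the request and asks keystone to check that the token and its user are valid.

- keystone checks whether the token is valid and returns the result to nova-api.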

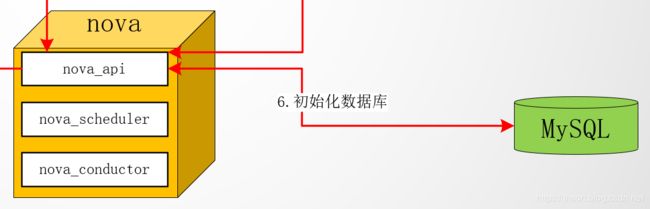

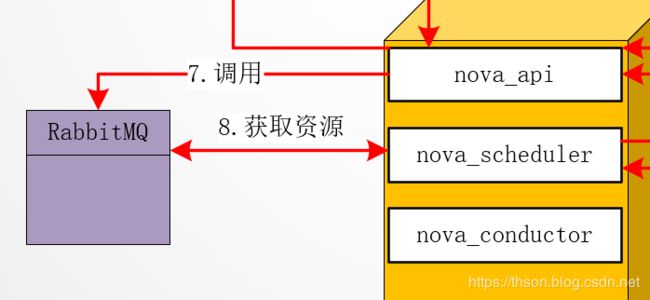

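- nova-api uses rabbitmq to ask nova-scheduler whether a host (compute node) has the resources to create the virtual machine.

- The nova-scheduler process listens on the message queue and picks up the request from nova-api.

- nova-scheduler queries the nova database for the state of the compute resources and uses its scheduling algorithm to choose a host that meets the virtual machine's requirements.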

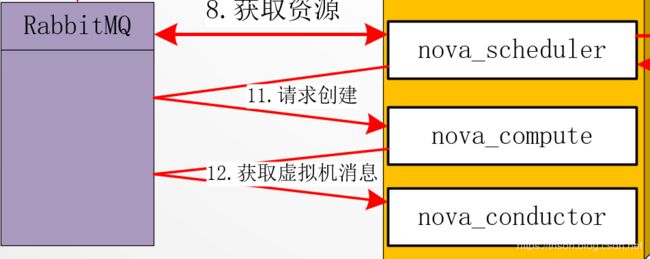

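- nova-scheduler sends the create-virtual-machine request to nova-compute via RPC.

- nova-compute picks up the create request from its message queue.

- nova-compute asks nova-conductor via RPC for the virtual machine's information (for example the flavor).

- nova-conductor picks up the nova-compute request from the message queue.

- nova-conductor looks up the virtual machine's information based on the message.

- nova-conductor reads the virtual machine's information from the database.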

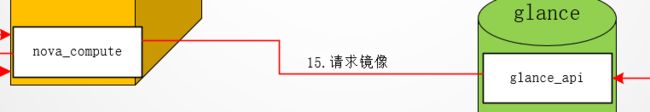

- nova-conductor puts the virtual machine's information on the message queue.

- nova-compute picks up the virtual machine's information from its message queue.

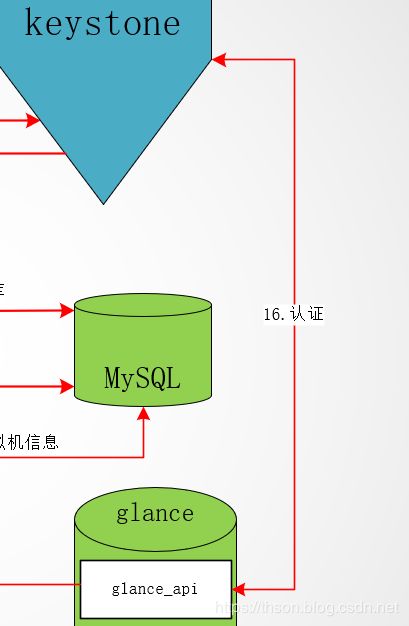

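- nova-compute asks glance-api for the image needed to create the virtual machine.

- glance-api asks keystone whether the token is valid and gets the result back.

- Once the token is validated, nova-compute receives the image information (URL).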

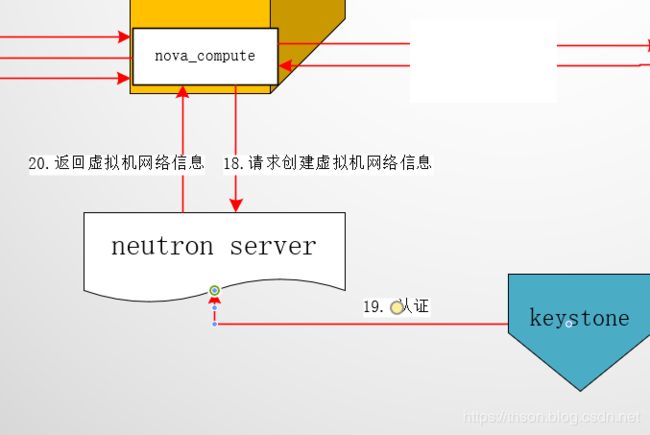

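- nova-compute asks neutron-server for the network information needed to create the virtual machine.

- neutron-server asks keystone whether the token is valid and gets the result back.

- Once the token is validated, nova-compute receives the virtual machine's network information.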

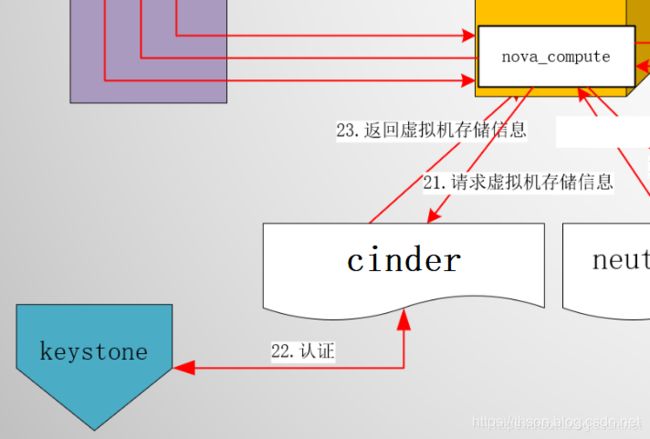

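- nova-compute asks cinder-api for the persistent storage information needed to create the virtual machine.

- cinder-api asks keystone whether the token is valid and gets the result back.

- Once the token is validated, nova-compute receives the virtual machine's persistent storage information.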

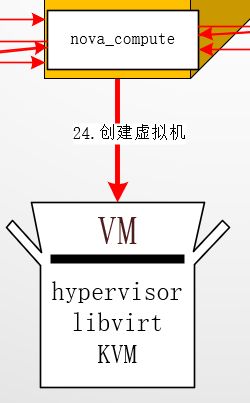

- nova-compute uses the instance information to call the configured virtualization driver, which creates the virtual machine.
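The first two steps of this flow (requesting a token from keystone) can be tried by hand. The sketch below builds the JSON body for a Keystone v3 password-auth request. The function name `keystone_auth_body` is made up for illustration, the domain name `default` follows this guide, and the body is built by plain string substitution, so it assumes the values contain no quotes or backslashes.

```shell
#!/usr/bin/env bash
# Build the JSON body for a Keystone v3 password-auth token request.
# Arguments: user name, password, project name.
# Assumption: values contain no characters that need JSON escaping.
keystone_auth_body() {
  local user=$1 password=$2 project=$3
  cat <<EOF
{"auth": {
  "identity": {"methods": ["password"],
    "password": {"user": {"name": "${user}", "domain": {"name": "default"}, "password": "${password}"}}},
  "scope": {"project": {"name": "${project}", "domain": {"name": "default"}}}
}}
EOF
}

# Usage against a running keystone (not executed here). The token is returned
# in the X-Subject-Token response header:
# curl -si -H "Content-Type: application/json" \
#   -d "$(keystone_auth_body admin 123 admin)" \
#   http://openstackvip.com:5000/v3/auth/tokens | grep -i x-subject-token
```

The token from that header is what later requests carry in the `X-Auth-Token` header when they talk to nova-api and the other services.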

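2.2. Overall diagram

3. Building the OpenStack deployment

1. Prepare the environment

2. Configure the keystone service

3. Configure the glance service

4. Configure the placement service

5. Configure the nova controller node

6. Configure the nova compute node

7. Configure the neutron controller node

8. Configure the neutron compute node

9. Create an instance

10. Configure the dashboard service

Numbers in the diagram stand for the last octet of the IP address; for example, 10 means 192.168.99.10.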

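4. Environment setup

- OS:

Compute nodes: CentOS 7.2.1511

Other nodes: CentOS 7.6.1810

- Prepare the yum repository:

/etc/yum.repos.d/openstack.repo

yum install centos-release-openstack-stein

- Install the OpenStack client and the OpenStack SELinux policy package (on the controller and compute nodes only; the other nodes do not need them)

yum install python-openstackclient openstack-selinux

- Set the hostname

hostnamectl set-hostname <hostname>

- Synchronize the time

cp -f /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

ntpdate time3.aliyun.com && hwclock -w

- Configure hosts

echo "192.168.99.100 openstackvip.com" >> /etc/hosts

Use your own VIP and domain name here.

- If you are bonding NICs, do this step; otherwise skip it.

Bond configuration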

cd /etc/sysconfig/network-scripts/

vim ifcfg-bond0

BOOTPROTO=static

NAME=bond0

DEVICE=bond0

ONBOOT=yes

BONDING_MASTER=yes

BONDING_OPTS="mode=1 miimon=100" # bonding mode 1 (active-backup); check link state every 100 ms

IPADDR=192.168.99.101

NETMASK=255.255.255.0

GATEWAY=192.168.99.2

DNS1=202.106.0.20

eth0 configuration:

vim ifcfg-eth0

BOOTPROTO=static

NAME=eth0

DEVICE=eth0

ONBOOT=yes

NM_CONTROLLED=no

MASTER=bond0

USERCTL=no

SLAVE=yes

eth1 configuration:

vim ifcfg-eth1

BOOTPROTO=static

NAME=eth1

DEVICE=eth1

ONBOOT=yes

NM_CONTROLLED=no

MASTER=bond0

USERCTL=no

SLAVE=yes

4.1. Configure the SQL database

- Run on the database node

- Install the packages

yum -y install mariadb mariadb-server

- Configure my.cnf

vim /etc/my.cnf.d/openstack.cnf

[mysqld]

bind-address = 192.168.99.106

default-storage-engine = innodb

innodb_file_per_table = on

max_connections = 4096

collation-server = utf8_general_ci

character-set-server = utf8

- Start the database and enable it at boot

systemctl enable mariadb.service

systemctl restart mariadb.service

- Secure the database service by running the script

mysql_secure_installation

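4.2. Configure Memcached

- Run on the database node

- Install the packages:

yum -y install memcached python-memcached

- Edit the configuration file

Configure the service to listen on this node's management IP address so that the other nodes can reach it over the network:

vim /etc/sysconfig/memcached

Replace the OPTIONS line with:

OPTIONS="-l 192.168.99.106"

- Start the Memcached service and configure it to start at boot: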

systemctl enable memcached.service

systemctl restart memcached.service

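4.3. Install RabbitMQ

- Run on the database node

- Install

yum -y install rabbitmq-server

- Start the service (AMQP listens on port 5672; the web management UI enabled below uses 15672)

systemctl enable rabbitmq-server

systemctl restart rabbitmq-server

- Add a user and password

rabbitmqctl add_user openstack 123

Grant permissions

rabbitmqctl set_permissions openstack ".*" ".*" ".*"

- Enable the web management plugin

rabbitmq-plugins enable rabbitmq_management

- List the plugins

rabbitmq-plugins list

- The web UI is on port 15672; the default username and password are both guest. Note that by default RabbitMQ only allows guest to log in from localhost.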

4.4. Configure haproxy + keepalived

- Run on the haproxy node

- Install keepalived and haproxy

yum -y install keepalived haproxy

- Configure keepalived on the master

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id ha_1

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_iptables

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.99.100 dev eth0 label eth0:1

}

}

- Start

systemctl restart keepalived

systemctl enable keepalived

- haproxy configuration

vim /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 180s

timeout queue 10m

timeout connect 180s

timeout client 10m

timeout server 10m

timeout http-keep-alive 180s

timeout check 10s

maxconn 3000

listen stats

mode http

bind :9999

stats enable

log global

stats uri /haproxy-status

stats auth admin:123

listen dashboard

bind :80

mode http

balance source

server dashboard 192.168.99.106:80 check inter 2000 fall 3 rise 5

listen mysql

bind :3306

mode tcp

balance source

server mysql 192.168.99.106:3306 check inter 2000 fall 3 rise 5

listen memcached

bind :11211

mode tcp

balance source

server memcached 192.168.99.106:11211 check inter 2000 fall 3 rise 5

listen rabbit

bind :5672

mode tcp

server rabbit 192.168.99.106:5672 check inter 2000 fall 3 rise 5

listen rabbit_web

bind :15672

mode http

server rabbit_web 192.168.99.106:15672 check inter 2000 fall 3 rise 5

listen keystone

bind :5000

mode tcp

server keystone 192.168.99.101:5000 check inter 2000 fall 3 rise 5

listen glance

bind :9292

mode tcp

server glance 192.168.99.101:9292 check inter 2000 fall 3 rise 5

listen placement

bind :8778

mode tcp

server placement 192.168.99.101:8778 check inter 2000 fall 3 rise 5

listen neutron

bind :9696

mode tcp

server neutron 192.168.99.101:9696 check inter 2000 fall 3 rise 5

listen nova

bind :8774

mode tcp

server nova 192.168.99.101:8774 check inter 2000 fall 3 rise 5

listen VNC

bind :6080

mode tcp

server VNC 192.168.99.101:6080 check inter 2000 fall 3 rise 5

- Start

systemctl restart haproxy

systemctl enable haproxy

Check the listening ports

ss -tnl

# Output

State Recv-Q Send-Q Local Address:Port Peer Address:Port

LISTEN 0 128 *:5000 *:*

LISTEN 0 128 *:5672 *:*

LISTEN 0 128 *:8778 *:*

LISTEN 0 128 *:3306 *:*

LISTEN 0 128 *:11211 *:*

LISTEN 0 128 *:9292 *:*

LISTEN 0 128 *:9999 *:*

LISTEN 0 128 *:80 *:*

LISTEN 0 128 *:22 *:*

LISTEN 0 128 *:15672 *:*

LISTEN 0 100 127.0.0.1:25 *:*

LISTEN 0 128 *:6080 *:*

LISTEN 0 128 *:9696 *:*

LISTEN 0 128 *:8774 *:*

LISTEN 0 128 :::22 :::*

LISTEN 0 100 ::1:25 :::*
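Reading the listing by eye is error-prone with this many listeners. The following sketch compares `ss -tnl` output against a list of expected ports; `missing_ports` is a hypothetical helper name, and the port list in the usage line is taken from the haproxy configuration above.

```shell
#!/usr/bin/env bash
# Read `ss -tnl` output on stdin and print every expected port (given as
# arguments) that has no listener. Returns non-zero if any port is missing.
missing_ports() {
  local listing port rc=0
  listing=$(cat)
  for port in "$@"; do
    # The Local Address column ends in ":PORT" followed by whitespace.
    if ! grep -Eq ":${port}[[:space:]]" <<<"$listing"; then
      echo "$port"
      rc=1
    fi
  done
  return $rc
}

# Usage on the haproxy node:
# ss -tnl | missing_ports 80 3306 5000 5672 6080 8774 8778 9292 9696 9999 11211 15672
```

No output and a zero exit status mean every listener from the configuration is up.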

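- Configure kernel parameters

echo "net.ipv4.ip_nonlocal_bind=1" >> /etc/sysctl.conf

Lets haproxy bind to the VIP at startup even when the VIP is not currently present on this node

echo "net.ipv4.ip_forward=1" >> /etc/sysctl.conf

Enables IP forwarding

sysctl -p

Applies the settings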
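Appending with `echo >>` adds a duplicate line every time the step is rerun. A minimal sketch of an idempotent alternative follows; `set_sysctl` is a hypothetical helper, not part of any package, and it relies on GNU `sed -i` as shipped with CentOS.

```shell
#!/usr/bin/env bash
# Set KEY=VALUE in a sysctl-style file, replacing an existing line for the
# same key instead of appending a duplicate.
# Arguments: key, value, file (defaults to /etc/sysctl.conf).
set_sysctl() {
  local key=$1 value=$2 file=${3:-/etc/sysctl.conf}
  touch "$file"
  # Escape dots so the key is matched literally in the regular expressions.
  local pattern=${key//./\\.}
  if grep -Eq "^[[:space:]]*${pattern}[[:space:]]*=" "$file"; then
    sed -i -E "s|^[[:space:]]*${pattern}[[:space:]]*=.*|${key}=${value}|" "$file"
  else
    echo "${key}=${value}" >> "$file"
  fi
}

# Usage (followed by `sysctl -p` to apply):
# set_sysctl net.ipv4.ip_nonlocal_bind 1
# set_sysctl net.ipv4.ip_forward 1
```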

5. Configure the keystone identity service

5.1. Database: 106

keystone database setup

[mysql]$ mysql -uroot -p123

MariaDB [(none)]> create database keystone;

MariaDB [(none)]> grant all on keystone.* to keystone@'%' identified by '123';

5.2. Controller: 101

- Install the client packages

yum -y install python2-PyMySQL mariadb

Test the connection from the controller

mysql -ukeystone -h 192.168.99.106 -p123

Add the host entry to /etc/hosts on the controller:

192.168.99.100 openstackvip.com

Configure keystone

- Install

yum -y install openstack-keystone httpd mod_wsgi python-memcached

- Generate a temporary token

openssl rand -hex 10

Output (keep it; it is needed below):

db148a2487000ad12b90

- Configure

/etc/keystone/keystone.conf

sed -i.bak -e '/^#/d' -e '/^$/d' /etc/keystone/keystone.conf

vim /etc/keystone/keystone.conf

[DEFAULT]

admin_token = db148a2487000ad12b90

[access_rules_config]

[application_credential]

[assignment]

[auth]

[cache]

[catalog]

[cors]

[credential]

[database]

connection = mysql+pymysql://keystone:123@openstackvip.com/keystone

[domain_config]

[endpoint_filter]

[endpoint_policy]

[eventlet_server]

[federation]

[fernet_receipts]

[fernet_tokens]

[healthcheck]

[identity]

[identity_mapping]

[jwt_tokens]

[ldap]

[memcache]

[oauth1]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[policy]

[profiler]

[receipt]

[resource]

[revoke]

[role]

[saml]

[security_compliance]

[shadow_users]

[signing]

[token]

provider = fernet

[tokenless_auth]

[trust]

[unified_limit]

[wsgi]

- Populate the Identity service database

su -s /bin/sh -c "keystone-manage db_sync" keystone

- Initialize the Fernet key repositories

keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone

keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

Verify:

ls /etc/keystone/fernet-keys/

1 0

- Configure the Apache configuration file

/etc/httpd/conf/httpd.conf

Add the line Servername controller:80

sed -i '1s#$#\nServername controller:80#' /etc/httpd/conf/httpd.conf

- Symlink the configuration file

ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

- Start the Apache HTTP service

systemctl enable httpd.service

systemctl restart httpd.service

- Set up the bootstrap admin environment

export OS_TOKEN=db148a2487000ad12b90

export OS_URL=http://openstackvip.com:5000/v3

export OS_IDENTITY_API_VERSION=3

Verify:

openstack domain list

The request you have made requires authentication. (HTTP 401) (Request-ID: req-03ea8186-0af9-4fa8-ba53-d043cd28e2c0)

If you get this error, check your token: the OS_TOKEN variable probably does not match the admin_token set in /etc/keystone/keystone.conf. Make the two identical and retry.

openstack domain list

Empty output is correct here, because no domain has been added yet.
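The 401 case above comes down to two strings not matching, which is quick to check before calling the API. A small sketch, assuming `admin_token` appears once in the file; both function names are made up for this example.

```shell
#!/usr/bin/env bash
# Print the admin_token value from a keystone.conf-style file.
conf_admin_token() {
  sed -n -E 's/^[[:space:]]*admin_token[[:space:]]*=[[:space:]]*//p' "$1" | head -n 1
}

# Succeed only if OS_TOKEN is set and equals the admin_token in the file.
# Argument: config file (defaults to /etc/keystone/keystone.conf).
token_matches() {
  local file=${1:-/etc/keystone/keystone.conf}
  [ -n "${OS_TOKEN:-}" ] && [ "$(conf_admin_token "$file")" = "$OS_TOKEN" ]
}

# Usage:
# token_matches && echo "token OK" || echo "OS_TOKEN differs from admin_token"
```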

- Create the domain the formal way

openstack domain create --description "exdomain" default

openstack project create --domain default \

--description "Admin Project" admin

- Create the admin user; set the password to 123

openstack user create --domain default --password-prompt admin

- Create the admin role

openstack role create admin

- Grant the admin role to the admin user

openstack role add --project admin --user admin admin

- Create the demo project

openstack project create --domain default --description "Demo project" demo

- Create a user for demo

openstack user create --domain default --password-prompt demo

- Create the user role (the roles user and admin now exist)

openstack role create user

- Grant the user role to the demo user

openstack role add --project demo --user demo user

- Create the service project

openstack project create --domain default --description "service project" service

- Create the glance user

openstack user create --domain default --password-prompt glance

- Add the glance user to the service project with the admin role

openstack role add --project service --user glance admin

- Create the nova user and grant it the admin role (the placement and neutron users are created later, in sections 7.2 and 9.1)

openstack user create --domain default --password-prompt nova

openstack role add --project service --user nova admin

- Create the keystone identity service

openstack service create --name keystone --description "OpenStack Identity" identity

- List the services

openstack service list

- Create the endpoints, using the VIP address

Public endpoint

openstack endpoint create --region RegionOne identity public http://openstackvip.com:5000/v3

Internal endpoint

openstack endpoint create --region RegionOne identity internal http://openstackvip.com:5000/v3

Admin endpoint

openstack endpoint create --region RegionOne identity admin http://openstackvip.com:5000/v3

- Test that keystone can authenticate

unset OS_TOKEN OS_URL

openstack --os-auth-url http://openstackvip.com:5000/v3 \

--os-project-domain-name default \

--os-user-domain-name default \

--os-project-name admin \

--os-username admin token issue

- Use scripts to set the environment variables

admin user script keystone_admin.sh

#!/bin/bash

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123

export OS_AUTH_URL=http://openstackvip.com:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

demo user script keystone_demo.sh

#!/bin/bash

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123

export OS_AUTH_URL=http://openstackvip.com:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
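Many later failures trace back to running `openstack` commands in a shell where one of these scripts was never sourced. The sketch below lists which of the variables from the scripts above are unset; `missing_os_vars` is a hypothetical helper, and it needs bash because it uses indirect expansion.

```shell
#!/usr/bin/env bash
# Print the names of required OpenStack client variables that are unset or
# empty. Returns non-zero if any are missing.
missing_os_vars() {
  local name rc=0
  for name in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
              OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
    # ${!name} is bash indirect expansion: the value of the variable named by $name.
    if [ -z "${!name:-}" ]; then
      echo "$name"
      rc=1
    fi
  done
  return $rc
}

# Usage:
# source keystone_admin.sh
# missing_os_vars || echo "source keystone_admin.sh or keystone_demo.sh first"
```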

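6. Configure the glance service

Glance is the OpenStack image service. It listens on port 9292, accepts REST API requests, and works with other modules to fetch, upload, and delete images.

Before a virtual machine can be created, its image must first be uploaded to glance.

glance-api handles image deletion, upload, and retrieval;

glance-registry (port 9191) talks to MySQL to store and fetch image metadata.

The glance database includes an image table and an image property table, which hold information such as image format and size.

The image store is a storage interface layer through which glance obtains images.

- Install glance on the controller

yum -y install openstack-glance

- Create the database and user on the database server

mysql -uroot -p123

MariaDB [(none)]> create database glance;

MariaDB [(none)]> grant all on glance.* to 'glance'@'%' identified by '123';

Verify that the glance user can connect

mysql -hopenstackvip.com -uglance -p123

- Edit the configuration file

/etc/glance/glance-api.conf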

sed -i -e '/^#/d' -e '/^$/d' /etc/glance/glance-api.conf

vim /etc/glance/glance-api.conf

Final result:

[DEFAULT]

[cinder]

[cors]

[database]

connection = mysql+pymysql://glance:123@openstackvip.com/glance

[file]

[glance.store.http.store]

[glance.store.rbd.store]

[glance.store.sheepdog.store]

[glance.store.swift.store]

[glance.store.vmware_datastore.store]

[glance_store]

stores = file,http

default_store = file

filesystem_store_datadir = /var/lib/glance/images

[image_format]

[keystone_authtoken]

auth_uri = http://openstackvip.com:5000

auth_url = http://openstackvip.com:5000

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123

[oslo_concurrency]

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[paste_deploy]

flavor = keystone

[profiler]

[store_type_location_strategy]

[task]

[taskflow_executor]

- Edit the configuration file

/etc/glance/glance-registry.conf

sed -i -e '/^#/d' -e '/^$/d' /etc/glance/glance-registry.conf

vim /etc/glance/glance-registry.conf

Final result:

[DEFAULT]

[database]

connection = mysql+pymysql://glance:123@openstackvip.com/glance

[keystone_authtoken]

auth_uri = http://openstackvip.com:5000

auth_url = http://openstackvip.com:5000

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = glance

password = 123

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_policy]

[paste_deploy]

flavor = keystone

[profiler]

- Initialize the glance database

su -s /bin/sh -c "glance-manage db_sync" glance

- Start glance and enable it at boot

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl restart openstack-glance-api.service openstack-glance-registry.service

- Register the glance service (load the environment variables first)

source keystone_admin.sh

- Create the glance service

openstack service create --name glance --description "OpenStack Image" image

- Create the public endpoint

openstack endpoint create --region RegionOne image public http://openstackvip.com:9292

- Create the internal endpoint

openstack endpoint create --region RegionOne image internal http://openstackvip.com:9292

- Create the admin endpoint

openstack endpoint create --region RegionOne image admin http://openstackvip.com:9292

- Verify the steps above

openstack endpoint list

- Verify the glance service

glance image-list

# and

openstack image list

- Download a test image to upload to glance

wget http://download.cirros-cloud.net/0.3.4/cirros-0.3.4-x86_64-disk.img

- Create the image

openstack image create "cirros" \

--file /root/cirros-0.3.4-x86_64-disk.img \

--disk-format qcow2 \

--container-format bare \

--public

Verify the glance image

glance image-list

# and

openstack image list

- Show the details of a specific image

openstack image show cirros

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

| checksum | ee1eca47dc88f4879d8a229cc70a07c6 |

| container_format | bare |

| created_at | 2019-08-22T06:20:18Z |

| disk_format | qcow2 |

| file | /v2/images/7ae353f8-db19-4449-b4ac-df1e70fe96f7/file |

| id | 7ae353f8-db19-4449-b4ac-df1e70fe96f7 |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros |

| owner | 7cbf02c5e55f43938062a9e31e9ea4bb |

| properties | os_hash_algo='sha512', os_hash_value='1b03ca1bc3fafe448b90583c12f367949f8b0e665685979d95b004e48574b953316799e23240f4f739d1b5eb4c4ca24d38fdc6f4f9d8247a2bc64db25d6bbdb2', os_hidden='False' |

| protected | False |

| schema | /v2/schemas/image |

| size | 13287936 |

| status | active |

| tags | |

| updated_at | 2019-08-22T06:20:19Z |

| virtual_size | None |

| visibility | public |

+------------------+--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

7. Configure the placement service

7.1. Database

mysql -u root -p

MariaDB [(none)]> CREATE DATABASE placement;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' \

IDENTIFIED BY '123';

7.2. Controller

- Create the placement service user with a password of your choice (PLACEMENT_PASS)

openstack user create --domain default --password-prompt placement

- Add the placement user to the service project with the admin role

openstack role add --project service --user placement admin

- Create the Placement API entry in the service catalog

openstack service create --name placement \

--description "Placement API" placement

- Create the Placement API service endpoints

openstack endpoint create --region RegionOne placement public http://openstackvip.com:8778

openstack endpoint create --region RegionOne placement internal http://openstackvip.com:8778

openstack endpoint create --region RegionOne placement admin http://openstackvip.com:8778

- Install openstack-placement-api

yum -y install openstack-placement-api

- Edit

/etc/placement/placement.conf

sed -i -e '/^#/d' -e '/^$/d' /etc/placement/placement.conf

vim /etc/placement/placement.conf

[DEFAULT]

[api]

auth_strategy = keystone

[keystone_authtoken]

auth_url = http://openstackvip.com:5000/v3

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = placement

password = 123

[placement]

[placement_database]

connection = mysql+pymysql://placement:123@openstackvip.com/placement

- Populate the placement database

su -s /bin/sh -c "placement-manage db sync" placement

- Restart the httpd service

systemctl restart httpd

- Verify

placement-status upgrade check

+----------------------------------+

| Upgrade Check Results |

+----------------------------------+

| Check: Missing Root Provider IDs |

| Result: Success |

| Details: None |

+----------------------------------+

| Check: Incomplete Consumers |

| Result: Success |

| Details: None |

+----------------------------------+

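8. Configure nova

8.1. Configure the nova controller node

nova is split into a controller node and compute nodes. Compute nodes create virtual machines through nova-compute, which calls KVM via libvirt. The nova components communicate with each other through RabbitMQ queues.

Its components and their functions are:

API: receives and responds to external requests.

Scheduler: decides which physical host a virtual machine is placed on.

Conductor: the middle layer through which compute nodes access the database.

Consoleauth: authorizes console access.

Novncproxy: the VNC proxy, used to display the virtual machine's console.

What nova-api does:

nova-api implements the RESTful API. It receives and responds to compute API requests from end users and passes them to the other service components over the message queue. Early releases also offered EC2 API compatibility in nova itself; that support was later removed from nova and lives in the separate ec2-api project.

nova scheduler:

The nova scheduler decides which host (compute node) a virtual machine is created on. Placing a virtual machine on a physical node takes two steps:

Filtering (filter): select the hosts that are able to run the virtual machine.

Weighing (weight): rank the remaining hosts by weight and pick the highest; by default the weight is based on available resources.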
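The two-step decision can be illustrated with a toy example. This is not nova's scheduler code: it is a sketch that assumes a single resource (free RAM in MB) and a made-up input format of `host free_ram_mb` lines, with `pick_host` as a hypothetical function name.

```shell
#!/usr/bin/env bash
# Toy filter-and-weigh over "host free_ram_mb" lines read from stdin.
# Filter: keep hosts with at least the requested RAM.
# Weigh:  prefer the host with the most free RAM.
# Prints the chosen host, or nothing if no host passes the filter.
pick_host() {
  local need_mb=$1
  awk -v need="$need_mb" '$2 >= need {print $2, $1}' \
    | sort -k1,1nr \
    | head -n 1 \
    | awk '{print $2}'
}

# Usage:
# printf 'node1 2048\nnode2 8192\nnode3 512\n' | pick_host 1024
```

In the usage line, node3 is filtered out for having too little RAM, and node2 wins the weighing step because it has the most free RAM left.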

8.1.1. Install and configure the nova controller node

Run on the database server

- Prepare the databases

mysql -uroot -p123

MariaDB [(none)]> CREATE DATABASE nova_api;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY '123';

MariaDB [(none)]> CREATE DATABASE nova;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY '123';

MariaDB [(none)]> CREATE DATABASE nova_cell0;

MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY '123';

MariaDB [(none)]> flush privileges;

8.1.2. On the controller

- Install

yum -y install openstack-nova-api openstack-nova-conductor \

openstack-nova-console openstack-nova-novncproxy \

openstack-nova-scheduler

- Create the nova service (type compute)

openstack service create --name nova \

--description "OpenStack Compute" compute

- Create the public endpoint

openstack endpoint create --region RegionOne \

compute public http://openstackvip.com:8774/v2.1

- Create the internal endpoint

openstack endpoint create --region RegionOne \

compute internal http://openstackvip.com:8774/v2.1

- Create the admin endpoint

openstack endpoint create --region RegionOne \

compute admin http://openstackvip.com:8774/v2.1

- Edit

/etc/nova/nova.conf

sed -i -e '/^#/d' -e '/^$/d' /etc/nova/nova.conf

vim /etc/nova/nova.conf

Full configuration:

[DEFAULT]

my_ip = 192.168.99.101

use_neutron = true

firewall_driver = nova.virt.firewall.NoopFirewallDriver

enabled_apis = osapi_compute,metadata

transport_url = rabbit://openstack:123@openstackvip.com

rpc_backend=rabbit

[api]

auth_strategy=keystone

[api_database]

connection = mysql+pymysql://nova:123@openstackvip.com/nova_api

[database]

connection = mysql+pymysql://nova:123@openstackvip.com/nova

[glance]

api_servers = http://openstackvip.com:9292

[keystone_authtoken]

auth_url = http://openstackvip.com:5000/v3

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123

[oslo_concurrency]

lock_path = /var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = default

project_name = service

auth_type = password

user_domain_name = default

auth_url = http://openstackvip.com:5000/v3

username = placement

password = 123

[vnc]

enabled = true

server_listen = $my_ip

server_proxyclient_address = $my_ip

- Configure Apache to allow access to the placement API

vim /etc/httpd/conf.d/00-placement-api.conf

Append the following configuration at the end of the file:

<Directory /usr/bin>

<IfVersion >= 2.4>

Require all granted

</IfVersion>

<IfVersion < 2.4>

Order allow,deny

Allow from all

</IfVersion>

</Directory>

- Restart httpd

systemctl restart httpd

- Initialize the databases

# nova_api database

su -s /bin/sh -c "nova-manage api_db sync" nova

# nova cell0 database

su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova

# nova cell1 database

su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova

# nova database

su -s /bin/sh -c "nova-manage db sync" nova

- Verify that nova cell0 and cell1 are registered correctly

su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

- Start the nova services and enable them at boot

systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service \

openstack-nova-scheduler.service \

openstack-nova-conductor.service \

openstack-nova-novncproxy.service

systemctl restart openstack-nova-api.service \

openstack-nova-consoleauth.service \

openstack-nova-scheduler.service \

openstack-nova-conductor.service \

openstack-nova-novncproxy.service

- Script to restart the nova controller services (nova-restart.sh)

#!/bin/bash

systemctl restart openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

chmod a+x nova-restart.sh

nova service-list

8.2. Configure the nova compute node

My compute node's IP is 192.168.99.10

- On the compute node

yum -y install openstack-nova-compute

- Configure nova

sed -i -e '/^#/d' -e '/^$/d' /etc/nova/nova.conf

vim /etc/nova/nova.conf

# Full configuration:

[DEFAULT]

my_ip = 192.168.99.10

use_neutron=true

firewall_driver=nova.virt.firewall.NoopFirewallDriver

enabled_apis=osapi_compute,metadata

transport_url = rabbit://openstack:123@openstackvip.com

[api]

auth_strategy=keystone

[glance]

api_servers=http://openstackvip.com:9292

[keystone_authtoken]

auth_url = http://openstackvip.com:5000/v3

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = nova

password = 123

[oslo_concurrency]

lock_path=/var/lib/nova/tmp

[placement]

os_region_name = RegionOne

project_domain_name = default

project_name = service

auth_type = password

user_domain_name = default

auth_url = http://openstackvip.com:5000/v3

username = placement

password = 123

[vnc]

enabled=true

server_listen=0.0.0.0

server_proxyclient_address = $my_ip

novncproxy_base_url=http://openstackvip.com:6080/vnc_auto.html

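- Check whether the compute node supports hardware acceleration

egrep -c '(vmx|svm)' /proc/cpuinfo

40

If this command returns zero, your compute node does not support hardware acceleration, and you must configure libvirt to use QEMU instead of KVM.

Edit the [libvirt] section of /etc/nova/nova.conf as follows: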

[libvirt]

# ...

virt_type = qemu
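The check and the resulting choice can be combined into one step. A minimal sketch; `choose_virt_type` is a hypothetical helper, and the file argument exists only so the function can be tried against a sample file instead of the real /proc/cpuinfo.

```shell
#!/usr/bin/env bash
# Print "kvm" if the cpuinfo file lists the vmx (Intel) or svm (AMD) flag,
# otherwise "qemu". Argument: cpuinfo path (defaults to /proc/cpuinfo).
choose_virt_type() {
  local cpuinfo=${1:-/proc/cpuinfo}
  if grep -Eq '(vmx|svm)' "$cpuinfo"; then
    echo kvm
  else
    echo qemu
  fi
}

# Usage on the compute node:
# echo "virt_type = $(choose_virt_type)"
```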

- Start the nova compute service and enable it at boot

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl restart libvirtd.service openstack-nova-compute.service

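8.2.1. Controller

- Add the compute node to the cell database

source keystone_admin.sh

openstack compute service list --service nova-compute

- Discover compute hosts

Discover them with a command

su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

Or discover them periodically

vim /etc/nova/nova.conf

Add this setting

[scheduler]

discover_hosts_in_cells_interval=300

- Restart the nova services

bash nova-restart.sh

Verification follows:

- Verification 1: list the service components to confirm that each process started and registered successfully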

[controller]$ openstack compute service list

+----+------------------+------------+----------+---------+-------+----------------------------+

| ID | Binary | Host | Zone | Status | State | Updated At |

+----+------------------+------------+----------+---------+-------+----------------------------+

| 1 | nova-consoleauth | controller | internal | enabled | up | 2019-08-23T03:24:19.000000 |

| 2 | nova-scheduler | controller | internal | enabled | up | 2019-08-23T03:24:19.000000 |

| 3 | nova-conductor | controller | internal | enabled | up | 2019-08-23T03:24:13.000000 |

| 6 | nova-compute | note1 | nova | enabled | up | 2019-08-23T03:24:19.000000 |

+----+------------------+------------+----------+---------+-------+----------------------------+
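With more compute nodes the table gets long, so it helps to print only the services that are not up. The sketch below parses the table format shown above; `down_services` is a hypothetical helper, and it assumes numeric IDs in the first column as in this output.

```shell
#!/usr/bin/env bash
# Read `openstack compute service list` table output on stdin and print
# "binary@host" for every service whose State column is not "up".
# Returns non-zero if any such service is found.
down_services() {
  awk -F'|' '
    # Data rows have a numeric ID in the second "|"-separated field.
    $2 ~ /^[ \t]*[0-9]+[ \t]*$/ {
      binary = $3; host = $4; state = $7
      gsub(/[ \t]/, "", binary); gsub(/[ \t]/, "", host); gsub(/[ \t]/, "", state)
      if (state != "up") { print binary "@" host; bad = 1 }
    }
    END { exit bad + 0 }
  '
}

# Usage:
# openstack compute service list | down_services
```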

- Verification 2: list the API endpoints in the Identity service to verify connectivity with the Identity service

openstack catalog list

- Verification 3: list the images in the Image service to verify connectivity with the Image service

openstack image list

+--------------------------------------+--------+--------+

| ID | Name | Status |

+--------------------------------------+--------+--------+

| 7ae353f8-db19-4449-b4ac-df1e70fe96f7 | cirros | active |

+--------------------------------------+--------+--------+

- Verification 4: check that the cells and the placement API are working and that the other prerequisites are in place

nova-status upgrade check

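9. Configure the neutron service

9.1. Configure the neutron controller node

- Create the database on the database server

To create the database, complete the following steps

mysql -u root -p

MariaDB [(none)]> CREATE DATABASE neutron;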

MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' \

IDENTIFIED BY '123';

- On the controller

- Create the neutron user

openstack user create --domain default --password-prompt neutron

- Add the admin role to the neutron user

openstack role add --project service --user neutron admin

- Create the neutron service entity

openstack service create --name neutron \

--description "OpenStack Networking" network

- Create the Networking service API endpoints

openstack endpoint create --region RegionOne \

network public http://openstackvip.com:9696

openstack endpoint create --region RegionOne \

network internal http://openstackvip.com:9696

openstack endpoint create --region RegionOne \

network admin http://openstackvip.com:9696

Configure the networking option

5. Install the components

yum -y install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables

Configure the server component

6. Edit neutron.conf

sed -i.bak -e '/^#/d' -e '/^$/d' /etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

[DEFAULT]

core_plugin = ml2

service_plugins =

transport_url = rabbit://openstack:123@openstackvip.com

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

[cors]

[database]

connection = mysql+pymysql://neutron:123@openstackvip.com/neutron

[keystone_authtoken]

www_authenticate_uri = http://openstackvip.com:5000

auth_url = http://openstackvip.com:5000

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[privsep]

[ssl]

[nova]

auth_url = http://openstackvip.com:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = nova

password = 123

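The [nova] section does not exist by default; add it by hand at the end of the file.

Configure the Modular Layer 2 (ML2) plug-in

7. Edit the ml2_conf.ini file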

sed -i.bak -e '/^#/d' -e '/^$/d' /etc/neutron/plugins/ml2/ml2_conf.ini

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan

tenant_network_types =

mechanism_drivers = linuxbridge

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[securitygroup]

enable_ipset = true

Configure the Linux bridge agent

8. Edit the linuxbridge_agent.ini file

sed -i.bak -e '/^#/d' -e '/^$/d' /etc/neutron/plugins/ml2/linuxbridge_agent.ini

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = provider:eth0

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

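- Update the

/etc/sysctl.conf file

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

Apply

sysctl -p

If the br_netfilter kernel module is not loaded, this reports the errors below. Load it with modprobe br_netfilter and rerun sysctl -p so that the two settings take effect.

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-iptables: No such file or directory

sysctl: cannot stat /proc/sys/net/bridge/bridge-nf-call-ip6tables: No such file or directory

Configure the DHCP agent

10. Edit the dhcp_agent.ini file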

vim /etc/neutron/dhcp_agent.ini

[DEFAULT]

interface_driver = linuxbridge

dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq

enable_isolated_metadata = true

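Configure the metadata agent

11. Edit the metadata_agent.ini file

vim /etc/neutron/metadata_agent.ini

[DEFAULT]

nova_metadata_host = 192.168.99.101

metadata_proxy_shared_secret = 123

nova_metadata_host is the controller's IP (192.168.99.101 here). To use the VIP instead and let haproxy proxy the request back, haproxy would also need a listener for the nova metadata port 8775, which the haproxy configuration in section 4.4 does not define.

metadata_proxy_shared_secret is the shared secret for the metadata proxy.

Configure the Compute service to use the Networking service

12. Edit the /etc/nova/nova.conf file

vim /etc/nova/nova.conf

Append at the end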

[neutron]

url = http://openstackvip.com:9696

auth_url = http://openstackvip.com:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123

service_metadata_proxy = true

metadata_proxy_shared_secret = 123

metadata_proxy_shared_secret must match the secret configured in step 11.
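Because the same secret has to appear in two files, a quick comparison can catch a mismatch before the services are restarted. A minimal sketch; the helper names ini_get and secrets_match are hypothetical, and the parser assumes simple "key = value" lines with no trailing whitespace on section headers:

```shell
#!/bin/bash
# Hypothetical helper: print the value of KEY inside [SECTION] of an INI file.
ini_get() {
    local file="$1" section="$2" key="$3"
    awk -F' *= *' -v s="[$section]" -v k="$key" '
        /^\[/ { in_section = ($0 == s) }
        in_section && $1 == k { print $2; exit }
    ' "$file"
}

# Succeeds only when both files carry the same non-empty shared secret.
# $1 = metadata_agent.ini, $2 = nova.conf
secrets_match() {
    local a b
    a=$(ini_get "$1" DEFAULT metadata_proxy_shared_secret)
    b=$(ini_get "$2" neutron metadata_proxy_shared_secret)
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# Example on the controller:
#   secrets_match /etc/neutron/metadata_agent.ini /etc/nova/nova.conf && echo "secrets match"
```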

- The Networking service initialization scripts expect a symbolic link /etc/neutron/plugin.ini that points to the ML2 plug-in configuration file /etc/neutron/plugins/ml2/ml2_conf.ini.

ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini

- Populate the database:

su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

- Restart the Compute API service:

systemctl restart openstack-nova-api.service

- Start the Networking services and configure them to start at boot:

systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service \

neutron-dhcp-agent.service \

neutron-metadata-agent.service

systemctl restart neutron-server.service \

neutron-linuxbridge-agent.service \

neutron-dhcp-agent.service \

neutron-metadata-agent.service

Note: the layer-3 agent is only needed for self-service networks. This guide uses provider networks at this point, so the two commands below can be skipped:

systemctl enable neutron-l3-agent.service

systemctl restart neutron-l3-agent.service

9.2. Configure the neutron compute node

- On the compute node

- Install the components

yum -y install openstack-neutron-linuxbridge ebtables ipset

Configure the common components

2. Edit the neutron.conf file

sed -i.bak -e '/^#/d' -e '/^$/d' /etc/neutron/neutron.conf

vim /etc/neutron/neutron.conf

[DEFAULT]

transport_url = rabbit://openstack:[email protected]

auth_strategy = keystone

[cors]

[database]

[keystone_authtoken]

www_authenticate_uri = http://openstackvip.com:5000

auth_url = http://openstackvip.com:5000

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = neutron

password = 123

[oslo_concurrency]

lock_path = /var/lib/neutron/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[privsep]

[ssl]

Configure the Linux bridge agent

3. Edit the linuxbridge_agent.ini file

sed -i.bak -e '/^#/d' -e '/^$/d' /etc/neutron/plugins/ml2/linuxbridge_agent.ini

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = provider:eth0

[vxlan]

enable_vxlan = false

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Make sure the Linux kernel supports network bridge filters

4. Configure the /etc/sysctl.conf file

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

Apply the settings:

sysctl -p

Configure the Compute service to use the Networking service

5. Edit the nova.conf file

vim /etc/nova/nova.conf

[neutron]

url = http://openstackvip.com:9696

auth_url = http://openstackvip.com:5000

auth_type = password

project_domain_name = default

user_domain_name = default

region_name = RegionOne

project_name = service

username = neutron

password = 123

- Restart the Compute service:

systemctl restart openstack-nova-compute.service

- Start the Linux bridge agent and configure it to start at boot:

systemctl enable neutron-linuxbridge-agent.service

systemctl restart neutron-linuxbridge-agent.service

- On the controller node

- Verify

openstack extension list --network

openstack network agent list

10. Create an instance

10.1. Controller

Create the network

- Create the provider network (the trailing provider is the network name)

openstack network create --share --external \

--provider-physical-network provider \

--provider-network-type flat provider

Verify:

openstack network list

# or

neutron net-list

- Create a subnet on the network

openstack subnet create --network provider \

--allocation-pool start=192.168.99.200,end=192.168.99.210 \

--dns-nameserver 192.168.99.2 --gateway 192.168.99.2 \

--subnet-range 192.168.99.0/24 provider-sub

--network takes the name of the network created above.

provider-sub is the subnet name.

Verify:

openstack subnet list

# or

neutron subnet-list

10.2. Create a flavor

openstack flavor create --id 0 --vcpus 1 --ram 1024 --disk 10 m1.nano

--vcpus: number of virtual CPU cores

--ram: memory (in MB)

--disk: root disk size (in GB)

The last argument is the flavor name.

List the flavors:

openstack flavor list

Generate a key pair

- Generate a key pair and add the public key

ssh-keygen -q -N ""

openstack keypair create --public-key ~/.ssh/id_rsa.pub mykey

Verify that the key pair was added:

openstack keypair list

Add security group rules

- Permit ICMP (ping):

openstack security group rule create --proto icmp default

- Permit secure shell (SSH) access:

openstack security group rule create --proto tcp --dst-port 22 default

- Verify

List the flavors:

openstack flavor list

List the images:

openstack image list

List the available networks:

openstack network list

List the available security groups:

openstack security group list

- Launch the instance

openstack server create --flavor m1.nano --image cirros \

--nic net-id=a57d2907-a59d-4422-b231-8d3c788d10d3 \

--security-group default \

--key-name mykey provider-instance

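--flavor: flavor name

--image: image name

--security-group: security group name

Replace the net-id value with the ID of your own provider network (shown by openstack network list).

The trailing provider-instance is the instance name.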
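Rather than pasting the network UUID by hand, the ID can be looked up by name. A minimal sketch, assuming the admin credentials are already sourced; the helper name net_id_by_name is hypothetical, while "-f value -c id" are the openstack client's standard output-formatting options:

```shell
#!/bin/bash
# Hypothetical helper: print the ID of a network given its name.
# "-f value -c id" asks the openstack client to print only the id column.
net_id_by_name() {
    openstack network show "$1" -f value -c id
}

# Sketch of launching the instance with the looked-up ID
# (commented out so that sourcing this file has no side effects):
#   openstack server create --flavor m1.nano --image cirros \
#     --nic net-id="$(net_id_by_name provider)" \
#     --security-group default --key-name mykey provider-instance
```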

- Check the instance status

openstack server list

- Access the instance through the virtual console

openstack console url show provider-instance

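provider-instance is the name of your instance.

Open the returned URL in a browser to connect to the instance.

11. Configure the Dashboard service

Horizon is the graphical interface for managing the other OpenStack components. It talks to the other services (image, compute, networking and so on) through their APIs. Horizon is built on Python Django and served through Apache's WSGI module; it only needs its configuration file pointed at Keystone.

11.1. Controller

- Install and configure the components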

yum -y install openstack-dashboard

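- Edit the /etc/openstack-dashboard/local_settings file

Open the configuration file, search for the keys below and replace their values (equivalent sed commands are given further down).

Controller node: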

OPENSTACK_HOST = "192.168.99.101"

OPENSTACK_HOST is the IP of the controller itself.

Enable the Identity API version 3:

OPENSTACK_KEYSTONE_URL = "http://%s:5000/v3" % OPENSTACK_HOST

Configure user as the default role for users created through the dashboard:

OPENSTACK_KEYSTONE_DEFAULT_ROLE = "user"

Accept all hosts:

ALLOWED_HOSTS = ['*']

Configure the memcached session storage service:

SESSION_ENGINE = 'django.contrib.sessions.backends.cache'

CACHES = {

'default': {

'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION': 'openstackvip.com:11211',

}

}

Enable support for domains:

OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT = True

Configure the API versions:

OPENSTACK_API_VERSIONS = {

"identity": 3,

"image": 2,

"volume": 3,

}

Configure default as the default domain for users created through the dashboard:

OPENSTACK_KEYSTONE_DEFAULT_DOMAIN = "default"

If you chose networking option 1 (provider networks), disable support for layer-3 networking services:

OPENSTACK_NEUTRON_NETWORK = {

'enable_router': False,

'enable_quotas': False,

'enable_distributed_router': False,

'enable_ha_router': False,

'enable_lb': False,

'enable_firewall': False,

'enable_vpn': False,

'enable_fip_topology_check': False,

}

(Optional) Configure the time zone:

TIME_ZONE = "UTC"

One-shot configuration with sed:

sed -i.bak '/^OPENSTACK_HOST/s#127.0.0.1#192.168.99.101#' /etc/openstack-dashboard/local_settings

sed -i '/^OPENSTACK_KEYSTONE_DEFAULT_ROLE/s#".*"#"user"#' /etc/openstack-dashboard/local_settings

sed -i "/^ALLOWED_HOSTS/s#\[.*\]#['*']#" /etc/openstack-dashboard/local_settings

sed -i '/^#SESSION_ENGINE/s/#//' /etc/openstack-dashboard/local_settings

sed -i "/^SESSION_ENGINE/s#'.*'#'django.contrib.sessions.backends.cache'#" /etc/openstack-dashboard/local_settings

sed -i "/^# 'default'/s/#//" /etc/openstack-dashboard/local_settings

sed -i "/^#CACHES/,+6s/#//" /etc/openstack-dashboard/local_settings

sed -i "/^ 'LOCATION'/s#127.0.0.1#openstackvip.com#" /etc/openstack-dashboard/local_settings

sed -i "/OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT/s/#//" /etc/openstack-dashboard/local_settings

sed -i "/OPENSTACK_KEYSTONE_MULTIDOMAIN_SUPPORT/s#False#True#" /etc/openstack-dashboard/local_settings

sed -i "/OPENSTACK_API_VERSIONS/,+5s/#//" /etc/openstack-dashboard/local_settings

sed -i '/"compute"/d' /etc/openstack-dashboard/local_settings

sed -i '/^#OPENSTACK_KEYSTONE_DEFAULT_DOMAIN/s/#//' /etc/openstack-dashboard/local_settings

sed -i '/^OPENSTACK_KEYSTONE_DEFAULT_DOMAIN/s/Default/default/' /etc/openstack-dashboard/local_settings

sed -i '/^OPENSTACK_NEUTRON_NETWORK/,+7s#True#False#' /etc/openstack-dashboard/local_settings

sed -i '/TIME_ZONE/s#UTC#UTC#' /etc/openstack-dashboard/local_settings

sed -i "/^OPENSTACK_NEUTRON_NETWORK/s/$/\n 'enable_lb': False,/" /etc/openstack-dashboard/local_settings

sed -i "/^OPENSTACK_NEUTRON_NETWORK/s/$/\n 'enable_firewall': False,/" /etc/openstack-dashboard/local_settings

sed -i "/^OPENSTACK_NEUTRON_NETWORK/s/$/\n 'enable_vpn': False,/" /etc/openstack-dashboard/local_settings
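Before running the substitutions against the real file, it can help to try one on a scratch copy and look at the result. A minimal sketch using the ALLOWED_HOSTS substitution from the list above; the sample line and the helper name preview_allowed_hosts are assumptions standing in for the packaged file:

```shell
#!/bin/bash
# Apply one substitution to a scratch file and print the result.
# The sample content is an assumption, not the packaged local_settings.
preview_allowed_hosts() {
    local tmp
    tmp=$(mktemp)
    printf '%s\n' "ALLOWED_HOSTS = ['horizon.example.com', 'localhost']" > "$tmp"
    sed -i "/^ALLOWED_HOSTS/s#\[.*\]#['*']#" "$tmp"
    cat "$tmp"
    rm -f "$tmp"
}
preview_allowed_hosts
```

The first sed command in the list already keeps a backup (-i.bak), so the original file can be restored from local_settings.bak if a later substitution goes wrong.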

Continue with the steps below.

- Add the following line to /etc/httpd/conf.d/openstack-dashboard.conf

vim /etc/httpd/conf.d/openstack-dashboard.conf

WSGIApplicationGroup %{GLOBAL}

- Restart the web server and the session storage service

systemctl restart httpd.service

memcached runs on a separate machine in this setup, so restart it there:

systemctl restart memcached.service

12. OpenStack high availability (optional)

12.1. NFS

IP:192.168.99.105

- Install NFS

yum -y install nfs-utils

- Add a user

useradd openstack

- Write the exports file

echo "/var/lib/glance/images 192.168.99.0/24(rw,all_squash,anonuid=`id -u openstack`,anongid=`id -g openstack`)" > /etc/exports

- Create the directory

mkdir -p /var/lib/glance/images

- Start the service

systemctl restart nfs-server

systemctl enable nfs-server

exportfs -r

- Verify

showmount -e

- Set ownership

chown -R openstack.openstack /var/lib/glance/images/

12.2. Mount NFS on the controller

showmount -e 192.168.99.105

Move the existing images aside before mounting:

mkdir /data ; mv /var/lib/glance/images/* /data

Mount the share:

echo "192.168.99.105:/var/lib/glance/images /var/lib/glance/images nfs defaults 0 0" >> /etc/fstab

mount -a

Then move the images back:

mv /data/* /var/lib/glance/images

12.3. haproxy high availability

Required packages: haproxy + keepalived

One haproxy + keepalived host was set up earlier, so add one more physical machine to act as the backup.

IP: 192.168.99.104

Start the configuration

- Install

yum -y install keepalived haproxy

- Configure keepalived:

vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id ha_1

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_iptables

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.99.100 dev eth0 label eth0:1

}

}

- Start

systemctl start keepalived

systemctl enable keepalived
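Once keepalived is running on both load balancers, only the current master should hold the virtual address. A minimal sketch for checking that, assuming the VIP 192.168.99.100 and the interface eth0 from the configuration above; the helper name has_vip is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: succeeds when address ADDR is configured on device DEV.
# "ip -o -4 addr show dev DEV" prints one line per IPv4 address.
has_vip() {
    local dev="$1" addr="$2"
    ip -o -4 addr show dev "$dev" | grep -qF " ${addr}/"
}

# Example on a load balancer:
#   has_vip eth0 192.168.99.100 && echo "this node holds the VIP"
```

Stopping keepalived on the master and re-running the check on the backup is a simple way to confirm that failover works.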

- Configure haproxy:

Before configuring, review the ports that need to be reverse-proxied:

| Port | Service |
|---|---|
| 5000 | keystone |
| 9292 | glance |
| 8778 | placement |
| 8774 | nova |
| 9696 | neutron |
| 6080 | VNC |
| 3306 | MySQL |
| 5672 | rabbitMQ |
| 15672 | rabbitMQ_WEB |
| 11211 | memcached |

Add the same configuration on ha_1 as well.

vim /etc/haproxy/haproxy.cfg

listen stats

mode http

bind :9999

stats enable

log global

stats uri /haproxy-status

stats auth admin:123

listen dashboard

bind :80

mode http

balance source

server dashboard1 192.168.99.101:80 check inter 2000 fall 3 rise 5

server dashboard2 192.168.99.103:80 check inter 2000 fall 3 rise 5

listen mysql

bind :3306

mode tcp

balance source

server mysql 192.168.99.106:3306 check inter 2000 fall 3 rise 5

listen memcached

bind :11211

mode tcp

balance source

server memcached 192.168.99.106:11211 check inter 2000 fall 3 rise 5

listen rabbit

bind :5672

mode tcp

balance source

server rabbit 192.168.99.106:5672 check inter 2000 fall 3 rise 5

listen rabbit_web

bind :15672

mode http

server rabbit_web 192.168.99.106:15672 check inter 2000 fall 3 rise 5

listen keystone

bind :5000

mode tcp

server keystone1 192.168.99.101:5000 check inter 2000 fall 3 rise 5

server keystone2 192.168.99.103:5000 check inter 2000 fall 3 rise 5

listen glance

bind :9292

mode tcp

server glance1 192.168.99.101:9292 check inter 2000 fall 3 rise 5

server glance2 192.168.99.103:9292 check inter 2000 fall 3 rise 5

listen placement

bind :8778

mode tcp

server placement1 192.168.99.101:8778 check inter 2000 fall 3 rise 5

server placement2 192.168.99.103:8778 check inter 2000 fall 3 rise 5

listen neutron

bind :9696

mode tcp

server neutron1 192.168.99.101:9696 check inter 2000 fall 3 rise 5

server neutron2 192.168.99.103:9696 check inter 2000 fall 3 rise 5

listen nova

bind :8774

mode tcp

server nova1 192.168.99.101:8774 check inter 2000 fall 3 rise 5

server nova2 192.168.99.103:8774 check inter 2000 fall 3 rise 5

listen VNC

bind :6080

mode tcp

server VNC1 192.168.99.101:6080 check inter 2000 fall 3 rise 5

server VNC2 192.168.99.103:6080 check inter 2000 fall 3 rise 5
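When server lines are copied from one backend to the next, it is easy to end up with two servers that share a name inside the same listen block, which makes logs and the stats page ambiguous. A minimal sketch that reports such duplicates; the helper name dup_servers is hypothetical, and it assumes every proxy is declared with a listen line as in the configuration above:

```shell
#!/bin/bash
# Hypothetical helper: print "section server" for every server name that
# appears more than once inside the same listen block of an haproxy config.
dup_servers() {
    awk '
        $1 == "listen" { section = $2 }
        $1 == "server" { if (seen[section, $2]++ == 1) print section, $2 }
    ' "$1"
}

# On the real host, haproxy can also validate the whole file before a restart:
#   haproxy -c -f /etc/haproxy/haproxy.cfg
#   dup_servers /etc/haproxy/haproxy.cfg
```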

12.4. Controller high availability

For high availability, prepare one more physical machine and set its hostname to controller2.

IP: 192.168.99.113

Prepare these files from controller1:

$ ls

admin.keystone* glance.tar keystone.tar placement.tar

dashboard.tar http_conf_d.tar neutron.tar yum/

demo.keystone* install_controller_openstack.sh* nova.tar

The result should match the listing above. The yum directory holds the repo files that ship with CentOS; if you deleted them, copy them from another host.

Preparation (on the existing controller):

# Prepare httpd

cd /etc/httpd/conf.d

tar cf /root/http_conf_d.tar *

# Prepare keystone

cd /etc/keystone

tar cf /root/keystone.tar *

# Prepare glance

cd /etc/glance

tar cf /root/glance.tar *

# Prepare placement

cd /etc/placement

tar cf /root/placement.tar *

# Prepare nova

cd /etc/nova

tar cf /root/nova.tar *

# Prepare neutron

cd /etc/neutron

tar cf /root/neutron.tar *

# Prepare dashboard

cd /etc/openstack-dashboard

tar cf /root/dashboard.tar *

admin.keystone

#!/bin/bash

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=admin

export OS_USERNAME=admin

export OS_PASSWORD=123

export OS_AUTH_URL=http://openstackvip.com:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2

demo.keystone

#!/bin/bash

export OS_PROJECT_DOMAIN_NAME=default

export OS_USER_DOMAIN_NAME=default

export OS_PROJECT_NAME=demo

export OS_USERNAME=demo

export OS_PASSWORD=123

export OS_AUTH_URL=http://openstackvip.com:5000/v3

export OS_IDENTITY_API_VERSION=3

export OS_IMAGE_API_VERSION=2
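After sourcing one of the credential files above, a quick check can confirm that nothing was missed before running openstack commands. A minimal sketch; the helper name missing_os_vars is hypothetical and the variable list mirrors the exports shown above:

```shell
#!/bin/bash
# Hypothetical helper: print the names of required OS_* variables that are
# unset or empty in the current shell.
missing_os_vars() {
    local name
    for name in OS_PROJECT_DOMAIN_NAME OS_USER_DOMAIN_NAME OS_PROJECT_NAME \
                OS_USERNAME OS_PASSWORD OS_AUTH_URL OS_IDENTITY_API_VERSION; do
        [ -n "${!name:-}" ] || echo "$name"
    done
}

# Example: source admin.keystone && missing_os_vars
```

An empty result means every variable in the list is set.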

Script contents. Set the hostname first; it must not contain an underscore (_).

#!/bin/bash

gecho() {

echo -e "\e[1;32m${1}\e[0m" && sleep 1

}

recho() {

echo -e "\e[1;31m${1}\e[0m" && sleep 1

}

gecho "配置yum源..."

PWD=`dirname $0`

mkdir /etc/yum.repos.d/bak

mv /etc/yum.repos.d/* /etc/yum.repos.d/bak/

mv $PWD/yum/* /etc/yum.repos.d/

yum -y install centos-release-openstack-stein

gecho "安装openstack客户端、openstack SELinux管理包..."

yum -y install python-openstackclient openstack-selinux

yum -y install python2-PyMySQL mariadb

yum -y install openstack-keystone httpd mod_wsgi python-memcached

tar xf $PWD/http_conf_d.tar -C /etc/httpd/conf.d

echo "192.168.99.211 openstackvip.com" >> /etc/hosts

echo "192.168.99.211 controller" >> /etc/hosts

gecho "安装keystone..."

tar xf $PWD/keystone.tar -C /etc/keystone

systemctl enable httpd.service

systemctl start httpd.service

gecho "安装glance..."

yum -y install openstack-glance

tar xf $PWD/glance.tar -C /etc/glance

systemctl enable openstack-glance-api.service openstack-glance-registry.service

systemctl start openstack-glance-api.service openstack-glance-registry.service

gecho "安装placement..."

yum -y install openstack-placement-api

tar xf $PWD/placement.tar -C /etc/placement

gecho "安装nova。。。"

yum -y install openstack-nova-api openstack-nova-conductor openstack-nova-console openstack-nova-novncproxy openstack-nova-scheduler openstack-nova-placement-api

tar xf $PWD/nova.tar -C /etc/nova

systemctl restart httpd

systemctl enable openstack-nova-api.service \

openstack-nova-consoleauth.service \

openstack-nova-scheduler.service \

openstack-nova-conductor.service \

openstack-nova-novncproxy.service

systemctl restart openstack-nova-api.service \

openstack-nova-consoleauth.service \

openstack-nova-scheduler.service \

openstack-nova-conductor.service \

openstack-nova-novncproxy.service

cat > /root/nova-restart.sh <<EOF

#!/bin/bash

systemctl restart openstack-nova-api.service openstack-nova-consoleauth.service openstack-nova-scheduler.service openstack-nova-conductor.service openstack-nova-novncproxy.service

EOF

chmod a+x /root/nova-restart.sh

gecho "安装neutron。。。"

yum -y install openstack-neutron openstack-neutron-ml2 \

openstack-neutron-linuxbridge ebtables

tar xf $PWD/neutron.tar -C /etc/neutron

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

sysctl -p

systemctl restart openstack-nova-api.service

systemctl enable neutron-server.service \

neutron-linuxbridge-agent.service \

neutron-dhcp-agent.service \

neutron-metadata-agent.service

systemctl restart neutron-server.service \

neutron-linuxbridge-agent.service \

neutron-dhcp-agent.service \

neutron-metadata-agent.service

gecho "安装dashboard..."

yum -y install openstack-dashboard

tar xf $PWD/dashboard.tar -C /etc/openstack-dashboard

systemctl restart httpd.service

recho "5秒后重启系统..."

for i in `seq 5 -1 1` ; do

tput sc

echo -n $i

sleep 1

tput rc

tput ed

done

reboot

Then change /etc/hosts on all of the earlier hosts to:

192.168.99.211 openstackvip.com

192.168.99.211 controller

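12.5. Quickly adding a compute node

On a new physical machine, install CentOS 7.2 and configure its IP address and hostname.

Prepare the following files:

# Prepare neutron, on an existing compute node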

cd /etc/neutron

tar cf /root/neutron-compute.tar *

# Prepare nova, on an existing compute node

cd /etc/nova

tar cf /root/nova-compute.tar *

File limits.conf

# /etc/security/limits.conf

#

#This file sets the resource limits for the users logged in via PAM.

#It does not affect resource limits of the system services.

#

#Also note that configuration files in /etc/security/limits.d directory,

#which are read in alphabetical order, override the settings in this

#file in case the domain is the same or more specific.

#That means for example that setting a limit for wildcard domain here

#can be overriden with a wildcard setting in a config file in the

#subdirectory, but a user specific setting here can be overriden only

#with a user specific setting in the subdirectory.

#

#Each line describes a limit for a user in the form:

#

#<domain> <type> <item> <value>

#

#Where:

#<domain> can be:

# - a user name

# - a group name, with @group syntax

# - the wildcard *, for default entry

# - the wildcard %, can be also used with %group syntax,

# for maxlogin limit

#

#<type> can have the two values:

# - "soft" for enforcing the soft limits

# - "hard" for enforcing hard limits

#

#<item> can be one of the following:

# - core - limits the core file size (KB)

# - data - max data size (KB)

# - fsize - maximum filesize (KB)

# - memlock - max locked-in-memory address space (KB)

# - nofile - max number of open file descriptors

# - rss - max resident set size (KB)

# - stack - max stack size (KB)

# - cpu - max CPU time (MIN)

# - nproc - max number of processes

# - as - address space limit (KB)

# - maxlogins - max number of logins for this user

# - maxsyslogins - max number of logins on the system

# - priority - the priority to run user process with

# - locks - max number of file locks the user can hold

# - sigpending - max number of pending signals

# - msgqueue - max memory used by POSIX message queues (bytes)

# - nice - max nice priority allowed to raise to values: [-20, 19]

# - rtprio - max realtime priority

#

#<domain> <type> <item> <value>

#

#* soft core 0

#* hard rss 10000

#@student hard nproc 20

#@faculty soft nproc 20

#@faculty hard nproc 50

#ftp hard nproc 0

#@student - maxlogins 4

# End of file

* soft core unlimited

* hard core unlimited

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

* soft memlock 32000

* hard memlock 32000

* soft msgqueue 8192000

* hard msgqueue 8192000

File profile

# /etc/profile

# System wide environment and startup programs, for login setup

# Functions and aliases go in /etc/bashrc

# It's NOT a good idea to change this file unless you know what you

# are doing. It's much better to create a custom.sh shell script in

# /etc/profile.d/ to make custom changes to your environment, as this

# will prevent the need for merging in future updates.

pathmunge () {

case ":${PATH}:" in

*:"$1":*)

;;

*)

if [ "$2" = "after" ] ; then

PATH=$PATH:$1

else

PATH=$1:$PATH

fi

esac

}

if [ -x /usr/bin/id ]; then

if [ -z "$EUID" ]; then

# ksh workaround

EUID=`id -u`

UID=`id -ru`

fi

USER="`id -un`"

LOGNAME=$USER

MAIL="/var/spool/mail/$USER"

fi

# Path manipulation

if [ "$EUID" = "0" ]; then

pathmunge /usr/sbin

pathmunge /usr/local/sbin

else

pathmunge /usr/local/sbin after

pathmunge /usr/sbin after

fi

HOSTNAME=`/usr/bin/hostname 2>/dev/null`

HISTSIZE=1000

if [ "$HISTCONTROL" = "ignorespace" ] ; then

export HISTCONTROL=ignoreboth

else

export HISTCONTROL=ignoredups

fi

export PATH USER LOGNAME MAIL HOSTNAME HISTSIZE HISTCONTROL

# By default, we want umask to get set. This sets it for login shell

# Current threshold for system reserved uid/gids is 200

# You could check uidgid reservation validity in

# /usr/share/doc/setup-*/uidgid file

if [ $UID -gt 199 ] && [ "`id -gn`" = "`id -un`" ]; then

umask 002

else

umask 022

fi

for i in /etc/profile.d/*.sh ; do

if [ -r "$i" ]; then

if [ "${-#*i}" != "$-" ]; then

. "$i"

else

. "$i" >/dev/null

fi

fi

done

unset i

unset -f pathmunge

export HISTTIMEFORMAT="%F %T `whoami` "

File sysctl.conf

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

Script openstack_node_script.sh

#!/bin/bash

gecho() {

echo -e "\e[1;32m${1}\e[0m" && sleep 1

}

recho() {

echo -e "\e[1;31m${1}\e[0m" && sleep 1

}

vip=192.168.99.211

controller_ip=192.168.99.211

gecho "配置yum源"

PWD=`dirname $0`

mkdir /etc/yum.repos.d/bak

mv /etc/yum.repos.d/* /etc/yum.repos.d/bak/

mv $PWD/yum/* /etc/yum.repos.d/

gecho "安装包..."

yum -y install centos-release-openstack-stein

yum -y install python-openstackclient openstack-selinux

yum -y install openstack-nova-compute

yum -y install openstack-neutron-linuxbridge ebtables ipset

cat $PWD/limits.conf > /etc/security/limits.conf

cat $PWD/profile > /etc/profile

cat $PWD/sysctl.conf > /etc/sysctl.conf

gecho "配置nova"

tar xvf $PWD/nova-compute.tar -C /etc/nova/

myip=`ifconfig eth0 | awk '/inet /{print $2}'`

sed -i "/my_ip =/s#.*#my_ip = ${myip}#" /etc/nova/nova.conf

gecho "配置neutron"

tar xf $PWD/neutron-compute.tar -C /etc/neutron

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

sysctl -p

echo "${vip} openstackvip.com" >> /etc/hosts

echo "${controller_ip} controller" >> /etc/hosts

vcpu=$(egrep -c '(vmx|svm)' /proc/cpuinfo)

if [ "$vcpu" -eq 0 ] ; then

cat >> /etc/nova/nova.conf <<EOF

[libvirt]

virt_type = qemu

EOF

fi

gecho "启动服务..."

systemctl enable libvirtd.service openstack-nova-compute.service

systemctl restart libvirtd.service || recho "libvirtd启动失败"

systemctl restart openstack-nova-compute.service || recho "openstack-nova-compute启动失败"

systemctl enable neutron-linuxbridge-agent.service

systemctl restart neutron-linuxbridge-agent.service

recho "5秒后重启系统..."

for i in `seq 5 -1 1` ; do

tput sc

echo -n $i

sleep 1

tput rc

tput ed

done

reboot
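The hardware-virtualization check in the script decides whether nova-compute should fall back to plain QEMU. The same decision can be sketched as two small functions; the names count_virt_flags and pick_virt_type are hypothetical, and the file path is a parameter so the logic can be tried on a sample file:

```shell
#!/bin/bash
# Count cpuinfo lines that advertise Intel VT-x (vmx) or AMD-V (svm).
count_virt_flags() {
    grep -c -E '(vmx|svm)' "$1"
}

# Decide which virt_type nova-compute should use: qemu when the CPU
# exposes no hardware virtualization flag, kvm otherwise.
pick_virt_type() {
    local n
    # grep -c exits non-zero on zero matches but still prints 0
    n=$(count_virt_flags "$1" || true)
    if [ "${n:-0}" -eq 0 ]; then echo qemu; else echo kvm; fi
}

# Example on the real host: pick_virt_type /proc/cpuinfo
```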

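13. Configure cinder (Block Storage service)

Cinder and Swift are OpenStack's storage components; they let you build block storage and object storage systems in your private cloud. Starting with Folsom, OpenStack replaced the original nova-volume service with Cinder to provide block storage for the cloud platform. The Cinder API offers standard functions for creating block devices and attaching them to virtual machines, such as create volume, delete volume and attach volume. It also offers more advanced functions, such as extending capacity, snapshots and cloning from virtual machine images. The main components are:

cinder-api: accepts API requests and routes them to cinder-volume for execution; every request to Cinder goes through this public API first.

cinder-volume: interacts directly with the Block Storage service and with processes such as cinder-scheduler, optionally through a message queue. It responds to read and write requests sent to the Block Storage service to maintain state, and it can work with a variety of storage providers through a driver architecture.

cinder-scheduler daemon: selects the optimal storage provider node on which to create a volume, similar to nova-scheduler.

cinder-backup daemon: backs up volumes of any type to a backup storage provider. Like cinder-volume, it can work with a variety of storage providers through a driver architecture.

Message queue: routes information between the Block Storage processes.

Listening port: 8776

13.1. Configure the cinder controller node

- On the database host

Create the database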

mysql -u root -p

CREATE DATABASE cinder;

GRANT ALL PRIVILEGES ON cinder.* TO 'cinder'@'%' IDENTIFIED BY '123';

flush privileges;

- On the controller

source admin-openrc

- Create the cinder user:

openstack user create --domain default --password-prompt cinder

The password used here is 123.

- Add the admin role to the cinder user:

openstack role add --project service --user cinder admin

- Create the cinderv2 and cinderv3 service entities

openstack service create --name cinderv2 \

--description "OpenStack Block Storage" volumev2

openstack service create --name cinderv3 \

--description "OpenStack Block Storage" volumev3

- Create the Block Storage service API endpoints

openstack endpoint create --region RegionOne \

volumev2 public http://openstackvip.com:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne \

volumev2 internal http://openstackvip.com:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne \

volumev2 admin http://openstackvip.com:8776/v2/%\(project_id\)s

openstack endpoint create --region RegionOne \

volumev3 public http://openstackvip.com:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne \

volumev3 internal http://openstackvip.com:8776/v3/%\(project_id\)s

openstack endpoint create --region RegionOne \

volumev3 admin http://openstackvip.com:8776/v3/%\(project_id\)s
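The six commands above differ only in the API version and the interface, so they can be generated with a loop. A minimal sketch that prints the commands for review instead of running them; the helper name print_cinder_endpoints is hypothetical, and the URL is single-quoted in the output so the parentheses need no escaping:

```shell
#!/bin/bash
# Print the six endpoint-creation commands (two API versions, three interfaces).
# Nothing is executed; review the output, then run the printed lines.
print_cinder_endpoints() {
    local host="$1" ver iface
    for ver in v2 v3; do
        for iface in public internal admin; do
            echo "openstack endpoint create --region RegionOne volume${ver} ${iface} 'http://${host}:8776/${ver}/%(project_id)s'"
        done
    done
}
print_cinder_endpoints openstackvip.com
```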

- Install and configure the components

yum -y install openstack-cinder

- Edit the /etc/cinder/cinder.conf file and complete the following actions

Strip comments and blank lines first:

sed -i -e '/^#/d' -e '/^$/d' /etc/cinder/cinder.conf

vim /etc/cinder/cinder.conf

[DEFAULT]

my_ip = 192.168.99.101

transport_url = rabbit://openstack:[email protected]

auth_strategy = keystone

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:[email protected]/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

www_authenticate_uri = http://openstackvip.com:5000

auth_url = http://openstackvip.com:5000

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123

[nova]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[privsep]

[profiler]

[sample_castellan_source]

[sample_remote_file_source]

[service_user]

[ssl]

[vault]

- Populate the Block Storage database

su -s /bin/sh -c "cinder-manage db sync" cinder

- Configure Compute to use Block Storage

Edit the /etc/nova/nova.conf file

vim /etc/nova/nova.conf

Add this setting under the corresponding section:

[cinder]

os_region_name = RegionOne

- Restart the Compute API service

systemctl restart openstack-nova-api.service

- Start the Block Storage services and configure them to start at boot

systemctl enable openstack-cinder-api.service openstack-cinder-scheduler.service

systemctl start openstack-cinder-api.service openstack-cinder-scheduler.service

- Proxy port 8776 through haproxy.

- Configure on the ha host

vim /etc/haproxy/haproxy.cfg

Append at the end:

listen cinder

bind :8776

mode tcp

server t1 192.168.99.101:8776 check inter 3s fall 3 rise 5

- Restart the service

systemctl restart haproxy

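13.2. Storage server

Prepare a storage server, referred to as the "block storage" node. To save a machine it can share the database server (allocate 2 GB of memory).

- "Block storage" node (the database node in this setup)

- Add a new disk to the virtual machine, then run the command below so the kernel rescans and detects it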

echo "- - -" > /sys/class/scsi_host/host0/scan

- Install the LVM packages:

yum -y install lvm2 device-mapper-persistent-data

- Start the LVM metadata service

systemctl enable lvm2-lvmetad.service

systemctl restart lvm2-lvmetad.service

- Create the LVM physical volume /dev/sdb

pvcreate /dev/sdb

- Create the LVM volume group cinder-volumes

vgcreate cinder-volumes /dev/sdb

- Edit the LVM configuration file

Strip comments:

sed -i -e '/#/d' -e '/^$/d' /etc/lvm/lvm.conf

vim /etc/lvm/lvm.conf

Find the section below and modify it:

devices {

...

filter = [ "a/sdb/", "r/.*/"]

a means accept and r means reject: only the sdb disk is accepted.

- Install the packages:

yum -y install openstack-cinder targetcli python-keystone

- Edit the cinder.conf file

Strip comments:

sed -i -e '/#/d' -e '/^$/d' /etc/cinder/cinder.conf

vim /etc/cinder/cinder.conf

[DEFAULT]

my_ip = 192.168.99.106

transport_url = rabbit://openstack:[email protected]

auth_strategy = keystone

enabled_backends = lvm

glance_api_servers = http://openstackvip.com:9292

[backend]

[backend_defaults]

[barbican]

[brcd_fabric_example]

[cisco_fabric_example]

[coordination]

[cors]

[database]

connection = mysql+pymysql://cinder:[email protected]/cinder

[fc-zone-manager]

[healthcheck]

[key_manager]

[keystone_authtoken]

www_authenticate_uri = http://openstackvip.com:5000

auth_url = http://openstackvip.com:5000

memcached_servers = openstackvip.com:11211

auth_type = password

project_domain_name = default

user_domain_name = default

project_name = service

username = cinder

password = 123

[nova]

[oslo_concurrency]

lock_path = /var/lib/cinder/tmp

[oslo_messaging_amqp]

[oslo_messaging_kafka]

[oslo_messaging_notifications]

[oslo_messaging_rabbit]

[oslo_middleware]

[oslo_policy]

[oslo_reports]

[oslo_versionedobjects]

[privsep]

[profiler]

[sample_castellan_source]

[sample_remote_file_source]

[service_user]

[ssl]

[vault]

[lvm]

volume_driver = cinder.volume.drivers.lvm.LVMVolumeDriver

volume_group = cinder-volumes

target_protocol = iscsi

target_helper = lioadm

volume_backend_name = openstack-lvm

Set my_ip to the IP of the local host.

- Start the Block Storage volume service

systemctl enable openstack-cinder-volume.service target.service

systemctl restart openstack-cinder-volume.service target.service

- On the controller node

- List the volume services

openstack volume service list

- Attach the volume to an instance from the Volumes panel in the dashboard, then format it with mkfs inside the instance.

Format the volume as ext4:

mkfs.ext4 /dev/vdb

- Mount it inside the instance (the same volume cannot be attached to two instances at once)

mount /dev/vdb /data

Extend a volume

- After extending the volume, re-attach and re-mount it, then grow the file system online:

resize2fs /dev/vdb

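13.3. NFS as an OpenStack backend store

Used as a disk: in this setup each cinder storage server is configured with a single backend, either lvm or nfs. (Cinder itself accepts a comma-separated list in enabled_backends, but that is not configured here.)

Prepare a new virtual machine as the NFS server, with IP 192.168.99.105.

- On the new NFS server

- Configure the NFS server side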

yum -y install nfs-utils rpcbind

- Add a user

useradd nfsuser

id nfsuser

- Edit the exports file

vim /etc/exports

/nfsdata *(rw,all_squash,anonuid=1000,anongid=1000)

Create the directory and set ownership:

mkdir /nfsdata

chown nfsuser.nfsuser /nfsdata

anonuid and anongid must be the UID and GID of nfsuser (shown by id nfsuser).

- Restart the service

systemctl enable nfs

systemctl restart nfs

- On the controller node

- Edit the cinder configuration file to use NFS

vim /etc/cinder/cinder.conf

[DEFAULT]

enabled_backends = nfs

[nfs]

volume_backend_name = openstack-nfs

volume_driver = cinder.volume.drivers.nfs.NfsDriver

nfs_shares_config = /etc/cinder/nfs_shares.conf

nfs_mount_point_base = $state_path/mnt

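volume_backend_name: the backend name, used later when associating volume types

volume_driver: the driver

nfs_shares_config: path of the file that lists the NFS shares to mount

nfs_mount_point_base: base directory for the NFS mount points

- Create the NFS shares file and set its owner and group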

vim /etc/cinder/nfs_shares.conf

The file does not exist yet and must be created:

192.168.99.105:/nfsdata

- Set ownership

chown root.cinder /etc/cinder/nfs_shares.conf

- Restart the cinder service

systemctl restart openstack-cinder-volume.service

- Verify that the NFS share is mounted automatically

cinder service-list

- Associate volume types

Create the volume types:

cinder type-create lvm

cinder type-create nfs

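- Associate each volume type with its backend (the name must match volume_backend_name exactly, including case):

cinder type-key nfs set volume_backend_name=openstack-nfs

cinder type-key lvm set volume_backend_name=openstack-lvm

- An NFS-backed volume must be formatted with mkfs.ext4 as well (same as LVM, steps omitted).

- Check with fdisk. (NFS-backed volumes can be extended.)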

13.4. (Optional) Configure the backup service

- On the "block storage" node

- Install the packages:

yum -y install openstack-cinder

- Edit the cinder.conf file

vim /etc/cinder/cinder.conf

[DEFAULT]

backup_driver = cinder.backup.drivers.swift

backup_swift_url = SWIFT_URL

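Replace SWIFT_URL with the URL of the Object Storage service, which can be found with:

openstack catalog show object-store

- Start the Block Storage backup service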

systemctl enable openstack-cinder-backup.service

systemctl start openstack-cinder-backup.service

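14. Build a VPC (self-service network)

A Virtual Private Cloud (VPC) is an isolated network environment. Each VPC is logically isolated from the others, and you can choose your own IP address ranges, divide subnets, and configure route tables and gateways, which makes resource and application access both secure and simple.

This builds on the provider network configured earlier: instances reach the external provider network through a router provided by the self-service network.

14.1. Controller configuration

- On the controller

- Install the packages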

yum install -y openstack-neutron openstack-neutron-ml2 openstack-neutron-linuxbridge ebtables

- Edit the neutron.conf configuration file

vim /etc/neutron/neutron.conf

Change or add the following on top of the existing settings:

[DEFAULT]

core_plugin = ml2

service_plugins = router

allow_overlapping_ips = true

transport_url = rabbit://openstack:[email protected]

auth_strategy = keystone

notify_nova_on_port_status_changes = true

notify_nova_on_port_data_changes = true

Only the [DEFAULT] section changes.

- Configure the Modular Layer 2 (ML2) plug-in

vim /etc/neutron/plugins/ml2/ml2_conf.ini

[DEFAULT]

[ml2]

type_drivers = flat,vlan,vxlan

tenant_network_types = vxlan

mechanism_drivers = linuxbridge,l2population

extension_drivers = port_security

[ml2_type_flat]

flat_networks = provider

[securitygroup]

enable_ipset = true

[ml2_type_vxlan]

vni_ranges = 1:1000

- Configure the Linux bridge agent:

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = provider:eth0

[vxlan]

enable_vxlan = true

local_ip = 192.168.99.101

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

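- Configure the kernel parameters:

# Already present from the earlier steps; do not add them again. If they are missing, append with >> (a single > would overwrite the whole file):

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

Apply the settings: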

sysctl -p
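Since these two settings are written at several points in this guide, an idempotent append avoids both duplicate lines and accidental overwrites. A minimal sketch; the helper name ensure_line is hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: append LINE to FILE only if an identical line is not
# already present, so repeated runs never duplicate or overwrite settings.
ensure_line() {
    local file="$1" line="$2"
    grep -qxF -- "$line" "$file" 2>/dev/null || echo "$line" >> "$file"
}

# Example on the real host (as root):
#   ensure_line /etc/sysctl.conf "net.bridge.bridge-nf-call-iptables = 1"
#   ensure_line /etc/sysctl.conf "net.bridge.bridge-nf-call-ip6tables = 1"
#   sysctl -p
```

grep -x matches whole lines and -F treats the text literally, so the dots in the key names are not interpreted as wildcards.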

- Configure the layer-3 agent:

vim /etc/neutron/l3_agent.ini

[DEFAULT]

interface_driver = linuxbridge

- Restart the Compute API service:

systemctl restart openstack-nova-api.service

- Start the services and enable them at boot

systemctl enable neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

systemctl restart neutron-server.service neutron-linuxbridge-agent.service neutron-dhcp-agent.service neutron-metadata-agent.service

- Self-service networks also need the layer-3 agent enabled and started:

systemctl enable neutron-l3-agent.service

systemctl restart neutron-l3-agent.service

14.2. Compute node configuration

- On the compute node

- Configure the Linux bridge agent:

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

[DEFAULT]

[linux_bridge]

physical_interface_mappings = provider:eth0

[vxlan]

enable_vxlan = true

local_ip = 192.168.99.23

l2_population = true

[securitygroup]

enable_security_group = true

firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

- Restart the Compute service:

systemctl restart openstack-nova-compute.service

- Enable the Linux bridge agent at boot and restart it

systemctl enable neutron-linuxbridge-agent.service

systemctl restart neutron-linuxbridge-agent.service

- On the controller node

- Verify:

openstack network agent list

14.3. Creating the self-service network

- On the controller node

- Create the self-service network (requires the provider network)

openstack network create selfnetwork

Verify:

openstack network list

- Create the self-service subnet

openstack subnet create --network selfnetwork --dns-nameserver 8.8.8.8 --gateway 172.16.0.1 --subnet-range 172.16.0.0/16 selfnetwork-subnet

Command format:

openstack subnet create --network NETWORK_NAME \

--dns-nameserver 8.8.8.8 --gateway 172.16.1.1 \

--subnet-range 172.16.1.0/24 SUBNET_NAME
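
The `--gateway` address is normally chosen from inside `--subnet-range`, as in both examples above. The following is a minimal sketch of a pre-flight check for that relationship in plain shell arithmetic. `ip_to_int` and `ip_in_cidr` are hypothetical helper names, and the sketch handles IPv4 only.

```shell
# ip_to_int A.B.C.D: print the IPv4 address as a 32-bit integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1            # split the address into four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# ip_in_cidr IP CIDR: succeed (exit status 0) if IP lies inside CIDR, e.g. 172.16.0.0/16.
ip_in_cidr() {
    ip=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")     # part before the slash
    prefix=${2#*/}                 # part after the slash
    # Netmask with the top $prefix bits set.
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( ip & mask )) -eq $(( net & mask )) ]
}
```

For example, `ip_in_cidr 172.16.0.1 172.16.0.0/16 && echo ok` prints `ok`, while an address such as 172.17.0.1 fails the same check.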

- Create a router:

openstack router create router

- Add the self-service subnet to the router as an interface:

neutron router-interface-add router selfnetwork-subnet

- Set the router's gateway (requires the bridged provider network):

neutron router-gateway-set router provider
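
The two commands above use the legacy `neutron` client. The same two steps can also be sketched with the unified `openstack` client, as below. `run` and `attach_router` are hypothetical helper names, and `DRY_RUN` is an assumed convention for printing the commands instead of executing them.

```shell
# run CMD...: execute CMD, or only print it when DRY_RUN=1 (useful for review).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        printf '%s\n' "$*"
    else
        "$@"
    fi
}

# attach_router ROUTER SUBNET EXT_NET: plug the self-service subnet into the
# router, then set the router's gateway to the external (provider) network.
attach_router() {
    run openstack router add subnet "$1" "$2"
    run openstack router set --external-gateway "$3" "$1"
}
```

With the names used in this section the call would be `attach_router router selfnetwork-subnet provider`. Setting `DRY_RUN=1` first shows the exact commands without touching the cloud.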

- Configure Horizon to support layer-3 networking:

vim /etc/openstack-dashboard/local_settings

Modify this block:

OPENSTACK_NEUTRON_NETWORK = {

'enable_router': True,

'enable_quotas': True,

'enable_ipv6': True,

'enable_distributed_router': True,

'enable_ha_router': True,

'enable_lb': True,

'enable_firewall': True,

'enable_': True,

'enable_fip_topology_check': True,

...

- Check the router's status

openstack router show router | grep status

- Check the router's ports; there should be two entries

neutron router-port-list router

Verify that the external network can be pinged.
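
One way to run this check from the router itself: on the node that runs the L3 agent, each router normally lives in a network namespace named `qrouter-` followed by the router ID. The following is a minimal sketch. `router_netns` is a hypothetical helper name, and 8.8.8.8 is only an assumed ping target.

```shell
# router_netns ROUTER_ID: print the namespace name the L3 agent uses for that router.
router_netns() {
    printf 'qrouter-%s\n' "$1"
}

# Possible usage on the controller (as root, with admin credentials loaded):
#   rid=$(openstack router show router -f value -c id)
#   ip netns exec "$(router_netns "$rid")" ping -c 3 8.8.8.8
```

If the ping succeeds from inside the namespace but not from an instance, the problem is more likely in the instance's subnet or security group than in the router's gateway.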

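15. Implementing an internal/external network layout

This builds a layout similar to Alibaba Cloud ECS hosts, where each instance has two NICs on different subnets, so that internal and external traffic are kept separate.

15.1. Adding NICs to each virtual machine

15.2. Controller node configuration

- On the controller node

- Edit the configuration file linuxbridge_agent.ini

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Modify this section: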

[linux_bridge]

physical_interface_mappings = provider:eth0, external:eth1

- Edit the configuration file ml2_conf.ini

vim /etc/neutron/plugins/ml2/ml2_conf.ini

Modify this section:

[ml2_type_flat]

flat_networks = provider, external

- Restart the neutron services

systemctl restart neutron-linuxbridge-agent

systemctl restart neutron-server

15.3. Compute node configuration

- On the compute node

- Edit the configuration file

vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

Modify this section:

[linux_bridge]

physical_interface_mappings = provider:eth0, external:eth1

- Restart the neutron service

systemctl restart neutron-linuxbridge-agent

15.4. Creating the network and verifying it

- On the controller node

- Create the network

neutron net-create --shared --provider:physical_network external --provider:network_type flat external-net

- Create the subnet

neutron subnet-create --name external-subnet \

--allocation-pool start=172.16.23.200,end=172.16.23.220 \

--dns-nameserver 114.114.114.114 external-net 172.16.0.0/16

- Verify that the network and its subnet were created

neutron net-list

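16. Building OpenStack images

This section covers installing CentOS 7.2, CentOS 6.9, and Windows 2008 R2 x86_64 as KVM virtual machines, as well as building an image from the official GenericCloud 7.2.1511 image. The resulting disk files are uploaded to OpenStack Glance and used as images for creating virtual machines in bulk. The Windows 2008 guest gets the virtio paravirtualized drivers installed, so that network I/O and disk I/O are paravirtualized and faster.

CentOS 7 supports virtio paravirtualization out of the box, so no driver installation is needed. Virtio was originally written by Rusty Russell, a highly talented Australian programmer. It is an abstraction API layered on top of the hypervisor: the guest knows that it is running in a virtualized environment and cooperates with the hypervisor according to the virtio standard, which gives the guest better performance, especially for I/O. Many virtual machines today use virtio paravirtualized drivers for this reason.

What virtual machine image formats are there?

ISO

The ISO format is a disk image formatted with the read-only ISO 9660 file system commonly used for CDs and DVDs.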

OVF

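OVF (Open Virtualization Format) is a packaging format for virtual machines, defined by the Distributed Management Task Force (DMTF) standards group. An OVF package contains one or more image files and an .ovf XML metadata file with information about the virtual machine, and may contain other files as well.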

QCOW2

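The QCOW2 format is commonly used with the KVM hypervisor. It has some additional features over the raw format, such as:

- A sparse representation, so the image size is smaller.

- Support for snapshots.

Because qcow2 is sparse, qcow2 images are typically smaller than raw images. Smaller images upload faster, so converting a raw image to qcow2 before uploading is often quicker than uploading the raw file directly.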
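
A conversion of this kind can be done with `qemu-img convert`. The following is a minimal sketch; `to_qcow2` is a hypothetical wrapper name, and it assumes the source file's extension matches its format name (for example `disk.raw` for `raw`).

```shell
# to_qcow2 FORMAT FILE: convert FILE (in the given source format) to qcow2
# next to the original, and print the path of the new image on success.
to_qcow2() {
    fmt=$1
    src=$2
    dst="${src%.$fmt}.qcow2"       # disk.raw -> disk.qcow2
    qemu-img convert -f "$fmt" -O qcow2 "$src" "$dst" && printf '%s\n' "$dst"
}
```

For example, `to_qcow2 raw /tmp/disk.raw` writes `/tmp/disk.qcow2`. The same wrapper accepts other source formats that `qemu-img` understands, such as `vdi`.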

Raw

The raw image format is the simplest one, and is supported natively by both the KVM and Xen hypervisors.

VDI

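VirtualBox uses the VDI (Virtual Disk Image) format for image files. None of the OpenStack Compute hypervisors support VDI directly, so these files must be converted to a different format before they can be used with OpenStack.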

VHD

Microsoft Hyper-V uses the VHD (Virtual Hard Disk) format for images.

VHDX

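The version of Hyper-V that ships with Microsoft Server 2012 uses the newer VHDX format, which has some additional features over VHD, such as support for larger disk sizes and protection against data corruption during power failures.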

VMDK