PyTorch high-level library: pytorch-ignite

Using Pytorch-Ignite, a high-level library for PyTorch

Train a model in 8 lines of code, and add a PyTorch training progress bar in 2.

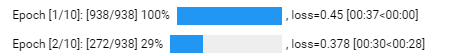

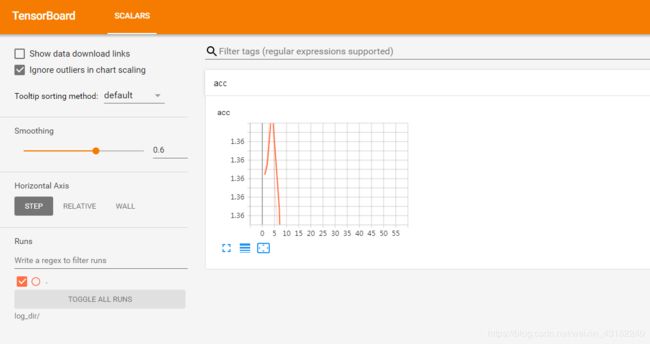

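Training models with this library is concise: you don't have to write a pile of forward-pass and backward-pass code, so the code is cleaner and the logic clearer. Even the progress bar comes ready-made. You choose which values update alongside it, such as loss or accuracy, and it is simpler than writing a tqdm loop yourself; the screenshots show what it looks like. It also works with TensorboardX for visualization.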
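For comparison, here is a minimal sketch of the plain-PyTorch loop that `create_supervised_trainer` saves you from writing. It assumes a classifier that outputs log-probabilities (as the `Net` in the example below does) and uses tiny synthetic data; `train_one_epoch` is a hypothetical helper name. As far as I know, the per-batch steps (zero the gradients, forward, loss, backward, optimizer step, return `loss.item()`) match what ignite's default supervised training step does, but check the ignite source for your version.

```python
import torch
from torch import nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, optimizer, loader, loss_fn, device="cpu"):
    """Hand-written training loop for one epoch; returns the per-batch losses."""
    model.train()
    losses = []
    for x, y in loader:
        x, y = x.to(device), y.to(device)
        optimizer.zero_grad()       # clear gradients from the previous step
        y_pred = model(x)           # forward pass
        loss = loss_fn(y_pred, y)   # compute the loss
        loss.backward()             # backward pass
        optimizer.step()            # update the parameters
        losses.append(loss.item())  # plain Python float, one per batch
    return losses


if __name__ == "__main__":
    torch.manual_seed(0)
    # Synthetic data (hypothetical): 64 samples, 4 features, 3 classes
    x = torch.randn(64, 4)
    y = torch.randint(0, 3, (64,))
    loader = DataLoader(TensorDataset(x, y), batch_size=16)
    model = nn.Sequential(nn.Linear(4, 3), nn.LogSoftmax(dim=-1))
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    losses = train_one_epoch(model, optimizer, loader, F.nll_loss)
    print(len(losses))  # 4 batches of 16
```

With ignite, this whole function collapses into one `create_supervised_trainer(...)` call plus `trainer.run(...)`.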

Installation

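Here we install from the Tsinghua PyPI mirror, passed to pip with the `-i` option; a domestic mirror is usually much faster when installing from inside China.

```shell
pip install -i https://pypi.tuna.tsinghua.edu.cn/simple pytorch-ignite

# Optional: tensorboardX writes the logs; tensorflow brings in the tensorboard command used to view them
pip install -i https://pypi.tuna.tsinghua.edu.cn/simple tensorboardX tensorflow
```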

Example

```python
import torch
from torch import nn
from torch.optim import SGD
from torch.utils.data import DataLoader
import torch.nn.functional as F
from torchvision.transforms import Compose, ToTensor, Normalize
from torchvision.datasets import MNIST

# ignite components
from ignite.engine import Events, create_supervised_trainer, create_supervised_evaluator
from ignite.metrics import Accuracy, Loss, RunningAverage
from ignite.contrib.handlers import ProgressBar  # progress bar (newer ignite versions: from ignite.handlers import ProgressBar)

# Visualization
from tensorboardX import SummaryWriter

# Create a writer. log_dir is where the event files that TensorBoard reads are written;
# the directory is created if it does not exist.
# Below, the writer is updated once per iteration; updating once per epoch is also an option.
writer = SummaryWriter(log_dir='log_dir')
```

```python
class Net(nn.Module):
    """Define the model: a small CNN for 28x28 MNIST digits."""

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = nn.Conv2d(10, 20, kernel_size=5)
        self.conv2_drop = nn.Dropout2d()
        self.fc1 = nn.Linear(320, 50)
        self.fc2 = nn.Linear(50, 10)

    def forward(self, x):
        x = F.relu(F.max_pool2d(self.conv1(x), 2))
        x = F.relu(F.max_pool2d(self.conv2_drop(self.conv2(x)), 2))
        x = x.view(-1, 320)  # flatten: 20 channels x 4 x 4
        x = F.relu(self.fc1(x))
        x = F.dropout(x, training=self.training)
        x = self.fc2(x)
        return F.log_softmax(x, dim=-1)  # log-probabilities, to pair with F.nll_loss
```
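The `320` in `x.view(-1, 320)` follows from the input size. Here is a quick plain-Python check of that arithmetic, assuming 28x28 MNIST inputs, convolutions with stride 1 and no padding, and 2x2 max pooling with stride 2 (PyTorch's default pooling stride equals the kernel size). `conv_out` and `pool_out` are hypothetical helpers.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output length of one spatial dimension after Conv2d (dilation 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, kernel):
    """Output length after max pooling with stride == kernel and no padding."""
    return (size - kernel) // kernel + 1


side = 28                                # MNIST images are 28x28
side = pool_out(conv_out(side, 5), 2)    # conv1 (k=5): 28 -> 24, pool: 24 -> 12
side = pool_out(conv_out(side, 5), 2)    # conv2 (k=5): 12 -> 8, pool: 8 -> 4
channels = 20                            # conv2 has 20 output channels
flat = channels * side * side
print(side, flat)  # 4 320
```

If you change the input size or the kernel sizes, `fc1`'s input size has to change to match.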

```python
def get_data_loaders(train_batch_size, val_batch_size):
    """Build DataLoaders for the MNIST handwritten-digit dataset.

    Open question: how would you turn your own image dataset into a DataLoader?
    """
    data_transform = Compose([ToTensor(), Normalize((0.1307,), (0.3081,))])

    # download=True fetches both the training and test files into root
    train_loader = DataLoader(
        MNIST(download=True, root=".", transform=data_transform, train=True),
        batch_size=train_batch_size,
        shuffle=True,
    )
    val_loader = DataLoader(
        MNIST(download=False, root=".", transform=data_transform, train=False),
        batch_size=val_batch_size,
        shuffle=False,
    )
    return train_loader, val_loader
```
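One common answer to the question in the docstring is to subclass `torch.utils.data.Dataset` and wrap it in a `DataLoader`. The sketch below is illustrative only: `MyImageDataset` and `to_float_tensor` are hypothetical names, and the random uint8 tensors stand in for images you would normally load from disk.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class MyImageDataset(Dataset):
    """Hypothetical dataset wrapping (image, label) pairs that are already in memory.

    For images on disk you would typically store file paths instead and open
    each file inside __getitem__.
    """

    def __init__(self, images, labels, transform=None):
        if len(images) != len(labels):
            raise ValueError("images and labels must have the same length")
        self.images = images
        self.labels = labels
        self.transform = transform

    def __len__(self):
        return len(self.images)

    def __getitem__(self, idx):
        img = self.images[idx]
        if self.transform is not None:
            img = self.transform(img)
        return img, self.labels[idx]


def to_float_tensor(img):
    """Stand-in for ToTensor(): uint8 HxW tensor -> float 1xHxW tensor in [0, 1]."""
    return img.float().div(255.0).unsqueeze(0)


if __name__ == "__main__":
    # Fake data: 10 grayscale 28x28 "images" with labels 0-9
    images = [torch.randint(0, 256, (28, 28)).to(torch.uint8) for _ in range(10)]
    labels = list(range(10))
    ds = MyImageDataset(images, labels, transform=to_float_tensor)
    loader = DataLoader(ds, batch_size=4, shuffle=True)
    x, y = next(iter(loader))
    print(x.shape, y.shape)  # torch.Size([4, 1, 28, 28]) torch.Size([4])
```

If your images are already organized as one sub-folder per class, `torchvision.datasets.ImageFolder(root, transform=...)` builds such a dataset for you.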

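```python
# The main part: training with ignite
def run(train_batch_size, val_batch_size, epochs, lr, momentum, log_interval):
    train_loader, val_loader = get_data_loaders(train_batch_size, val_batch_size)  # load the datasets
    model = Net()  # instantiate the network

    # Use the GPU if one is available, otherwise the CPU
    device = "cuda" if torch.cuda.is_available() else "cpu"

    optimizer = SGD(model.parameters(), lr=lr, momentum=momentum)
    trainer = create_supervised_trainer(model, optimizer, F.nll_loss, device=device)  # create the trainer
    evaluator = create_supervised_evaluator(
        model, metrics={"accuracy": Accuracy(), "nll": Loss(F.nll_loss)}, device=device
    )

    # The trainer's output at each iteration is the batch loss. RunningAverage smooths it and
    # stores it in trainer.state.metrics under the name 'loss'; the name is arbitrary, but the
    # progress bar and the handler below must use the same key.
    RunningAverage(output_transform=lambda x: x).attach(trainer, 'loss')

    # Progress bar
    pbar = ProgressBar()
    pbar.attach(trainer, ['loss'])  # metric names to display alongside the progress bar

    # Handlers are attached with a decorator. They can run at the end of every epoch
    # (Events.EPOCH_COMPLETED) or every iteration (Events.ITERATION_COMPLETED) and do whatever
    # you like: print values you want to see, adjust the learning rate, and so on.
    @trainer.on(Events.ITERATION_COMPLETED(every=log_interval))
    def log_tensorboard(engine):
        # Log the running loss attached above
        writer.add_scalar('train/loss', engine.state.metrics['loss'], engine.state.iteration)

    @trainer.on(Events.EPOCH_COMPLETED)
    def log_validation(engine):
        # Evaluate on the validation set once per epoch
        evaluator.run(val_loader)
        metrics = evaluator.state.metrics
        writer.add_scalar('val/accuracy', metrics['accuracy'], engine.state.epoch)
        pbar.log_message(
            f"Epoch {engine.state.epoch}: val accuracy {metrics['accuracy']:.4f}, val nll {metrics['nll']:.4f}"
        )

    trainer.run(train_loader, max_epochs=epochs)
    writer.close()


if __name__ == "__main__":
    run(64, 1000, 10, 0.01, 0.5, 10)
```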
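The `@trainer.on(...)` decorator is an event/handler pattern. The toy class below is a hypothetical illustration, not ignite's code: handlers are registered per event and called in order whenever the engine fires that event during `run`. ignite's real `Engine` uses the `Events` enum and keeps values such as the iteration and output on `engine.state`; this sketch uses plain strings and attributes to keep it short.

```python
from collections import defaultdict


class ToyEngine:
    """Minimal stand-in for an event-driven training engine (illustration only)."""

    def __init__(self, process_fn):
        self.process_fn = process_fn      # called once per batch, like a training step
        self.handlers = defaultdict(list)
        self.iteration = 0
        self.output = None

    def on(self, event):
        """Decorator that registers fn to run whenever `event` fires."""
        def decorator(fn):
            self.handlers[event].append(fn)
            return fn
        return decorator

    def fire(self, event):
        for fn in self.handlers[event]:
            fn(self)

    def run(self, data, max_epochs=1):
        for _ in range(max_epochs):
            for batch in data:
                self.iteration += 1
                self.output = self.process_fn(self, batch)
                self.fire("ITERATION_COMPLETED")
            self.fire("EPOCH_COMPLETED")
        return self


if __name__ == "__main__":
    engine = ToyEngine(lambda eng, batch: sum(batch))  # the "step" just sums the batch
    log = []

    @engine.on("ITERATION_COMPLETED")
    def record(eng):
        log.append((eng.iteration, eng.output))

    engine.run([[1, 2], [3, 4]], max_epochs=2)
    print(log)  # [(1, 3), (2, 7), (3, 3), (4, 7)]
```

This is why the training code stays short: the loop lives in the engine, and everything else (logging, progress bars, evaluation) hangs off events.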

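To start TensorBoard, open a new terminal window and run the command below, then open http://localhost:16006 in a browser:

```shell
tensorboard --logdir='log_dir/' --port=16006
```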

My favorite part is still the progress bar, `ProgressBar()`: it is extremely convenient.
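The `loss` shown next to the bar is the `RunningAverage` attached in `run`, not the raw batch loss. As far as I know, ignite's `RunningAverage` is an exponential moving average with a default `alpha=0.98` that takes the first value as-is; the plain-Python sketch below reproduces that update (the `running_average` name is hypothetical) so you can see why the displayed value moves smoothly. Verify the details against the ignite documentation for your version.

```python
def running_average(values, alpha=0.98):
    """Exponential moving average of a stream of values.

    The first value is taken as-is; afterwards avg = alpha * avg + (1 - alpha) * new.
    Returns the average after each step.
    """
    avg = None
    history = []
    for v in values:
        avg = v if avg is None else alpha * avg + (1 - alpha) * v
        history.append(avg)
    return history


if __name__ == "__main__":
    print(running_average([1.0, 0.0], alpha=0.5))  # [1.0, 0.5]
```

A larger `alpha` gives a smoother but slower-reacting value; with 0.98 a single noisy batch barely moves the displayed loss.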