[Learning Python] Example 9: Word Frequency Count

Today we will count the most frequent words in a book.

First, there is a question we have skipped over: what language is the book in, Chinese or English?

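If it is English, there are several problems to solve, such as letter case and punctuation. First we download a plain-English TXT file from the web (here we use Shakespeare's Hamlet), and then write the code: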

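```python
# Example 9: word frequency count
import string

def gettext():
    # "471228.txt" is the Hamlet text file downloaded above
    with open("471228.txt", "r", encoding="utf-8") as f:
        txt = f.read()
    txt = txt.lower()                  # fold case so "The" and "the" count as one word
    for ch in string.punctuation:      # replace every ASCII punctuation mark with a space
        txt = txt.replace(ch, " ")
    return txt

hamlettxt = gettext()
words = hamlettxt.split()              # split on runs of whitespace

counts = {}
for word in words:
    counts[word] = counts.get(word, 0) + 1   # get() returns 0 for a word not seen yet

items = list(counts.items())
items.sort(key=lambda x: x[1], reverse=True)  # sort (word, count) pairs by count, descending

for word, count in items[:10]:         # slicing avoids IndexError if there are fewer than 10 words
    print("{0:<10}{1:>5}".format(word, count))
```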

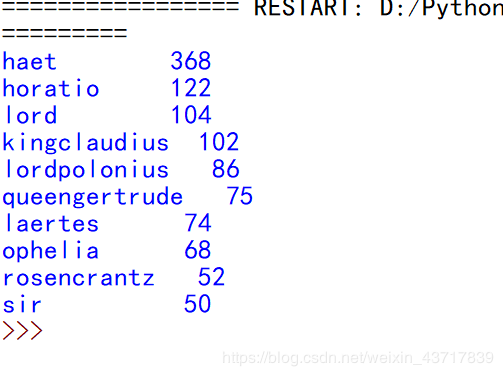

运行效果:
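The dictionary-plus-sort counting above is a common pattern; the standard library packages the same idea as `collections.Counter`, whose `most_common(n)` returns the n most frequent (word, count) pairs already sorted. A minimal sketch, run on a short made-up sample string instead of the Hamlet file (the sample text and the helper name `top_words` are hypothetical):

```python
import string
from collections import Counter

def top_words(text, n):
    """Return the n most frequent words in text as (word, count) pairs."""
    text = text.lower()
    # str.maketrans maps every punctuation character to a space in one pass,
    # doing the same job as the replace() loop in gettext()
    table = str.maketrans(string.punctuation, " " * len(string.punctuation))
    words = text.translate(table).split()
    return Counter(words).most_common(n)

sample = "To be, or not to be: that is the question. To die, to sleep!"
for word, count in top_words(sample, 3):
    print("{0:<10}{1:>5}".format(word, count))
```

`most_common()` keeps words with equal counts in the order they were first encountered, so for this sample the result is `[("to", 4), ("be", 2), ("or", 1)]`.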

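Similarly, take the Chinese classic Romance of the Three Kingdoms (《三国演义》) and count how many times each character's name appears. Compared with the English version, this is simpler in one respect: there is no letter case to deal with. Chinese text, however, has no spaces between words, so instead of `split()` we use an excellent third-party library, jieba, to segment the text into words. Following the same structure as above: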

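```python
# Example 9: word frequency count (Chinese version)
import jieba  # third-party library: pip install jieba

# the file is GB-encoded; gb18030 is a superset of GBK/GB2312
with open("三国演义.txt", "r", encoding="gb18030") as f:
    txt = f.read()
words = jieba.lcut(txt)  # segment the text into a list of words

counts = {}
for word in words:
    if len(word) == 1:   # skip single characters (mostly particles and punctuation)
        continue
    counts[word] = counts.get(word, 0) + 1

items = list(counts.items())
items.sort(key=lambda x: x[1], reverse=True)

for word, count in items[:15]:
    print("{0:<10}{1:>5}".format(word, count))
```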

The output, however, looks like this:

We can see that many of these words are not character names, so the program needs two improvements:

1. Remove the words in the top ten that are not names.

2. Map each person's different forms of address to a single name.
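Both improvements can also be expressed as data instead of an `elif` chain: a dictionary that maps each alias to a canonical name, and a set of excluded words removed after counting. A minimal sketch, assuming the text has already been segmented; the token list below is a hypothetical stand-in for `jieba.lcut` output, and `ALIASES` and `count_names` are illustrative names:

```python
from collections import Counter

# Alias -> canonical name (illustrative; mirrors the kind of mapping needed here)
ALIASES = {
    "诸葛亮": "孔明", "孔明曰": "孔明",
    "关公": "关羽", "关云长": "关羽",
    "玄德": "刘备", "玄德曰": "刘备",
    "孟德": "曹操", "丞相": "曹操",
}

def count_names(words, excludes):
    """Count multi-character words, merging aliases and dropping excluded words."""
    counts = Counter()
    for word in words:
        if len(word) == 1:                   # skip single characters
            continue
        counts[ALIASES.get(word, word)] += 1  # unknown words count as themselves
    for word in excludes:
        counts.pop(word, None)               # no KeyError if the word never appeared
    return counts

# Hypothetical pre-segmented tokens
tokens = ["玄德曰", "却说", "孔明", "之", "诸葛亮", "玄德", "将军", "孔明曰"]
counts = count_names(tokens, {"却说", "将军", "荆州"})
print(counts.most_common())
```

Adding a new alias then means adding one dictionary entry rather than another `elif` branch.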

This gives us version 2.0:

```python
# Example 9: word frequency count (version 2.0)
import jieba  # third-party library: pip install jieba

with open("三国演义.txt", "r", encoding="gb18030") as f:
    txt = f.read()

# High-frequency words to drop from the ranking
excludes = {"将军","却说","荆州","二人","不可","不能","如此","商议","如何",
            "主公","军士","吕布","左右","军马","引兵","次日","大喜","天下",
            "东吴","于是","不敢","人马","都督","一人","魏兵","陛下","今日"}
words = jieba.lcut(txt)

counts = {}
for word in words:
    if len(word) == 1:
        continue
    # merge different forms of address into one canonical name
    elif word == "诸葛亮" or word == "孔明曰":
        rword = "孔明"
    elif word == "关公" or word == "关云长":
        rword = "关羽"
    elif word == "玄德" or word == "玄德曰":
        rword = "刘备"
    elif word == "孟德" or word == "丞相":
        rword = "曹操"
    else:
        rword = word
    counts[rword] = counts.get(rword, 0) + 1

for word in excludes:
    counts.pop(word, None)  # unlike del, does not raise KeyError if the word never appeared

items = list(counts.items())
items.sort(key=lambda x: x[1], reverse=True)

for word, count in items[:10]:
    print("{0:<10}{1:>5}".format(word, count))
```
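One caveat about the `"{0:<10}{1:>5}"` format string used in all three programs: `<10` pads to 10 characters, but most terminals draw a Chinese character two columns wide, so the counts in the Chinese output may not line up. A minimal sketch that pads by display width instead, using `unicodedata.east_asian_width` from the standard library; the helper names `display_width` and `pad_right` are hypothetical, the (word, count) pairs are made up, and the two-columns-per-character assumption holds for most, but not all, terminal fonts:

```python
import unicodedata

def display_width(s):
    """Columns s occupies, counting Wide (W) and Fullwidth (F) characters as 2."""
    return sum(2 if unicodedata.east_asian_width(ch) in ("W", "F") else 1 for ch in s)

def pad_right(s, width):
    """Left-align s in a field of `width` display columns."""
    return s + " " * max(width - display_width(s), 0)

# Made-up sample pairs, mixing Chinese and English words
for word, count in [("孔明", 3), ("刘备", 2), ("hamlet", 1)]:
    print(pad_right(word, 10) + "{0:>5}".format(count))
```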