Elasticsearch quick-start tutorial for beginners (using Python)

1. Development environment and tools

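- Python: the Anaconda distribution is recommended because it saves setup effort (make sure the environment variables are configured). The scripts below also need the `elasticsearch` client package (pick a client whose major version matches the server, 2.x here), plus `requests`, `beautifulsoup4` and `lxml` for the scraper.

- Elasticsearch service: download and install it from the official website. This article uses version 2.4.4. Start the service from cmd; it listens on port 9200 by default. Installing the head plugin is recommended for monitoring the cluster and browsing data.

- Postman: Elasticsearch exposes a RESTful API, so it can be operated directly with POST and other HTTP requests.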
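Because Elasticsearch is driven through REST, every call the Python client makes is ultimately an HTTP request that could also be sent from Postman. The sketch below uses only the standard library to show how indexing one document maps to a `POST /{index}/{type}` request on port 9200, which in Elasticsearch 2.x stores the body with a server-generated `_id`. `build_index_request` is a hypothetical helper, and it only builds the request; it does not send it.

```python
import json


def build_index_request(index, doc_type, doc, host='localhost', port=9200):
    """Return (method, url, body) for indexing one document with an auto-generated _id.

    Hypothetical helper: it only assembles the request, nothing is sent.
    """
    url = 'http://{}:{}/{}/{}'.format(host, port, index, doc_type)
    body = json.dumps(doc)  # the request body is the document as JSON
    return 'POST', url, body


if __name__ == '__main__':
    method, url, body = build_index_request('recipes', 'salads', {'title': 'Salad', 'calories': 131})
    print(method, url)
    print(body)
```

In Postman, the same request is a POST to that URL with the body sent as raw JSON.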

2. Connecting to the Elasticsearch service from Python

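```python
from elasticsearch import Elasticsearch


def connect_elasticsearch():
    # Connect to the local node on the default HTTP port 9200
    _es = Elasticsearch([{'host': 'localhost', 'port': 9200}])
    # ping() returns True if the cluster responds, False otherwise
    if _es.ping():
        print('yay connected')
    else:
        print('not connected')
    return _es
```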
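A node that was just started from cmd may need a few seconds before it answers. During that window a single `ping()` can print `not connected` even though the setup is fine.

The sketch below assumes you want to retry instead. `wait_for_cluster` is a hypothetical helper. It takes any zero-argument callable returning True/False (for example `es.ping`) and tries it a few times with a pause in between.

```python
import time


def wait_for_cluster(ping, attempts=5, delay=2.0, sleep=time.sleep):
    """Call ping() up to `attempts` times, sleeping `delay` seconds between tries.

    `ping` is any callable returning True/False, e.g. Elasticsearch(...).ping.
    Returns True as soon as one call succeeds, False if every attempt fails.
    """
    for attempt in range(attempts):
        if ping():
            return True
        if attempt < attempts - 1:  # no pointless sleep after the last attempt
            sleep(delay)
    return False


if __name__ == '__main__':
    # Stand-in for es.ping: fails twice, then succeeds
    answers = iter([False, False, True])
    print(wait_for_cluster(lambda: next(answers), sleep=lambda s: None))
```

With a real client this would be `es = connect_elasticsearch()` followed by `wait_for_cluster(es.ping)`.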

3. Creating the index and type from Python

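```python
def create_index(es_object, index_name='recipes'):
    created = False
    # One shard and no replicas are enough for a single local test node
    settings = {
        "settings": {
            "number_of_shards": 1,
            "number_of_replicas": 0
        },
        "mappings": {
            # The mapping type must match the doc_type used when indexing ('salads')
            "salads": {
                # "strict": reject any document containing fields not declared below
                "dynamic": "strict",
                "properties": {
                    # "string" is the 2.x text type; Elasticsearch 5.x+ uses "text"/"keyword"
                    "title": {"type": "string"},
                    "submitter": {"type": "string"},
                    "description": {"type": "string"},
                    "calories": {"type": "integer"},
                    # Each ingredient is stored as an object {"step": "..."}
                    "ingredients": {
                        "type": "nested",
                        "properties": {
                            "step": {"type": "string"}
                        }
                    }
                }
            }
        }
    }
    try:
        if not es_object.indices.exists(index=index_name):
            # ignore=400 suppresses the "index already exists" error
            es_object.indices.create(index=index_name, ignore=400, body=settings)
            print('Created')
        created = True
    except Exception as ex:
        print(str(ex))
    finally:
        return created


es = connect_elasticsearch()
create_index(es)
```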
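With `"dynamic": "strict"`, Elasticsearch rejects a document that contains an undeclared field instead of adding that field to the mapping automatically. Inner objects inherit this setting.

The sketch below mimics the check locally, so a scraped record can be validated before it is sent. `unknown_fields` is a hypothetical helper, and `PROPERTIES` is a hand-copied list of the five fields named in the data description below. The helper only compares top-level keys, whereas Elasticsearch applies the strict rule to inner objects as well.

```python
# Hand-copied field list (assumption: mirrors the mapping's "properties")
PROPERTIES = {
    'title': {'type': 'string'},
    'submitter': {'type': 'string'},
    'description': {'type': 'string'},
    'calories': {'type': 'integer'},
    'ingredients': {'type': 'nested', 'properties': {'step': {'type': 'string'}}},
}


def unknown_fields(doc, properties):
    """Return the sorted top-level keys of `doc` that `properties` does not declare.

    A non-empty result means a "dynamic": "strict" mapping would reject the document.
    """
    return sorted(set(doc) - set(properties))


if __name__ == '__main__':
    record = {'title': 'Salad', 'calories': 131, 'rating': 4.5}
    print(unknown_fields(record, PROPERTIES))  # ['rating']
```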

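Data description:

The data used in this experiment are recipes scraped from a food website. The index is named `recipes` and the type `salads`. The fields are `title`, `submitter`, `description`, `calories` and `ingredients`.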
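Each scraped recipe becomes one document with these five fields. `make_record` is a hypothetical helper that sketches the intended shape: `calories` as an integer and `ingredients` as a list of `{"step": ...}` objects. The sample values are made up for illustration.

The assumption here is that the page shows calories as text like `"131 cals"`. The helper keeps the first number found, after removing thousands separators.

```python
import json
import re


def make_record(title, submitter, description, calories_text, steps):
    """Build one recipe document in the shape used for the 'salads' type.

    calories_text is raw page text such as "131 cals"; the first number in it
    (commas removed) is kept as an int, or 0 if there is none.
    Empty or whitespace-only ingredient lines are dropped.
    """
    match = re.search(r'\d+', (calories_text or '').replace(',', ''))
    calories = int(match.group()) if match else 0
    return {
        'title': title,
        'submitter': submitter,
        'description': description,
        'calories': calories,
        'ingredients': [{'step': s.strip()} for s in steps if s.strip()],
    }


if __name__ == '__main__':
    rec = make_record('Simple Salad', 'someone', 'A quick salad.', '131 cals',
                      ['1 cucumber', '  ', '2 tomatoes'])
    print(json.dumps(rec))
```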

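4. Scraping test data and writing it into the index and type created above

The script below imports the code from sections 2 and 3 as the module `create_index`, so that code is assumed to be saved as `create_index.py`. Importing the module connects to Elasticsearch, creates the index and exposes the client as `ci.es`.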

```python
import json
from time import sleep

import requests
from bs4 import BeautifulSoup

# Module with connect_elasticsearch(), create_index() and the es client (sections 2 and 3)
import create_index as ci

# Request headers used for every page fetch
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:64.0) Gecko/20100101 Firefox/64.0',
    'Pragma': 'no-cache'
}


def parse(u):
    # Defaults used when a field is missing from the page
    title = '-'
    submit_by = '-'
    description = '-'
    calories = 0
    ingredients = []
    rec = {}
    try:
        r = requests.get(u, headers=headers)
        if r.status_code == 200:
            soup = BeautifulSoup(r.text, 'lxml')
            # CSS selectors for the elements of a recipe page
            title_section = soup.select('.recipe-summary__h1')
            submitter_section = soup.select('.submitter__name')
            description_section = soup.select('.submitter__description')
            ingredients_section = soup.select('.recipe-ingred_txt')
            calories_section = soup.select('.calorie-count')
            if calories_section:
                # e.g. "131 cals" -> "131" (Elasticsearch coerces it to integer)
                calories = calories_section[0].text.replace('cals', '').strip()
            if ingredients_section:
                for ingredient in ingredients_section:
                    ingredient_text = ingredient.text.strip()
                    # Skip the "Add all ingredients to list" button text and empty entries
                    if 'Add all ingredients to list' not in ingredient_text and ingredient_text != '':
                        ingredients.append({'step': ingredient_text})
            if description_section:
                description = description_section[0].text.strip().replace('"', '')
            if submitter_section:
                submit_by = submitter_section[0].text.strip()
            if title_section:
                title = title_section[0].text
            rec = {'title': title, 'submitter': submit_by, 'description': description,
                   'calories': calories, 'ingredients': ingredients}
    except Exception as ex:
        print('Exception while parsing')
        print(str(ex))
    finally:
        return json.dumps(rec)


def store_record(elastic_object, index_name, record):
    try:
        # Index one document into the 'salads' type; Elasticsearch generates the _id
        outcome = elastic_object.index(index=index_name, doc_type='salads', body=record)
    except Exception as ex:
        print('Error in indexing data')
        print(str(ex))


if __name__ == '__main__':
    url = 'https://www.allrecipes.com/recipes/96/salad/'
    r = requests.get(url, headers=headers)
    if r.status_code == 200:
        soup = BeautifulSoup(r.text, 'lxml')
        # Links to the individual recipe pages on the salad listing page
        links = soup.select('.fixed-recipe-card__h3 a')
        for link in links:
            sleep(2)  # pause between requests to avoid hammering the site
            result = parse(link['href'])
            print(result)
            print('=======================================================')
            store_record(ci.es, 'recipes', result)
```
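The loop above sends one indexing request per recipe. For larger batches, Elasticsearch also offers the `_bulk` endpoint. Its body is newline-delimited JSON: an action line such as `{"index": {"_index": ..., "_type": ...}}`, then the document on the next line, and a final trailing newline.

The sketch below only builds that body with the standard library. The helper name `to_bulk_body` is hypothetical. It accepts either the JSON strings returned by `parse()` or plain dicts.

```python
import json


def to_bulk_body(index, doc_type, records):
    """Build an Elasticsearch bulk request body (newline-delimited JSON).

    Each record contributes an "index" action line followed by the document.
    The body ends with a newline; an empty input gives an empty string.
    """
    lines = []
    for record in records:
        # parse() returns JSON strings; decode so each document is re-encoded on one line
        doc = json.loads(record) if isinstance(record, str) else record
        lines.append(json.dumps({'index': {'_index': index, '_type': doc_type}}))
        lines.append(json.dumps(doc))
    return '\n'.join(lines) + '\n' if lines else ''
```

After collecting the `parse()` results in a list, the body could be sent with something like `ci.es.bulk(body=to_bulk_body('recipes', 'salads', results))`, or POSTed to `http://localhost:9200/_bulk`.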

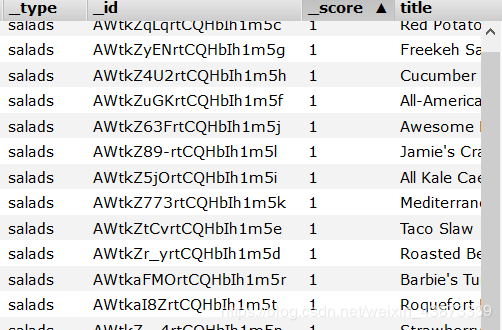

成功爬取并写入数据后,通过es head工具可查看索引类型中的数据信息如下:

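5. Simple searches from Python

```python
import json

import create_index as ci


def search(es_object, index_name, search):
    # Run a search request and print the raw response
    res = es_object.search(index=index_name, body=search)
    print(res)


if __name__ == '__main__':
    es = ci.connect_elasticsearch()
    if es is not None:
        # Match recipes whose calories value is 131
        search_object = {'query': {'match': {'calories': '131'}}}
        search(es, 'recipes', json.dumps(search_object))

        # Recipes with calories >= 20; only the title is returned from _source
        # (_id is metadata, not part of _source, and is always included in each hit)
        search_object = {'_source': ['title'], 'query': {'range': {'calories': {'gte': 20}}}}
        search(es, 'recipes', json.dumps(search_object))
```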

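The second query returns the ID and title of every recipe whose calories value is at least 20 (`gte` means "greater than or equal to").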
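The raw response printed by `search()` nests the matches under `hits.hits`. Each hit carries its `_id` next to the (filtered) `_source`.

The sketch below does two things: it builds the same kind of range query, and it pulls `(id, title)` pairs out of a response dict. Both helper names are hypothetical, and the sample response is hand-written for illustration rather than real output.

```python
def range_query(field, gte=None, lte=None, source=None):
    """Build a search body with a range query on `field`; unset bounds are omitted."""
    bounds = {}
    if gte is not None:
        bounds['gte'] = gte
    if lte is not None:
        bounds['lte'] = lte
    body = {'query': {'range': {field: bounds}}}
    if source is not None:
        body['_source'] = source  # restrict which _source fields are returned
    return body


def ids_and_titles(response):
    """Return (_id, title) for every hit in a search response dict."""
    return [(hit['_id'], hit.get('_source', {}).get('title'))
            for hit in response['hits']['hits']]


if __name__ == '__main__':
    print(range_query('calories', gte=20, source=['title']))
    sample = {'hits': {'total': 1, 'hits': [{'_id': 'AV1', '_source': {'title': 'Salad'}}]}}
    print(ids_and_titles(sample))
```

With a live client this becomes `res = es.search(index='recipes', body=range_query('calories', gte=20, source=['title']))` followed by `ids_and_titles(res)`.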