Summary of an SR Survey Paper

文章目录

- 论文:A Deep Journey into Super-resolution: A Survey

- 论文概要

- BackGround

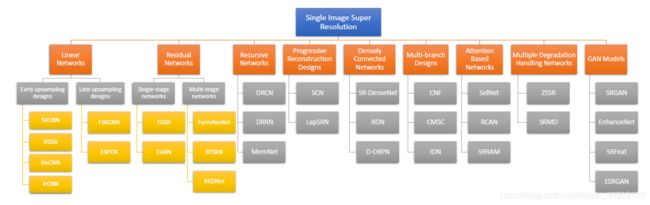

- SISR 分类

- 实验评估

- 未来方向

论文:A Deep Journey into Super-resolution: A Survey

作者:Saeed Anwar, Salman Khan, and Nick Barnes

论文概要

-

论文概要:

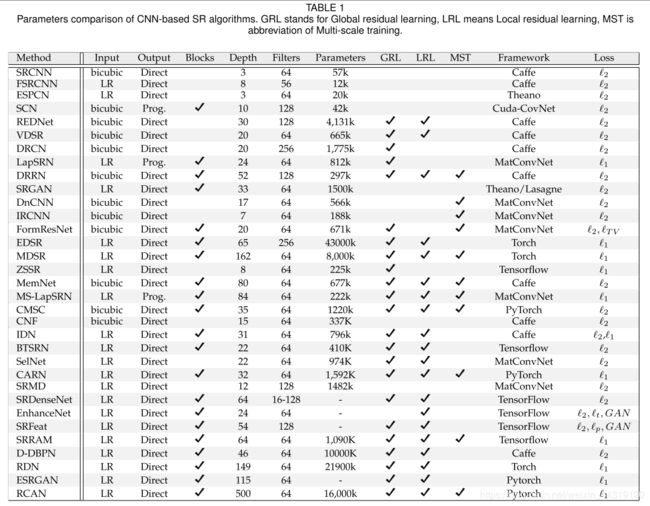

对比了近30个最新的超分辨率卷积网络在6个数据集上(3个经典的,3个最近提出的)的表现,来给SISR定下基准。分成9类。我们还提供了在网络复杂性、内存占用、模型输入和输出、学习细节、网络损失类型和重要架构差异方面的比较。 -

Applications of SISR:

- large computer displays

- HD television sets

- hand-held devices (mobile phones, tablets, cameras, etc.)

- object detection in scenes (particularly small objects)

- face recognition in surveillance videos

- medical imaging

- improving interpretation of images in remote sensing

- astronomical images

- forensics

Super-resolution is a classic problem, yet for several reasons it remains a challenging and open research topic in computer vision:

- SR is an ill-posed inverse problem. Multiple solutions exist for the same low-resolution image, so reliable prior information is typically required to constrain the solution space.

- The complexity of the problem increases with the up-scaling factor (×2, ×4, and ×8 become progressively harder).

- Assessing the quality of the output is not straightforward. Quality metrics such as PSNR and SSIM are only loosely correlated with human perception.

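Applications of deep learning in other AI areas:

- object classification and detection

- natural language processing

- image processing

- audio signal processing

Contributions of the paper:

- A comprehensive review of the state of the art in super-resolution

- A new taxonomy of super-resolution algorithms based on their structural differences

- A comprehensive analysis in terms of the number of parameters, algorithm settings, training details, and important architectural innovations

- A systematic evaluation of the algorithms on 6 SISR datasets

- A discussion of the current challenges in SR and an outlook on future research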

Background

Degradation process:

$$y = \Phi(x;\theta_\eta) \tag{1}$$

$x$: HR image

$y$: LR image

$\Phi$: degradation function

$\theta_\eta$: degradation parameters (scaling factor, noise)

In practice only $y$ is available. Neither the degradation process nor its parameters are known. Super-resolution tries to undo the degradation effects to obtain an estimate $\hat{x}$ that approximates the true HR image $x$:

$$\hat{x} = \Phi^{-1}(y, \theta_\varsigma) \tag{2}$$

$\theta_\varsigma$: parameters of $\Phi^{-1}$

The degradation process is unknown and very complex. It is affected by many factors, such as noise (sensor and speckle), compression, blur (defocus and motion), and other artifacts. For this reason, most research works prefer the following degradation model over (1):

$$y = (x \otimes k)\downarrow_s + n \tag{3}$$

$k$: blurring kernel

$x \otimes k$: convolution operation

$\downarrow_s$: downsampling operation with a scaling factor $s$

$n$: additive white Gaussian noise (AWGN) with standard deviation $\sigma$ (noise level)

The objective of image super-resolution is to minimize a data fidelity term associated with the model $y = x \otimes k + n$:

$$J(\hat{x},\theta_\varsigma,k) = \underbrace{\|x \otimes k - y\|}_{\text{data fidelity term}} + \underbrace{\alpha\Psi(x,\theta_\varsigma)}_{\text{regularizer}}$$

$\alpha$: coefficient balancing the data fidelity term and the image prior $\Psi(\cdot)$
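To make model (3) concrete, here is a minimal NumPy sketch that synthesizes an LR image from an HR one. It makes several assumptions: the image is single-channel, $k$ is an isotropic Gaussian blur kernel, borders use edge padding, and $\downarrow_s$ keeps every $s$-th pixel. The kernel size, $\sigma$ values, and function names are illustrative choices, not settings from the survey.

```python
import numpy as np

def gaussian_kernel(size: int = 7, sigma: float = 1.5) -> np.ndarray:
    """Isotropic Gaussian blur kernel k (odd size), normalized to sum to 1."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    k = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return k / k.sum()

def conv2d_same(x: np.ndarray, k: np.ndarray) -> np.ndarray:
    """2D convolution x ⊗ k with edge padding, so the output keeps x's shape."""
    kh, kw = k.shape
    xp = np.pad(x, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    windows = np.lib.stride_tricks.sliding_window_view(xp, (kh, kw))
    # Flip the kernel so this is a true convolution, not a correlation.
    return np.einsum("ijkl,kl->ij", windows, k[::-1, ::-1])

def degrade(x, k, s, noise_sigma, rng=None):
    """y = (x ⊗ k)↓s + n, with n ~ N(0, noise_sigma^2) (AWGN)."""
    rng = np.random.default_rng() if rng is None else rng
    blurred = conv2d_same(x, k)
    down = blurred[::s, ::s]  # keep every s-th pixel in each direction
    return down + rng.normal(0.0, noise_sigma, size=down.shape)
```

For example, `degrade(x, gaussian_kernel(), 4, 0.01)` maps a 64×64 HR array to a 16×16 LR array. Many SR pipelines use bicubic downsampling rather than plain subsampling.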

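Natural image priors

In natural image processing, many problems are inverse problems, meaning their solution is not unique. Examples include image denoising, deblurring, inpainting, and reconstruction. To narrow the solution space, or to better approximate the true solution, constraints must be added. These constraints come from the characteristics of natural images themselves, that is, from natural image priors. Exploiting these priors well allows a high-quality image to be recovered from a low-quality one, which is why natural image priors are worth studying.

Commonly used natural image priors include local smoothness, non-local self-similarity, non-Gaussianity, statistical properties, and sparsity.

Author: showaichuan

Link: https://www.jianshu.com/p/ed8a5b05c3a4

Source: Jianshu

Based on image priors, super-resolution methods can be roughly divided into the following categories: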

- prediction methods

- edge-based methods

- statistical methods

- patch-based methods

- deep learning methods

SISR taxonomy

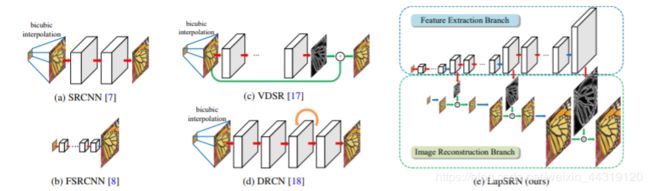

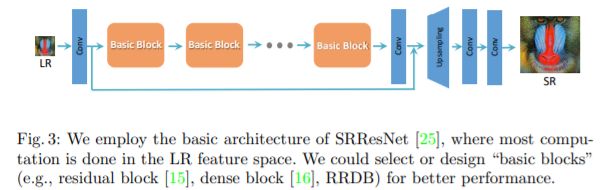

Linear networks

only a single path for signal flow without any skip connections or multiple-branchesnote:some linear networks learn to reproduce the residual image (the difference between the LR and HR images)

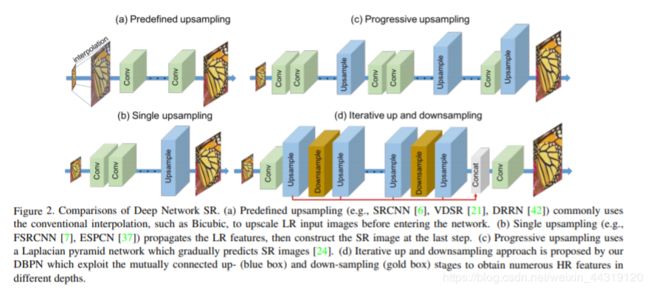

根据 up-sampling operation 可以分两类:

-

early upsampling

首先对LR输入进行上采样以匹配所需的HR输出大小,然后学习层次特征表示以生成输出

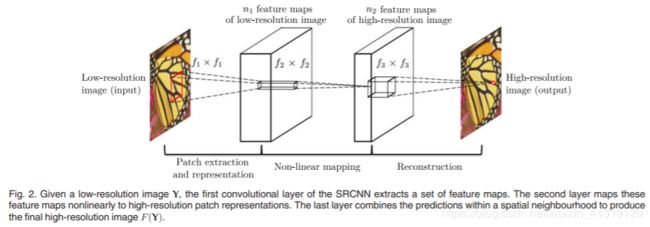

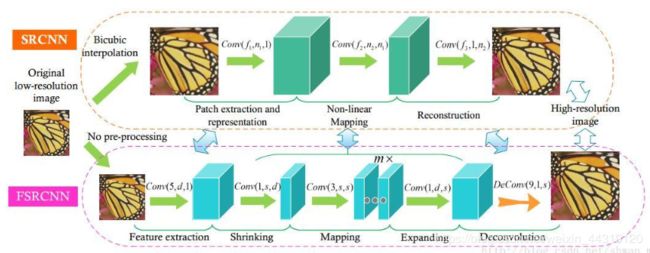

The commonly used upsampling method is bicubic interpolation.

- SRCNN (uses only convolutional layers for super-resolution)

- Datasets:

Training set:

HR images: synthesized by extracting non-overlapping dense patches of size $32\times32$ from the HR images

LR images: the LR input patches are first downsampled and then upsampled with bicubic interpolation to the same size as the high-resolution output image

- Layers: three convolutional and two ReLU layers

The first convolutional layer is called patch extraction or feature extraction. It creates feature maps from the input image.

The second convolutional layer is called non-linear mapping. It converts the feature maps into high-dimensional feature vectors.

The third convolutional layer aggregates the feature maps to output the final high-resolution image.

- Loss function: Mean Squared Error (MSE)
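The three-layer SRCNN pipeline can be sketched as a NumPy forward pass with random weights. The 9-1-5 kernel sizes and the 64/32 filter counts are assumptions taken from the original SRCNN paper, not from this summary. The sketch shows only the data flow and the MSE loss; it does not train anything.

```python
import numpy as np

def conv2d(x, w, b):
    """'Valid' multi-channel convolution as used in CNN layers (cross-correlation).
    x: (C_in, H, W), w: (C_out, C_in, kh, kw), b: (C_out,) -> (C_out, H-kh+1, W-kw+1)"""
    kh, kw = w.shape[2:]
    win = np.lib.stride_tricks.sliding_window_view(x, (kh, kw), axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", win, w) + b[:, None, None]

def relu(x):
    return np.maximum(x, 0.0)

def init_srcnn(rng, n1=64, n2=32, f1=9, f2=1, f3=5, channels=1):
    """Random weights for the 9-1-5 SRCNN layout (assumed from the original paper)."""
    def layer(c_out, c_in, f):
        return rng.normal(0.0, 1e-3, (c_out, c_in, f, f)), np.zeros(c_out)
    return [layer(n1, channels, f1), layer(n2, n1, f2), layer(channels, n2, f3)]

def srcnn_forward(params, x_up):
    """x_up: (C, H, W) LR image already bicubic-upsampled to the HR size."""
    (w1, b1), (w2, b2), (w3, b3) = params
    f = relu(conv2d(x_up, w1, b1))  # layer 1: patch / feature extraction
    f = relu(conv2d(f, w2, b2))     # layer 2: non-linear mapping
    return conv2d(f, w3, b3)        # layer 3: aggregation into the HR image

def mse(pred, target):
    """Mean squared error, the SRCNN training loss."""
    return float(np.mean((pred - target) ** 2))
```

Because this sketch uses 'valid' convolutions, a 33×33 input yields a 21×21 output. The HR target would therefore need to be center-cropped before the MSE is computed.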

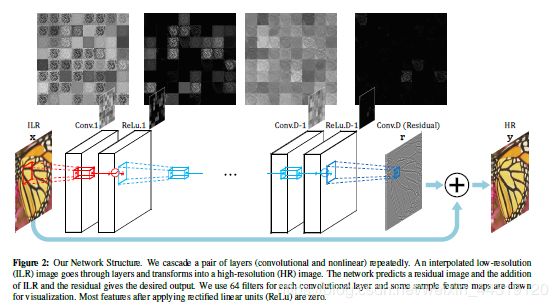

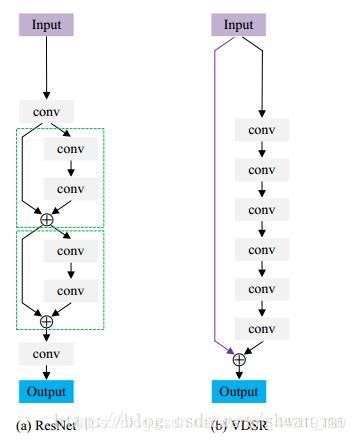

- VDSR

- Layers: a deep CNN architecture

(based on VGG-net, with fixed-size $3\times3$ convolutions in all network layers)

To avoid slow convergence in deep networks (specifically with 20 weight layers), they propose two effective strategies:

- Learn a residual mapping that generates the difference between the HR and LR images. This simplifies the target, so the network focuses only on high-frequency information.

- Clip gradients to the range $[-\theta, +\theta]$. This permits high learning rates, which speeds up training.

- Key claim: deeper networks can provide better contextualization and learn generalizable representations that can be used for multi-scale super-resolution
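The two VDSR training strategies can be sketched in NumPy. `predict_residual` is a hypothetical placeholder for the network, and $\theta$ is a free hyperparameter. The original VDSR paper additionally scales the clipping range by the current learning rate; this sketch leaves that out and uses the fixed range $[-\theta, +\theta]$ given above.

```python
import numpy as np

def vdsr_output(x_bicubic, predict_residual):
    """Residual learning: the network predicts r = HR - LR_up, and the output is LR_up + r."""
    return x_bicubic + predict_residual(x_bicubic)

def residual_target(hr, x_bicubic):
    """Training target for the residual branch (mostly high frequencies)."""
    return hr - x_bicubic

def clip_gradients(grads, theta):
    """Clip every gradient element into [-theta, +theta]."""
    return [np.clip(g, -theta, theta) for g in grads]

def sgd_step(params, grads, lr, theta):
    """One plain SGD update using clipped gradients (illustrative only)."""
    return [p - lr * g for p, g in zip(params, clip_gradients(grads, theta))]
```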

VDSR vs. ResNet

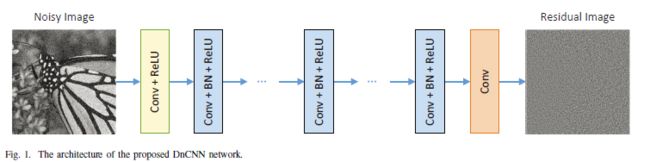

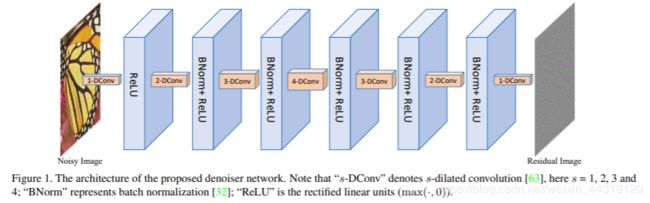

- DnCNN

- learns to predict a high-frequency residual directly instead of the latent super-resolved image

- Layers: similar to SRCNN

- depends heavily on the accuracy of noise estimation without knowing the underlying structures and textures present in the image

- computationally expensive (batch normalization operations after every convolutional layer)

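- IRCNN (Image Restoration CNN)

- Proposes a set of CNN-based denoisers that can be used jointly for several low-level vision tasks, such as image denoising, deblurring, and super-resolution

- Specifically, Half Quadratic Splitting (HQS) decouples the regularization term and the fidelity term of the observation model. The denoising prior is then learned discriminatively with a CNN, which offers strong modeling capacity and test-time efficiency.

- Layers: the CNN denoiser is a stack of 7 dilated convolution layers interleaved with batch normalization and ReLU non-linearity layers. Dilation helps model larger context by enclosing a larger receptive field.

- Residual image learning is performed in a similar manner to previous architectures (VDSR, DRCN, and DRRN)

- Small-sized training samples and zero padding are used to avoid boundary artifacts caused by the convolution operation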

late upsampling

Late-upsampling networks learn on the low-resolution input and upsample the features near the output of the network, which keeps the memory footprint low.

- FSRCNN

- Improves speed and quality over SRCNN

- Datasets: the 91-image dataset

Data augmentation such as rotation, flipping, and scaling is also employed to increase the number of images 19-fold

- Layers: four convolution layers (feature extraction, shrinking, non-linear mapping, and expansion layers) and one deconvolution layer

- The feature extraction step is similar to SRCNN. The difference lies in the input size and the filter size: the input to FSRCNN is the original patch, without upsampling.

- Shrinking layer: reduces the feature dimensions (and the number of parameters) by adopting a smaller filter size (i.e., f = 1)

- Non-linear mapping (the critical step): the filter size in the non-linear mapping layer is set to three, and the number of channels is kept the same as in the previous layer

- Expansion layers: the inverse of the shrinking step, increasing the number of dimensions

- Upsampling and aggregation deconvolution layer: the stride acts as the upscaling factor

- PReLU replaces the rectified linear unit (ReLU) after each convolutional layer

- Loss function: mean squared error
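The FSRCNN deconvolution layer can be illustrated with a single-channel transposed convolution in NumPy. Each LR pixel stamps a weighted copy of the kernel onto the output at intervals of `stride`, so the stride acts as the upscaling factor. This is a minimal sketch with no padding, bias, or multiple channels.

```python
import numpy as np

def conv_transpose2d(x, k, stride):
    """Single-channel transposed convolution without padding.
    Each input pixel adds x[i, j] * k to the output, with stamps placed
    `stride` pixels apart. Output size: (H - 1) * stride + kh."""
    h, w = x.shape
    kh, kw = k.shape
    out = np.zeros(((h - 1) * stride + kh, (w - 1) * stride + kw))
    for i in range(h):
        for j in range(w):
            out[i * stride:i * stride + kh, j * stride:j * stride + kw] += x[i, j] * k
    return out
```

Without padding the output has size $(H-1)s + k$. Cropping $k - s$ border pixels in total gives exactly $sH$.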

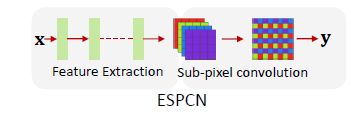

- ESPCN (Efficient Sub-Pixel Convolutional Neural Network)

A fast SR approach that can operate in real time on both images and videos

- Performs feature extraction in the LR space

- At the very end, it aggregates the LR feature maps and simultaneously projects them to the high-dimensional space to reconstruct the HR image

- The sub-pixel convolution used in this work is essentially similar to the transposed convolution (deconvolution) operation. A fractional kernel stride is used to increase the spatial resolution of the input feature maps.

- Loss function: $l_1$ loss

A separate upscaling kernel is used to map each feature map
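The final step of the sub-pixel convolution, often called pixel shuffle, rearranges $C r^2$ LR feature maps into a $C$-channel image that is $r$ times larger. The convolution that produces those $C r^2$ maps is omitted here. This is a minimal NumPy sketch; it assumes the channel ordering used by PyTorch's `torch.nn.PixelShuffle`.

```python
import numpy as np

def pixel_shuffle(x, r):
    """Rearrange (C*r*r, H, W) LR feature maps into a (C, H*r, W*r) image.
    Output pixel (c, h*r + i, w*r + j) comes from input channel
    c*r*r + i*r + j at position (h, w)."""
    crr, h, w = x.shape
    c = crr // (r * r)
    x = x.reshape(c, r, r, h, w)    # split channels into (c, i, j)
    x = x.transpose(0, 3, 1, 4, 2)  # reorder to (c, h, i, w, j)
    return x.reshape(c, h * r, w * r)
```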


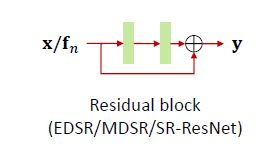

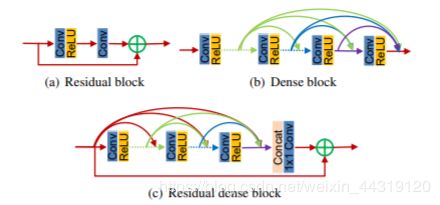

Residual Networks

Residual networks use skip connections in the network design. These connections avoid vanishing gradients and make deep networks more feasible to train. The algorithms learn the residue, i.e., the high frequencies between the input and the ground truth.

Based on the number of stages used in such networks, they fall into two categories:

Single-stage Residual Nets

- EDSR (Enhanced Deep Super-Resolution)

Modifies the ResNet architecture to work with the SR task

- Removes the batch normalization layers (from each residual block) and the ReLU activations (outside residual blocks), which is a substantial improvement

- Similar to VDSR, the single-scale approach is also extended to work on multiple scales

- Proposes the Multi-scale Deep SR (MDSR) architecture, which reduces the number of parameters by sharing most of them across scales

- Scale-specific layers are applied in parallel only near the input and output blocks to learn scale-dependent representations

- Data augmentation (rotations and flips) is used to create a 'self-ensemble': transformed inputs are passed through the network, reverse-transformed, and averaged to create a single output

- Better performance compared to SRCNN, VDSR, and SRGAN

- Loss function: $l_1$ loss

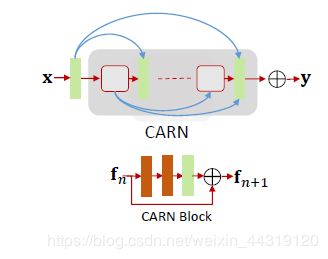

- CARN (Cascading Residual Network)

- Differs from other models in that it has local and global cascading modules

- The features of intermediate layers are cascaded and aggregated onto a $1\times1$ convolutional layer

- The local cascading connections are identical to the global ones, except that their blocks are simple residual blocks

- Datasets: $64\times64$ patches from BSD, Yang et al., and DIV2K, with data augmentation

- Loss function: $l_1$ loss

- Adam is used for optimization with an initial learning rate of $10^{-4}$, which is halved every $4\times10^5$ steps
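The CARN learning-rate schedule is a simple step decay. Here is a sketch; the function name is illustrative.

```python
def carn_learning_rate(step: int, base_lr: float = 1e-4, period: int = 400_000) -> float:
    """Step decay: start at base_lr and halve it after every `period` steps."""
    return base_lr * 0.5 ** (step // period)
```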


Multi-stage Residual Nets

composed of multiple subnets that are generally trained in succession (第一个子网通常预测粗特征,而其他子网改进了初始预测)

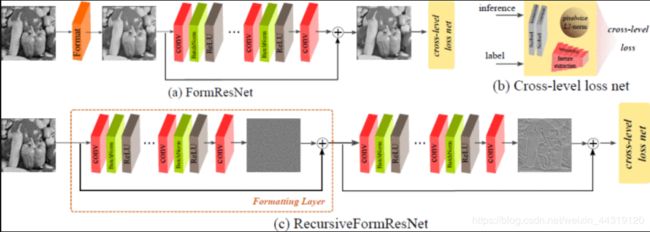

encoder-decoder designs(first downsample the input using an encoder and then perform upsampling via a decoder)(hence two distinct stages)- FormResNet

composed of two networks, both of which are similar to DnCNN,the difference lies in the loss layers

- The first network(Formatting layer格式化层)

Loss = Euclidean loss + perceptual loss

The classical algorithms such as BM3D can also replace this formatting layer - The second deep network (DiffResNet)

第二层网络的输入取自第一层网络 - Formatting layer removes high-frequency corruption in uniform areas

DiffResNet learns the structured regions

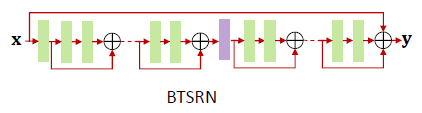

- BTSRN (Balanced Two-Stage Residual Networks)

Composed of a low-resolution stage and a high-resolution stage

- Low-resolution stage:

the feature maps have a smaller size, the same as the input patch

(the feature maps are then upsampled by deconvolution and nearest-neighbor upsampling)

- High-resolution stage:

the upsampled feature maps are fed into the high-resolution stage

- A residual block variant called projected convolution is employed in both the low-resolution and the high-resolution stages

The residual block uses a $1\times1$ convolutional layer as a feature-map projection to reduce the input size of the $3\times3$ convolutional features

The LR stage has six residual blocks and the HR stage has four

- Datasets: DIV2K

During training, the images are cropped to $108\times108$ patches and augmented with flipping and rotation

- Optimization is performed with Adam

- The residual block takes 128 feature maps as input and outputs 64

- Loss function: $l_2$ loss

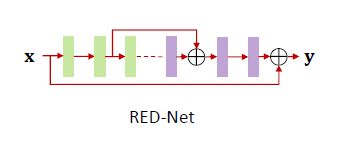

- REDNet (Residual Encoder-Decoder Network)

Composed of convolutional and symmetric deconvolutional layers

(ReLU is added after each convolutional and deconvolutional layer)

- The convolutional layers

extract feature maps while preserving object structures and removing degradations

- The deconvolutional layers

reconstruct the missing details of the images

- Skip connections are added between each convolutional layer and its symmetric deconvolutional layer

The feature maps of a convolutional layer are summed with the output of the mirrored deconvolutional layer, and a non-linear rectification follows

- Input: bicubic-interpolated images

Output: high-resolution image

The network is end-to-end trainable. It converges by minimizing the $l_2$-norm between the system output and the ground truth.

- Three variants of the REDNet architecture are proposed, varying the number of convolutional and deconvolutional layers

The best-performing architecture has 30 weight layers, each with 64 feature maps

- Datasets: the luminance channel of the Berkeley Segmentation Dataset (BSD) is used to generate the training set

Ground truth: patches of size $50\times50$

Input patches: obtained by downsampling the ground-truth patches and restoring them to the original size with bicubic interpolation

- Loss function: mean squared error (MSE)

- The input and output patch sizes are $9\times9$ and $5\times5$, respectively

These patches are normalized by their mean and variance, which are later added back to the corresponding restored high-resolution output

The kernel has a size of $5\times5$ with 128 feature channels


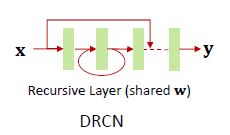

Recursive networks

These networks employ recursively connected convolutional layers or recursively linked units. The main motivation behind these designs is to progressively decompose the harder SR problem into a set of simpler SR problems.

- DRCN (Deep Recursive Convolutional Network)

One advantage of this technique is that the number of parameters stays constant as more recursions are added

Composed of three smaller networks:

- Embedding network: converts the input (either a grayscale or a color image) to feature maps

- Inference net: performs super-resolution

It analyzes image regions by recursively applying a single layer (consisting of convolution and ReLU)

The size of the receptive field increases after each recursion

The output of the inference net is high-resolution feature maps

- Reconstruction net: transforms the high-resolution feature maps to grayscale or color
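The key property of DRCN's inference net is that one layer is reused at every recursion. This can be sketched in NumPy with a single-channel $3\times3$ convolution. The single channel, zero padding, and weights are simplifying assumptions. The point is that the parameters `w, b` do not grow with the number of recursions, while the receptive field grows by 2 pixels per recursion.

```python
import numpy as np

def conv3x3_same(x, w, b):
    """Single-channel 3x3 convolution (cross-correlation) with zero padding."""
    win = np.lib.stride_tricks.sliding_window_view(np.pad(x, 1), (3, 3))
    return np.einsum("ijkl,kl->ij", win, w) + b

def drcn_inference(h0, w, b, recursions):
    """Apply the SAME conv + ReLU layer `recursions` times.
    Returns every intermediate state. (w, b) are shared, so the
    parameter count is independent of `recursions`."""
    states = [h0]
    for _ in range(recursions):
        states.append(np.maximum(conv3x3_same(states[-1], w, b), 0.0))
    return states[1:]

def receptive_field(recursions, kernel=3):
    """Receptive field (in pixels) after `recursions` stacked kernel x kernel layers."""
    return 1 + recursions * (kernel - 1)
```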

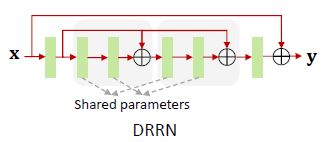

- DRRN (Deep Recursive Residual Network)

A deep CNN model with conservative parametric complexity

- A deeper architecture with as many as 52 convolutional layers

- At the same time, the network complexity is reduced

This is achieved by combining residual image learning with local identity connections between small blocks of layers in the network

This parallel information flow enables stable training of deeper architectures

- DRRN uses recursive learning, replicating a basic skip-connection block several times to form a multi-path network block

Because parameters are shared among the replications, memory cost and computational complexity are significantly reduced

- The standard SGD optimizer is used

- The loss layer is based on the MSE loss

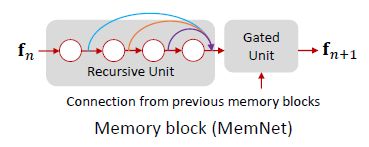

- MemNet (Memory Network)

MemNet can be broken down into three parts, similar to SRCNN

- (First part) feature extraction block:

extracts features from the input image

- (Second part, which plays the crucial role):

consists of a series of memory blocks

memory block = a recursive unit + a gate unit

- The recursive part is similar to ResNet

It is composed of two convolutional layers with a pre-activation mechanism and dense connections to the gate unit

- Each gate unit is a convolutional layer with a $1\times1$ kernel


- Loss function: MSE

- Datasets: 200 images from BSD and 91 images from Yang et al.

- The network consists of six memory blocks with six recursions. MemNet has 80 layers in total.

- MemNet has also been applied to other image restoration tasks, such as image denoising and JPEG deblocking


Progressive reconstruction designs

To deal with large factors,predict the output in multiple steps i . e . i.e. i.e. × 2 \times 2 ×2 followed by × 4 \times 4 ×4

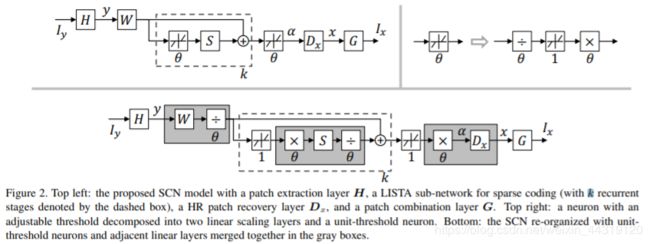

(CNN算法可一步预测输出;但是,对于大比例因子而言,这可能不可行)- SCN(sparse coding-based network)基于稀疏编码的网络

将稀疏编码的优点与深度神经网络的领域知识相结合,以获得一个紧凑的模型并提高性能

mimics a Learned Iterative Shrinkage and Thresholding Algorithm (LISTA) network to build a multi-layer neural network

- the first convolutional layer extracts features from the low-resolution patches which are then fed into a LISTA network.

- the LISTA network consists of a finite number of recurrent stages(to obtain the sparse code for each feature)

LISTA阶段由两个线性层和一个非线性层组成,其中激活函数具有一个阈值(threshold),该阈值在训练过程中被学习/更新。

为了简化训练,将非线性神经元分解为两个(linear scaling layers)线性标度层和一个(unit-threshold neuron)单位阈值神经元

两个尺度层是对角矩阵,它们互为倒数,例如,如果存在乘法尺度层,则在阈值单位之后进行除法 - 在LISTA网络之后,将(sparse code)稀疏编码与(high-resolution dictionary)高分辨率字典相乘,在连续的线性层中重构原始的高分辨率patch

- 最后一步,再次使用线性层,将高分辨率的patch放置在图像的原始位置,获得高分辨率的输出。
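The LISTA recurrence that SCN builds on can be sketched in NumPy, together with the scaling-layer decomposition of the threshold neuron. The update $z_{t+1} = h_\theta(Wx + Sz_t)$ is the standard LISTA form. Here `W`, `S`, and `theta` are given rather than learned, and the number of stages is arbitrary.

```python
import numpy as np

def soft_threshold(u, theta):
    """h_theta(u) = sign(u) * max(|u| - theta, 0), the shrinkage non-linearity."""
    return np.sign(u) * np.maximum(np.abs(u) - theta, 0.0)

def lista(x, W, S, theta, stages):
    """Unrolled LISTA: z_0 = h_theta(W x), z_{t+1} = h_theta(W x + S z_t)."""
    b = W @ x
    z = soft_threshold(b, theta)
    for _ in range(stages):
        z = soft_threshold(b + S @ z, theta)
    return z

def scaled_threshold(u, theta):
    """SCN-style decomposition: scale by 1/theta, apply a unit threshold,
    then scale back by theta (the two scalings are reciprocals)."""
    return theta * soft_threshold(u / theta, 1.0)
```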

- LapSRN (Deep Laplacian Pyramid Super-Resolution Network)

Consists of three sub-networks that progressively predict the residual images up to a factor of $\times8$

The residual image of each sub-network is added to the input LR image to obtain the SR image

- The output:

(first sub-network) a residue of $\times2$

(second sub-network) a residue of $\times4$

(last sub-network) a residue of $\times8$

These residual images are added to the correspondingly upsampled images to obtain the final super-resolved images

- The residual prediction branch is called feature extraction. The addition of the bicubic images and the residue is called the image reconstruction branch.

- The LapSRN network consists of three types of elements: convolutional layers, leaky ReLU, and deconvolutional layers

- Loss function: Charbonnier, a differentiable variant of the $l_1$ loss that can handle outliers

It is applied at every sub-network, resembling a multi-loss structure

- The filter sizes for the convolutional and deconvolutional layers are $3\times3$ and $4\times4$, with 64 channels each

- Datasets: images from Yang et al. and 200 images from the BSD dataset

- They also propose a single model called Multi-scale (MS) LapSRN that jointly learns to handle multiple SR scales. A single MS-LapSRN model outperforms three separately trained models. One explanation is that the single model exploits features shared across scales, which helps it obtain more accurate results.
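Here is a NumPy sketch of the Charbonnier penalty and LapSRN's per-level multi-loss. The value $\varepsilon = 10^{-3}$ is an assumed value. Each pyramid target is also assumed to be the HR image resized to the matching level.

```python
import numpy as np

def charbonnier(pred, target, eps=1e-3):
    """Charbonnier penalty: mean of sqrt((pred - target)^2 + eps^2).
    Close to l1 for large errors, but differentiable at zero error."""
    return float(np.mean(np.sqrt((pred - target) ** 2 + eps ** 2)))

def lapsrn_multi_loss(preds, targets, eps=1e-3):
    """Sum of Charbonnier losses over the pyramid levels (x2, x4, x8)."""
    return sum(charbonnier(p, t, eps) for p, t in zip(preds, targets))
```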


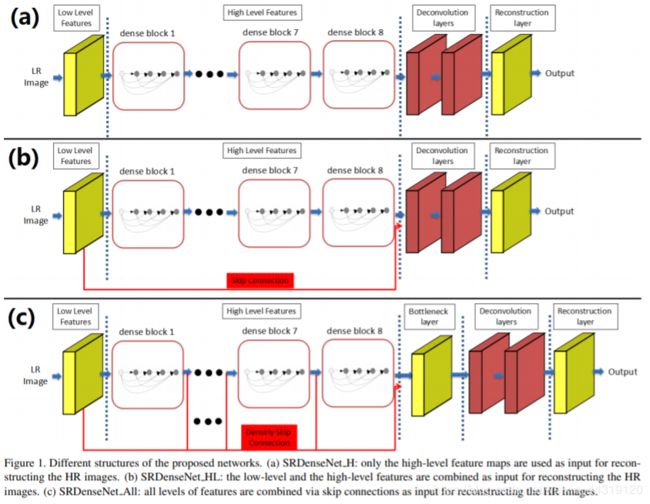

Densely Connected Networks

DenseNet architecture

The main motivation of this design is to combine the hierarchical cues available along the network depth, which yields higher flexibility and richer feature representations.

- SR-DenseNet

Based on DenseNet, which uses dense connections between layers (each layer directly operates on the outputs of all previous layers)

- This information flow from low to high layers avoids the vanishing gradient problem, makes it possible to learn compact models, and speeds up training

- Towards the rear of the network, SR-DenseNet uses a couple of deconvolution layers to upscale the inputs

- Three variants of SR-DenseNet:

- A sequential arrangement of dense blocks followed by deconvolution layers

In this variant, only high-level features are used to reconstruct the final SR image

- Low-level features from the initial layers are combined before the final reconstruction

Skip connections are used to combine low-level and high-level features

- All features are combined through multiple skip connections between the low-level features and the dense blocks, allowing a direct flow of information for better HR reconstruction

Because complementary features are encoded at multiple stages in the network, combining all feature maps gives the best performance

- Loss function: MSE ($l_2$ loss)

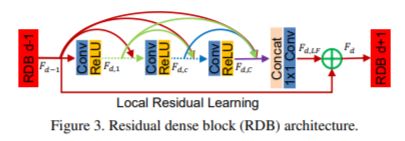

- RDN (Residual Dense Network)

Combines residual skip connections (inspired by SR-ResNet) with dense connections (inspired by SR-DenseNet)

The main motivation is to make full use of hierarchical feature representations to learn local patterns

- Residual connections are introduced at two levels: local and global

- (At the local level) A novel residual dense block (RDB) is proposed. The input of each block is passed to all layers in the RDB and also added to the block's output, so that each block focuses more on residual patterns.

Dense connections quickly produce high-dimensional outputs, so each RDB uses local feature fusion with a $1\times1$ convolution to reduce the dimensionality

- (At the global level) The outputs of multiple RDBs are fused by concatenation and a $1\times1$ convolution. Global residual learning is then performed to combine features from multiple blocks in the network.

- The residual connections help stabilize network training and result in an improvement over SR-DenseNet

- Loss function: $l_1$ loss

- $32\times32$ patches are randomly selected in each batch for training

- Data augmentation by flipping and rotation serves as a regularization measure

- The authors also experiment with different forms of degradation (noise and artifacts) in the LR images. The method shows good resilience against such degradation and recovers good SR images.

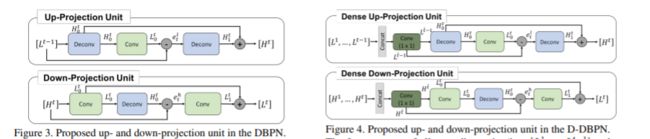

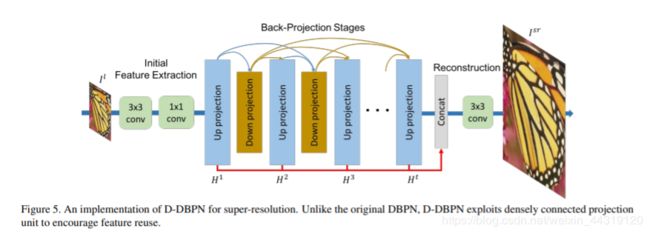

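- D-DBPN (Dense Deep Back-Projection Network)

Draws inspiration from traditional SR methods, which iteratively perform back-projection to learn the feedback error signal between LR and HR images

The motivation is that a purely feed-forward approach is not the best way to model the mapping from LR to HR images. A feedback mechanism can greatly help achieve better results.

- Comprises a series of up- and down-sampling layers that are densely connected with each other

The HR images from multiple depths of the network are combined to obtain the final output

- An important feature of this design is that it combines the upsampling outputs for the input feature map with a residual signal

Adding the residual signal to the upsampled feature map provides error feedback and forces the network to focus on fine details

- Loss function: $l_1$ loss

- High computational complexity ($\sim$10 million parameters for $\times4$ SR). A lower-complexity version of the final model is also proposed, with a slight drop in performance.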
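The back-projection idea behind D-DBPN can be sketched in NumPy as an up-projection unit. In the real network the three operators are learned (de)convolutions. Here they are passed in as callables, with nearest-neighbour upsampling and average pooling as illustrative stand-ins.

```python
import numpy as np

def nearest_up(x, s):
    """Nearest-neighbour upsampling by an integer factor s."""
    return np.kron(x, np.ones((s, s)))

def avg_down(x, s):
    """s x s average pooling (assumes H and W are divisible by s)."""
    h, w = x.shape
    return x.reshape(h // s, s, w // s, s).mean(axis=(1, 3))

def up_projection(l_prev, up0, down0, up1):
    """DBPN-style up-projection unit with three separate (normally learned) operators:
    H0 = up0(L), L0 = down0(H0), e = L0 - L, H1 = up1(e), output = H0 + H1."""
    h0 = up0(l_prev)
    l0 = down0(h0)
    e = l0 - l_prev  # back-projection error, measured in LR space
    h1 = up1(e)      # error mapped back to HR space
    return h0 + h1
```

In the toy check below, the first upsampler deliberately underestimates by half. The back-projected error, mapped by a third operator with negative weights, restores the intended output. In the actual network these signs and weights are learned.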


Multi-branch designs

多分支网络的目标是在多个上下文范围(multiple context scales)内获得一组不同的特性,然后将这些互补信息融合在一起,得到更好的HR重构。

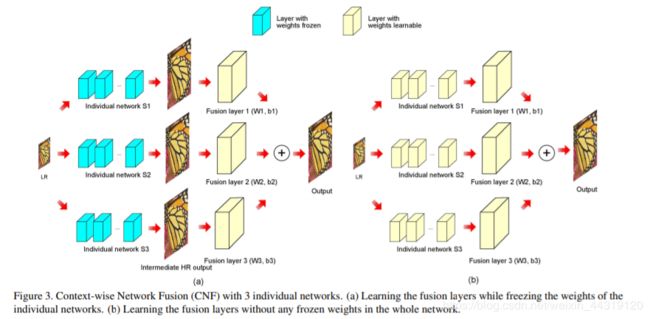

这种设计还支持多路径信号流,从而在训练过程中更好地进行前向和后向的信息交换- CNF(Context-wise Network Fusion)

融合多个卷积神经网络实现图像超分辨率

每个SRCNN都由不同数量的层构成,然后,每个SRCNN的输出通过一个单独的卷积层传递,最终使用sum-pooling将它们融合在一起

- DataSets:20 million patches collected from Open Image Dataset

The size of each patch is 33 × 33 33 \times 33 33×33 pixels of luminance channel only - (First)each SRCNN is trained individually (epochs = 50 ,learning rate =1e-4 )

(then)the fused network is trained (epochs = 10 ,learning rate =1e-4 ) - 这种渐进的学习策略类似于课程学习,从简单的任务开始,然后转向更复杂的任务,联合优化多个子网,以实现改进的SR。

- Loss Function:Mean square error

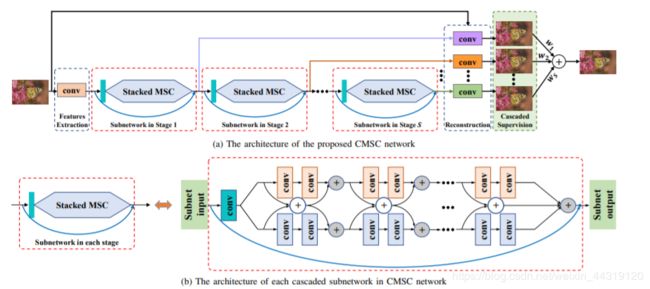

- CMSC(Cascaded multi-scale cross-network)级联多尺度交叉网络

composed of a feature extraction layer, cascaded subnets, and a reconstruction network

- (feature extraction layer) performs the same function as mentioned for the cases of SRCNN , FSRCNN

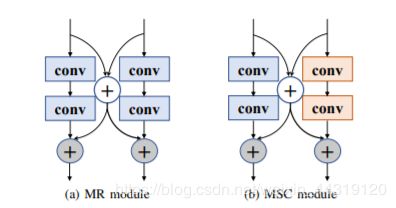

- (cascaded subnets) Each subnet is composed of merge-and-run (MR) blocks

每个MR块由两个并行的分支组成,每个分支有两个卷积层,每个分支的(residual connections)剩余连接累积在一起,然后分别添加到两个分支的输出中

CMSC的每个子网均由四个MR块组成,这些MR块具有 3 × 3 3\times3 3×3、 5 × 5 5\times5 5×5和 7 × 7 7\times7 7×7的不同接收字段,以多个尺度捕获上下文信息

MR块中的每个卷积层后面都是batch normalization和Leaky-ReLU - (last reconstruction layer) generates the final output

- Loss Function: l 1 l_{1} l1 (使用平衡项将中间输出与最终输出组合在一起)

- Input : 使用双三次插值对网络的输入进行向上采样,patch的大小为 41 × 41 41\times41 41×41

- 该模型使用与VDSR相似的291幅图像进行训练,初始学习率为 1 0 − 1 10^{-1} 10−1,每10个epochs 学习后每十世总共50时代

- 与EDSR及其变体MDSR相比,CMSC的性能有所滞后

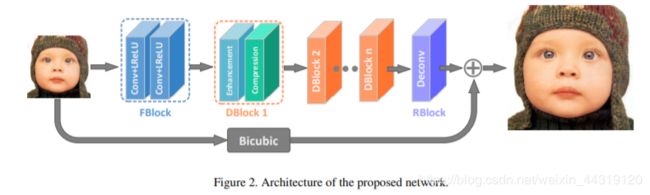

- IDN(Information Distillation Network)

consists of three blocks: a feature extraction block, multiple stacked information distillation blocks and a reconstruction block

-

(feature extraction block)composed of two convolutional layers to extract features

-

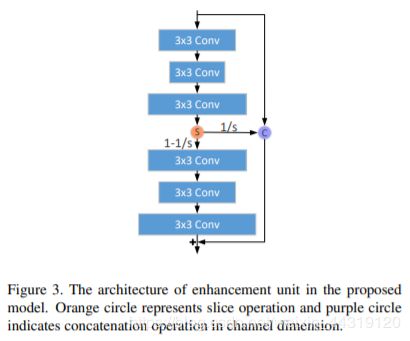

(distillation block)made up of two other blocks, an enhancement unit, and a compression unit.

enhancement unit :six convolutional layers followed by leaky ReLU

将第三个卷积层的输出进行切片,将其中的一半与block的输入进行拼接,将剩下的一半作为第四个convolutional layer的输入

The output of the concatenated component (连接组件) is added with the output of the enhancement block. In total, four enhancement blocks are utilized.compression unit :the compression unit is realized using a 1 × 1 1\times1 1×1 convolutional layer after each enhancement block.

-

( reconstruction block) a deconvolution layer with a kernel size of 17 × 17 17\times17 17×17 .

-

Loss Function: 首先利用(absolute mean error loss)绝对平均误差损失对网络进行训练,然后利用(mean square error loss)均方误差损失对网络进行微调

-

Input :The input patch size is 26 × 26 26\times26 26×26

-

The initial learning rate is set to be 1 e − 4 1e-4 1e−4 for a total of 1 0 5 10^5 105 iterations


Attention-based Networks

在前面讨论的网络设计中,所有的空间位置和信道对于超分辨率都具有统一的重要性,在某些情况下,它有助于有选择地关注给定层中的少数特性。

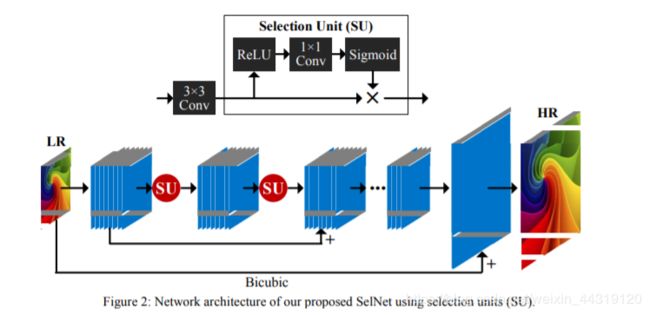

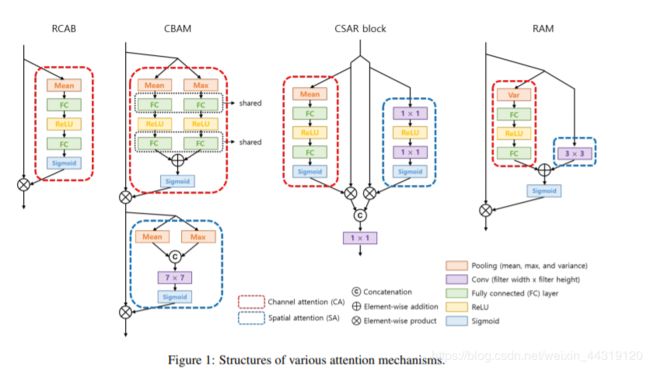

基于注意力的模型允许这种灵活性,并考虑到并非所有的特性都是超分辨率的必要条件,但它们的重要性各不相同。与深度网络相结合,最近的基于注意力的模型显示了SR的显著改进。- SelNet

a novel selection unit for the image super-resolution network

- The selection unit serves as a gate between convolutional layers, allowing only selected values from the feature maps.

选择单元由一个恒等映射和一个ReLU级联、一个 1 × 1 1\times 1 1×1卷积层和一个sigmoid层组成 - SelNet共包含22个卷积层,每个卷积层之后都添加一个选择单元。与VDSR类似,SelNet也使用了残差学习和gradient switching (a version of gradient clipping)来提高学习速度。

- DataSets:low-resolution patches of size 120 × 120 120\times 120 120×120(cropped from DIV2K dataset)

- epochs = 50 ,learning rate = 1 0 − 1 10^{-1} 10−1

- Loss Function : l 2 l_{2} l2
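Here is a NumPy sketch of the selection unit described above, applied to feature maps of shape (C, H, W). The gate branch applies ReLU, then a $1\times1$ convolution, then a sigmoid, and its output multiplies the identity branch element-wise. The weights `w` and `b` are placeholders for learned parameters.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def selection_unit(f, w, b):
    """Selection unit on feature maps f of shape (C, H, W).
    gate = sigmoid(conv1x1(relu(f))); output = f * gate (element-wise)."""
    g = np.maximum(f, 0.0)                                  # ReLU on the gate branch
    g = np.einsum("oc,chw->ohw", w, g) + b[:, None, None]   # 1x1 convolution
    return f * sigmoid(g)                                   # pass only 'selected' values
```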

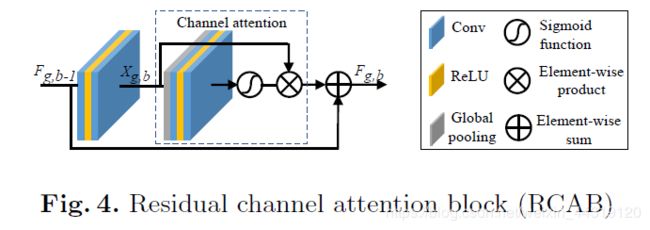

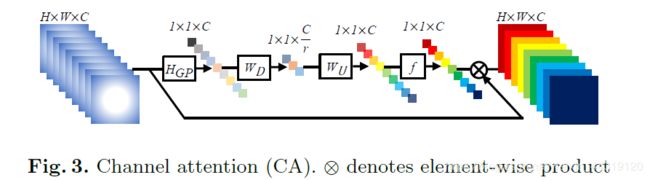

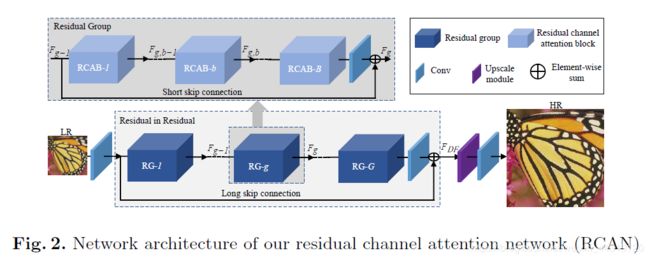

- RCAN (Residual Channel Attention Network)

- The main highlights of the architecture include:

(a) a recursive residual design, in which residual connections exist within each block of the global residual network

(b) a channel attention mechanism in each local residual block: the filter activations are collapsed from $h\times w\times c$ to a $1\times1\times c$ vector (after passing through a bottleneck), which acts as selective attention over the channel maps

- The first novelty allows information to flow from the initial layers to the final layers

The second contribution lets the network focus on the feature maps that matter more for the final task and effectively model the relationships between feature maps

- Loss function: $l_1$ loss

- The recursive residual style architecture gives better convergence for very deep networks. RCAN outperforms contemporary methods such as IRCNN, VDSR, and RDN, which shows the effectiveness of channel attention for low-level vision tasks.

- High computational complexity compared to LapSRN, MemNet, and VDSR ($\sim15$ million parameters for $\times4$ SR)
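The channel attention mechanism can be sketched in NumPy in three steps. Global average pooling collapses $h\times w\times c$ to $1\times1\times c$. A two-layer bottleneck then produces per-channel scores, and a sigmoid turns them into gates. The reduction ratio (64 to 4 channels in the check below) and the weight names are assumptions for illustration.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def channel_attention(f, w_down, b_down, w_up, b_up):
    """Channel attention on feature maps f of shape (C, H, W).
    Returns the rescaled maps and the per-channel weights."""
    z = f.mean(axis=(1, 2))                   # squeeze: (C, H, W) -> (C,)
    s = np.maximum(w_down @ z + b_down, 0.0)  # bottleneck C -> C/r, then ReLU
    a = sigmoid(w_up @ s + b_up)              # expand C/r -> C, weights in (0, 1)
    return f * a[:, None, None], a            # rescale each channel map
```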

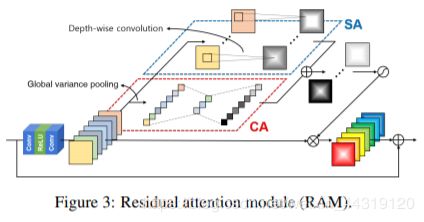

- SRRAM(Residual Attention Module for SR)

SRRAM结构类似于RCAN,这两种方法都受到了EDSR的启发

The SRRAM can be divided into three parts :

- (feature extraction) similar to SRCNN

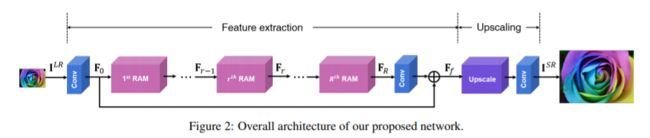

- (feature upscaling) composed of residual attention modules (RAM).

SRRAM的基本单元,由residual blocks、spatial attention和channel attention组成,用于学习inter-channel and intra-channel dependencies通道间和通道内的依赖关系 - (feature reconstruction) similar to SRCNN

- DataSets : using randomly cropped 48 × 48 48\times 48 48×48 patches from DIV2K dataset with data augmentation

- The filters are of 3 × 3 3\times 3 3×3 size with feature maps of 64

- The optimizer used is Adam

- Loss Function : l 1 l_{1} l1 loss

- learning rate = 1 0 − 4 10^{-4} 10−4

- 在最终的模型中总共使用了64个RAM块


Multiple-degradation handling networks

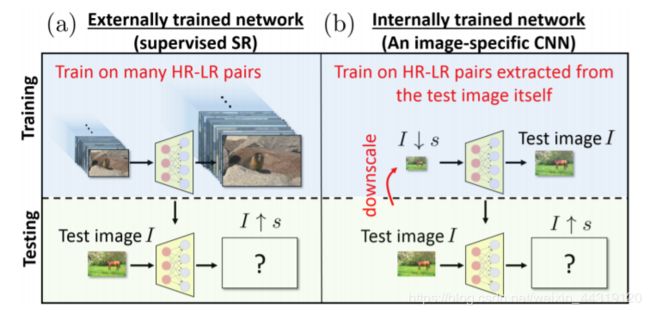

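In reality, multiple degradations can occur simultaneously.

- ZSSR (Zero-Shot Super-Resolution)

Building on classical methods that exploit internal image statistics, this approach super-resolves images with a deep neural network

- ZSSR is trained using a downsampled version of the test image

The aim is to predict the test image from an LR image generated from it

Once the network has learned the relationship between the LR test image and the test image, the same network takes the test image as input to predict the SR image

As a result, it needs no training images for a specific degradation and can learn an image-specific network on the fly during inference

- Eight convolutional layers followed by ReLU, with 64 channels

- Loss function: $l_1$ loss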
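ZSSR's self-supervised training data can be sketched in NumPy. Random crops of the test image, each given a random rotation or flip, act as HR 'fathers', and their downscaled versions act as LR 'sons'. Block-average downscaling, the crop size, and the number of pairs are assumptions. In ZSSR the son is also interpolated back to the father's size before it enters the network; that step is omitted here.

```python
import numpy as np

def area_downscale(img, s):
    """Downscale by an integer factor s with s x s block averaging (crops any remainder)."""
    h, w = img.shape
    h, w = h - h % s, w - w % s
    return img[:h, :w].reshape(h // s, s, w // s, s).mean(axis=(1, 3))

def zssr_training_pairs(test_img, s, rng, n_pairs=8, crop=32):
    """Build (LR 'son', HR 'father') pairs from the test image alone.
    Fathers are random crops of the test image with a random rotation/flip;
    sons are their s-times downscaled versions."""
    pairs = []
    h, w = test_img.shape
    for _ in range(n_pairs):
        i = rng.integers(0, h - crop + 1)
        j = rng.integers(0, w - crop + 1)
        father = np.rot90(test_img[i:i + crop, j:j + crop], rng.integers(4))
        if rng.random() < 0.5:
            father = np.fliplr(father)
        pairs.append((area_downscale(father, s), father))
    return pairs
```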

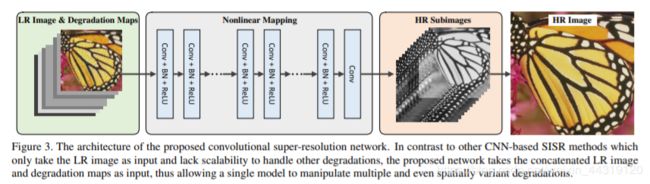

- SRMD (Super-Resolution network for Multiple Degradations)

Takes as input a concatenation of the low-resolution image and its degradation maps

- The architecture of SRMD is similar to SRCNN

(First) a cascade of convolutional layers with $3\times3$ filters extracts features, followed by a sequence of Conv, ReLU, and batch normalization layers

(Next) similar to ESPCN, convolution operations extract HR sub-images

(Finally) the HR sub-images are transformed into the final single HR output

- SRMD learns the HR image directly instead of the residual image

- A variant called SRMDNF learns from noise-free degradations

The connections from the first noise-level maps in the convolutional layers are removed

The rest of the architecture is similar to SRMD

- The authors trained individual models for each upsampling scale, in contrast to multi-scale training

- Loss function: $l_1$ loss

- Input: training patches of size $40\times40$

- Layers: the number of convolution layers is fixed to 12, with 128 feature maps in each layer

- Datasets: 5,944 images from the BSD, DIV2K, and Waterloo datasets

- Learning rate: initially $10^{-3}$, later decreased to $10^{-5}$

The learning rate is decreased based on the error change between successive epochs

- Neither SRMD nor its variant breaks the PSNR records of earlier SR networks such as EDSR, MDSR, and CMSC

However, its ability to handle multiple degradations jointly is a unique capability
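SRMD's input construction can be sketched in NumPy. Each degradation parameter (a low-dimensional code of the blur kernel plus the noise level) is stretched into a constant $H\times W$ map and concatenated with the LR image along the channel axis. The kernel-code length (15 in the check below) and the way the code is obtained are assumptions here.

```python
import numpy as np

def srmd_input(lr, kernel_code, noise_sigma):
    """Concatenate an LR image with stretched degradation maps (SRMD-style).
    lr: (C, H, W); kernel_code: (t,) low-dimensional blur-kernel code;
    noise_sigma: scalar noise level. Each of the t + 1 values becomes an H x W map."""
    _, h, w = lr.shape
    code = np.append(kernel_code, noise_sigma)                      # (t + 1,)
    maps = np.broadcast_to(code[:, None, None], (code.size, h, w))  # constant maps
    return np.concatenate([lr, maps], axis=0)                       # (C + t + 1, H, W)
```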


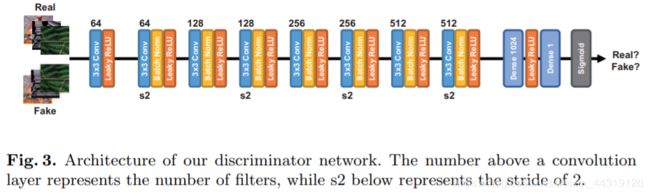

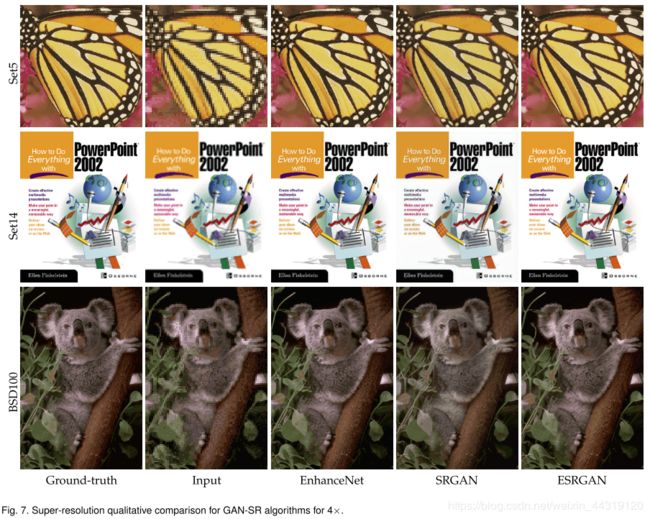

GAN Models

采用博弈论方法,其中模型由两个部分组成,即生成器和鉴别器。该生成器生成的SR图像是鉴别器无法识别是否是真实HR图像或人工超分辨输出

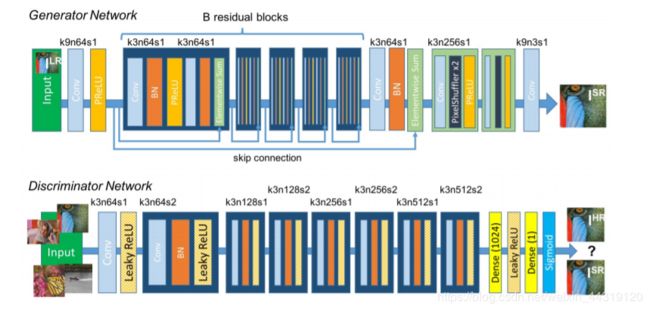

这样就产生了感知质量更好的HR图像,相应的PSNR值通常会降低(PSNR值越小表示图像失真越大)(这突出了SR文献中流行的定量测量方法没能很好的描述出生成的HR图像的感知质量)- SRGAN

SRGAN提出使用一种对抗目标函数来促使超分辨输出近乎接近自然图像。

- (highlight)a multi-task loss formulation that consists of three main parts :

(1)a MSE loss that encodes pixel-wise similarity

(2)a perceptual similarity metric in terms of a distance metric (defined over high-level image representation (e.g., deep network features))

(3)an adversarial loss

平衡了生成器和鉴别器之间的最小最大博弈(标准GAN目标) - favors outputs that are perceptually similar to the high-dimensional images

- To quantify this capability(perceptually similar), they introduce a new Mean Opinion Score (MOS) which is assigned manually by human raters indicating bad/excellent quality of each super-resolved

image. - SRGAN在感知质量指标上明显优于竞争对手

competitors:optimize direct data dependent measures (such as pixel-errors)

- EnhanceNet

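The design focuses on creating faithful texture details in the super-resolved high-resolution images.

- A key problem with conventional image quality measures such as PSNR is that they do not agree with the perceptual quality of images, which leads to over-smoothed images without sharp textures. To overcome this, EnhanceNet uses two loss terms in addition to the regular pixel-level MSE loss:

(the perceptual loss function) defined on the intermediate feature representation of a pretrained network, in the form of an $l_1$ distance

(the texture matching loss) matches the textures of the low- and high-resolution images. It is quantified as the $l_1$ loss between Gram matrices computed from deep features.

- The entire network is trained adversarially, with the SR network aiming to fool the discriminator network

- The EnhanceNet architecture is based on a fully convolutional network and the residual learning principle

- Their results show that the best PSNR is obtained with a pixel-level loss alone. However, the additional loss terms and the adversarial training mechanism produce more realistic and perceptually better outputs.

- On the downside, the proposed adversarial training may produce visible artifacts when super-resolving highly textured regions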
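The texture matching loss can be sketched in NumPy. A Gram matrix of deep features records which feature channels co-occur, regardless of where they occur. The loss is the $l_1$ distance between the Gram matrices of the SR features and the HR features. Normalizing by $H\cdot W$ and computing the loss over whole feature maps rather than patches are simplifying assumptions, and the feature extractor itself is not shown.

```python
import numpy as np

def gram_matrix(feat):
    """Gram matrix of feature maps feat (C, H, W): G = F F^T / (H * W)."""
    c, h, w = feat.shape
    f = feat.reshape(c, h * w)
    return f @ f.T / (h * w)

def texture_loss(feat_sr, feat_hr):
    """Mean l1 distance between the Gram matrices of SR and HR deep features."""
    return float(np.mean(np.abs(gram_matrix(feat_sr) - gram_matrix(feat_hr))))
```

Because spatial positions are summed out, two feature maps that differ only by a spatial permutation give (numerically) zero texture loss.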

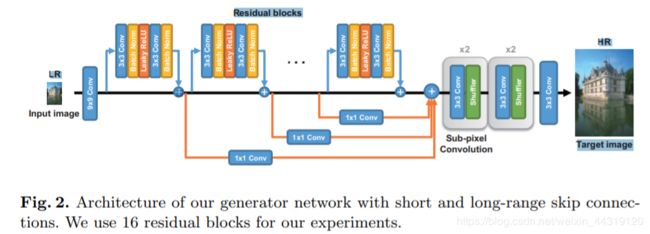

- SRFeat

Another GAN-based super-resolution algorithm, with feature discrimination

This work focuses on the realism of the input image. An additional discriminator discriminates between the features of machine-generated and real images, which helps the generator produce high-frequency structural features rather than noisy artifacts.

- The network uses a $9\times9$ convolutional layer to extract features

- It uses ResNet-like residual blocks with long-range skip connections, which include $1\times1$ convolutions

- Feature maps are upsampled by pixel shuffler layers to obtain the desired output size

- 16 residual blocks are used, with two different feature-map settings, i.e., 64 and 128

- Loss function: perceptual (adversarial) loss and pixel-level ($l_2$) loss

- Adam optimizer

- Input: the input resolution is $74\times74$, and the system outputs only $296\times296$ images

- 120k images from ImageNet are used to pre-train the generator,

followed by fine-tuning on the augmented DIV2K dataset with learning rates from $10^{-4}$ to $10^{-6}$

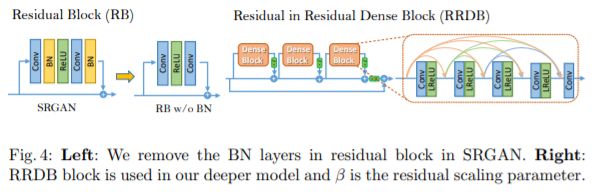

- ESRGAN (Enhanced Super-Resolution Generative Adversarial Networks)

Builds on SRGAN by removing batch normalization and incorporating dense blocks

- Each dense block's input is also connected to the output of that block, forming a residual connection over each dense block

- ESRGAN also has a global residual connection to enforce residual learning

- The authors also employ an enhanced discriminator called Relativistic GAN

- Datasets: 3,450 images from the DIV2K and Flickr2K datasets, with augmentation

- Loss function: the model is first trained with the $l_1$ loss, and the trained model is then further trained with the perceptual loss

- Input: the training patch size is set to $128\times128$

- The network depth is 23 blocks. Each block contains five convolutional layers, each with 64 feature maps.

- Compared with RCAN, the visual results are relatively better. On quantitative measures, however, RCAN performs better and ESRGAN lags behind.


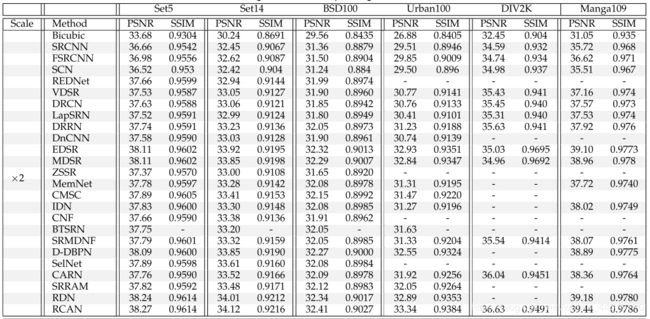

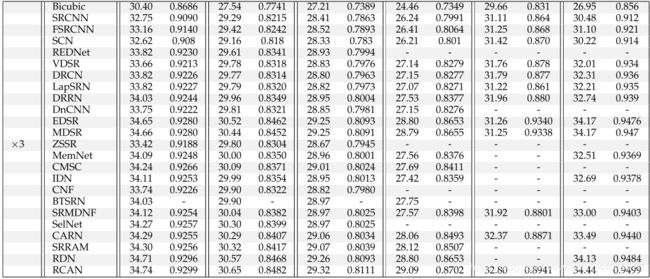

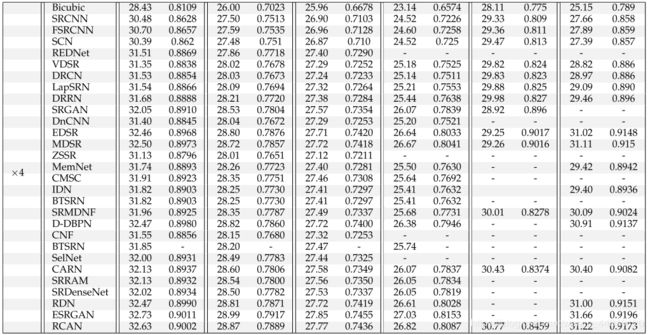

Experimental evaluation

Dataset

Set5

Set14

BSD100

Urban100

DIV2K

Manga109

Quantitative Measures

PSNR(peak signal-to-noise ratio)

SSIM(structural similarity index)
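PSNR follows directly from the mean squared error. Here is a minimal NumPy sketch, assuming images stored with peak value `max_val` (255 for 8-bit images). SSIM relies on local windowed statistics and is not sketched here.

```python
import numpy as np

def psnr(pred, target, max_val=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = np.mean((np.asarray(pred, float) - np.asarray(target, float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))
```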


Choice of network loss

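Convolutional neural networks:

- Mean absolute error ($l_1$)

- Mean squared error, MSE ($l_2$)

Generative adversarial networks (GANs):

- Perceptual loss (adversarial loss)

- Pixel-level loss (MSE)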

Network Depth

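Current CNNs keep adding convolutional layers to build deeper networks that improve both image quality and quantitative results. This has been the dominant trend in deep SR since SRCNN.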

Skip Connections

These connections fall into four main types: global, local, recursive, and dense connections.

Future directions

- Incorporation of Priors

- Objective Functions and Metrics

- Need for Unified Solutions

- Unsupervised Image SR

- Higher SR rates

- Arbitrary SR rates

- Real vs Artificial Degradation